One of the bottlenecks in getting value out of generative AI is the difficulty of turning natural language into SQL queries. Without a detailed contextual understanding of the data, natural language is often converted into SQL that doesn’t return the right answer. But thanks to its semantic layer, AtScale claims it has achieved a breakthrough in text-to-SQL processing that could pave the way for wider adoption of natural language queries in GenAI.

AtScale got its start in the advanced analytics world by providing an OLAP layer that helped accelerate SQL queries in big data environments. The company’s query engine was used to speed up throughput in some of the world’s largest data warehouse and data lake environments. As the big data market has evolved in recent years, AtScale has shifted its focus to its semantic layer, which sits between the business intelligence (BI) tool and the data warehouse.

The semantic layer has emerged as a critical component of advanced analytic systems, particularly as the size, user base, and importance of automated decision-making systems have increased. By defining the key metrics that a business will use, the semantic layer ensures that a diverse user community working with large and varied data sets can still get the right answers.

The importance of a semantic layer is not always obvious. For instance, a question such as “What were our total sales last month by region?” may seem, at first glance, relatively simple and straightforward. However, without concrete definitions of what each of those terms means in the context of the organization’s specific data, it’s actually quite easy to get wrong answers. “Sales” might be gross or net of returns, “last month” might mean the previous calendar month or the trailing 30 days, and “region” might refer to where the customer lives or where the store is located.

Semantic layers have become even more critical in the advanced analytics space since the GenAI revolution began in 2022. While large language models (LLMs) like OpenAI’s GPT-4 can generate decent SQL from natural language input, the odds that the generated SQL will produce accurate answers are slim without the specific business context provided by a semantic layer.

AtScale sought to quantify the difference in accuracy between LLM-generated SQL queries with and without the semantic layer. It set up a test that ran Google’s Gemini 1.5 Pro LLM against the TPC-DS dataset, and it shared the results in its new white paper, titled “Enabling Natural Language Prompting with AtScale Semantic Layer and Generative AI.”

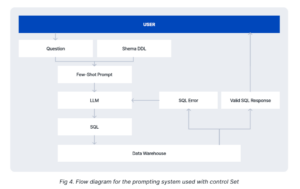

For the first test, without the semantic layer, AtScale configured the system to use few-shot prompting against the source schema of the TPC-DS dataset. The system was configured to return “unsure” if the LLM deemed the question unsolvable. If a query generated a SQL error, it was retried three times before being deemed unsolvable. If the query ran without an error, the result was returned and then manually checked for correctness. This approach achieved an overall accuracy rate of 20%, the company said.
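The control setup can be sketched as a small loop. This is a minimal Python sketch under stated assumptions, not AtScale’s harness: `generate_sql` (which would wrap the few-shot LLM call) and `execute` are hypothetical callables, the `"unsure"` sentinel and `SQLExecutionError` type are illustrative, “retried three times” is read as one initial attempt plus three retries, and passing the previous error message back to the generator is an assumption the article does not confirm.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

UNSURE = "unsure"  # hypothetical sentinel the LLM returns for unsolvable questions
MAX_RETRIES = 3    # the article says failing queries were retried three times


class SQLExecutionError(Exception):
    """Hypothetical error type that `execute` raises when the SQL fails."""


@dataclass
class Outcome:
    sql: Optional[str]
    rows: Optional[Sequence[tuple]]
    status: str  # "answered" (still needs a manual check) or "unsolvable"


def answer_question(
    question: str,
    generate_sql: Callable[[str, Optional[str]], str],
    execute: Callable[[str], Sequence[tuple]],
) -> Outcome:
    """Control loop: one initial attempt plus up to MAX_RETRIES retries."""
    error: Optional[str] = None
    for _ in range(1 + MAX_RETRIES):
        # generate_sql would build the few-shot prompt from the raw DDL.
        # Passing the last error message back is an assumption.
        sql = generate_sql(question, error)
        if sql.strip().lower() == UNSURE:
            return Outcome(None, None, "unsolvable")
        try:
            rows = execute(sql)
        except SQLExecutionError as exc:
            error = str(exc)
            continue
        return Outcome(sql, rows, "answered")
    return Outcome(None, None, "unsolvable")
```

Anything returned with status `"answered"` would still go to a person for the manual correctness check the article mentions; the loop only filters out questions the model declines or that never produce runnable SQL.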

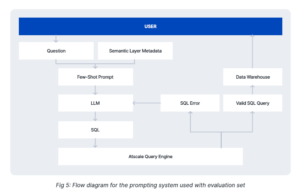

The second system used the same model and dataset but was configured differently. There were two key differences, according to Jeff Curran, the data science team lead at AtScale.

“First, instead of the DDL being provided in the prompt, metadata about the semantic model’s logical query table is provided. It is important to specify here that only metadata from the Semantic Layer is provided to the LLM; no data from the underlying warehouse tables is sent to the LLM,” Curran wrote in the white paper.

“Second, in this system, the generated queries are submitted to the AtScale Query Engine instead of the data warehouse. The final change is that AtScale determines the validity of the SQL syntax for the query made against it, as opposed to the data warehouse,” he wrote.
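To make the first difference concrete, here is a minimal Python sketch of a prompt assembled only from semantic-model metadata. The `LogicalColumn` record, the `sales_model` table name, and the prompt wording are all hypothetical; the article does not describe AtScale’s actual prompt format. The point is only that column names, roles, and descriptions go to the LLM while no warehouse rows are read or sent.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LogicalColumn:
    """Hypothetical metadata for one column of a semantic model's logical table."""
    name: str
    kind: str          # "dimension" or "measure" (illustrative categories)
    description: str


def build_prompt(table: str, columns: List[LogicalColumn], question: str) -> str:
    """Build a prompt from metadata only; no warehouse data is touched here."""
    lines = [f"You write SQL against the logical table {table}.", "Columns:"]
    for col in columns:
        lines.append(f"- {col.name} ({col.kind}): {col.description}")
    lines.append(f"Question: {question}")
    lines.append("SQL:")
    return "\n".join(lines)


# Hypothetical logical table: joins and metric definitions live in the model.
SALES_MODEL = [
    LogicalColumn("brand", "dimension", "Product brand"),
    LogicalColumn("sale_year", "dimension", "Calendar year of the sale"),
    LogicalColumn("net_sales", "measure", "Sum of net sales, defined once in the model"),
]

prompt = build_prompt(
    "sales_model",
    SALES_MODEL,
    "What was the sum of net sales for each product brand in 2002?",
)
```

The generated SQL would then go to the semantic engine rather than the warehouse, so the engine, not the warehouse, decides whether the syntax is valid.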

When AtScale added its semantic layer and OLAP engine to the mix, the accuracy rate of the generated SQL queries jumped to 92.5%, according to the paper. What’s more, the semantic layer-based system got 100% of the easier questions correct; it only returned erroneous data on the most complex of the 40 questions.

This level of accuracy makes GenAI-powered natural language query (NLQ) systems useful in business settings, the company says.

“Our integration of AtScale’s Semantic Layer and Query Engine with LLMs marks a significant milestone in NLP and data analytics,” David Mariani, the CTO and co-founder of AtScale, said in a press release. “By feeding the LLM relevant business context, we can achieve a level of accuracy previously unattainable, making Text-to-SQL solutions trusted in everyday business use.”


“For example, a question like ‘What was the sum of net sales for each product brand in the year 2002?’ requires SQL that defines the ‘sum of net sales’ KPI, as well as joins between the underlying web_sales, date_dim, and item_dim tables,” Curran wrote. “This level of high schema and question complexity questions was unsolvable for the control NLQ system, but returned the correct result when using the AtScale backed NLQ system.”
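The contrast in Curran’s example can be reproduced at toy scale with Python’s built-in `sqlite3` module. In this sketch a SQL view stands in for the semantic layer’s logical table; that is an illustrative substitution, not how AtScale implements its engine. It uses the standard TPC-DS names `web_sales`, `date_dim`, and `item` (the quote calls the last one `item_dim`), assumes “net sales” maps to `ws_net_paid`, and fills the tables with a few made-up rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE date_dim (d_date_sk INTEGER PRIMARY KEY, d_year INTEGER);
CREATE TABLE item (i_item_sk INTEGER PRIMARY KEY, i_brand TEXT);
CREATE TABLE web_sales (ws_sold_date_sk INTEGER, ws_item_sk INTEGER, ws_net_paid REAL);
-- Made-up rows for illustration only.
INSERT INTO date_dim VALUES (1, 2001), (2, 2002);
INSERT INTO item VALUES (10, 'brand_a'), (20, 'brand_b');
INSERT INTO web_sales VALUES (2, 10, 100.0), (2, 10, 50.0), (2, 20, 30.0), (1, 20, 999.0);
-- Stand-in for a semantic model: joins and the metric mapping defined once.
CREATE VIEW sales_model AS
SELECT d.d_year AS sale_year, i.i_brand AS brand, ws.ws_net_paid AS net_sales
FROM web_sales AS ws
JOIN date_dim AS d ON ws.ws_sold_date_sk = d.d_date_sk
JOIN item AS i ON ws.ws_item_sk = i.i_item_sk;
""")

# Against the raw schema, the generated SQL must carry both joins and the metric.
RAW_SQL = """
SELECT i.i_brand, SUM(ws.ws_net_paid)
FROM web_sales AS ws
JOIN date_dim AS d ON ws.ws_sold_date_sk = d.d_date_sk
JOIN item AS i ON ws.ws_item_sk = i.i_item_sk
WHERE d.d_year = 2002
GROUP BY i.i_brand
ORDER BY i.i_brand
"""

# Against the logical table, it reduces to a filter and a group-by.
MODEL_SQL = """
SELECT brand, SUM(net_sales) FROM sales_model
WHERE sale_year = 2002
GROUP BY brand
ORDER BY brand
"""

raw_rows = conn.execute(RAW_SQL).fetchall()
model_rows = conn.execute(MODEL_SQL).fetchall()
print(raw_rows)  # [('brand_a', 150.0), ('brand_b', 30.0)]
```

Both queries return the same rows; the difference is how much schema knowledge the generated SQL has to carry, which is where the control system failed on questions like this one.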

While prompt engineering and retrieval-augmented generation (RAG) can give LLMs some context and help steer them toward the right answer, there are questions about just how far users can trust LLMs. AtScale notes that the LLM sometimes hallucinated data that didn’t exist, or ignored instructions to use certain filters.

“Even though the column names were provided, there were cases where the LLM referenced column names that did not exist in the table,” Curran wrote. “The name generated by the LLM was always a simplified version of a column from the provided metadata.”

With a few tweaks and some fine-tuning, the accuracy rate could be pushed even higher, Curran wrote.

“We believe that a set of additional training data designed to prepare an LLM for work with the AtScale Query Engine could greatly improve performance on even higher complexity question sets,” he wrote. “In conclusion, the AtScale Semantic Layer provides a viable solution to accomplishing basic NLQ tasks.”
