Databricks Workflows is the cornerstone of the Databricks Data Intelligence Platform, serving as the orchestration engine that powers critical data and AI workloads for thousands of organizations worldwide. Recognizing this, Databricks continues to invest in advancing Workflows to ensure it meets the evolving needs of modern data engineering and AI projects.

This past summer, we held our biggest Data + AI Summit yet, where we unveiled several groundbreaking features and enhancements to Databricks Workflows. These updates include new data-driven triggers, AI-assisted workflow creation, and enhanced SQL integration, all aimed at improving reliability, scalability, and ease of use. We also introduced infrastructure-as-code tooling such as PyDABs and Terraform for automated management, and announced the general availability of serverless compute for workflows, ensuring seamless, scalable orchestration. Looking ahead, 2024 will bring further advancements such as expanded control flow options, advanced triggering mechanisms, and the evolution of Workflows into LakeFlow Jobs, part of the new unified LakeFlow solution.

In this blog, we’ll revisit these announcements, explore what’s next for Workflows, and show you how to start using these capabilities today.

The Latest Enhancements to Databricks Workflows

The past year has been transformative for Databricks Workflows, with over 70 new features released to elevate your orchestration capabilities. Below are some of the key highlights:

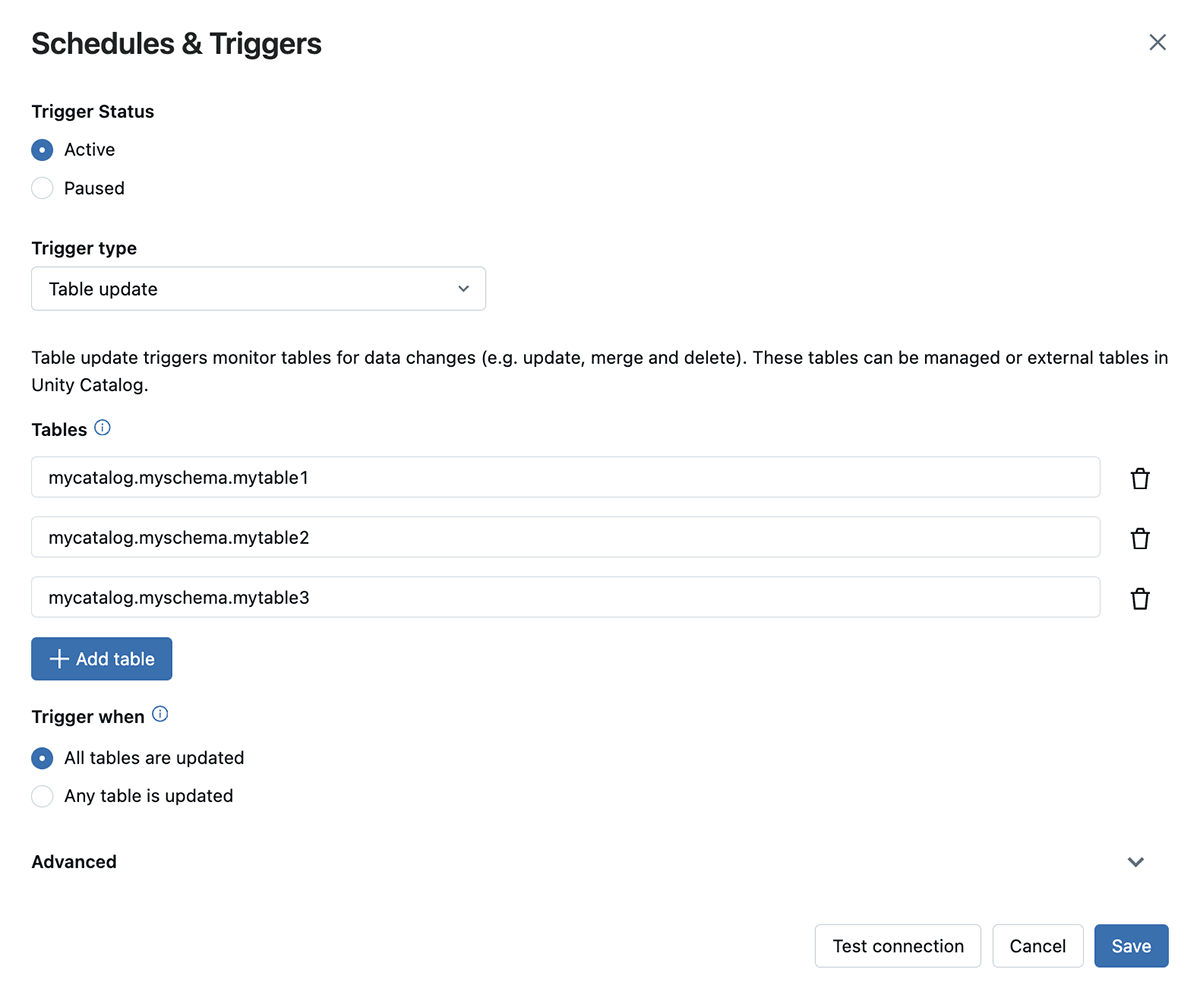

Data-driven triggers: Precision when you need it

- Table and file arrival triggers: Traditional time-based scheduling is not enough to ensure data freshness while avoiding unnecessary runs. Our data-driven triggers ensure that your jobs start exactly when new data becomes available. We’ll check for you whether tables have been updated (in preview) or new files have arrived (generally available), and then spin up compute and run your workloads when you need them. As a result, jobs consume resources only when necessary, optimizing cost, performance, and data freshness. For file arrival triggers specifically, we’ve also removed previous limits on the number of files Workflows can monitor.

- Periodic triggers: Periodic triggers let you schedule jobs to run at regular intervals, such as daily or weekly, without having to worry about cron schedules.
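To make the difference from a fixed schedule concrete, here is a minimal Python sketch of the idea behind these triggers. It is a toy model under our own assumptions, not how Workflows implements them: the hypothetical `FileArrivalTrigger` class remembers which files it has already seen and signals a run only when new ones appear, and the hypothetical `next_periodic_run` helper computes the next run of a periodic trigger from an interval and a unit (the `HOURS`/`DAYS`/`WEEKS` names are illustrative).

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class FileArrivalTrigger:
    """Toy file-arrival trigger (hypothetical; not the Databricks implementation)."""

    seen: set[str] = field(default_factory=set)

    def poll(self, listing: list[str]) -> list[str]:
        """Return files not seen on earlier polls; an empty list means 'do not run'."""
        new_files = sorted(set(listing) - self.seen)
        self.seen.update(new_files)
        return new_files


# Illustrative unit names for a periodic trigger (assumption).
_UNITS = {"HOURS": timedelta(hours=1), "DAYS": timedelta(days=1), "WEEKS": timedelta(weeks=1)}


def next_periodic_run(last_run: datetime, interval: int, unit: str) -> datetime:
    """Next run time for a trigger that fires every `interval` `unit`s."""
    if interval < 1:
        raise ValueError("interval must be a positive integer")
    return last_run + interval * _UNITS[unit]


trigger = FileArrivalTrigger()
print(trigger.poll(["a.csv", "b.csv"]))           # ['a.csv', 'b.csv'] -> run the job
print(trigger.poll(["a.csv", "b.csv"]))           # [] -> nothing new, no compute needed
print(trigger.poll(["a.csv", "b.csv", "c.csv"]))  # ['c.csv'] -> run again
print(next_periodic_run(datetime(2024, 6, 1, 8, 0), 1, "WEEKS"))  # 2024-06-08 08:00:00
```

The second poll is the interesting case: a time-based schedule would have started compute anyway, while the data-driven check simply does nothing.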

AI-assisted workflow creation: Intelligence at every step

- AI-powered cron syntax generation: Scheduling jobs can be daunting, especially when it involves complex cron syntax. The Databricks Assistant now simplifies this by suggesting the correct cron syntax from plain-language input, making scheduling accessible to users at all levels.

- Integrated AI assistant for debugging: Databricks Assistant can now be used directly within Workflows (in preview). It provides in-context help when errors occur during job execution. If you run into issues like a failed notebook or an incorrectly configured job, Databricks Assistant offers specific, actionable advice to help you quickly identify and fix the problem.
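Databricks job schedules are written in Quartz cron syntax, which puts a seconds field first and uses `?` in one of the two day fields, so it differs from classic five-field Unix cron. If you want to sanity-check an expression the Assistant suggests before saving it, a small helper like the hypothetical `describe_quartz` below splits it into named fields and rejects shapes Quartz does not accept. It is a sketch that only checks the field count and the `?` rule, not a full Quartz validator.

```python
QUARTZ_FIELDS = ("seconds", "minutes", "hours", "day_of_month", "month", "day_of_week", "year")


def describe_quartz(expr: str) -> dict[str, str]:
    """Split a Quartz cron expression into named fields (6 fields, or 7 with a year)."""
    parts = expr.split()
    if len(parts) not in (6, 7):
        raise ValueError(f"expected 6 or 7 fields, got {len(parts)}: {expr!r}")
    fields = dict(zip(QUARTZ_FIELDS, parts))
    # Quartz requires '?' in exactly one of day-of-month and day-of-week.
    if (fields["day_of_month"] == "?") == (fields["day_of_week"] == "?"):
        raise ValueError("use '?' in exactly one of day_of_month / day_of_week")
    return fields


# Plain-language requests and Quartz expressions that express them.
examples = {
    "every day at 06:00": "0 0 6 * * ?",
    "weekdays at 09:30": "0 30 9 ? * MON-FRI",
    "first day of every month at midnight": "0 0 0 1 * ?",
}
for request, expr in examples.items():
    print(request, "->", describe_quartz(expr))
```

A five-field Unix expression such as `30 9 * * 1` fails the field-count check, which is a common mistake when copying schedules from other schedulers.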

Workflow Management at Scale

- 1,000 tasks per job: As data workflows grow more complex, orchestration that can scale becomes critical. Databricks Workflows now supports up to 1,000 tasks within a single job, enabling the orchestration of even the most intricate data pipelines.

- Filter by favorite jobs and tags: To streamline workflow management, users can now filter their jobs by favorites and by the tags applied to those jobs. This makes it easy to quickly find the jobs you need, e.g. your team’s jobs tagged with “Financial analysts”.

- Easier selection of task values: The UI now offers enhanced auto-completion for task values, making it easier to pass information between tasks without manual input errors.

- Descriptions: Descriptions allow for better documentation of workflows, so teams can quickly understand and debug jobs.

- Improved cluster defaults: We’ve improved the defaults for job clusters to increase compatibility and reduce costs when moving from interactive development to scheduled execution.
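With up to 1,000 tasks in a job, it helps to reason about the task graph before deploying it. Below is a minimal Python sketch that checks a list of task definitions and returns one valid execution order using Kahn’s topological sort. The `task_key`/`depends_on` shape loosely mirrors how Databricks job definitions express task dependencies, but treat the exact field layout, the `execution_order` helper, and the sample task names as our assumptions. Tasks with no dependency between them can run in parallel; the returned list is just one valid serialization.

```python
from collections import deque

MAX_TASKS_PER_JOB = 1_000  # per-job limit quoted in this post


def execution_order(tasks: list[dict]) -> list[str]:
    """Validate a job's task graph and return one valid execution order (Kahn's algorithm)."""
    if len(tasks) > MAX_TASKS_PER_JOB:
        raise ValueError(f"{len(tasks)} tasks exceeds the {MAX_TASKS_PER_JOB}-task limit")
    keys = [t["task_key"] for t in tasks]
    if len(set(keys)) != len(keys):
        raise ValueError("task_key values must be unique")

    indegree = {k: 0 for k in keys}   # number of upstream tasks not yet ordered
    children = {k: [] for k in keys}  # downstream tasks of each task
    for t in tasks:
        for dep in t.get("depends_on", []):
            parent = dep["task_key"]
            if parent not in indegree:
                raise ValueError(f"{t['task_key']!r} depends on unknown task {parent!r}")
            children[parent].append(t["task_key"])
            indegree[t["task_key"]] += 1

    ready = deque(k for k in keys if indegree[k] == 0)
    order = []
    while ready:
        key = ready.popleft()
        order.append(key)
        for child in children[key]:
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)

    # Any task left unordered is part of (or downstream of) a cycle.
    if len(order) != len(keys):
        raise ValueError("dependency cycle detected")
    return order


tasks = [
    {"task_key": "ingest"},
    {"task_key": "clean", "depends_on": [{"task_key": "ingest"}]},
    {"task_key": "features", "depends_on": [{"task_key": "clean"}]},
    {"task_key": "report", "depends_on": [{"task_key": "clean"}]},
]
print(execution_order(tasks))  # ['ingest', 'clean', 'features', 'report']
```

Here `features` and `report` both depend only on `clean`, so an orchestrator is free to run them at the same time.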

Operational Efficiency: Optimize for performance and cost

- Cost and performance optimization: The new timeline view within Workflows and query insights provide detailed information about the performance of your jobs, allowing you to identify bottlenecks and optimize your workflows for both speed and cost-effectiveness.

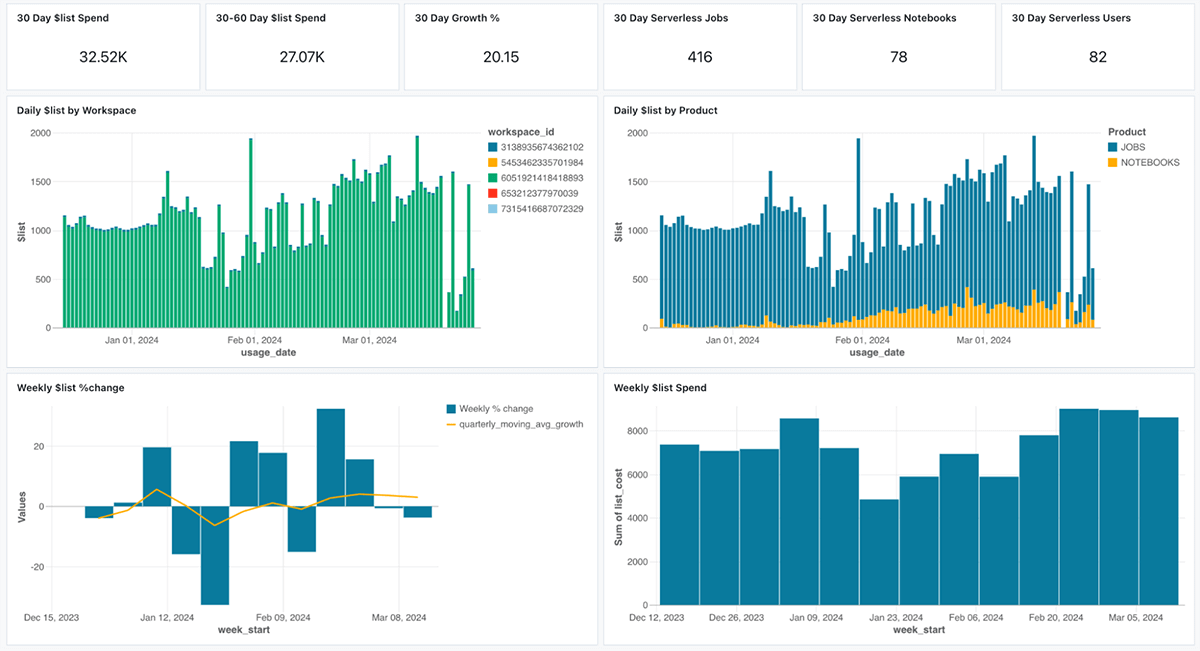

- Cost monitoring: Understanding the cost implications of your workflows is crucial for managing budgets and optimizing resource usage. With the introduction of system tables for Workflows, you can now monitor the costs associated with each job over time, analyze trends, and identify opportunities for savings. We’ve also built dashboards on top of the system tables that you can import into your workspace and easily customize. They help you answer questions such as “Which jobs cost the most last month?” or “Which team is projected to exceed their budget?”. You can also set up budgets and alerts on top of them.
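The dashboards themselves run SQL over the billing system tables; the Python sketch below only illustrates the aggregation behind the two example questions. The row shape (`job_id`, `usage_date`, `dbus`, `price_per_dbu`) is a hypothetical, flattened stand-in for usage records joined to list prices, and the linear run-rate projection is our own simplification, not the logic of the shipped dashboards or budget alerts.

```python
from collections import defaultdict
from datetime import date


def top_jobs_by_cost(rows: list[dict], start: date, end: date, n: int = 3) -> list[tuple]:
    """Sum estimated cost per job for usage dates in [start, end) and return the n largest."""
    totals = defaultdict(float)
    for row in rows:
        # Rows without a job_id (e.g. interactive usage) are not attributed to any job.
        if row["job_id"] is not None and start <= row["usage_date"] < end:
            totals[row["job_id"]] += row["dbus"] * row["price_per_dbu"]
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:n]


def projected_over_budget(spend_to_date: float, days_elapsed: int,
                          days_in_month: int, budget: float) -> bool:
    """Naive linear run-rate projection: will month-end spend exceed the budget?"""
    projected = spend_to_date / days_elapsed * days_in_month
    return projected > budget


# Hypothetical usage rows (flattened; not the real system-table schema).
rows = [
    {"job_id": "101", "usage_date": date(2024, 5, 3), "dbus": 10.0, "price_per_dbu": 0.5},
    {"job_id": "101", "usage_date": date(2024, 5, 20), "dbus": 4.0, "price_per_dbu": 0.5},
    {"job_id": "202", "usage_date": date(2024, 5, 10), "dbus": 20.0, "price_per_dbu": 0.25},
    {"job_id": "303", "usage_date": date(2024, 4, 30), "dbus": 100.0, "price_per_dbu": 0.5},
    {"job_id": None, "usage_date": date(2024, 5, 5), "dbus": 50.0, "price_per_dbu": 0.5},
]
print(top_jobs_by_cost(rows, date(2024, 5, 1), date(2024, 6, 1), n=2))
# [('101', 7.0), ('202', 5.0)]
print(projected_over_budget(400.0, 10, 30, 1000.0))  # True (projects to 1200)
```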

Enhanced SQL Integration: More Power to SQL Users

- Task values in SQL: SQL practitioners can now use the results of one SQL task in subsequent tasks. This enables dynamic, adaptive workflows in which the output of one query directly influences the logic of the next, streamlining complex data transformations.

- Multi-statement SQL support: By supporting multiple SQL statements within a single task, Databricks Workflows offers greater flexibility in building SQL-driven pipelines. This allows for more sophisticated data processing without the need to switch contexts or tools.
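Conceptually, passing a value from one task to the next means a downstream task’s parameters contain a reference that is replaced with an upstream value at run time. The sketch below assumes the `{{tasks.<task_key>.values.<name>}}` form of Databricks dynamic value references (check the documentation for the exact syntax supported in SQL tasks); the `resolve_task_values` helper, the task names, and the in-memory dictionary of values are hypothetical stand-ins for what Workflows resolves for you.

```python
import re

# Assumed reference form: {{tasks.<task_key>.values.<name>}}
_TASK_VALUE_REF = re.compile(r"\{\{\s*tasks\.([\w-]+)\.values\.([\w-]+)\s*\}\}")


def resolve_task_values(template: str, task_values: dict[str, dict]) -> str:
    """Replace task-value references in `template` with values set by upstream tasks."""

    def substitute(match: re.Match) -> str:
        task_key, name = match.group(1), match.group(2)
        try:
            return str(task_values[task_key][name])
        except KeyError:
            raise KeyError(f"task {task_key!r} did not set a value named {name!r}") from None

    return _TASK_VALUE_REF.sub(substitute, template)


# Values an upstream task might have produced (hypothetical).
upstream = {"find_latest_date": {"max_date": "2024-06-30"}}
query = "SELECT * FROM sales WHERE sale_date = '{{tasks.find_latest_date.values.max_date}}'"
print(resolve_task_values(query, upstream))
# SELECT * FROM sales WHERE sale_date = '2024-06-30'
```

String substitution is used here only to show the data flow; when a value ends up inside SQL, parameter markers are the safer choice.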

Serverless compute for Workflows, DLT, Notebooks

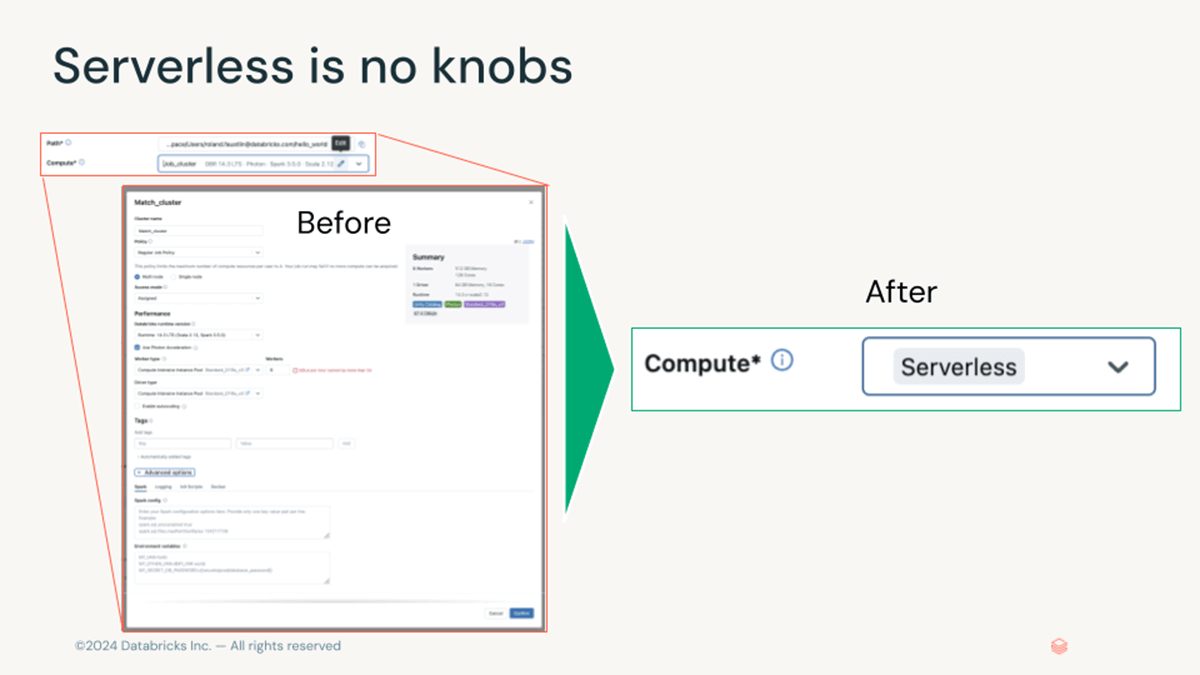

- Serverless compute for Workflows: We were thrilled to announce the general availability of serverless compute for Notebooks, Workflows, and Delta Live Tables at DAIS. It has been rolled out to most Databricks regions, bringing fast startup, rapid scaling, and infrastructure-free management to your workflows. Serverless compute removes the need for complex configuration and is significantly easier to manage than classic clusters.

What’s Next for Databricks Workflows?

Looking ahead, 2024 promises to be another year of significant advancements for Databricks Workflows. Here’s a sneak peek at some of the exciting features and enhancements on the horizon:

Streamlining Workflow Management

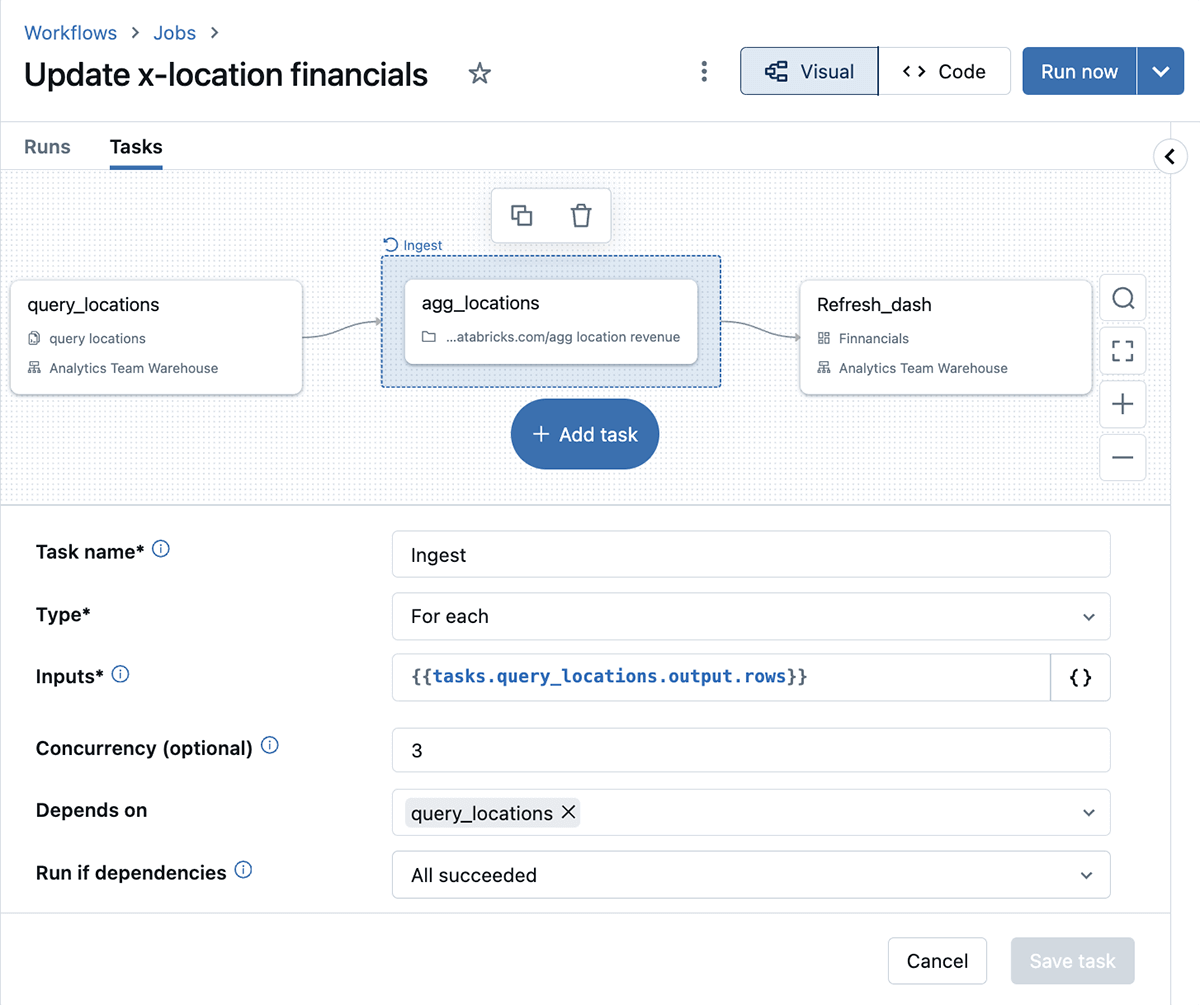

The upcoming enhancements to Databricks Workflows focus on improving clarity and efficiency when managing complex workflows. They aim to make it easier to organize and execute sophisticated data pipelines by introducing new ways to structure, automate, and reuse job tasks. The overall goal is to simplify the orchestration of complex data processes so that users can manage their workflows more effectively as they scale.

Serverless Compute Enhancements

We’ll be introducing compatibility checks that make it easier to identify workloads that can readily benefit from serverless compute. We’ll also use the Databricks Assistant to help users transition to serverless compute.

LakeFlow: A unified, intelligent solution for data engineering

During the summit we also introduced LakeFlow, the unified data engineering solution consisting of LakeFlow Connect (ingestion), Pipelines (transformation), and Jobs (orchestration). All the orchestration enhancements discussed above will become part of this new solution as we evolve Workflows into LakeFlow Jobs, the orchestration component of LakeFlow.

Try the Latest Workflows Features Now!

We’re excited for you to experience these powerful new features in Databricks Workflows. To get started: