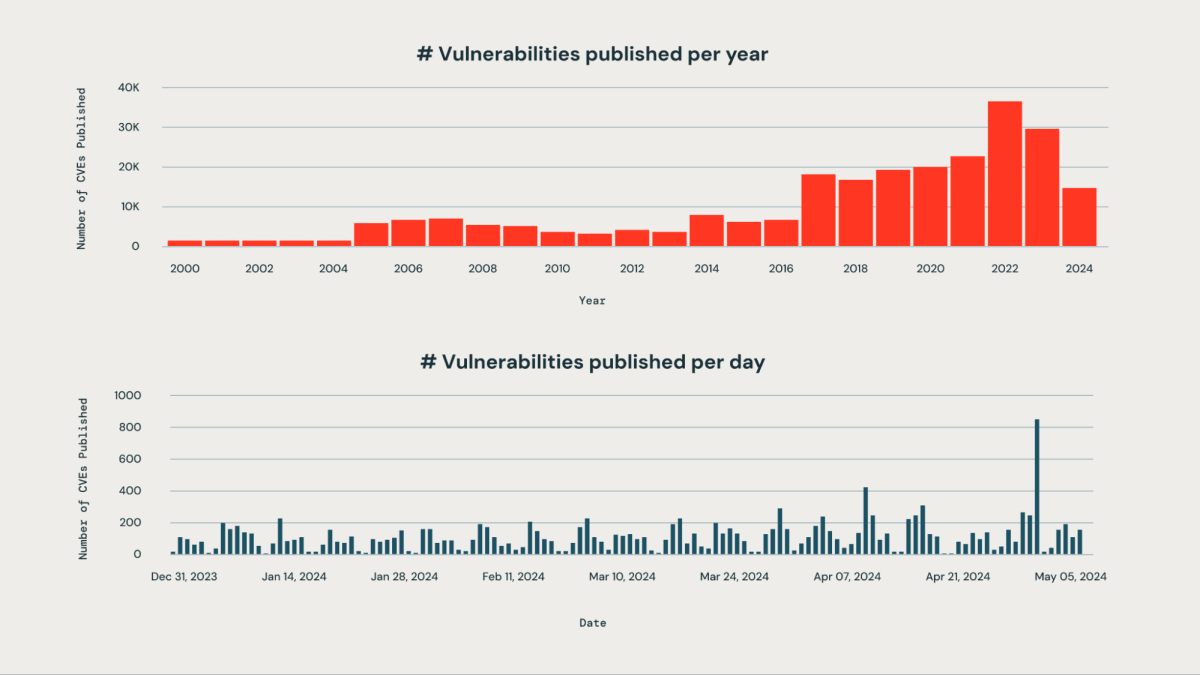

Every organization is challenged with correctly prioritizing new vulnerabilities that affect the large set of third-party libraries it uses. The sheer volume of vulnerabilities published daily makes manual monitoring impractical and resource-intensive.

At Databricks, one of our company objectives is to secure our Data Intelligence Platform. Our engineering team has designed an AI-based system that proactively detects, classifies, and prioritizes vulnerabilities as soon as they’re disclosed, based on their severity, potential impact, and relevance to Databricks infrastructure. This approach lets us effectively mitigate the risk of critical vulnerabilities going unnoticed. Our system achieves an accuracy rate of approximately 85% in identifying business-critical vulnerabilities. By leveraging our prioritization algorithm, the security team has reduced its manual workload by over 95%. The team can now focus on the 5% of vulnerabilities that require immediate action, rather than sifting through hundreds of issues.

In the next few sections, we explore how our AI-driven approach helps identify, categorize, and rank vulnerabilities.

How Our System Continuously Flags Vulnerabilities

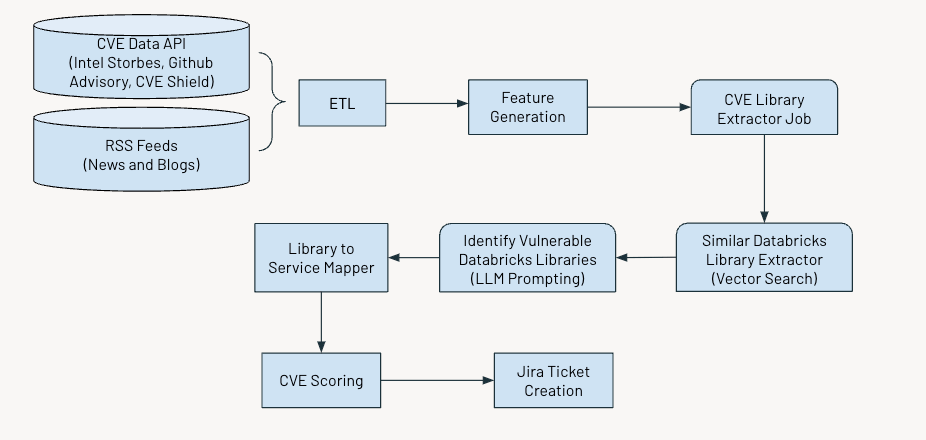

The system runs on a regular schedule to identify and flag critical vulnerabilities. The process involves several key steps:

- Gathering and processing data

- Generating relevant features

- Using AI to extract information about Common Vulnerabilities and Exposures (CVEs)

- Assessing and scoring vulnerabilities based on their severity

- Generating Jira tickets for further action

The accompanying figure shows the overall workflow.

Data Ingestion

We ingest Common Vulnerabilities and Exposures (CVE) data, which identifies publicly disclosed cybersecurity vulnerabilities, from multiple sources such as:

- Intel Strobes API: This provides information and details on the affected software packages and versions.

- GitHub Advisory Database: Generally, when vulnerabilities are not recorded as CVEs, they appear as GitHub advisories.

- CVE Shield: This provides trending vulnerability data from recent social media feeds.

Additionally, we gather RSS feeds from sources like securityaffairs and hackernews, along with other news articles and blogs that mention cybersecurity vulnerabilities.

Feature Generation

Next, we extract the following features for each CVE:

- Description

- Age of CVE

- CVSS score (Common Vulnerability Scoring System)

- EPSS score (Exploit Prediction Scoring System)

- Impact score

- Availability of exploit

- Availability of patch

- Trending standing on X

- Number of advisories
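To make the feature list concrete, here is a minimal sketch of how one CVE's features might be held in a single record. The class name, field names, and value ranges are assumptions made for illustration; the post does not describe the actual schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class CveFeatures:
    """One row of features for a single CVE (hypothetical schema)."""
    cve_id: str
    description: str
    published: date
    cvss: Optional[float]      # assumed 0-10 scale; None if not yet scored
    epss: Optional[float]      # probability in [0, 1]; None if not yet scored
    impact: Optional[float]    # scale is an assumption; None if unavailable
    exploit_available: bool
    patch_available: bool
    trending_on_x: bool
    advisory_count: int

    def age_days(self, today: date) -> int:
        """Age of the CVE in days, measured from its publication date."""
        return (today - self.published).days
```

Keeping the scores optional reflects that a freshly disclosed CVE may not have a CVSS or EPSS value yet.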

While the CVSS and EPSS scores provide valuable insight into the severity and exploitability of vulnerabilities, they may not be sufficient for prioritization in certain contexts.

The CVSS score does not fully capture an organization’s specific context or environment. A vulnerability with a high CVSS score might not be as critical if the affected component is not in use or is adequately mitigated by other security measures.

Similarly, the EPSS score estimates the probability of exploitation but does not account for an organization’s specific infrastructure or security posture. A high EPSS score might indicate a vulnerability that is likely to be exploited in general, yet it can still be irrelevant if the affected systems are not part of the organization’s internet-facing attack surface.

Relying solely on CVSS and EPSS scores can lead to a deluge of high-priority alerts, which makes managing and prioritizing them challenging.

Scoring Vulnerabilities

We developed an ensemble of scores based on the above features (severity score, component score, and topic score) to prioritize CVEs. The details of each are given below.

Severity Score

This score quantifies the importance of a CVE to the broader community. We calculate it as a weighted average of the CVSS, EPSS, and Impact scores. The data from CVE Shield and other news feeds lets us gauge how the security community and our peer companies perceive the impact of any given CVE. A high value of this score corresponds to CVEs deemed critical to the community and to our organization.

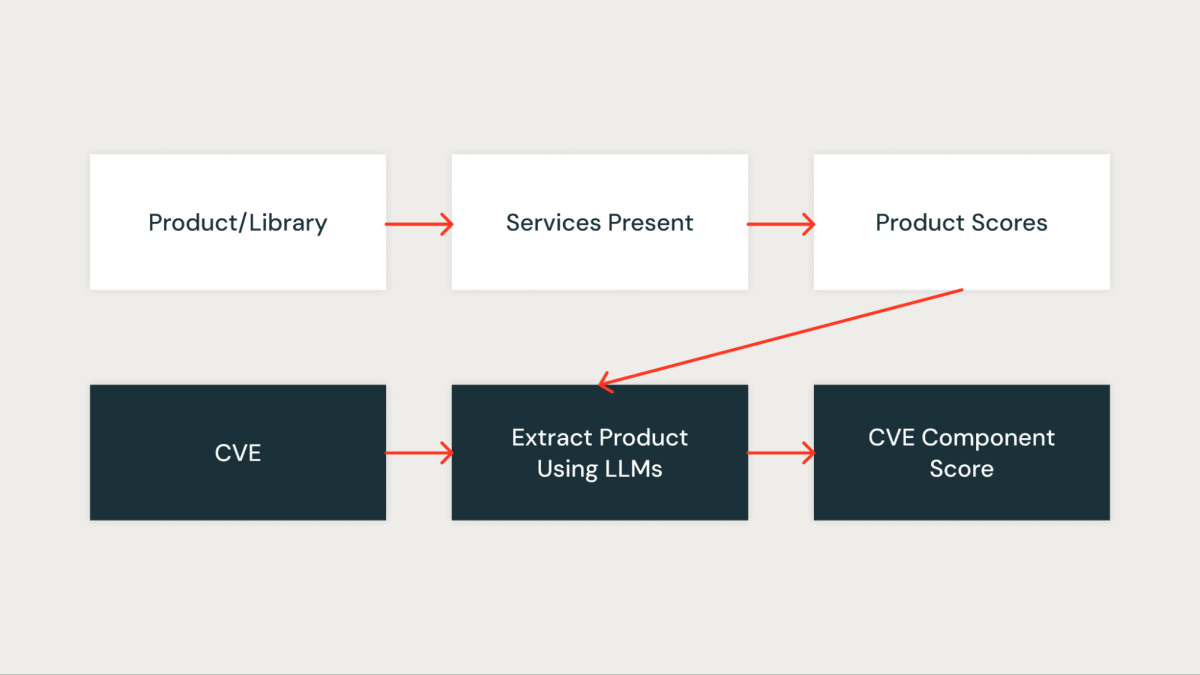
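As a rough illustration of the weighted average described in this section, the sketch below rescales each input to the range 0 to 1 before combining them. The weights and the assumption that the impact score sits on a 0-10 scale are placeholders; the post does not state the values actually used.

```python
def severity_score(cvss, epss, impact, weights=(0.4, 0.4, 0.2)):
    """Weighted average of CVSS, EPSS, and impact, each rescaled to [0, 1].

    Assumptions: CVSS and impact are on a 0-10 scale, EPSS is a probability,
    and the default weights are illustrative only.
    """
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    components = (cvss / 10.0, epss, impact / 10.0)
    return sum(w * c for w, c in zip(weights, components)) / total_weight
```

Dividing by the sum of the weights keeps the result in the 0 to 1 range even when the weights are not normalized.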

Component Score

This score quantitatively measures how important the CVE is to our organization. Every library in the organization is first assigned a score based on the services impacted by the library. A library that is present in critical services gets a higher score, while a library that is present only in non-critical services gets a lower score.

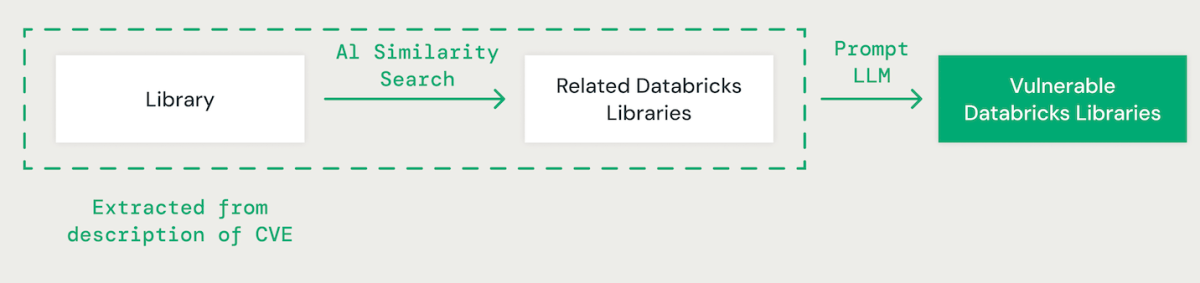
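One simple way to turn service criticality into a library score is sketched below. The tier names, their numeric values, and the choice to take the maximum over services are all assumptions for illustration; the post only states that libraries in critical services score higher.

```python
# Hypothetical criticality tiers and their numeric values.
CRITICALITY = {"critical": 1.0, "standard": 0.5, "non_critical": 0.1}


def library_score(services, service_tier):
    """Score a library by the most critical service that uses it."""
    if not services:
        return 0.0
    return max(CRITICALITY[service_tier[s]] for s in services)


def component_score(matched_libraries, library_services, service_tier):
    """Component score of a CVE: the highest score among its matched libraries.

    Taking the maximum is an assumption; libraries missing from the
    inventory contribute a score of 0.
    """
    return max(
        (library_score(library_services.get(lib, []), service_tier)
         for lib in matched_libraries),
        default=0.0,
    )
```

Using the maximum means a library that appears in even one critical service is treated as critical, which errs on the side of caution.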

AI-Powered Library Matching

Using few-shot prompting with a large language model (LLM), we extract the relevant library for each CVE from its description. We then use an AI-based vector similarity approach to match the identified library with existing Databricks libraries. This involves converting each word in the library name into an embedding for comparison.
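The matching step can be sketched with plain cosine similarity. The example below is not the embedding model used in the system: it swaps in character trigram counts as a stand-in vector so that it runs with the standard library alone. The function names and the 0.5 threshold are assumptions.

```python
import math
import re
from collections import Counter


def ngram_vector(name, n=3):
    """Character n-gram counts of a normalized name (stand-in for an embedding)."""
    cleaned = re.sub(r"[^a-z0-9]", "", name.lower())
    padded = f"#{cleaned}#"
    return Counter(padded[i:i + n] for i in range(max(len(padded) - n + 1, 1)))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def best_match(cve_library, inventory, threshold=0.5):
    """Return (name, score) of the closest inventory library, or (None, score)."""
    query = ngram_vector(cve_library)
    scored = [(cosine(query, ngram_vector(candidate)), candidate)
              for candidate in inventory]
    score, name = max(scored, default=(0.0, None))
    return (name, score) if score >= threshold else (None, score)
```

Surface-level vectors like these handle spelling variants such as "scikit-learn" and "scikitlearn", but they share few trigrams with an alias like "sklearn". That gap is one reason to prefer learned embeddings or an LLM for the final decision.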

When matching CVE libraries with Databricks libraries, it is essential to understand the dependencies between different libraries. For example, while a vulnerability in IPython may not directly affect CPython, an issue in CPython could impact IPython. Variations in library naming conventions, such as “scikit-learn”, “scikitlearn”, “sklearn” or “pysklearn”, must also be considered when identifying and matching libraries. Finally, version-specific vulnerabilities need to be accounted for. For instance, OpenSSL versions 1.0.1 to 1.0.1f might be vulnerable, whereas later versions, such as 1.0.1g to 1.1.1, may include patches that address these security risks.
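The version-range check from the OpenSSL example can be sketched as a small comparison over parsed version tuples. The parser below only covers the `major.minor.patch` plus optional letter suffix scheme used in that example; it is an assumption that this is enough for illustration, and other versioning schemes would need their own handling.

```python
import re

# Matches versions such as "1.0.1" or "1.0.1f" (letter suffix optional).
_VERSION = re.compile(r"^(\d+)\.(\d+)\.(\d+)([a-z]*)$")


def parse_version(text):
    """Parse an OpenSSL-style version into a tuple that sorts correctly."""
    match = _VERSION.match(text.strip().lower())
    if not match:
        raise ValueError(f"unsupported version string: {text!r}")
    major, minor, patch, letter = match.groups()
    return (int(major), int(minor), int(patch), letter)


def in_vulnerable_range(version, first, last):
    """True if first <= version <= last under tuple ordering."""
    return parse_version(first) <= parse_version(version) <= parse_version(last)
```

Because an empty suffix sorts before any letter, 1.0.1 is treated as earlier than 1.0.1a, and 1.0.1g falls outside the range 1.0.1 to 1.0.1f.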

LLMs enhance the library matching process by leveraging advanced reasoning and industry expertise. We fine-tuned various models using a ground truth dataset to improve accuracy in identifying vulnerable dependent packages.

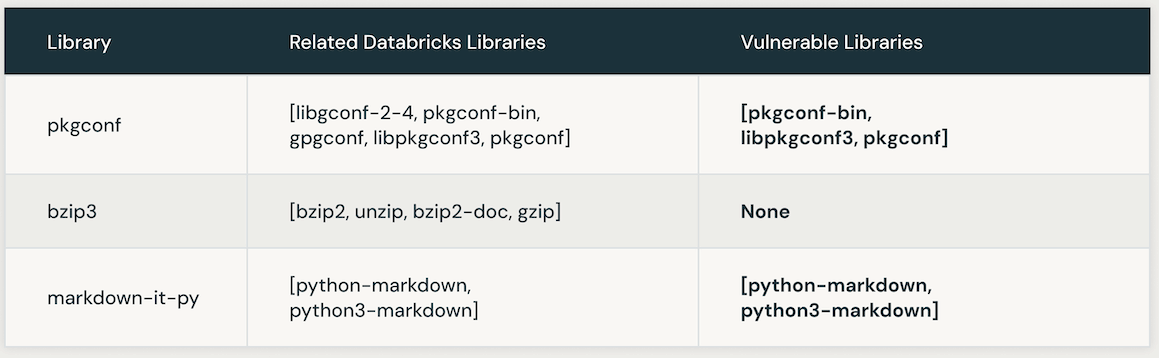

The following table presents examples of vulnerable Databricks libraries linked to a specific CVE. First, AI similarity search is used to pinpoint libraries closely associated with the CVE library. Then, an LLM is used to determine whether those similar libraries within Databricks are vulnerable.

Automating LLM Instruction Optimization for Accuracy and Efficiency

Manually optimizing the instructions in an LLM prompt can be laborious and error-prone. A more efficient approach uses an iterative method to automatically produce multiple sets of instructions and optimize them for performance on a ground truth dataset. This method reduces human error and leads to more effective and precise refinement of the instructions over time.

We applied this automated instruction optimization technique to improve our own LLM-based solution. Initially, we provided an instruction and the desired output format to the LLM for dataset labeling. The results were then compared against a ground truth dataset, which contained human-labeled data provided by our product security team.

Next, we used a second LLM, known as an “Instruction Tuner”. We fed it the initial prompt and the errors identified in the ground truth evaluation. This LLM iteratively generated a series of improved prompts. After reviewing the options, we selected the best-performing prompt to optimize accuracy.
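The loop described here can be sketched as follows. The `label` and `tune` callables stand in for the labeling LLM and the Instruction Tuner LLM; both are hypothetical interfaces, and the greedy keep-the-best selection is a simplification of the manual review step described above.

```python
from typing import Callable, List, Sequence, Tuple

Example = Tuple[str, str]          # (CVE description, expected library)
Error = Tuple[str, str, str]       # (description, expected, predicted)


def evaluate(prompt: str, examples: Sequence[Example],
             label: Callable[[str, str], str]) -> Tuple[float, List[Error]]:
    """Label every example with the prompt and collect the mismatches."""
    if not examples:
        raise ValueError("ground truth dataset is empty")
    errors = []
    for text, expected in examples:
        predicted = label(prompt, text)
        if predicted != expected:
            errors.append((text, expected, predicted))
    return 1 - len(errors) / len(examples), errors


def optimize_prompt(initial_prompt: str, examples: Sequence[Example],
                    label: Callable[[str, str], str],
                    tune: Callable[[str, List[Error]], str],
                    rounds: int = 3) -> Tuple[str, float]:
    """Ask the tuner for a revised prompt each round; keep it only if accuracy improves."""
    best_prompt = initial_prompt
    best_accuracy, errors = evaluate(best_prompt, examples, label)
    for _ in range(rounds):
        if not errors:
            break
        candidate = tune(best_prompt, errors)
        accuracy, candidate_errors = evaluate(candidate, examples, label)
        if accuracy > best_accuracy:
            best_prompt, best_accuracy, errors = candidate, accuracy, candidate_errors
    return best_prompt, best_accuracy
```

Passing the concrete errors to the tuner, rather than only the accuracy number, gives it something specific to correct in the next revision.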

After applying the LLM instruction optimization technique, we developed the following refined prompt:

Choosing the proper LLM

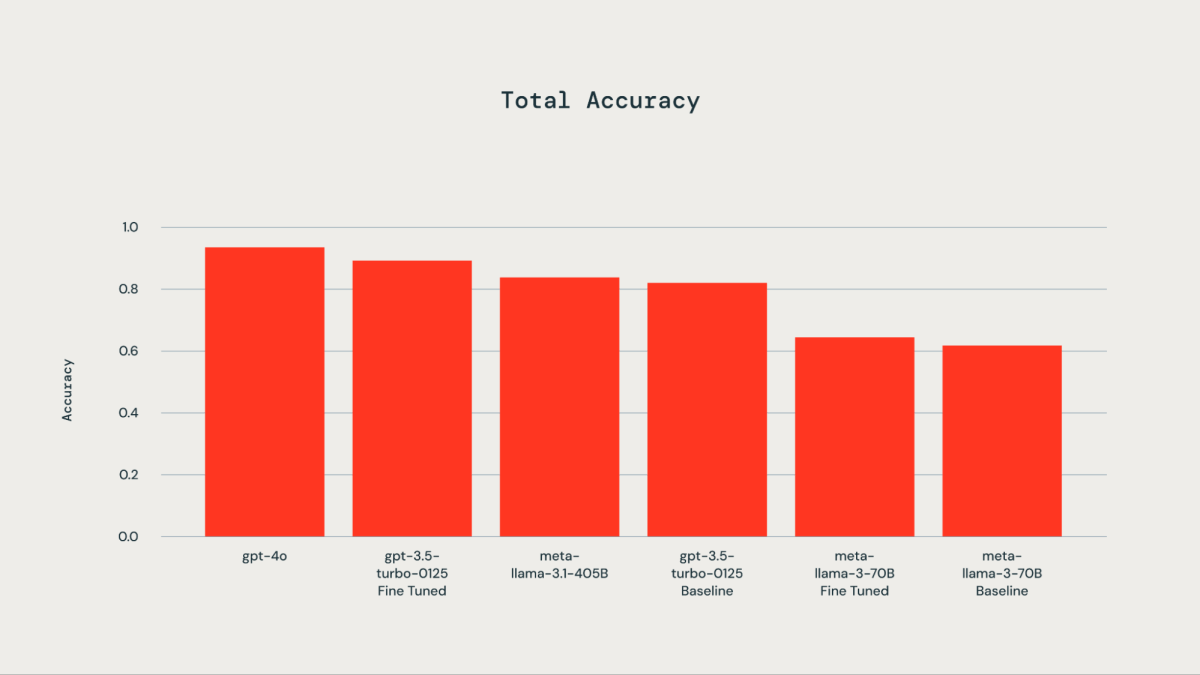

A ground truth dataset of 300 manually labeled examples was used for fine-tuning. The LLMs tested included gpt-4o, gpt-3.5-Turbo, llama3-70B, and llama-3.1-405b-instruct. As the accompanying plot shows, fine-tuning on the ground truth dataset improved the accuracy of gpt-3.5-turbo-0125 compared to the base model. Fine-tuning llama3-70B using the Databricks fine-tuning API led to only marginal improvement over the base model. The accuracy of the fine-tuned gpt-3.5-turbo-0125 model was comparable to or slightly lower than that of gpt-4o. Similarly, the accuracy of llama-3.1-405b-instruct was comparable to, though slightly lower than, that of the fine-tuned gpt-3.5-turbo-0125 model.

Once the Databricks libraries in a CVE are identified, the corresponding score of the library (library_score as described above) is assigned as the component score of the CVE.

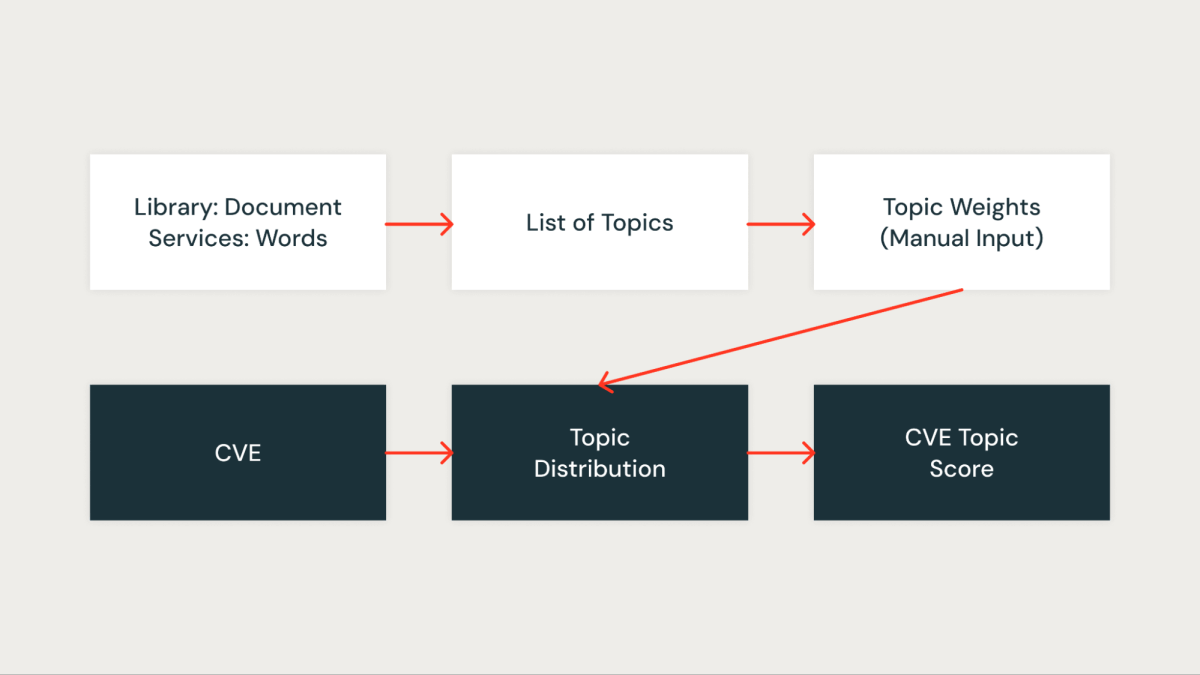

Topic Score

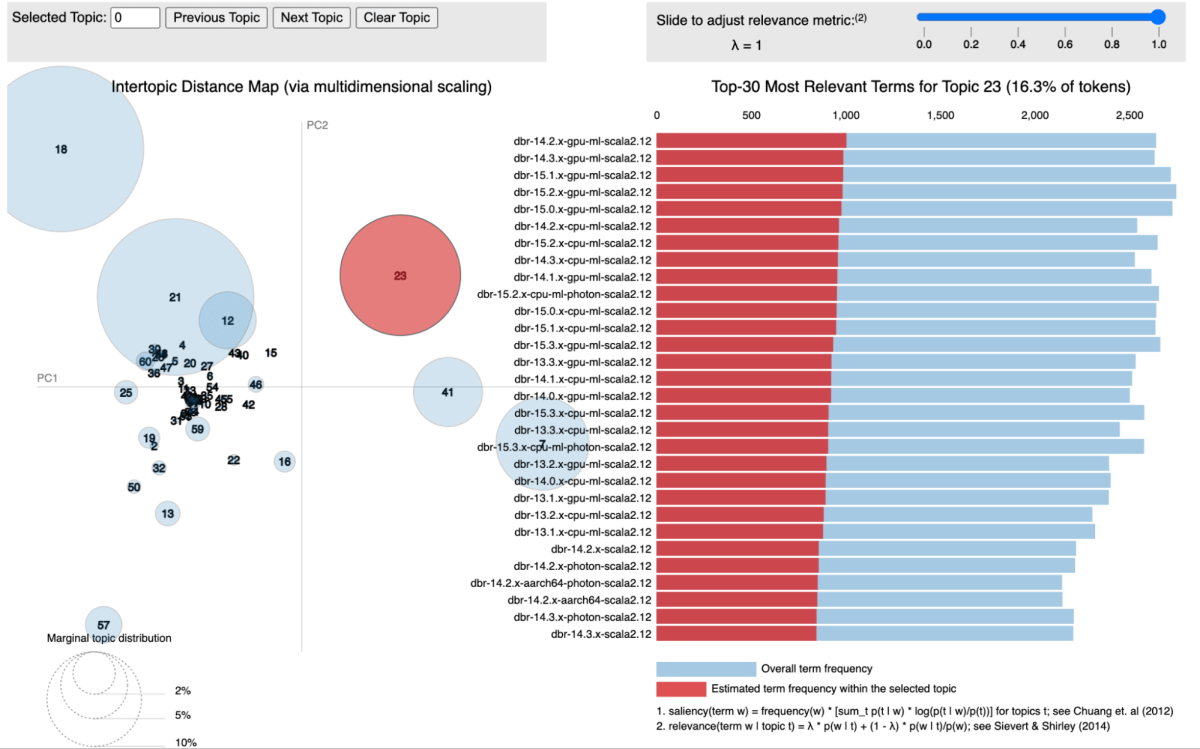

In our approach, we used topic modeling, specifically Latent Dirichlet Allocation (LDA), to cluster libraries according to the services they are associated with. Each library is treated as a document, with the services it appears in acting as the words within that document. This method lets us group libraries into topics that effectively represent shared service contexts.
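The "library as document, services as words" framing amounts to building a document-term count matrix, which is the input an LDA implementation expects. The sketch below covers only that preparation step using the standard library; the function name and the input mapping are assumptions.

```python
from collections import Counter


def build_document_term_matrix(library_services):
    """Turn {library: [service, ...]} into (libraries, vocabulary, count matrix).

    Each row is one library (a "document"); each column is one service
    (a "word"); each cell counts how often the service appears for that library.
    """
    vocabulary = sorted({s for services in library_services.values() for s in services})
    column = {service: i for i, service in enumerate(vocabulary)}
    libraries = sorted(library_services)
    matrix = []
    for library in libraries:
        row = [0] * len(vocabulary)
        for service, count in Counter(library_services[library]).items():
            row[column[service]] = count
        matrix.append(row)
    return libraries, vocabulary, matrix
```

The resulting matrix could then be passed to an LDA implementation, for example scikit-learn's `LatentDirichletAllocation`, to obtain a topic distribution per library.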

The accompanying figure shows a specific topic in which all the Databricks Runtime (DBR) services are clustered together, visualized using pyLDAvis.

For each identified topic, we assign a score that reflects its importance within our infrastructure. This scoring lets us prioritize vulnerabilities more accurately by associating each CVE with the topic score of the relevant libraries. For example, if a library is present in multiple critical services, the topic score for that library will be higher, and so a CVE affecting it will receive a higher priority.
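Assuming each library already has a topic distribution from the LDA step, the lookup from topic to score could look like the sketch below. The per-topic importance values, the use of the dominant topic, and the maximum over matched libraries are all illustrative assumptions.

```python
def library_topic_score(topic_weights, topic_importance):
    """Importance of the library's dominant topic (the one with the largest weight)."""
    dominant = max(range(len(topic_weights)), key=topic_weights.__getitem__)
    return topic_importance[dominant]


def cve_topic_score(matched_libraries, library_topics, topic_importance):
    """Topic score of a CVE: the highest topic score among its matched libraries."""
    return max(
        (library_topic_score(library_topics[lib], topic_importance)
         for lib in matched_libraries if lib in library_topics),
        default=0.0,
    )
```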

Impact and Results

We used a range of aggregation techniques to consolidate the scores described above. We tested our model on three months’ worth of CVE data, during which it achieved a true positive rate of approximately 85% in identifying CVEs relevant to our business. The model successfully pinpointed critical vulnerabilities on the day they were published (day 0) and also highlighted vulnerabilities warranting security investigation.
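The post does not say which aggregation techniques were used. As one hypothetical option, the sketch below combines the three scores with a weighted average and keeps only CVEs above a cutoff, ranked from highest to lowest. The weights and the threshold are placeholders.

```python
def combined_score(severity, component, topic, weights=(0.4, 0.4, 0.2)):
    """Weighted average of the three scores, each assumed to lie in [0, 1]."""
    scores = (severity, component, topic)
    return sum(w * s for w, s in zip(weights, scores)) / sum(weights)


def triage(cves, threshold=0.7):
    """Return the IDs whose combined score meets the threshold, highest first.

    cves maps an ID to a (severity, component, topic) tuple.
    """
    ranked = sorted(
        ((combined_score(*scores), cve_id) for cve_id, scores in cves.items()),
        reverse=True,
    )
    return [cve_id for score, cve_id in ranked if score >= threshold]
```

With this kind of combination, a CVE that is severe in general but touches no library in use scores low on the component and topic terms and drops below the cutoff, which is the behavior CVSS and EPSS alone cannot provide.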

To gauge the false negatives produced by the model, we looked for vulnerabilities that were flagged by external sources or manually identified by our security team but that the model failed to detect. This allowed us to calculate the percentage of missed critical vulnerabilities. Notably, there were no false negatives in the back-tested data. However, we recognize the need for ongoing monitoring and evaluation in this area.
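A back-test of this kind reduces to set comparisons between what the model flagged and what reviewers considered relevant. The sketch below reports both precision and recall, since the post does not define exactly how its headline rate was computed; the function and key names are assumptions.

```python
def back_test(flagged, relevant):
    """Compare model-flagged CVE IDs against the IDs reviewers marked relevant.

    Both arguments are sets of CVE IDs. False negatives are relevant CVEs
    that the model did not flag.
    """
    true_positives = flagged & relevant
    return {
        "precision": len(true_positives) / len(flagged) if flagged else 0.0,
        "recall": len(true_positives) / len(relevant) if relevant else 0.0,
        "false_negatives": relevant - flagged,
    }
```

An empty `false_negatives` set corresponds to the "no missed critical vulnerabilities" result described above, while precision shows how much of the flagged set was worth the security team's time.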

Our system has effectively streamlined our workflow, transforming the vulnerability management process into a more efficient and focused security triage step. It has significantly reduced the risk of overlooking a CVE with direct customer impact and has cut the manual workload by over 95%. This efficiency gain lets our security team concentrate on a select few vulnerabilities, rather than sifting through the hundreds published daily.

Acknowledgments

This work is a collaboration between the Data Science team and the Product Security team. Thank you to Mrityunjay Gautam, Aaron Kobayashi, Anurag Srivastava, and Ricardo Ungureanu from the Product Security team, and to Anirudh Kondaveeti, Benjamin Ebanks, Jeremy Stober, and Chenda Zhang from the Security Data Science team.