Introduction: Why Building an AI Model Matters Today

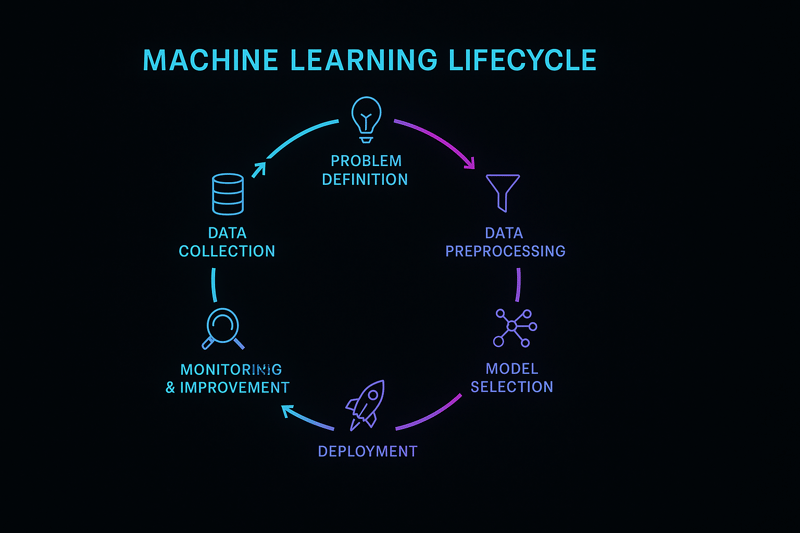

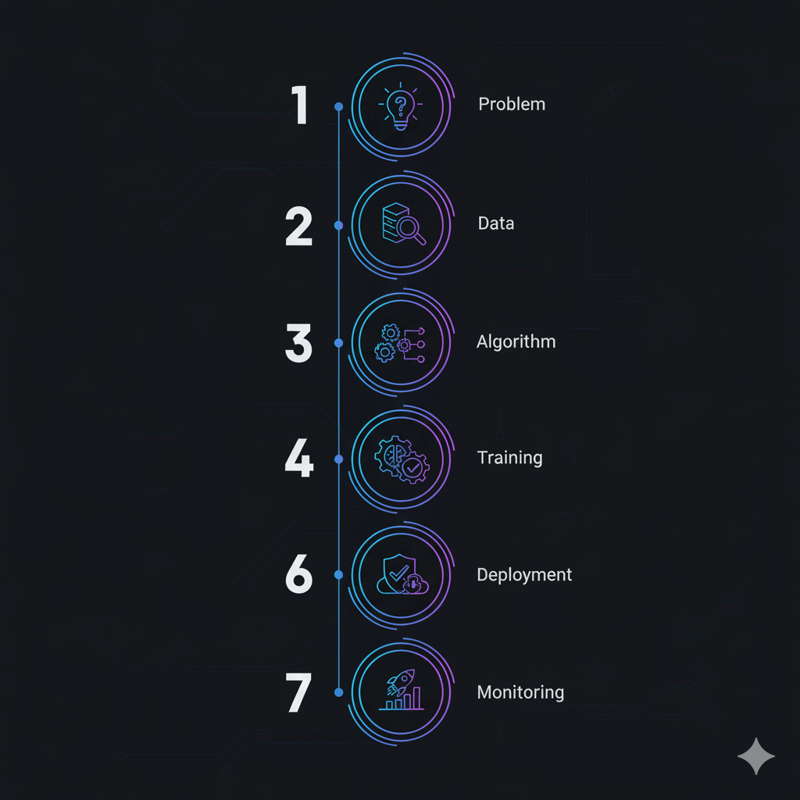

Artificial intelligence has moved from being a buzzword to a critical driver of business innovation, personal productivity, and societal transformation. Companies across sectors are eager to leverage AI for automation, real-time decision-making, personalized services, advanced cybersecurity, content generation, and predictive analytics. Yet many teams still struggle to move from concept to a functioning AI model. Building an AI model involves more than coding; it requires a systematic process that spans problem definition, data acquisition, algorithm selection, training and evaluation, deployment, and ongoing maintenance. This guide shows you, step by step, how to build an AI model with depth, originality, and an eye toward emerging trends and ethical responsibility.

Quick Digest: What You’ll Learn

- What is an AI model? You’ll learn how AI differs from machine learning and why generative AI is reshaping innovation.

- Step-by-step instructions: From defining the problem and gathering data to selecting the right algorithms, training and evaluating your model, deploying it to production, and managing it over time.

- Expert insights: Each section includes a bullet list of expert tips and stats drawn from research, industry leaders, and case studies to give you deeper context.

- Creative examples: We’ll illustrate complex concepts with clear examples, from training a chatbot to implementing edge AI on a factory floor.

Quick Summary: How do you build an AI model?

Building an AI model involves defining a clear problem, collecting and preparing data, choosing appropriate algorithms and frameworks, training and tuning the model, evaluating its performance, deploying it responsibly, and continuously monitoring and improving it. Along the way, teams should prioritize data quality, ethical considerations, and resource efficiency while leveraging platforms like Clarifai for compute orchestration and model inference.

Defining Your Problem: The Foundation of AI Success

How do you identify the right problem for AI?

The first step in building an AI model is to clarify the problem you want to solve. This involves understanding the business context, user needs, and specific objectives. For instance, are you trying to predict customer churn, classify images, or generate marketing copy? Without a well-defined problem, even the most advanced algorithms will struggle to deliver value.

Start by gathering input from stakeholders, including business leaders, domain experts, and end users. Formulate a clear question and set SMART goals: specific, measurable, attainable, relevant, and time-bound. Also determine the type of AI task (classification, regression, clustering, reinforcement, or generation) and identify any regulatory requirements (such as healthcare privacy rules or financial compliance laws).

Expert Insights

- Failure to plan hurts outcomes: Many AI projects fail because teams jump into model development without a cohesive strategy. Establish a clear objective and align it with business metrics before gathering data.

- Consider domain constraints: A problem in healthcare might require HIPAA compliance and explainability, while a finance project may demand robust security and fairness auditing.

- Collaborate with stakeholders: Involving domain experts early helps ensure the problem is framed correctly and relevant data is available.

Creative Example: Predicting Equipment Failure

Imagine a manufacturing company that wants to reduce downtime by predicting when machines will fail. The problem is not “apply AI,” but “forecast potential breakdowns in the next 24 hours based on sensor data, historical logs, and environmental conditions.” The team defines a classification task: predict “fail” or “not fail.” SMART goals might include reducing unplanned downtime by 30% within six months and achieving 90% predictive accuracy. Clarifai’s platform can help coordinate the data pipeline and deploy the model in a local runner on the factory floor, ensuring low latency and data privacy.

Gathering and Preparing Data: Building the Right Dataset

Why does data quality matter more than algorithms?

Data is the fuel of AI. No matter how advanced your algorithm is, poor data quality will lead to poor predictions. Your dataset should be relevant, representative, clean, and well-labeled. The data collection phase includes sourcing data, handling privacy concerns, and preprocessing.

- Identify data sources: Internal databases, public datasets, sensors, social media, web scraping, and user input can all provide valuable information.

- Ensure data diversity: Aim for diversity to reduce bias. Include samples from different demographics, geographies, and use cases.

- Clean and preprocess: Handle missing values, remove duplicates, correct errors, and normalize numerical features. Label data accurately (supervised tasks) or assign clusters (unsupervised tasks).

- Split data: Divide your dataset into training, validation, and test sets to evaluate performance fairly.

- Privacy and compliance: Use anonymization, pseudonymization, or synthetic data when dealing with sensitive information. Techniques like federated learning enable model training across distributed devices without transmitting raw data.
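
The cleaning and splitting steps in the list above can be sketched in plain Python. This is a minimal illustration rather than a production pipeline: the record fields (`temp`, `label`), the 70/15/15 split ratios, and the helper names are all assumptions made for the example.

```python
import random

def clean(records):
    """Drop rows with missing values and exact duplicates (first occurrence wins)."""
    seen = set()
    cleaned = []
    for row in records:
        if any(value is None for value in row.values()):
            continue  # skip rows with missing values
        key = tuple(sorted(row.items()))
        if key in seen:
            continue  # skip exact duplicates
        seen.add(key)
        cleaned.append(row)
    return cleaned

def min_max_normalize(records, field):
    """Rescale one numeric field to the range [0, 1]."""
    values = [row[field] for row in records]
    low, high = min(values), max(values)
    span = (high - low) or 1.0  # avoid division by zero for a constant column
    return [{**row, field: (row[field] - low) / span} for row in records]

def split(records, train=0.7, val=0.15, seed=42):
    """Shuffle, then divide into training, validation, and test sets."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

# Hypothetical sensor readings: one row has a missing value, one is a duplicate.
raw = [{"temp": float(t), "label": t % 2} for t in range(20)]
raw.append({"temp": None, "label": 1})
raw.append({"temp": 3.0, "label": 1})

data = min_max_normalize(clean(raw), "temp")
train_set, val_set, test_set = split(data)
print(len(train_set), len(val_set), len(test_set))
```

In a real project the normalization statistics would usually be computed on the training split only and then applied to the other splits, so that no information leaks from the test set.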

Expert Insights

- Quality > quantity: Netguru warns that poor data quality and insufficient quantity are common reasons AI projects fail. Collect enough data, but prioritize quality.

- Data grows fast: The AI Index 2025 notes that training compute doubles every five months and dataset sizes double every eight months. Plan your storage and compute infrastructure accordingly.

- Edge case handling: In edge AI deployments, data may be processed locally on low-power devices like the Raspberry Pi, as shown in the Stream Analyze manufacturing case study. Local processing can enhance security and reduce latency.

Creative Example: Building an Image Dataset

Suppose you’re building an AI system to classify flowers. You could collect images from public datasets, add your own photos, and ask community contributors to share pictures from different regions. Then, label each image according to its species. Remove duplicates and ensure images are balanced across classes. Finally, augment the data by rotating and flipping images to improve robustness. For privacy-sensitive tasks, consider generating synthetic examples using generative adversarial networks (GANs).
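
To make the augmentation step concrete, here is a small sketch that flips and rotates an image represented as a nested list of pixel values. The representation and the function names are assumptions for illustration; an image library would normally handle this on real image files.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Rotate the pixel grid a quarter turn clockwise."""
    return [list(column) for column in zip(*image[::-1])]

def augment(image):
    """Return the original image plus two transformed copies."""
    return [image, flip_horizontal(image), rotate_90_clockwise(image)]

# A tiny 2x2 "image" stands in for a flower photo.
tiny = [[1, 2],
        [3, 4]]
for variant in augment(tiny):
    print(variant)
```

Each variant keeps the label of the source image, so one labeled photo yields three training examples.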

Choosing the Right Algorithm and Architecture

How do you decide between machine learning and deep learning?

After defining your problem and assembling a dataset, the next step is selecting an appropriate algorithm. The choice depends on data type, task, interpretability requirements, compute resources, and deployment environment.

- Traditional Machine Learning: For small datasets or tabular data, algorithms like linear regression, logistic regression, decision trees, random forests, or support vector machines often perform well and are easy to interpret.

- Deep Learning: For complex patterns in images, speech, or text, convolutional neural networks (CNNs) handle images, recurrent neural networks (RNNs) or transformers process sequences, and reinforcement learning optimizes decision-making tasks.

- Generative Models: For tasks like text generation, image synthesis, or data augmentation, transformers (e.g., GPT-family), diffusion models, and GANs excel. Generative AI can produce new content and is particularly useful in creative industries.

- Hybrid Approaches: Combine traditional models with neural networks or integrate retrieval-augmented generation (RAG) to inject current knowledge into generative models.

Expert Insights

- Match models to tasks: Techstack highlights the importance of aligning algorithms with problem types (classification, regression, generative).

- Generative AI capabilities: MIT Sloan stresses that generative models can outperform traditional ML in tasks requiring language understanding. However, domain-specific or privacy-sensitive tasks may still rely on classical approaches.

- Explainability: If decisions must be explained (e.g., in healthcare or finance), choose interpretable models (decision trees, logistic regression) or use explainable AI tools (SHAP, LIME) with complex architectures.

Creative Example: Choosing an Algorithm for Text Classification

Suppose you need to classify customer feedback into categories (positive, negative, neutral). For a small dataset, a Naive Bayes or support vector machine might suffice. If you have large amounts of textual data, consider a transformer-based classifier like BERT. For domain-specific accuracy, a model fine-tuned on your data typically yields better results. Clarifai’s model zoo and training pipeline can simplify this process by providing pretrained models and transfer learning options.
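
For the small-dataset case, a Naive Bayes classifier is short enough to sketch in full. The version below is a bare-bones multinomial Naive Bayes with add-one smoothing, written from scratch for illustration. The six feedback sentences are invented, and a library implementation would be the usual choice in practice.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(examples):
    """examples: list of (text, label). Returns the counts needed for prediction."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in examples:
        tokens = text.lower().split()
        label_counts[label] += 1
        word_counts[label].update(tokens)
        vocabulary.update(tokens)
    return label_counts, word_counts, vocabulary

def predict(model, text):
    """Return the label with the highest log-probability for the text."""
    label_counts, word_counts, vocabulary = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label, doc_count in label_counts.items():
        score = math.log(doc_count / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for token in text.lower().split():
            # Add-one smoothing so unseen words do not zero out the score.
            count = word_counts[label][token] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Invented training data for illustration only.
feedback = [
    ("great product love it", "positive"),
    ("excellent support great experience", "positive"),
    ("terrible quality very disappointed", "negative"),
    ("awful support terrible experience", "negative"),
    ("delivery arrived on monday", "neutral"),
    ("order arrived in a box", "neutral"),
]
model = train_naive_bayes(feedback)
print(predict(model, "great experience love the support"))
print(predict(model, "terrible awful quality"))
```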

Selecting Tools, Frameworks and Infrastructure

Which frameworks and tools should you use?

Tools and frameworks help you build, train, and deploy AI models efficiently. Choosing the right tech stack depends on your programming language preference, deployment target, and team expertise.

- Programming Languages: Python is the most popular, thanks to its vast ecosystem (NumPy, pandas, scikit-learn, TensorFlow, PyTorch). R suits statistical analysis; Julia offers high performance; Java and Scala integrate well with enterprise systems.

- Frameworks: TensorFlow, PyTorch, and Keras are leading deep-learning frameworks. Scikit-learn offers a rich set of machine-learning algorithms for classical tasks. H2O.ai provides AutoML capabilities.

- Data Management: Use pandas and NumPy for tabular data, SQL/NoSQL databases for storage, and Spark or Hadoop for large datasets.

- Visualization: Tools like Matplotlib, Seaborn, and Plotly help plot performance metrics. Tableau or Power BI integrate with enterprise dashboards.

- Deployment Tools: Docker and Kubernetes help containerize and orchestrate applications. Flask or FastAPI expose models via REST APIs. MLOps platforms like MLflow and Kubeflow manage the model lifecycle.

- Edge AI: For real-time or privacy-sensitive applications, use low-power hardware such as Raspberry Pi or Nvidia Jetson, or specialized chips like neuromorphic processors.

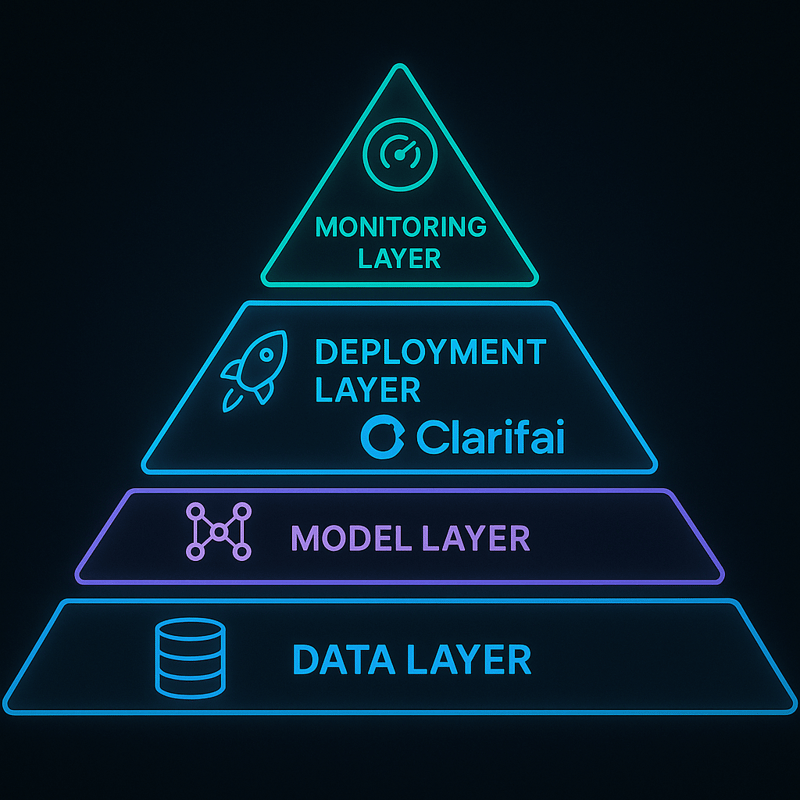

- Clarifai Platform: Clarifai offers model orchestration, pretrained models, workflow editing, local runners, and secure deployment. You can fine-tune Clarifai models or bring your own models for inference. Clarifai’s compute orchestration streamlines training and inference across cloud, on-premises, or edge environments.

Expert Insights

- Framework choice matters: Netguru lists TensorFlow, PyTorch, and Keras as leading options with strong communities. Prismetric expands the list to include Hugging Face, Julia, and RapidMiner.

- Multi-layer architecture: Techstack outlines the five layers of AI architecture: infrastructure, data processing, service, model, and application. Choose tools that integrate across these layers.

- Edge hardware innovations: The 2025 Edge AI report describes specialized hardware for on-device AI, including neuromorphic chips and quantum processors.

Creative Example: Building a Chatbot with Clarifai

Let’s say you want to create a customer-support chatbot. You can use Clarifai’s pretrained language models to recognize user intent and generate responses. Use Flask to build an API endpoint and containerize the app with Docker. Clarifai’s platform can handle compute orchestration, scaling the model across multiple servers. If you need on-device performance, you can run the model on a local runner in the Clarifai environment, ensuring low latency and data privacy.

Training and Tuning Your Model

How do you train an AI model effectively?

Training involves feeding data into your model, calculating predictions, computing a loss, and adjusting parameters via backpropagation. Key decisions include choosing loss functions (cross-entropy for classification, mean squared error for regression), optimizers (SGD, Adam, RMSProp), and hyperparameters (learning rate, batch size, epochs).

- Initialize the model: Set up the architecture and initialize weights.

- Feed the training data: Forward propagate through the network to generate predictions.

- Compute the loss: Measure how far predictions are from true labels.

- Backpropagation: Compute the gradients of the loss and update the weights using gradient descent.

- Repeat: Iterate for multiple epochs until the model converges.

- Validate and tune: Evaluate on a validation set; adjust hyperparameters (learning rate, regularization strength, architecture depth) using grid search, random search, or Bayesian optimization.

- Avoid overfitting: Use techniques like dropout, early stopping, and L1/L2 regularization.
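
The loop described in the steps above can be sketched with a one-layer model, where the gradient has a closed form and no deep-learning framework is needed. This is a simplified illustration: it uses logistic regression instead of a multi-layer network, full-batch gradient descent instead of mini-batches, and a toy dataset invented for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(weights, bias, data):
    """Mean cross-entropy loss over (features, label) pairs."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, y in data:
        p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)

def train(train_data, val_data, learning_rate=0.5, epochs=200, patience=5):
    weights = [0.0] * len(train_data[0][0])  # initialize the model
    bias = 0.0
    best_val, stale = float("inf"), 0
    for _ in range(epochs):  # repeat for several epochs
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in train_data:
            # Forward pass: prediction for one example.
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            # Gradient of the cross-entropy loss with respect to the logit.
            error = p - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        n = len(train_data)
        # Gradient descent update.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
        # Validate, and stop early if the validation loss stops improving.
        val_loss = log_loss(weights, bias, val_data)
        if val_loss < best_val - 1e-6:
            best_val, stale = val_loss, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return weights, bias, best_val

# Toy one-feature dataset: small values are class 0, large values are class 1.
train_data = [([0.1], 0), ([0.2], 0), ([0.3], 0), ([0.7], 1), ([0.8], 1), ([0.9], 1)]
val_data = [([0.25], 0), ([0.75], 1)]
weights, bias, best_val = train(train_data, val_data)
print(weights, bias, best_val)
```

This sketch returns the weights from the final epoch; a fuller version would keep a copy of the weights from the best validation epoch and restore them when stopping early.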

Expert Insights

- Hyperparameter tuning is key: Prismetric stresses balancing underfitting and overfitting and suggests automated tuning methods.

- Compute demands are growing: The AI Index notes that training compute for notable models doubles every five months; GPT-4o required 38 billion petaFLOPs, whereas AlexNet needed 470 petaFLOPs. Use efficient hardware and adjust training schedules accordingly.

- Use cross-validation: Techstack recommends cross-validation to avoid overfitting and to select robust models.

Creative Example: Hyperparameter Tuning Using Clarifai

Suppose you train an image classifier. You can experiment with learning rates from 0.001 to 0.1, batch sizes from 32 to 256, and dropout rates between 0.3 and 0.5. Clarifai’s platform can orchestrate multiple training runs in parallel, automatically tracking hyperparameters and metrics. Once the best parameters are identified, Clarifai allows you to snapshot the model and deploy it seamlessly.
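
A random search over those ranges might look like the sketch below. It is deliberately generic and does not use any Clarifai API. The `train_and_score` function is a hypothetical stand-in that returns a made-up score so the example runs on its own; in a real project it would launch a training run and return a validation metric.

```python
import math
import random

def sample_config(rng):
    """Draw one configuration from the ranges discussed in the text."""
    return {
        "learning_rate": 10 ** rng.uniform(-3, -1),  # log-uniform in [0.001, 0.1]
        "batch_size": rng.choice([32, 64, 128, 256]),
        "dropout": rng.uniform(0.3, 0.5),
    }

def train_and_score(config):
    """Hypothetical stand-in for a training run; returns a fake validation score."""
    lr_penalty = (math.log10(config["learning_rate"]) + 2) ** 2
    dropout_penalty = (config["dropout"] - 0.4) ** 2
    return 0.9 - 0.05 * lr_penalty - dropout_penalty

def random_search(n_trials=20, seed=0):
    """Evaluate n_trials random configurations and return the best (score, config)."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        config = sample_config(rng)
        trials.append((train_and_score(config), config))
    return max(trials, key=lambda trial: trial[0])

best_score, best_config = random_search()
print(best_score, best_config)
```

Sampling the learning rate on a log scale spreads the trials evenly across orders of magnitude, which usually matters more than even spacing in absolute terms.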

Evaluating and Validating Your Model

How do you know if your AI model works?

Evaluation ensures that the model performs well not just on the training data but also on unseen data. Choose metrics based on your problem type:

- Classification: Use accuracy, precision, recall, F1 score, and ROC-AUC. Analyze confusion matrices to understand misclassifications.

- Regression: Compute mean squared error (MSE), root mean squared error (RMSE), and mean absolute error (MAE).

- Generative tasks: Measure with BLEU, ROUGE, or Fréchet Inception Distance (FID), or use human evaluation for more subjective outputs.

- Fairness and robustness: Evaluate across different demographic groups, monitor for data drift, and test adversarial robustness.

Divide the data into training, validation, and test sets so that overfitting shows up on data the model has not seen. Use cross-validation when data is limited. For time series or sequential data, employ walk-forward validation to mimic real-world deployment.
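
The classification and regression metrics above, along with walk-forward splitting, are simple enough to write out directly. The following sketch uses only the standard library; the function names and the sample labels are assumptions for illustration.

```python
import math

def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels encoded as 0 and 1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

def precision_recall_f1(y_true, y_pred):
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def regression_errors(y_true, y_pred):
    """Return (MSE, RMSE, MAE)."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / len(errors)
    mae = sum(abs(e) for e in errors) / len(errors)
    return mse, math.sqrt(mse), mae

def walk_forward_splits(n_samples, n_folds, min_train):
    """Yield (train_indices, test_indices); each test window follows its training window."""
    fold_size = (n_samples - min_train) // n_folds
    for k in range(n_folds):
        train_end = min_train + k * fold_size
        yield list(range(train_end)), list(range(train_end, train_end + fold_size))

print(precision_recall_f1([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 1, 0, 1, 0]))
print(regression_errors([3.0, 5.0, 2.0], [2.0, 5.0, 4.0]))
for train_idx, test_idx in walk_forward_splits(10, 3, 4):
    print(train_idx, test_idx)
```

In the walk-forward splits the training window only ever grows forward in time, so the model is never evaluated on data older than what it was trained on.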

Expert Insights

- Multiple metrics: Prismetric emphasizes combining metrics (e.g., precision and recall) to get a holistic view.

- Responsible evaluation: Microsoft highlights the importance of rigorous testing to ensure fairness and safety. Evaluating AI models on different scenarios helps identify biases and vulnerabilities.

- Generative caution: MIT Sloan warns that generative models can sometimes produce plausible but incorrect responses; human oversight is still needed.

Creative Example: Evaluating a Customer Churn Model

Suppose you built a model to predict customer churn for a streaming service. Evaluate precision (the proportion of predicted churners who actually churn) and recall (the proportion of all churners correctly identified). If the model achieves 90% precision but 60% recall, you may need to lower the decision threshold to catch more churners, usually at some cost to precision. Visualize results in a confusion matrix, and check performance across age groups to ensure fairness.
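
The effect of moving the threshold is easy to see on a handful of scored customers. The scores and labels below are invented for illustration; they are not drawn from any real churn model.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when every score >= threshold is predicted as churn."""
    predicted = [score >= threshold for score in scores]
    tp = sum(1 for p, y in zip(predicted, labels) if p and y == 1)
    fp = sum(1 for p, y in zip(predicted, labels) if p and y == 0)
    fn = sum(1 for p, y in zip(predicted, labels) if not p and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical churn probabilities and true outcomes (1 = churned).
scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.45, 0.4, 0.35, 0.2, 0.1]
labels = [1, 1, 1, 1, 0, 1, 0, 1, 0, 0]

for threshold in (0.5, 0.3):
    precision, recall = precision_recall_at(scores, labels, threshold)
    print(threshold, round(precision, 2), round(recall, 2))
```

On this toy data, lowering the threshold from 0.5 to 0.3 catches every churner while letting in one more false alarm, which is the trade-off described above.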

Deployment and Integration

How do you deploy an AI model into production?

Deployment turns your trained model into a usable service. Consider the environment (cloud vs. on-premises vs. edge), latency requirements, scalability, and security.

- Containerize your model: Use Docker to package the model with its dependencies. This ensures consistency across development and production.

- Choose an orchestration platform: Kubernetes manages scaling, load balancing, and resilience. For serverless deployments, use AWS Lambda, Google Cloud Functions, or Azure Functions.

- Expose via an API: Build a REST endpoint with a framework like Flask or FastAPI, or offer a gRPC service. Clarifai’s platform provides an API gateway that integrates with your application.

- Secure your deployment: Implement SSL/TLS encryption, authentication (JWT or OAuth2), and authorization. Use environment variables for secrets and ensure compliance with regulations.

- Monitor performance: Track metrics such as response time, throughput, and error rates. Add automated retries and fallback logic for robustness.

- Edge deployment: For latency-sensitive or privacy-sensitive use cases, deploy models to edge devices. Clarifai’s local runners let you run inference on-premises or on low-power devices without sending data to the cloud.
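
As a rough sketch of the "expose via an API" step, the example below serves predictions over HTTP using only Python's standard library. This is a swapped-in stand-in for Flask or FastAPI, chosen so the example has no dependencies. The `/predict` path, the request shape, and the rule-based `predict` function are all assumptions, not part of any real service.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

def predict(features):
    """Hypothetical stand-in for a trained model: flags large transaction amounts."""
    return {"fraud": features["amount"] > 1000}

def handle_predict(raw_body):
    """Validate a JSON request body and return (status_code, response_dict)."""
    try:
        payload = json.loads(raw_body)
    except (ValueError, TypeError):
        return 400, {"error": "body must be valid JSON"}
    features = payload.get("features") if isinstance(payload, dict) else None
    if not isinstance(features, dict):
        return 400, {"error": "missing 'features' object"}
    if not isinstance(features.get("amount"), (int, float)):
        return 400, {"error": "'amount' must be a number"}
    return 200, predict(features)

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_predict(self.rfile.read(length))
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

def serve(host="127.0.0.1", port=8000):
    """Start the server; blocks until interrupted."""
    HTTPServer((host, port), PredictHandler).serve_forever()

# The request logic can be exercised without starting the server:
print(handle_predict(b'{"features": {"amount": 5000}}'))
print(handle_predict(b"not json"))
```

Keeping validation and prediction in `handle_predict`, separate from the HTTP handler, makes the logic testable without a network and easy to move behind a different framework. Calling `serve()` starts the endpoint locally; TLS and authentication, as listed above, would still need to be added in front of it.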

Expert Insights

- Modular design: Techstack encourages building modular architectures to facilitate scaling and integration.

- Edge case: The Amazon Go case study demonstrates edge AI deployment, where sensor data is processed locally to enable cashierless shopping. This reduces latency and protects customer privacy.

- MLOps tools: OpenXcell notes that integrating monitoring and automated deployment pipelines is crucial for sustainable operations.

Creative Example: Deploying a Fraud Detection Model

A fintech company trains a model to identify fraudulent transactions. They containerize the model with Docker, deploy it to AWS Elastic Kubernetes Service, and expose it via FastAPI. Clarifai’s platform helps orchestrate compute resources and provides fallback inference on a local runner when network connectivity is unstable. Real-time predictions return within 50 milliseconds, supporting high throughput. The team monitors the model’s precision and recall to adjust thresholds and triggers an alert if precision drops below 90%.

Continuous Monitoring, Maintenance and MLOps

Why is AI lifecycle management important?

AI models aren’t “set and forget” systems; they require continuous monitoring to detect performance degradation, concept drift, or bias. MLOps combines DevOps principles with machine learning workflows to manage models from development to production.

- Monitor performance metrics: Continuously track accuracy, latency, and throughput. Identify and investigate anomalies.

- Detect drift: Monitor input data distributions and output predictions to identify data drift or concept drift. Tools like Alibi Detect and Evidently can alert you when drift occurs.

- Version control: Use Git or dedicated model versioning tools (e.g., DVC, MLflow) to track data, code, and model versions. This ensures reproducibility and simplifies rollbacks.

- Automate retraining: Set up scheduled retraining pipelines to incorporate new data. Use continuous integration/continuous deployment (CI/CD) pipelines to test and deploy new models.

- Energy and cost optimization: Monitor compute resource usage, adjust model architectures, and explore hardware acceleration. The AI Index notes that as training compute doubles every five months, energy consumption becomes a significant issue. Green AI focuses on reducing carbon footprint through efficient algorithms and energy-aware scheduling.

- Clarifai MLOps: Clarifai provides tools for monitoring model performance, retraining on new data, and deploying updates with minimal downtime. Its workflow engine ensures that data ingestion, preprocessing, and inference are orchestrated reliably across environments.
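
One simple way to quantify input drift is the Population Stability Index (PSI), which compares how a feature is distributed across bins in a baseline sample and in recent data. The sketch below is a from-scratch illustration, not how Alibi Detect or Evidently work internally. The bin count, the floor value, and the sample data are assumptions.

```python
import math

def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline sample and a recent sample."""
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # fall back to 1.0 for a constant baseline

    def proportions(values):
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    baseline, recent = proportions(expected), proportions(actual)
    return sum((r - b) * math.log(r / b) for b, r in zip(baseline, recent))

# Hypothetical feature values: the recent data has shifted toward higher values.
baseline_sample = [i / 100 for i in range(100)]
shifted_sample = [0.5 + i / 200 for i in range(100)]

print(psi(baseline_sample, baseline_sample))
print(psi(baseline_sample, shifted_sample))
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, though the right alert level depends on the feature and the application.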

Expert Insights

- Continuous monitoring is essential: Techstack warns that concept drift can occur as a result of changing data distributions; monitoring enables early detection.

- Energy-efficient AI: Microsoft highlights the need for resource-efficient AI, advocating for innovations like liquid cooling and carbon-free energy.

- Security: Ensure data encryption, access control, and audit logging. Use federated learning or edge deployment to maintain privacy.

Creative Example: Monitoring a Voice Assistant

A company deploys a voice assistant that processes millions of voice queries daily. They monitor latency, error rates, and confidence scores in real time. When the assistant starts misinterpreting certain accents (concept drift), they collect new data, retrain the model, and redeploy it. Clarifai’s monitoring tools trigger an alert when accuracy drops below 85%, and the MLOps pipeline automatically kicks off a retraining job.
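
The alerting rule in this example can be sketched as a rolling accuracy window. The class below is a generic illustration and does not use any Clarifai API. The window size and class name are assumptions, and the 85% threshold comes from the example above.

```python
from collections import deque

class AccuracyMonitor:
    """Tracks accuracy over the most recent predictions and flags when it drops."""

    def __init__(self, window=100, threshold=0.85):
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically
        self.threshold = threshold

    def record(self, prediction, actual):
        """Store one outcome and report whether retraining should be triggered."""
        self.outcomes.append(prediction == actual)
        return self.needs_retraining()

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Only judge once the window is full, to avoid alerts on a few early samples.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.threshold

monitor = AccuracyMonitor(window=10, threshold=0.85)
for _ in range(9):
    monitor.record("play music", "play music")       # correct predictions
print(monitor.record("call mom", "call tom"))        # 9 of 10 correct, no alert yet
print(monitor.record("set alarm", "set a timer"))    # 8 of 10 correct, alert
```

In a pipeline, a `True` result from `record` would be the signal that starts the retraining job.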

Security, Privacy, and Ethical Considerations

How do you build responsible AI?

AI systems can create unintended harm if not designed responsibly. Ethical considerations include privacy, fairness, transparency, and accountability. Data regulations (GDPR, HIPAA, CCPA) demand compliance; failure can result in hefty penalties.

- Privacy: Use data anonymization, pseudonymization, and encryption to protect personal data. Federated learning enables collaborative training without sharing raw data.

- Fairness and bias mitigation: Identify and address biases in data and models. Use techniques like re-sampling, re-weighting, and adversarial debiasing. Test models on diverse populations.

- Transparency: Implement model cards and datasheets to document model behavior, training data, and intended use. Explainable AI tools like SHAP and LIME make decision processes more interpretable.

- Human oversight: Keep humans in the loop for high-stakes decisions. Autonomous agents can chain actions together with minimal human intervention, but they also carry risks like unintended behavior and bias escalation.

- Regulatory compliance: Keep up with evolving AI laws in the US, EU, and other regions. Ensure your model’s data collection and inference practices follow guidelines.
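
Pseudonymization, mentioned in the privacy item above, can be sketched with a keyed hash from the standard library. The key below is a placeholder for illustration only; a real key would come from a secrets manager and never appear in source code.

```python
import hashlib
import hmac

def pseudonymize(identifier, secret_key):
    """Replace an identifier with a stable keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

# Placeholder key for illustration; load a real one from a secure store.
key = b"example-key-do-not-use"

record = {"email": "pat@example.com", "heart_rate": 72}
safe_record = {**record, "email": pseudonymize(record["email"], key)}
print(safe_record)
```

The same input always maps to the same token, so records can still be joined across tables, while recovering the original value requires the key. Whether this is sufficient for a given regulation is a legal question that the code does not settle.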

Expert Insights

- Trust challenges: The AI Index notes that fewer people trust AI companies to safeguard their data, prompting new regulations.

- Autonomous agent risks: According to Times Of AI, agents that chain actions can lead to unintended consequences; human supervision and explicit ethics are essential.

- Responsibility in design: Microsoft emphasizes that AI requires human oversight and ethical frameworks to avoid misuse.

Creative Example: Handling Sensitive Health Data

Consider an AI model that predicts heart disease from wearable sensor data. To protect patients, data is encrypted on devices and processed locally using a Clarifai local runner. Federated learning aggregates model updates from multiple hospitals without transmitting raw data. Model cards document the training data (e.g., 40% female, ages 20–80) and known limitations (e.g., less accurate for patients with rare conditions), while the system alerts clinicians rather than making final decisions.
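
The aggregation step in federated learning is often a sample-weighted average of the weights each site trained locally, an approach known as federated averaging. The sketch below shows only that step, with invented numbers; the hospital names and weight vectors are hypothetical, and real systems add secure communication and many training rounds.

```python
def federated_average(client_updates):
    """client_updates: list of (weights, n_samples). Returns the sample-weighted mean."""
    total_samples = sum(n for _, n in client_updates)
    size = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(size)
    ]

# Hypothetical locally trained weights from two hospitals; only these
# numbers leave each site, never the underlying patient records.
hospital_a = ([1.0, 2.0], 100)   # trained on 100 patients
hospital_b = ([3.0, 4.0], 300)   # trained on 300 patients

global_weights = federated_average([hospital_a, hospital_b])
print(global_weights)
```

Weighting by sample count means the larger hospital pulls the shared model further toward its local result, which is why the averaged weights land closer to hospital B's values.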

Industry-Specific Applications & Real-World Case Studies

Healthcare: Improving Diagnostics and Personalized Care

In healthcare, AI accelerates drug discovery, diagnosis, and treatment planning. IBM watsonx.ai and DeepMind’s AlphaFold 3 help clinicians understand protein structures and identify drug targets. Edge AI enables remote patient monitoring: portable devices analyze heart rhythms in real time, improving response times and protecting data.

Expert Insights

- Remote monitoring: Edge AI allows wearable devices to analyze vitals locally, ensuring privacy and reducing latency.

- Personalization: AI tailors treatments to individual genetics and lifestyles, improving outcomes.

- Compliance: Healthcare AI must adhere to HIPAA and FDA guidelines.

Finance: Fraud Detection and Risk Management

AI transforms the financial sector by improving fraud detection, credit scoring, and algorithmic trading. Darktrace spots anomalies in real time; Numerai Signals uses crowdsourced data for investment predictions; Upstart AI improves credit decisions, allowing more inclusive lending. Clarifai’s model orchestration can integrate real-time inference into high-throughput systems, while local runners ensure sensitive transaction data never leaves the organization.

Expert Insights

- Real-time detection: AI models must deliver sub-second decisions to catch fraudulent transactions.

- Fairness: Credit scoring models must avoid discriminating against protected groups and should be transparent.

- Edge inference: Processing data locally reduces the risk of interception and supports compliance.

Retail: Hyper-Personalization and Autonomous Stores

Retailers leverage AI for personalized experiences, demand forecasting, and AI-generated advertisements. Tools like Vue.ai, Lily AI, and Granify personalize shopping and optimize conversions. Amazon Go’s Just Walk Out technology uses edge AI to enable cashierless shopping, processing video and sensor data locally. Clarifai’s vision models can analyze customer behavior in real time and generate context-aware recommendations.

Expert Insights

- Customer satisfaction: Eliminating checkout lines improves the shopping experience and increases loyalty.

- Data privacy: Retail AI must comply with privacy laws and protect consumer data.

- Real-time recommendations: Edge AI and low-latency models keep suggestions relevant as customers browse.

Education: Adaptive Learning and Conversational Tutors

Educational platforms use AI to personalize learning paths, grade assignments, and provide tutoring. MagicSchool AI (2025 edition) plans lessons for teachers; Khanmigo by Khan Academy tutors students through conversation; Diffit helps educators tailor assignments. Clarifai’s NLP models can power intelligent tutoring systems that adapt in real time to a student’s comprehension level.

Expert Insights

- Equity: Ensure adaptive systems don’t widen achievement gaps. Provide transparency about how recommendations are generated.

- Ethics: Avoid recording unnecessary data about minors and comply with COPPA.

- Accessibility: Use multimodal content (text, speech, visuals) to accommodate diverse learning styles.

Manufacturing: Predictive Maintenance and Quality Control

Manufacturers use AI for predictive maintenance, robotics automation, and quality assurance. Bright Machines Microfactories simplify production lines; Instrumental.ai identifies defects; Vention MachineMotion 3 enables adaptive robots. The Stream Analyze case study shows that deploying edge AI directly on the production line (using a Raspberry Pi) improved inspection speed 100-fold and maintained data security.

Expert Insights

- Localized AI: Processing data on devices ensures confidentiality and reduces network dependency.

- Predictive analytics: AI can reduce downtime by predicting equipment failure and scheduling maintenance.

- Scalability: Edge AI frameworks must be scalable and flexible to adapt to different factories and machines.

Future Trends and Emerging Topics

What will shape AI development in the next few years?

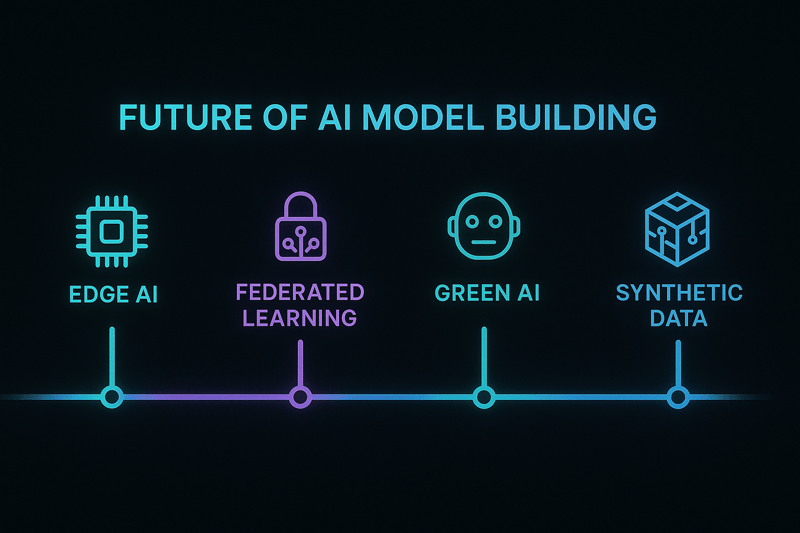

As AI matures, several trends are reshaping model development and deployment. Understanding these trends helps ensure your models remain relevant, efficient, and responsible.

Multimodal AI and Human‑AI Collaboration

- Multimodal AI: Systems that integrate text, images, audio, and video enable rich, human-like interactions. Virtual agents can respond using voice, chat, and visuals, creating highly personalized customer service and educational experiences.

- Human-AI collaboration: AI is automating routine tasks, allowing humans to focus on creativity and strategic decision-making. However, humans must interpret AI-generated insights ethically.

Autonomous Agents and Agentic Workflows

- Specialized agents: Tools like AutoGPT and Devin autonomously chain tasks, performing research and operations with minimal human input. They can speed up discovery but require oversight to prevent unintended behavior.

- Workflow automation: Agentic workflows will transform how teams handle complex processes, from supply chain management to product design.

Green AI and Sustainable Compute

- Energy efficiency: AI training and inference consume vast amounts of energy. Innovations such as liquid cooling, carbon-free energy, and energy-aware scheduling reduce environmental impact. New research shows training compute is doubling every five months, making sustainability crucial.

- Algorithmic efficiency: Emerging algorithms and hardware (e.g., neuromorphic chips) aim to achieve equivalent performance with lower energy usage.

Edge AI and Federated Learning

- Federated learning: Enables decentralized model training across devices without sharing raw data. The market value for federated learning could reach $300 million by 2030. Multi-prototype FL trains specialized models for different locations and combines them.

- 6G and quantum networks: Next-gen networks will support faster synchronization across devices.

- Edge Quantum Computing: Hybrid quantum-classical models will enable real-time decisions at the edge.

Retrieval‑Augmented Era (RAG) and AI Brokers

- Mature RAG: Strikes past static data retrieval to include actual‑time knowledge, sensor inputs, and data graphs. This considerably improves response accuracy and context.

- AI brokers in enterprise: Area‑particular brokers automate authorized overview, compliance monitoring, and customized suggestions.

Open-Source and Transparency

- Democratization: Low-cost open-source models such as Llama 3.1, DeepSeek R1, Gemma, and Mixtral 8×22B offer cutting-edge performance.

- Transparency: Open models enable researchers and developers to inspect and improve algorithms, increasing trust and accelerating innovation.

Expert Insights for the Future

- Edge is the new frontier: Times Of AI predicts that edge AI and multimodal systems will dominate the next wave of innovation.

- Federated learning will be essential: The 2025 Edge AI report calls federated learning a cornerstone of decentralized intelligence, with quantum federated learning on the horizon.

- Responsible AI is non-negotiable: Regulatory frameworks worldwide are tightening; practitioners must prioritize fairness, transparency, and human oversight.

Pitfalls, Challenges & Practical Solutions

What can go wrong, and how do you avoid it?

Building AI models is challenging; awareness of potential pitfalls lets you mitigate them proactively.

- Poor data quality and bias: Garbage in, garbage out. Invest in data collection and cleaning. Audit data for hidden biases and balance your dataset.

- Over-fitting or under-fitting: Use cross-validation and regularization. To curb over-fitting, add dropout layers, reduce model complexity, or gather more data; to address under-fitting, increase model capacity or train longer.

- Insufficient computing resources: Training large models requires GPUs or specialized hardware. Clarifai's compute orchestration can allocate resources efficiently. Explore energy-efficient algorithms and hardware.

- Integration challenges: Legacy systems may not interact seamlessly with AI services. Use modular architectures and standardized protocols (REST, gRPC). Plan integration from the project's outset.

- Ethical and compliance risks: Always consider privacy, fairness, and transparency. Document your model's purpose and limitations. Use federated learning or on-device inference to protect sensitive data.

- Concept drift and model degradation: Monitor data distributions and performance metrics. Use MLOps pipelines to retrain when performance drops.
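As one way to act on the drift bullet above, the sketch below compares a feature's training-time distribution with recent production values using the Population Stability Index (PSI). This is an illustrative choice, not a method the guide prescribes. The helper names, the ten-bin layout, and the 0.2 retraining threshold (a common rule of thumb) are all assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Measure how far `current` has shifted from `reference` (0.0 means identical)."""
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference data has no spread")
    width = (hi - lo) / bins

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        eps = 1e-6  # avoid log(0) for empty buckets
        return [max(c / len(values), eps) for c in counts]

    ref_shares = bucket_shares(reference)
    cur_shares = bucket_shares(current)
    return sum(
        (c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares)
    )


def needs_retraining(psi: float, threshold: float = 0.2) -> bool:
    """Flag the model for retraining when drift exceeds the assumed threshold."""
    return psi > threshold


# Hypothetical feature values: training-time data vs. a shifted production sample.
training_values = [i / 100 for i in range(1000)]
production_values = [v + 3.0 for v in training_values]

psi = population_stability_index(training_values, production_values)
print(f"PSI={psi:.3f}, retrain={needs_retraining(psi)}")
```

In an MLOps pipeline, a check like this would typically run on a schedule per feature, alongside direct monitoring of accuracy or other performance metrics.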

Creative Example: Over-fitting in a Small Dataset

A startup built an AI model to predict stock price movements using a small dataset. Initially, the model achieved 99% accuracy on training data but only 60% on the test set, a classic case of over-fitting. They fixed the issue by adding dropout layers, using early stopping, regularizing parameters, and collecting more data. They also simplified the architecture and implemented k-fold cross-validation to ensure robust performance.
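Two of the remedies in this example, k-fold cross-validation and early stopping, can be sketched without any ML framework. The functions below are hypothetical helpers, not the startup's actual code. The folds are contiguous and unshuffled for simplicity, and time-ordered data such as stock prices usually calls for a forward-chaining split instead.

```python
from typing import Iterator, List, Sequence, Tuple


def k_fold_indices(
    n_samples: int, k: int
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [
        n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)
    ]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size


def early_stopping_epoch(val_losses: Sequence[float], patience: int = 2) -> int:
    """Return the epoch with the best validation loss seen before stopping.

    Training stops once the loss has failed to improve for `patience` epochs.
    """
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            break
    return best_epoch


# Every sample is used for validation exactly once across the folds.
for train_idx, val_idx in k_fold_indices(n_samples=5, k=2):
    print("train:", train_idx, "validate:", val_idx)

# Hypothetical validation losses: improvement stalls after epoch 2.
print(early_stopping_epoch([0.9, 0.7, 0.6, 0.65, 0.7, 0.5], patience=2))
```

A large gap between training and validation scores across folds is the same warning sign as the 99% versus 60% split described above.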

Conclusion: Building AI Models with Responsibility and Vision

Creating an AI model is a journey that spans strategic planning, data mastery, algorithmic expertise, robust engineering, ethical responsibility, and continuous improvement. Clarifai can help you on this journey with tools for compute orchestration, pretrained models, workflow management, and edge deployments. As AI continues to evolve, embracing multimodal interactions, autonomous agents, green computing, and federated intelligence, practitioners must remain adaptable, ethical, and visionary. By following this comprehensive guide and keeping an eye on emerging trends, you'll be well-equipped to build AI models that not only perform but also inspire trust and deliver real value.

Frequently Asked Questions (FAQs)

Q1: How long does it take to build an AI model?

Building an AI model can take anywhere from a few weeks to several months, depending on the complexity of the problem, the availability of data, and the team's expertise. A simple classification model can be up and running within days, while a robust, production-ready system that meets compliance and fairness requirements may take months.

Q2: What programming language should I use?

Python is the most popular language for AI due to its extensive libraries and community support. Other options include R for statistical analysis, Julia for high performance, and Java/Scala for enterprise integration. Clarifai's SDKs provide interfaces in multiple languages, simplifying integration.

Q3: How do I handle data privacy?

Use anonymization, encryption, and access controls. For collaborative training, consider federated learning, which trains models across devices without sharing raw data. Clarifai's platform supports secure data handling and local inference.
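As a small illustration of the first technique, the sketch below replaces a direct identifier with a keyed hash before the record enters a training pipeline. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can re-link records. The function names, field names, and placeholder key are assumptions for illustration only.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Return a stable keyed SHA-256 digest that stands in for the raw value."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


def pseudonymize_record(
    record: dict, sensitive_fields: set, secret_key: bytes
) -> dict:
    """Copy a record, hashing only the fields listed as sensitive."""
    return {
        field: pseudonymize(str(val), secret_key)
        if field in sensitive_fields
        else val
        for field, val in record.items()
    }


# Placeholder key: in practice, load it from a secret store, never from source code.
SECRET_KEY = b"replace-with-a-key-from-your-secret-store"

record = {"email": "Ada@Example.com", "age": 36}
safe_record = pseudonymize_record(record, {"email"}, SECRET_KEY)
print(safe_record)
```

Because the digest is deterministic for a given key, the same person maps to the same token across datasets, so joins still work without exposing the raw identifier.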

Q4: What's the difference between machine learning and generative AI?

Machine learning focuses on recognizing patterns and making predictions, while generative AI creates new content (text, images, music) based on learned patterns. Generative models like transformers and diffusion models are particularly useful for creative tasks and data augmentation.

Q5: Do I need expensive hardware to build an AI model?

Not always. You can start with cloud-based services or pretrained models. For large models, GPUs or specialized hardware improve training efficiency. Clarifai's compute orchestration dynamically allocates resources, and local runners enable on-device inference without costly cloud usage.

Q6: How do I ensure my model stays accurate over time?

Implement continuous monitoring for performance metrics and data drift. Use automated retraining pipelines and schedule regular audits for fairness and bias. MLOps tools make these processes manageable.

Q7: Can AI models be creative?

Yes. Generative AI creates text, images, video, and even 3D environments. Combining retrieval-augmented generation with specialized AI agents results in highly creative and contextually aware systems.

Q8: How do I integrate Clarifai into my AI workflow?

Clarifai provides APIs and SDKs for model training, inference, workflow orchestration, data annotation, and edge deployment. You can fine-tune Clarifai's pretrained models or bring your own. The platform handles compute orchestration and allows you to run models on local runners for low-latency, secure inference.

Q9: What trends should I watch in the near future?

Keep an eye on multimodal AI, federated learning, autonomous agents, green AI, quantum and neuromorphic hardware, and the growing open-source ecosystem. These trends will shape how models are built, deployed, and managed.
