Over the past couple of years, prompt engineering has been the secret handshake of the AI world. The right phrasing could make a model sound poetic, funny, or insightful; the wrong one turned it flat and robotic. But a new Stanford-led paper argues that most of this “craft” has been compensating for something deeper: a hidden bias in how we trained these systems.

Their claim is simple: the models were never boring. They were trained to behave that way.

And the proposed solution, called Verbalized Sampling, might not just change how we prompt models; it could rewrite how we think about alignment and creativity in AI.

The Core Problem: Alignment Made AI Predictable

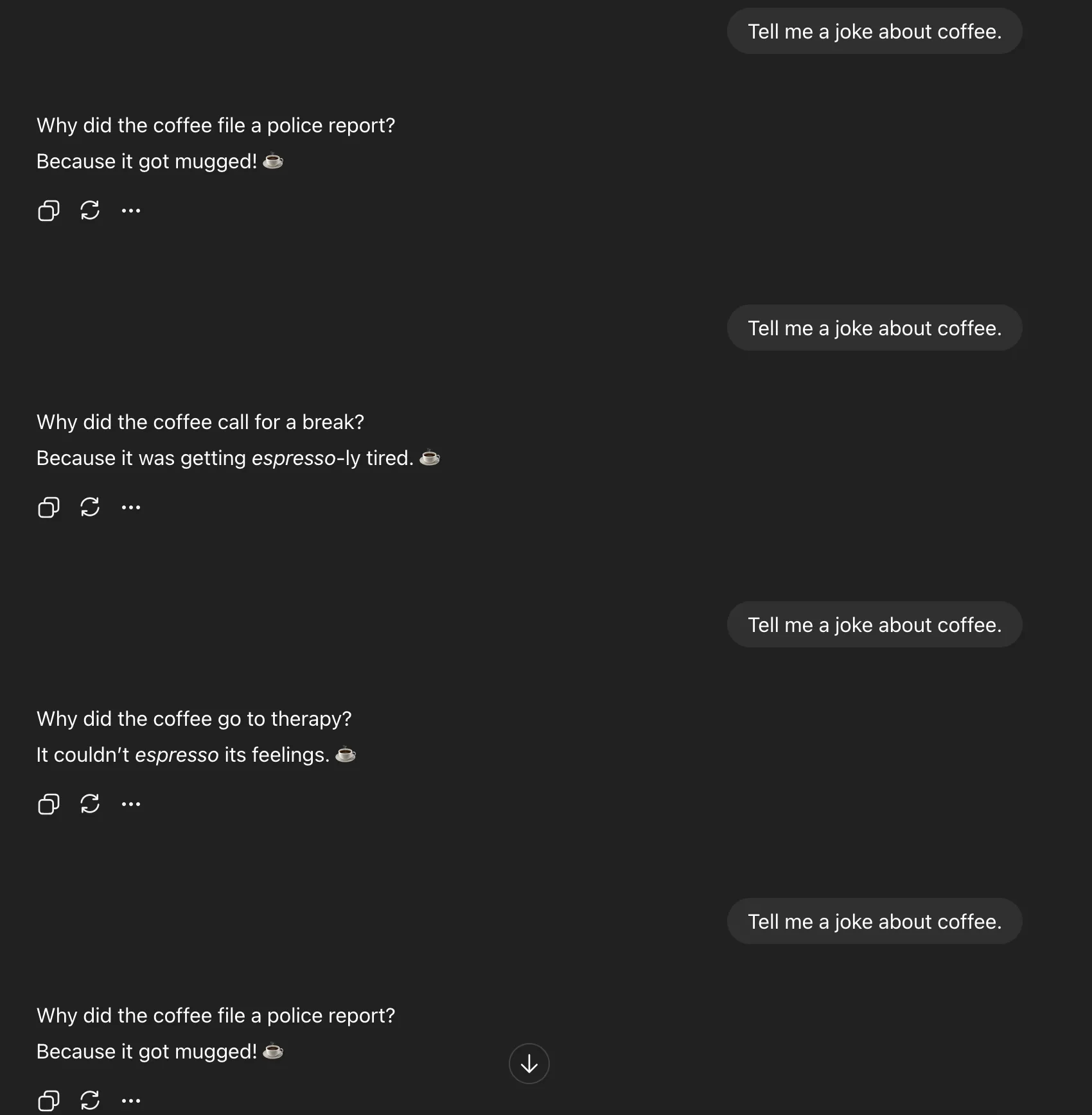

To understand the breakthrough, start with a simple experiment. Ask an AI model for a joke about coffee, and do it five times. You’ll almost always get the same response.

This isn’t laziness; it’s mode collapse, a narrowing of the model’s output distribution after alignment training. Instead of exploring all the valid responses it could produce, the model gravitates toward the safest, most typical one.

The Stanford team traced this to typicality bias in the human feedback data used during reinforcement learning. When annotators judge model responses, they consistently prefer text that sounds familiar. Over time, reward models trained on that preference learn to reward normality instead of novelty.

Mathematically, this bias adds a “typicality weight” (α) to the reward function, amplifying whatever looks most statistically typical. It’s a slow squeeze on creativity, and the reason most aligned models sound alike.

The Twist: The Creativity Was Never Lost

Here’s the kicker: the diversity isn’t gone. It’s buried.

When you ask for a single response, you’re forcing the model to pick the most probable completion. But if you ask it to verbalize multiple answers along with their probabilities, it suddenly opens up its internal distribution, the range of ideas it actually “knows.”

That’s Verbalized Sampling (VS) in action.

Instead of:

Tell me a joke about coffee

You ask:

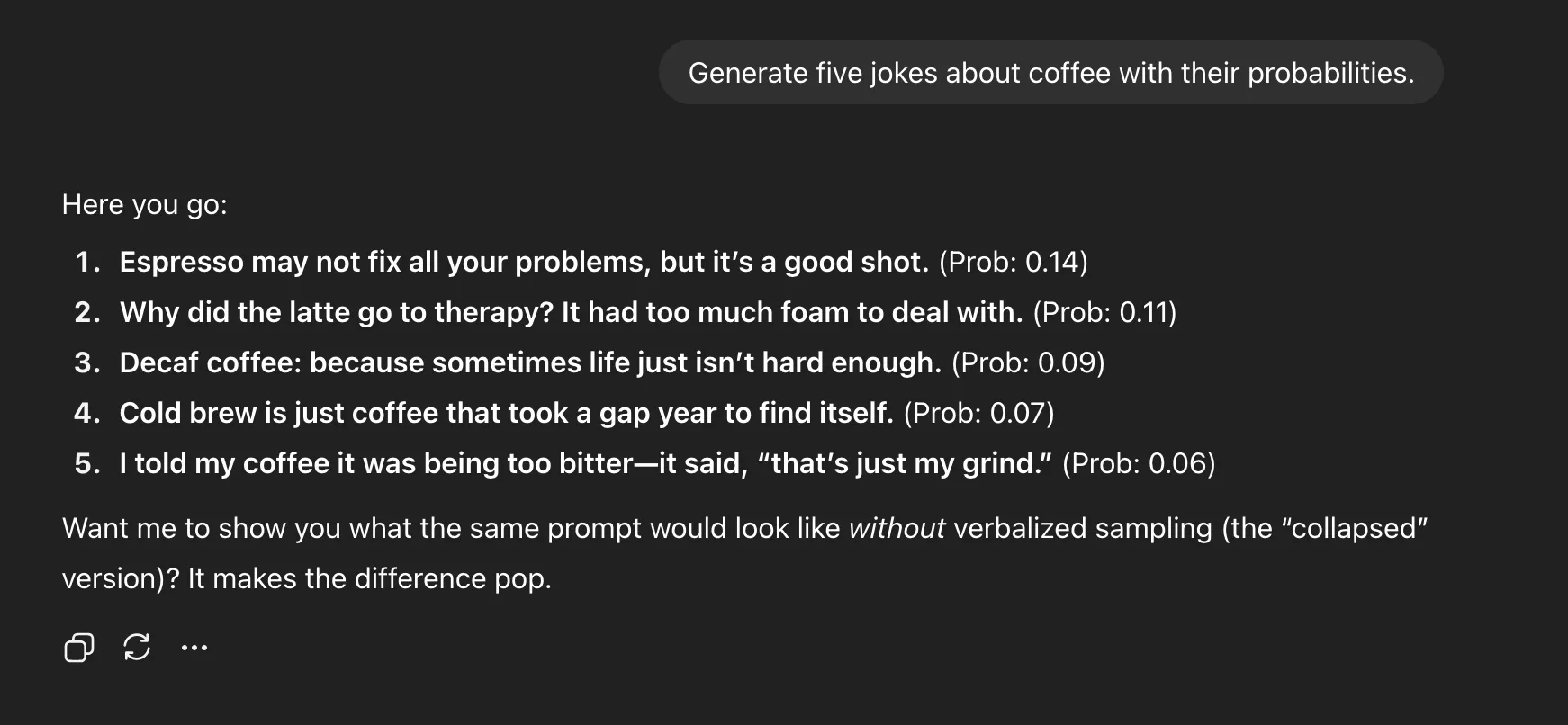

Generate 5 jokes about coffee with their probabilities

This small change unlocks the diversity that alignment training had compressed. You’re not retraining the model, changing temperature, or hacking sampling parameters. You’re just prompting differently, asking the model to show its uncertainty rather than hide it.
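As a rough illustration, the two prompting styles differ only in how the request is wrapped. The sketch below assumes nothing about any particular model API: the helper names `build_vs_prompt` and `parse_vs_response`, and the `text | probability` line format the prompt asks for, are hypothetical choices made for this example and are not taken from the paper.

```python
import re


def build_vs_prompt(task: str, k: int = 5) -> str:
    """Wrap a plain task in a Verbalized Sampling style instruction.

    The wording and the 'text | probability' output format are
    assumptions made for this sketch.
    """
    return (
        f"Generate {k} responses to the task below, each with its probability.\n"
        "Write one response per line in the form: <text> | <probability>\n\n"
        f"Task: {task}"
    )


# Matches lines such as "Some joke text | 0.35".
_LINE = re.compile(r"^(?P<text>.+?)\s*\|\s*(?P<prob>\d*\.?\d+)\s*$")


def parse_vs_response(reply: str) -> list[tuple[str, float]]:
    """Extract (text, probability) pairs, skipping lines that do not match."""
    pairs = []
    for line in reply.splitlines():
        match = _LINE.match(line.strip())
        if match:
            pairs.append((match.group("text"), float(match.group("prob"))))
    return pairs


prompt = build_vs_prompt("Tell me a joke about coffee")

# A hand-written stand-in for a model reply, used only to exercise the parser.
fake_reply = (
    "First placeholder joke | 0.4\n"
    "Second placeholder joke | 0.25\n"
    "not a candidate line"
)
candidates = parse_vs_response(fake_reply)
```

In a real setup, `prompt` would be sent to whichever model you use and its reply passed to `parse_vs_response`; lines that do not follow the requested format are simply ignored.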

The Coffee Prompt: Proof in Action

To demonstrate, the researchers ran the same coffee joke prompt using both traditional prompting and Verbalized Sampling.

Direct Prompting

Verbalized Sampling

Why It Works

During generation, a language model internally samples tokens from a probability distribution, but we usually only see the top choice. When you ask it to output multiple candidates with probabilities attached, you’re making it reason about its own uncertainty explicitly.

This “self-verbalization” exposes the model’s underlying diversity. Instead of collapsing to a single high-probability mode, it shows you many plausible ones.

In practice, that means “Tell me a joke” yields one mugging pun, while “Generate 5 jokes with probabilities” produces espresso puns, therapy jokes, cold brew lines, and more. It’s not just variety; it’s interpretability. You can see what the model thinks might work.

The Data and the Gains

Across multiple benchmarks (creative writing, dialogue simulation, and open-ended QA), the results were consistent:

- 1.6–2.1× increase in diversity for creative writing tasks

- 66.8% recovery of pre-alignment diversity

- No drop in factual accuracy or safety (refusal rates above 97%)

Larger models benefited even more. GPT-4-class systems showed double the diversity improvement compared to smaller ones, suggesting that large models have deep latent creativity waiting to be accessed.

The Bias Behind It All

To confirm that typicality bias really drives mode collapse, the researchers analyzed nearly seven thousand response pairs from the HelpSteer dataset. Human annotators preferred “typical” answers about 17–19% more often, even when both were equally correct.

They modeled this as:

r(x, y) = r_true(x, y) + α log π_ref(y | x)

That α term is the typicality bias weight. As α increases, the model’s distribution sharpens, pushing it toward the center. Over time, this makes responses safe, predictable, and repetitive.
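To see why a larger α sharpens the distribution, one can plug the biased reward into the standard closed-form solution of KL-regularized RLHF, π*(y | x) ∝ π_ref(y | x) · exp(r(x, y) / β). Substituting the reward above gives π*(y | x) ∝ π_ref(y | x)^(1 + α/β) · exp(r_true(x, y) / β), so the reference distribution is raised to a power greater than one. The sketch below is a toy illustration of that formula only. The KL-regularized objective with strength β is an assumption here (the article does not state the objective), and the four-response distribution, β = 1, and equal true rewards are made-up numbers.

```python
import math


def sharpened_policy(pi_ref, r_true, alpha, beta=1.0):
    """pi*(y) proportional to pi_ref(y)^(1 + alpha/beta) * exp(r_true(y)/beta)."""
    gamma = 1.0 + alpha / beta
    weights = [p ** gamma * math.exp(r / beta) for p, r in zip(pi_ref, r_true)]
    total = sum(weights)
    return [w / total for w in weights]


def entropy(dist):
    """Shannon entropy in nats; lower means a more collapsed distribution."""
    return -sum(p * math.log(p) for p in dist if p > 0)


# Toy reference distribution over four equally good responses (made-up numbers).
pi_ref = [0.4, 0.3, 0.2, 0.1]
r_true = [0.0, 0.0, 0.0, 0.0]

for alpha in (0.0, 0.5, 1.0, 2.0):
    pi_star = sharpened_policy(pi_ref, r_true, alpha)
    print(f"alpha={alpha}: top={pi_star[0]:.3f} entropy={entropy(pi_star):.3f}")
```

With α = 0 the policy matches the reference distribution; as α grows, the mass on the most typical response rises and the entropy falls, even though every response has the same true reward.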

What Does It Mean for Prompt Engineering?

So, is prompt engineering dead? Not quite. But it’s evolving.

Verbalized Sampling doesn’t remove the need for thoughtful prompting. It changes what skillful prompting looks like. The new game isn’t about tricking a model into creativity; it’s about designing meta-prompts that expose its full probability space.

You can even treat it as a “creativity dial.” Set a probability threshold to control how wild or safe you want the responses to be. Lower it for more surprise, raise it for stability.
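One way to picture the dial is as a filter over the verbalized candidates. The sketch below is an assumption about how such a threshold could be applied after the model replies; the article does not specify the mechanism, and the function name `pick_below_threshold` and the sample candidates are hypothetical.

```python
import random


def pick_below_threshold(candidates, threshold, seed=None):
    """Keep candidates whose stated probability is at most `threshold`,
    then sample one in proportion to the remaining probabilities.

    Returns None when nothing passes the filter.
    """
    kept = [(text, p) for text, p in candidates if 0 < p <= threshold]
    if not kept:
        return None
    rng = random.Random(seed)
    texts = [text for text, _ in kept]
    weights = [p for _, p in kept]
    # random.choices accepts unnormalized relative weights.
    return rng.choices(texts, weights=weights, k=1)[0]


# Placeholder candidates with made-up verbalized probabilities.
candidates = [
    ("typical answer", 0.55),
    ("less common answer", 0.30),
    ("unusual answer", 0.10),
    ("rare answer", 0.05),
]

safe_pick = pick_below_threshold(candidates, threshold=1.0, seed=0)   # anything allowed
wild_pick = pick_below_threshold(candidates, threshold=0.10, seed=0)  # tail only
```

Lowering `threshold` restricts the pool to the low-probability tail, which is the "more surprise" end of the dial; raising it lets the typical answers back in.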

The Real Implications

The biggest shift here isn’t about jokes or stories. It’s about reframing alignment itself.

For years, we’ve accepted that alignment makes models safer but blander. This research suggests otherwise: alignment made them too polite, not broken. By prompting differently, we can recover creativity without touching the model weights.

That has consequences far beyond creative writing, from more realistic social simulations to richer synthetic data for model training. It hints at a new kind of AI system: one that can introspect on its own uncertainty and offer multiple plausible answers instead of pretending there’s only one.

The Caveats

Not everyone’s buying the hype. Critics point out that some models may hallucinate probability scores instead of reflecting true likelihoods. Others argue this doesn’t fix the underlying human bias; it merely sidesteps it.
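A cheap partial safeguard against made-up scores is to check that the verbalized numbers are at least self-consistent before using them. The sketch below is a hypothetical helper, not something described in the paper, and it only tests internal consistency: it cannot tell whether the numbers reflect the model's true likelihoods.

```python
def check_and_normalize(probs, tolerance=0.05):
    """Return (is_consistent, normalized) for a list of verbalized probabilities.

    The values count as consistent when each lies in [0, 1] and together they
    sum to a positive number no larger than 1 + tolerance. A sum below 1 is
    accepted because the candidates need not cover every possible response.
    `normalized` is empty when the check fails.
    """
    in_range = all(0.0 <= p <= 1.0 for p in probs)
    total = sum(probs)
    is_consistent = in_range and 0.0 < total <= 1.0 + tolerance
    normalized = [p / total for p in probs] if is_consistent else []
    return is_consistent, normalized


# Made-up examples: the first set is plausible, the second sums to 2.4.
ok, weights = check_and_normalize([0.4, 0.3, 0.2])
bad, no_weights = check_and_normalize([0.9, 0.8, 0.7])
```

The `tolerance` default is arbitrary; it only allows for small rounding in the model's stated numbers.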

And while the results look strong in controlled tests, real-world deployment involves cost, latency, and interpretability trade-offs. As one researcher dryly put it on X: “If it worked perfectly, OpenAI would already be doing it.”

Still, it’s hard not to admire the elegance. No retraining, no new data, just one revised instruction:

Generate 5 responses with their probabilities.

Conclusion

The lesson from Stanford’s work is bigger than any single technique. The models we’ve built were never unimaginative; they were over-aligned, trained to suppress the diversity that made them powerful.

Verbalized Sampling doesn’t rewrite them; it just hands them the keys back.

If pretraining built a vast internal library, alignment locked most of its doors. VS is how we start asking to see all five versions of the truth.

Prompt engineering isn’t dead. It’s finally becoming a science.

Frequently Asked Questions

A. Verbalized Sampling is a prompting method that asks AI models to generate multiple responses with their probabilities, revealing their internal diversity without retraining or parameter tweaks.

A. Because of typicality bias in human feedback data, models learn to favor safe, familiar responses, leading to mode collapse and loss of creative variety.

A. No. It redefines it. The new skill lies in crafting meta-prompts that expose distributions and control creativity, rather than fine-tuning single-shot phrasing.
