At Databricks, we use reinforcement learning (RL) to develop reasoning models for problems that our customers face as well as for our products, such as the Databricks Assistant and AI/BI Genie. These tasks include generating code, analyzing data, integrating organizational knowledge, domain-specific analysis, and information extraction (IE) from documents. Tasks like coding or information extraction often have verifiable rewards: correctness can be checked directly (e.g., passing tests, matching labels). This allows for reinforcement learning without a learned reward model, known as RLVR (reinforcement learning with verifiable rewards). In other domains, a custom reward model may be required, which Databricks also supports. In this post, we focus on the RLVR setting.
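To make "verifiable reward" concrete, here is a minimal sketch for an information-extraction task. It assumes the model emits a JSON object and the gold labels are available as a dictionary. The function name `extraction_reward` and the binary 0/1 scoring are illustrative assumptions, not the reward used in the Databricks stack.

```python
import json


def extraction_reward(model_output: str, gold: dict) -> float:
    """Return 1.0 if the model's JSON output equals the gold labels, else 0.0.

    Hypothetical reward for illustration: no learned reward model is involved,
    only a direct check against known labels.
    """
    try:
        predicted = json.loads(model_output)
    except json.JSONDecodeError:
        return 0.0  # unparseable output earns no reward
    return 1.0 if predicted == gold else 0.0


if __name__ == "__main__":
    gold_labels = {"invoice_id": "A-17", "total": 42.5}
    print(extraction_reward('{"invoice_id": "A-17", "total": 42.5}', gold_labels))
    print(extraction_reward("not valid json", gold_labels))
```

A real task would likely want partial credit per field, but the key property is the same: the reward is computed by a check, not predicted by a model.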

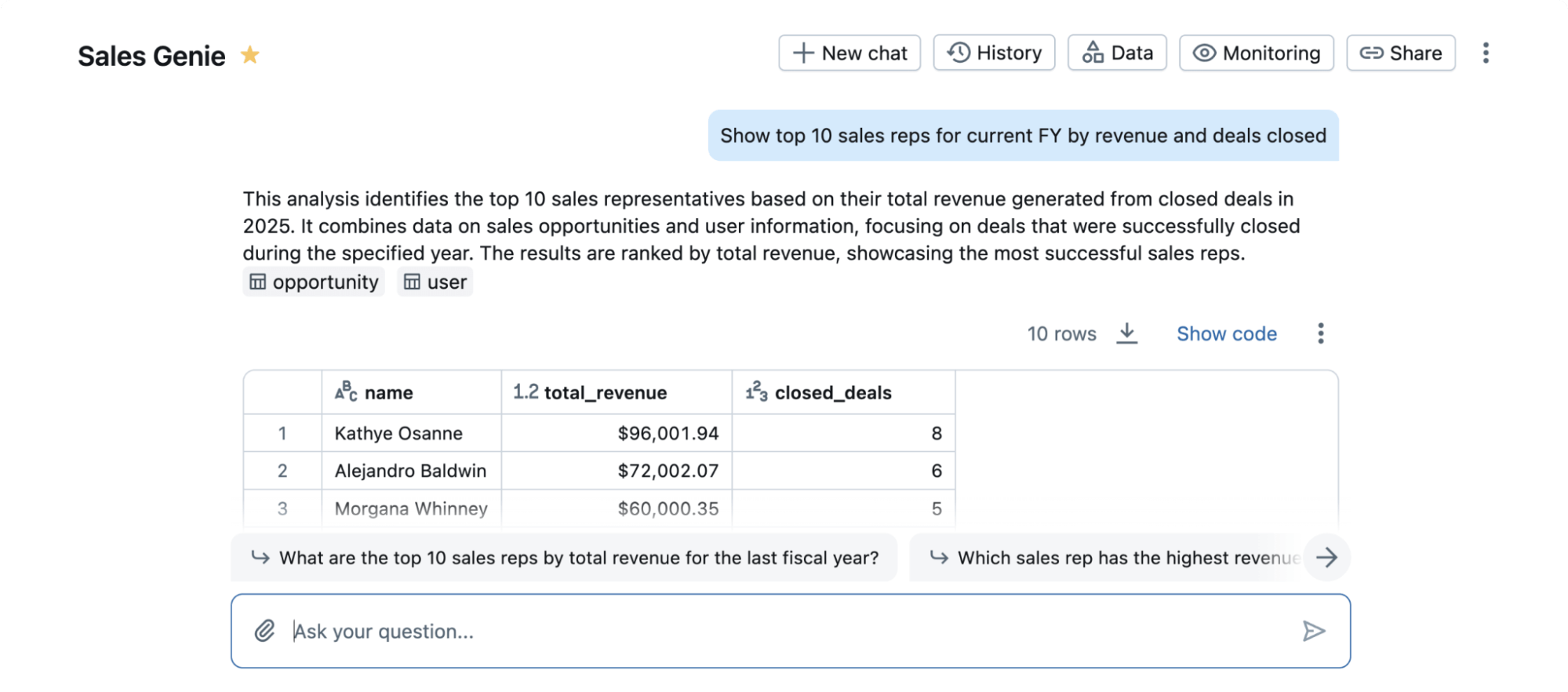

As an example of the power of RLVR, we applied our training stack to a popular academic benchmark in data science called BIRD. This benchmark studies the task of transforming a natural language query into SQL code that runs on a database. This is an important problem for Databricks users, enabling non-SQL experts to talk to their data. It is also a challenging task where even the best proprietary LLMs don’t work well out of the box. While BIRD captures neither the full real-world complexity of this task nor the full breadth of real products like Databricks AI/BI Genie (Figure 1), its popularity allows us to measure the efficacy of RLVR for data science on a well-understood benchmark.
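For text-to-SQL, a verifiable reward can be computed by running the generated query and comparing its result with that of a reference query. The sketch below uses Python's built-in `sqlite3` module. The function name `execution_reward`, the toy `orders` table, and the order-insensitive set comparison are assumptions made for illustration; this is not the exact reward or metric used in our training stack or by the BIRD leaderboard.

```python
import sqlite3


def execution_reward(conn: sqlite3.Connection, predicted_sql: str, gold_sql: str) -> float:
    """Return 1.0 if both queries yield the same set of rows, else 0.0."""
    try:
        predicted_rows = conn.execute(predicted_sql).fetchall()
    except (sqlite3.Error, sqlite3.Warning):
        return 0.0  # invalid or failing SQL earns no reward
    gold_rows = conn.execute(gold_sql).fetchall()
    # Assumption: row order and duplicates are ignored when comparing results.
    return 1.0 if set(predicted_rows) == set(gold_rows) else 0.0


def make_demo_connection() -> sqlite3.Connection:
    """Build a tiny in-memory database (hypothetical schema) for the example."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 25.0)])
    return conn


if __name__ == "__main__":
    demo = make_demo_connection()
    gold = "SELECT id FROM orders WHERE amount >= 25"
    print(execution_reward(demo, "SELECT id FROM orders WHERE amount > 20", gold))
    print(execution_reward(demo, "SELEC id FROM orders", gold))
```

Comparing execution results rather than query strings means that two differently written but equivalent queries receive the same reward.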

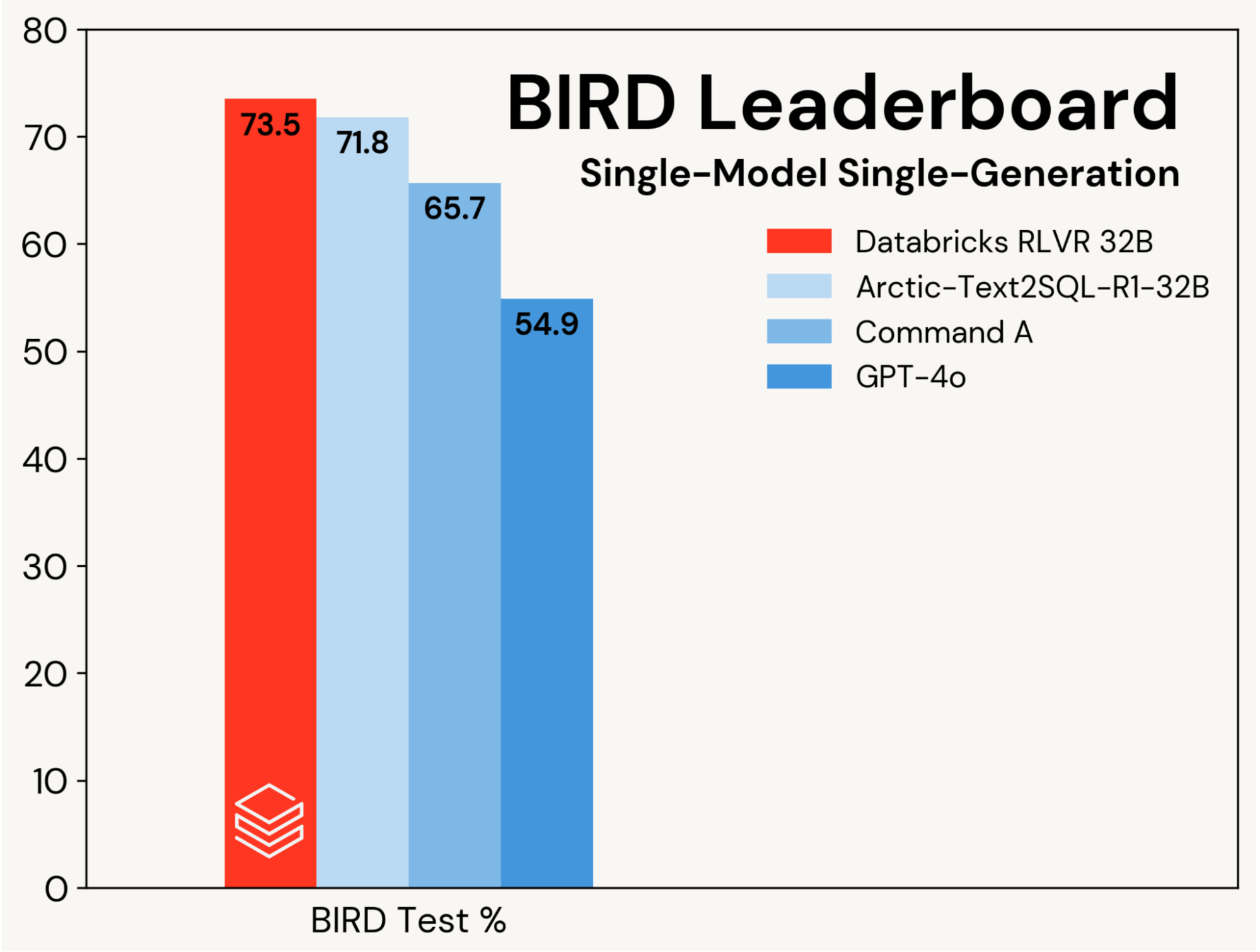

We focus on improving a base SQL coding model using RLVR, isolating these gains from improvements driven by agentic designs. Progress is measured on the single-model, single-generation track of the BIRD leaderboard (i.e., no self-consistency), which evaluates on a private test set.
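The difference between single-generation evaluation and self-consistency can be sketched as follows. Here `generate` is a hypothetical callable standing in for a model call, and voting over raw query strings is a simplification (practical self-consistency setups for SQL often vote over execution results instead).

```python
from collections import Counter
from typing import Callable


def single_generation(generate: Callable[[str], str], question: str) -> str:
    """Single-generation setting: one sample, used as-is."""
    return generate(question)


def self_consistency(generate: Callable[[str], str], question: str, num_samples: int = 5) -> str:
    """Self-consistency setting: draw several samples and return the most common one."""
    samples = [generate(question) for _ in range(num_samples)]
    return Counter(samples).most_common(1)[0][0]
```

Self-consistency spends `num_samples` model calls per question, so restricting to a single generation isolates the quality of the model itself.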

We set a new state-of-the-art test accuracy of 73.5% on this benchmark. We did so using our standard RLVR stack and training only on the BIRD training set. The previous best score on this track was 71.8%[1], achieved by augmenting the BIRD training set with additional data and using a proprietary LLM (GPT-4o). Our score is substantially better than both the original base model and proprietary LLMs (see Figure 2). This result showcases the simplicity and generality of RLVR: we reached this score with off-the-shelf data and the standard RL components we’re rolling out in Agent Bricks, and we did so on our first submission to BIRD. RLVR is a strong baseline that AI developers should consider whenever enough training data is available.

We built our submission based on the BIRD dev set. We found that Qwen 2.5 32B Coder Instruct was the best starting point. We fine-tuned this model using both Databricks TAO, an offline RL method, and our RLVR stack. This approach, alongside careful prompt and model selection, was sufficient to get us to the top of the BIRD benchmark. This result is a public demonstration of the same techniques we’re using to improve popular Databricks products like AI/BI Genie and Assistant and to help our customers build agents using Agent Bricks.

Our results highlight the power of RLVR and the efficacy of our training stack. Databricks customers have also reported great results using our stack in their reasoning domains. We think this recipe is powerful, composable, and widely applicable to a range of tasks. If you’d like to preview RLVR on Databricks, contact us here.

[1] See Table 1 in https://arxiv.org/pdf/2505.20315

Authors: Alnur Ali, Ashutosh Baheti, Jonathan Chang, Ta-Chung Chi, Brandon Cui, Andrew Drozdov, Jonathan Frankle, Abhay Gupta, Pallavi Koppol, Sean Kulinski, Jonathan Li, Dipendra Kumar Misra, Jose Javier Gonzalez Ortiz, Krista Opsahl-Ong