ServiceNow AI Research Lab has released Apriel-1.5-15B-Thinker, a 15-billion-parameter open-weights multimodal reasoning model trained with a data-centric mid-training recipe (continual pretraining followed by supervised fine-tuning), without reinforcement learning or preference optimization. The model attains an Artificial Analysis Intelligence Index score of 52 with 8x cost savings compared to SOTA. The checkpoint ships under an MIT license on Hugging Face.

So, what's new in it for me?

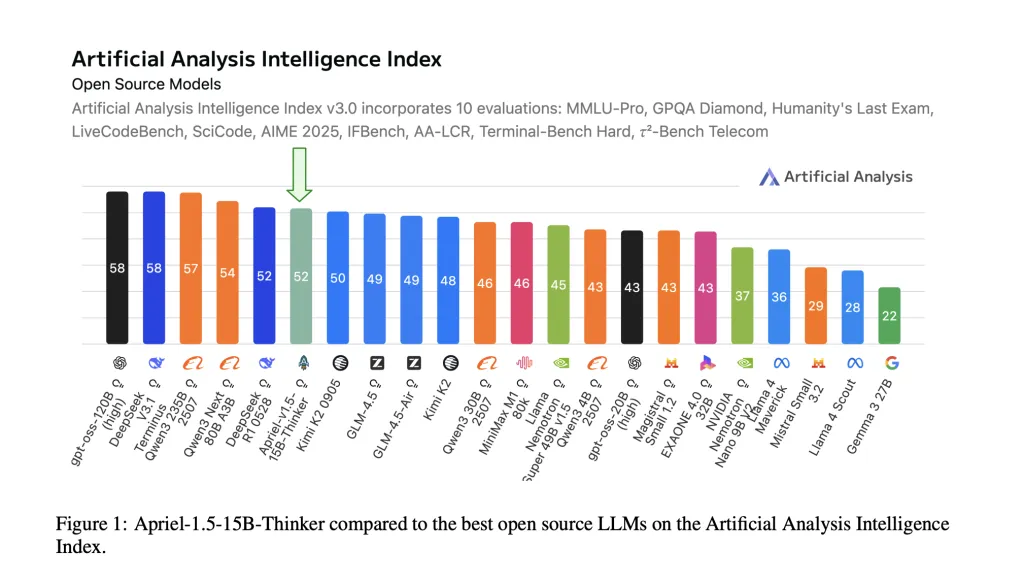

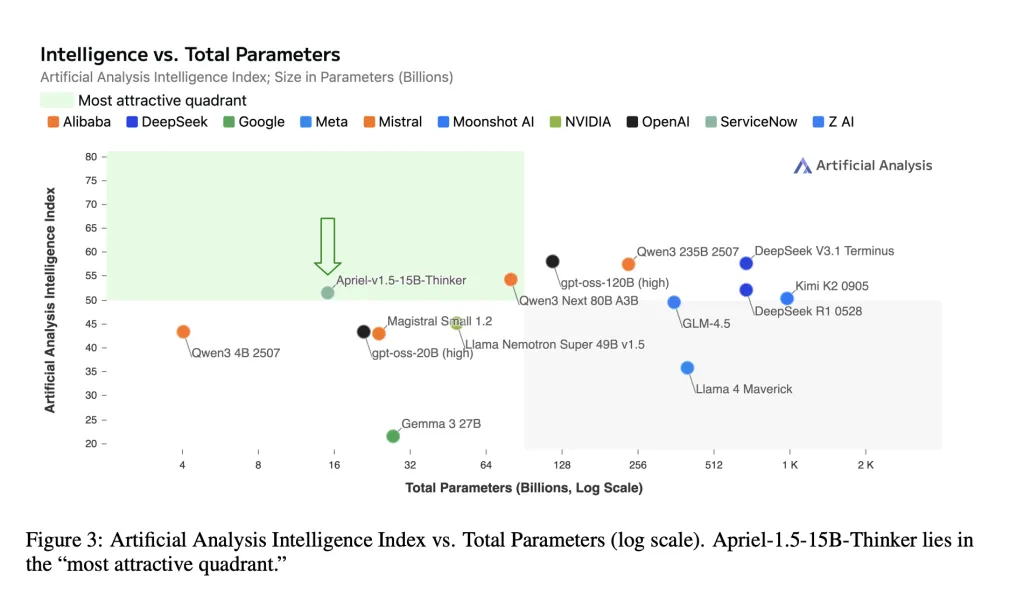

- Frontier-level composite score at small scale. The model reports an Artificial Analysis Intelligence Index (AAI) of 52, matching DeepSeek-R1-0528 on that combined metric while being dramatically smaller. AAI aggregates 10 third-party evaluations (MMLU-Pro, GPQA Diamond, Humanity's Last Exam, LiveCodeBench, SciCode, AIME 2025, IFBench, AA-LCR, Terminal-Bench Hard, τ²-Bench Telecom).

- Single-GPU deployability. The model card states the 15B checkpoint "fits on a single GPU," targeting on-premises and air-gapped deployments with fixed memory and latency budgets.

- Open weights and reproducible pipeline. Weights, training recipe, and evaluation protocol are public for independent verification.
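To put the single-GPU claim in perspective, here is a back-of-the-envelope sketch of how much memory the weights alone would need at common precisions. The bytes-per-parameter values are standard for each dtype, but the choice of dtypes and the helper name `weight_memory_gb` are illustrative assumptions, not something taken from the model card.

```python
# Rough weight-memory estimate for a 15B-parameter checkpoint.
# Assumption: these dtypes are illustrative; the article does not say
# which precision the "fits on a single GPU" statement refers to.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the memory needed for the weights alone, in decimal GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gb(15e9, dtype):.1f} GB")
```

This counts weights only. The KV cache, activations, and the vision encoder's working memory come on top, so the real budget depends on context length and batch size.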

Okay, I got it, but what is its training mechanism?

Base and upscaling. Apriel-1.5-15B-Thinker starts from Mistral's Pixtral-12B-Base-2409 multimodal decoder-vision stack. The research team applies depth upscaling, increasing decoder layers from 40→48, followed by projection-network realignment to align the vision encoder with the enlarged decoder. This avoids pretraining from scratch while preserving single-GPU deployability.
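As a rough illustration of what depth upscaling from 40 to 48 layers can look like, the sketch below grows a layer stack by deep-copying a contiguous block of existing layers. The article does not say which layers are duplicated or how they are initialized, so the middle-block choice, the `depth_upscale` function, and the dict-based "layers" are all hypothetical stand-ins.

```python
import copy


def depth_upscale(layers, target_depth, insert_at=None):
    """Grow a layer stack by duplicating a contiguous block of layers.

    Assumption: copying a block from the middle of the stack is one common
    heuristic; the source does not describe the actual selection rule.
    """
    extra = target_depth - len(layers)
    if extra < 0 or extra > len(layers):
        raise ValueError("target_depth must be between len(layers) and 2 * len(layers)")
    if insert_at is None:
        insert_at = (len(layers) - extra) // 2  # start of the middle block
    if insert_at < 0 or insert_at + extra > len(layers):
        raise ValueError("block to duplicate falls outside the layer stack")
    block_end = insert_at + extra
    # Deep copies so the new layers can be trained independently of the originals.
    duplicated = [copy.deepcopy(layer) for layer in layers[insert_at:block_end]]
    return layers[:block_end] + duplicated + layers[block_end:]


if __name__ == "__main__":
    base = [{"id": i} for i in range(40)]  # stand-ins for decoder layers
    upscaled = depth_upscale(base, 48)
    print(len(upscaled))
```

In a real framework the list would hold decoder-layer modules rather than dicts. The copied layers start with the same weights as their sources, which is why further continual pretraining is needed after upscaling.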

CPT (Continual Pretraining). Two stages: (1) mixed text+image data to build foundational reasoning and document/diagram understanding; (2) targeted synthetic visual tasks (reconstruction, matching, detection, counting) to sharpen spatial and compositional reasoning. Sequence lengths extend to 32k and 16k tokens respectively, with selective loss placement on response tokens for instruction-formatted samples.
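Selective loss placement on response tokens is usually implemented by masking the prompt positions in the label sequence. The following is a minimal sketch under that assumption: the helper names are hypothetical, `-100` is the default `ignore_index` of PyTorch's `CrossEntropyLoss`, and the usual next-token shift is left out for brevity.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def build_labels(prompt_ids, response_ids):
    """Concatenate prompt and response, masking prompt positions in the labels."""
    input_ids = list(prompt_ids) + list(response_ids)
    labels = [IGNORE_INDEX] * len(prompt_ids) + list(response_ids)
    return input_ids, labels


def masked_mean_loss(per_token_losses, labels):
    """Average per-token losses over the positions that are not masked."""
    kept = [loss for loss, label in zip(per_token_losses, labels)
            if label != IGNORE_INDEX]
    return sum(kept) / len(kept) if kept else 0.0


if __name__ == "__main__":
    ids, labels = build_labels([1, 2, 3], [4, 5])
    print(labels)
    print(masked_mean_loss([9.0, 9.0, 9.0, 1.0, 3.0], labels))
```

With this masking, the prompt still conditions the model's predictions, but only the response tokens contribute to the gradient.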

SFT (Supervised Fine-Tuning). High-quality, reasoning-trace instruction data for math, coding, science, tool use, and instruction following; two additional SFT runs (stratified subset; longer-context) are weight-merged to form the final checkpoint. No RL (reinforcement learning) or RLAIF (reinforcement learning from AI feedback).
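Weight merging combines several checkpoints of the same architecture into one set of parameters. The article does not state which merge method is used, so the sketch below assumes the simplest option, a (weighted) element-wise average. The `merge_checkpoints` function and the toy list-based "state dicts" are hypothetical.

```python
def merge_checkpoints(state_dicts, weights=None):
    """Element-wise weighted average of checkpoints with identical keys.

    Assumption: uniform averaging by default; the source does not specify
    the merge method or coefficients.
    """
    if not state_dicts:
        raise ValueError("need at least one checkpoint")
    if weights is None:
        weights = [1.0 / len(state_dicts)] * len(state_dicts)
    if len(weights) != len(state_dicts):
        raise ValueError("one weight per checkpoint is required")
    keys = state_dicts[0].keys()
    if any(sd.keys() != keys for sd in state_dicts):
        raise ValueError("checkpoints must share the same parameter names")
    merged = {}
    for name in keys:
        size = len(state_dicts[0][name])
        merged[name] = [
            sum(w * sd[name][i] for w, sd in zip(weights, state_dicts))
            for i in range(size)
        ]
    return merged


if __name__ == "__main__":
    run_a = {"layer.weight": [1.0, 2.0]}  # toy stand-ins for SFT checkpoints
    run_b = {"layer.weight": [3.0, 4.0]}
    print(merge_checkpoints([run_a, run_b]))
```

In practice the values would be tensors rather than Python lists, and the same loop would run over every parameter in the model.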

Data note. ~25% of the depth-upscaling text mix derives from NVIDIA's Nemotron collection.

Oh wow! Tell me about its results, then?

Key text benchmarks (pass@1 / accuracy).

- AIME 2025 (American Invitational Mathematics Examination 2025): 87.5–88%

- GPQA Diamond (Graduate-Level Google-Proof Question Answering, Diamond split): ≈71%

- IFBench (Instruction-Following Benchmark): ~62

- τ²-Bench (Tau-squared Bench) Telecom: ~68

- LiveCodeBench (functional code correctness): ~72.8

Using VLMEvalKit for reproducibility, Apriel scores competitively across MMMU / MMMU-Pro (Massive Multi-discipline Multimodal Understanding), LogicVista, MathVision, MathVista, MathVerse, MMStar, CharXiv, AI2D, and BLINK, with stronger results on documents/diagrams and text-dominant math imagery.

Let's summarize everything

Apriel-1.5-15B-Thinker demonstrates that careful mid-training (continual pretraining + supervised fine-tuning, no reinforcement learning) can deliver a 52 on the Artificial Analysis Intelligence Index (AAI) while remaining deployable on a single GPU. Reported task-level scores (for example, AIME 2025 ≈88, GPQA Diamond ≈71, IFBench ≈62, τ²-Bench Telecom ≈68) align with the model card and place the 15-billion-parameter checkpoint in the most cost-efficient band of current open-weights reasoners. For enterprises, that combination of open weights, a reproducible recipe, and single-GPU latency makes Apriel a practical baseline to evaluate before considering larger closed systems.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.