Whether you’re coming from healthcare, aerospace, manufacturing, government, or another industry, the term big data is no foreign concept; however, integrating that data into your existing MATLAB or Simulink models at scale may be a challenge you’re facing today. This is why the Databricks and MathWorks partnership was built in 2020, and it continues to help customers derive faster, more meaningful insights from their data at scale. Engineers can keep developing their algorithms and models in MathWorks tools without having to learn new code, while taking advantage of the Databricks Data Intelligence Platform to run those models at scale, perform data analysis, and iteratively train and test them.

For example, in the manufacturing sector, predictive maintenance is a critical application. Engineers use sophisticated MATLAB algorithms to analyze machine data, enabling them to forecast potential equipment failures with remarkable accuracy. These systems can predict impending battery failures up to two weeks in advance, allowing for proactive maintenance and minimizing costly downtime in vehicle and machinery operations.

In this blog, we cover a pre-flight checklist, several popular integration options, “Getting started” instructions, and a reference architecture with Databricks best practices for implementing your use case.

Pre-Flight Checklist

Here is a set of questions to answer in order to get started with the integration process. Provide the answers to your technical support contacts at MathWorks and Databricks so that they can tailor the integration process to meet your needs.

- Are you using Unity Catalog?

- Are you using MATLAB Compiler SDK? Do you have a MATLAB Compiler SDK license?

- Are you on macOS or Windows?

- What kinds of models or algorithms are you using? Are the models built using MATLAB, Simulink, or both?

- Which MATLAB/Simulink toolboxes are these models using?

- For Simulink models, are there any state variables/parameters stored as *.mat files that need to be loaded? Are models writing intermediate states/results into *.mat files?

- What MATLAB Runtime version are you on?

- What Databricks Runtime versions do you have access to? The minimum required is X
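If you want to answer the operating system and runtime questions programmatically, the sketch below parses a Databricks runtime key such as `14.3.x-scala2.12` (14.3 LTS is the runtime used for testing later in this post) and compares it with a minimum you supply. It assumes the key starts with `<major>.<minor>`; the required minimum is left as a parameter because the checklist leaves it to your support contacts, and all helper names are hypothetical.

```python
import platform
import re


def parse_dbr_version(spark_version: str) -> tuple:
    """Return (major, minor) from a runtime key such as "14.3.x-scala2.12".

    Assumes the key starts with "<major>.<minor>", as cluster runtime keys do.
    """
    match = re.match(r"^(\d+)\.(\d+)", spark_version)
    if match is None:
        raise ValueError(f"Unrecognized runtime version: {spark_version!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(spark_version: str, minimum: tuple) -> bool:
    """True if the runtime is at or above the required (major, minor)."""
    # Tuples compare element by element, so (15, 4) >= (14, 3) is True.
    return parse_dbr_version(spark_version) >= minimum


def local_os() -> str:
    """Answer the 'macOS or Windows?' question for the current machine."""
    system = platform.system()  # "Darwin" on macOS, "Windows" on Windows
    return {"Darwin": "macOS"}.get(system, system)
```

For example, `meets_minimum("14.3.x-scala2.12", (14, 3))` returns `True`, while a 13.3 runtime would fail the same check.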

Deploying MATLAB models on Databricks

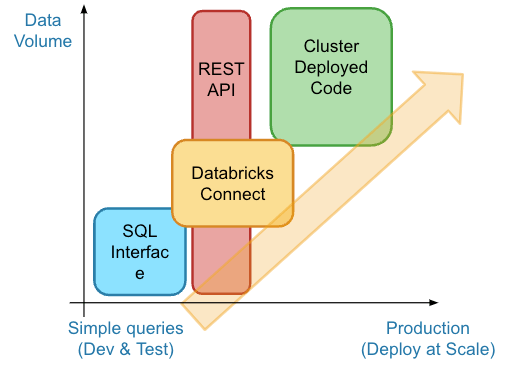

There are many different ways to integrate MATLAB models with Databricks; in this blog, we discuss a few popular integration architectures that customers have implemented. To get started, you need to install the MATLAB Interface for Databricks to explore the integration methods: the SQL Interface, REST API, and Databricks Connect for testing and development, and the Compiler option for production use cases.

Integration Methods Overview

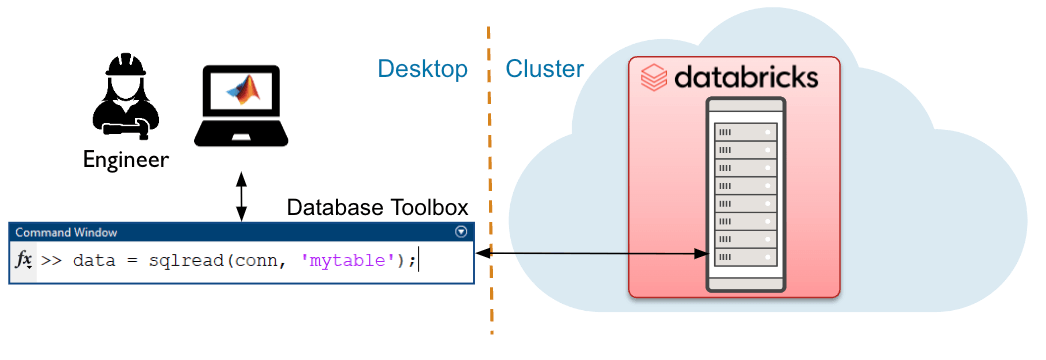

SQL Interface to Databricks

The SQL interface is best suited for modest data volumes and provides quick and easy access with database semantics. Users can access data in the Databricks platform directly from MATLAB using Database Toolbox.

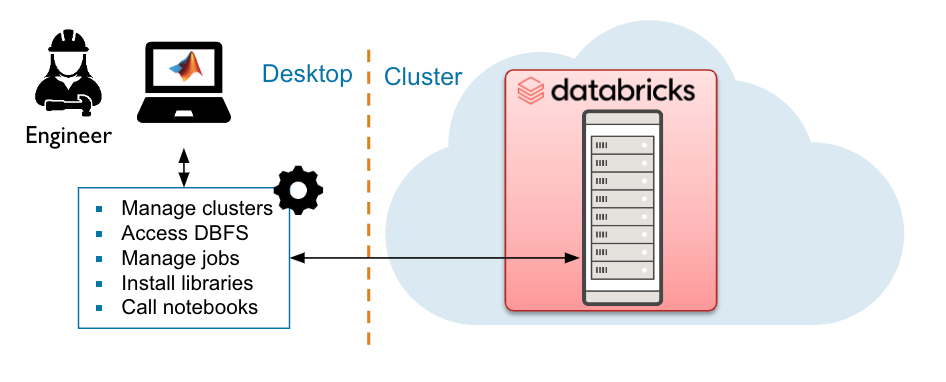

REST API to Databricks

The REST API lets users control jobs and clusters within the Databricks environment, which supports tasks such as managing Databricks resources, automation, and data engineering workflows.

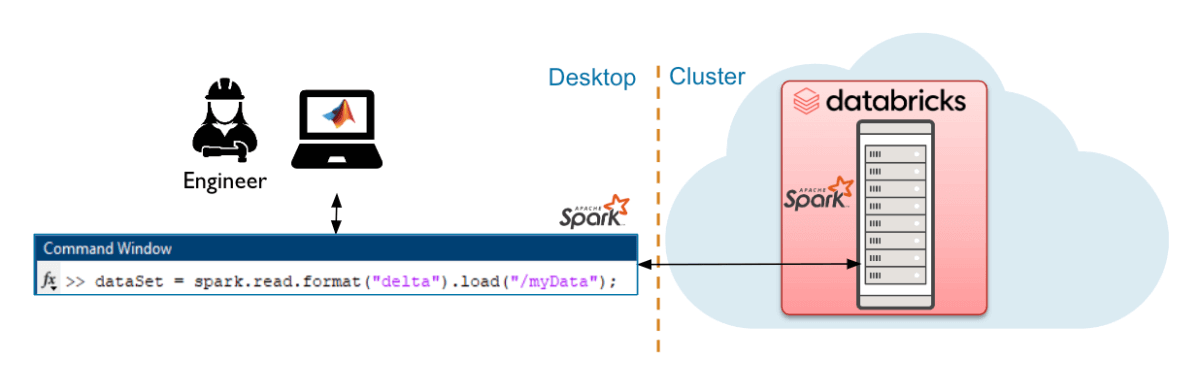
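As an illustration of the REST API option, here is a minimal Python sketch that builds a request to trigger an existing job through the Jobs API 2.1 `run-now` endpoint. The workspace URL, token, and job ID are placeholders, and `build_run_now_request` is a hypothetical helper; the request is only constructed here, not sent.

```python
import json
import urllib.request


def build_run_now_request(host: str, token: str, job_id: int) -> urllib.request.Request:
    """Build (but do not send) a Jobs API 2.1 run-now request.

    host:  workspace URL, e.g. "https://<your-workspace>.cloud.databricks.com"
    token: a personal access token; keep it out of source control
    """
    url = f"{host.rstrip('/')}/api/2.1/jobs/run-now"
    body = json.dumps({"job_id": job_id}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

Sending the request with `urllib.request.urlopen(req)` returns a JSON body that includes the `run_id` of the triggered run, which you can then poll for status.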

Databricks Connect Interface to Databricks

The Databricks Connect (DB Connect) interface is best suited for modest to large data volumes and uses a local Spark session to run queries on the Databricks cluster.

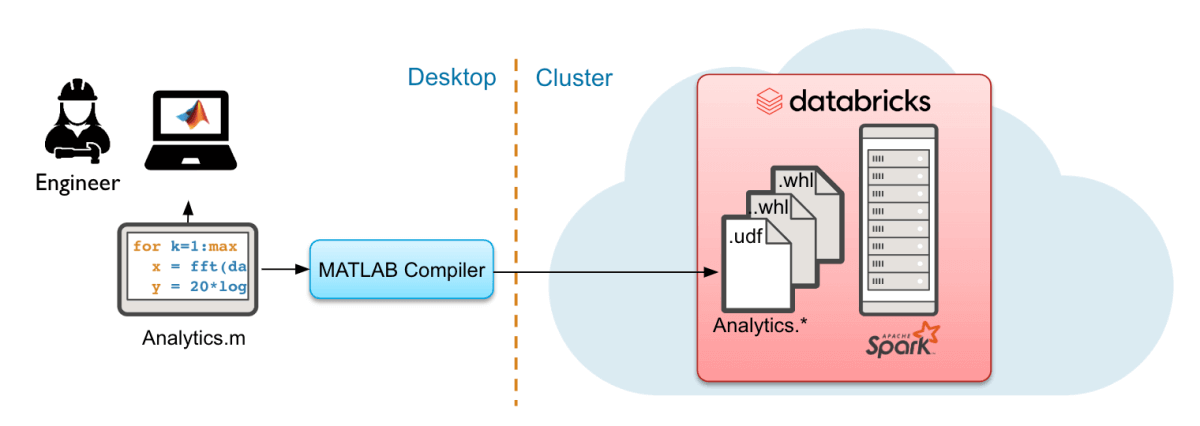

Deploy MATLAB to run at scale in Databricks using MATLAB Compiler SDK

MATLAB Compiler SDK brings MATLAB compute to the data and scales through Spark to handle large data volumes in production. Deployed algorithms can run on demand, on a schedule, or integrated into data processing pipelines.
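To make the scaling idea concrete, the sketch below shows one common way to call a MATLAB Compiler SDK Python package from Spark: start the MATLAB Runtime once per partition with the package’s `initialize()`, call the exported MATLAB function for each row, and release the runtime with `terminate()`. The package and function names are hypothetical, and the sketch assumes the compiled package and a matching MATLAB Runtime are installed on the cluster and that the function takes scalar inputs.

```python
import importlib
from typing import Callable, Iterable, Iterator, Sequence


def make_partition_scorer(
    package_name: str, function_name: str
) -> Callable[[Iterable[Sequence[float]]], Iterator[float]]:
    """Return a per-partition scoring function for Spark's mapPartitions.

    package_name and function_name are hypothetical placeholders for the
    package built by MATLAB Compiler SDK and the MATLAB function it exports.
    """

    def score_partition(rows: Iterable[Sequence[float]]) -> Iterator[float]:
        # Import on the executor, where the compiled package is installed.
        pkg = importlib.import_module(package_name)
        # Start the MATLAB Runtime once per partition, not once per row:
        # initialization is far more expensive than a single function call.
        handle = pkg.initialize()
        try:
            fn = getattr(handle, function_name)
            for row in rows:
                # Python floats are passed to MATLAB as double scalars.
                yield fn(*[float(x) for x in row])
        finally:
            handle.terminate()

    return score_partition
```

On a cluster you could apply it with `input_df.rdd.mapPartitions(make_partition_scorer("my_matlab_pkg", "predictFailure"))`; where RDD APIs are unavailable, the same initialize-once pattern can live inside a `mapInPandas` function.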

For more detailed instructions on how to get started with each of these deployment methods, please reach out to the MathWorks and Databricks teams.

Getting Began

Installation and setup

- Navigate to the MATLAB Interface for Databricks page, scroll down to the bottom, and click the “Download the MATLAB Interface for Databricks” button. The interface is downloaded as a zip file.

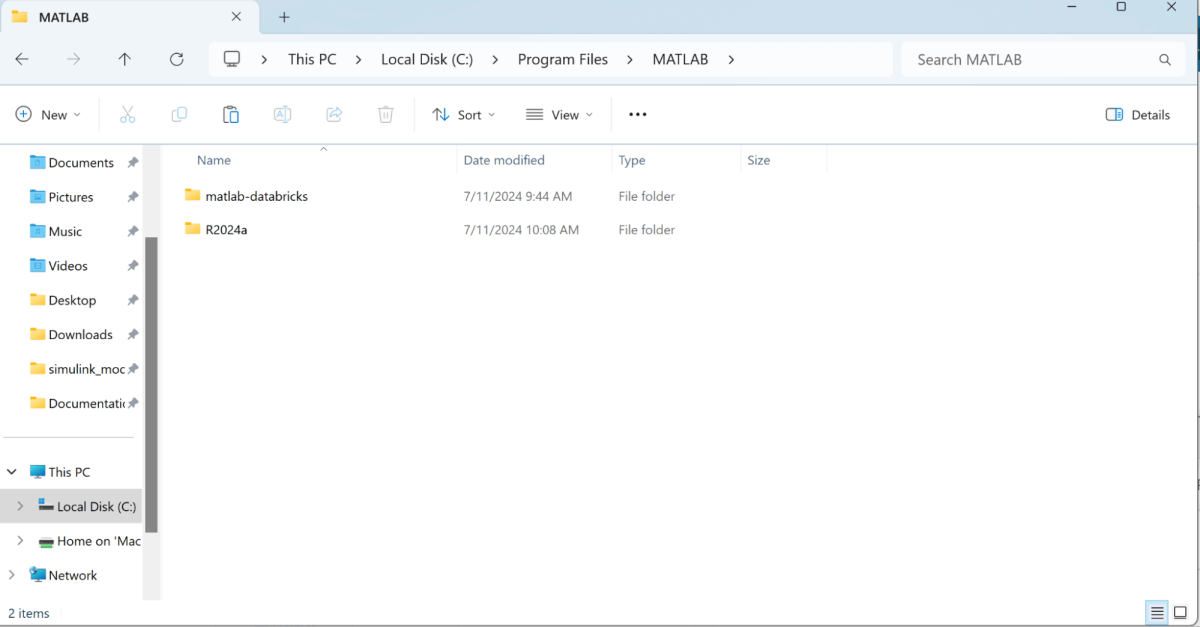

- Extract the compressed folder “matlab-databricks-v4-0-7-build-…” inside Program Files\MATLAB. Once extracted, you will see the “matlab-databricks” folder. Make sure the folders are inside this folder and follow this hierarchy:

- Launch the MATLAB application from your local desktop through the Search bar, and make sure to run it as an administrator.

- Go to the MATLAB command window and type `ver` to verify that you have all the necessary dependencies:

- Next, you are ready to install the runtime on a Databricks cluster:

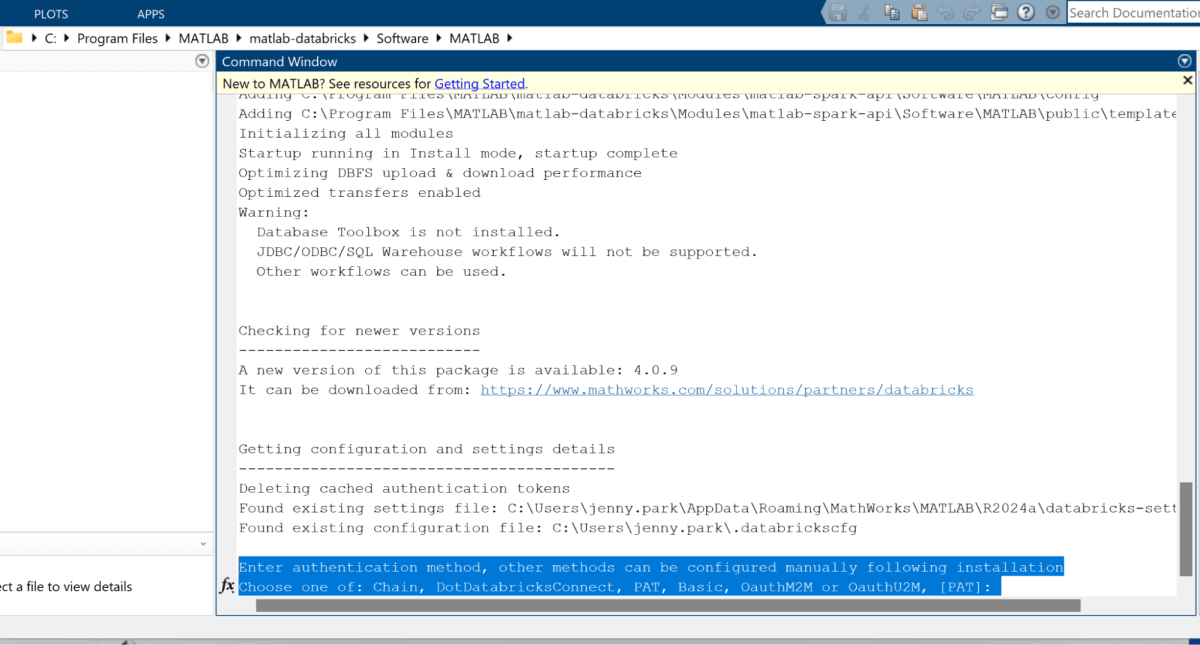

- Navigate to this path, C:\Program Files\MATLAB\matlab-databricks\Software\MATLAB, for example with `cd <C:\[Your path]\Program Files\MATLAB\matlab-databricks\Software\MATLAB>`

- In the top bar, next to the folders icon, you should see the current directory path. Make sure it matches the path above; you should then see `install.m` available in the current folder.

- Call `install()` from the MATLAB command window. You will be prompted with several questions for configuring the cluster spin-up.

- These questions cover the authentication method, Databricks username, cloud vendor hosting Databricks, Databricks org ID, etc.

- When prompted with “Enter the local path to the downloaded zip file for this package (Point to the one on your local machine)”, provide the path to your MATLAB compressed zip file, e.g. C:\Users\someuser\Downloads\matlab-databricks-v1.2.3_Build_A1234567.zip

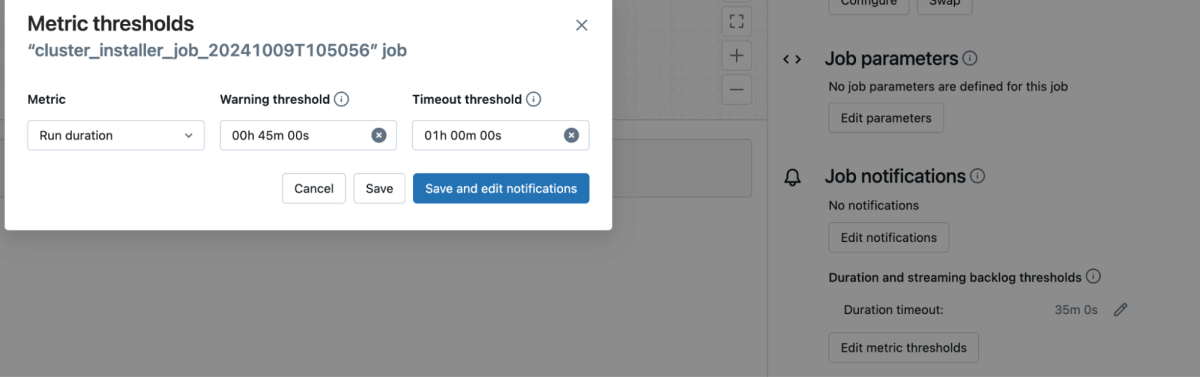

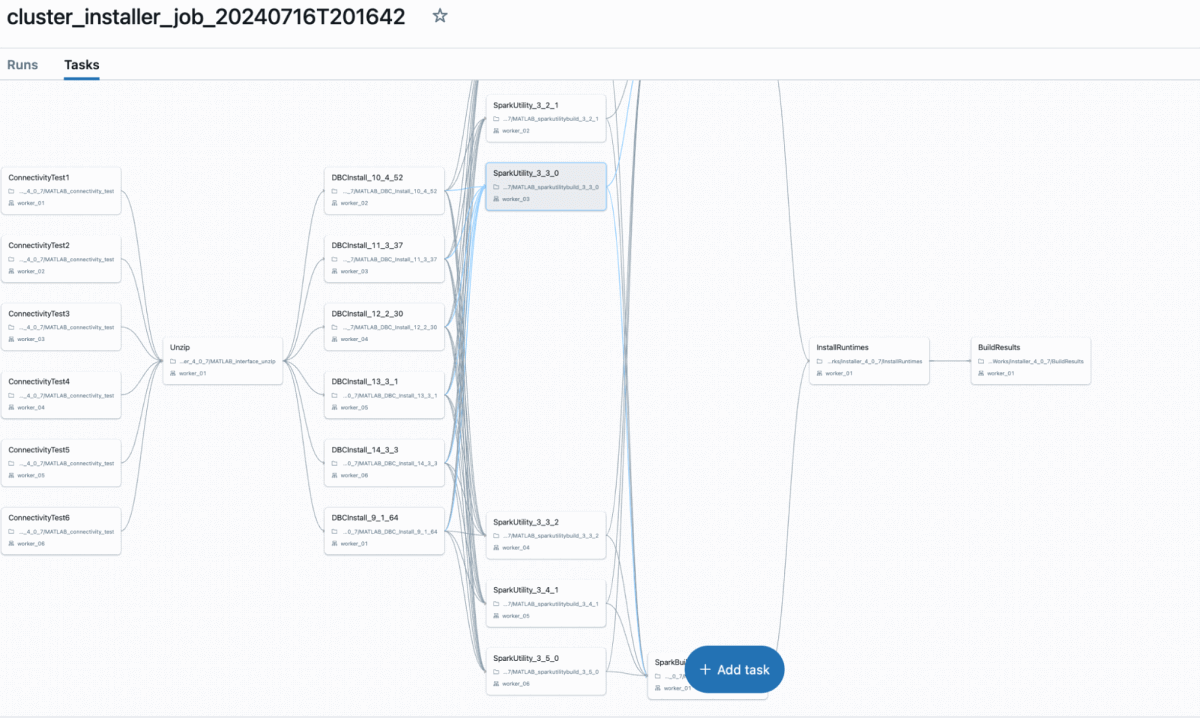

- A job will be created in Databricks automatically (make sure the job timeout is set to 30 minutes or higher to avoid a timeout error).


- Once this step completes successfully, your package should be ready to go. Restart MATLAB and run `startup()`, which should validate your settings and configurations.
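If you prefer to adjust the timeout of the generated job without the UI, the Jobs API `jobs/update` endpoint accepts a `new_settings` object, and `timeout_seconds` is the job-level timeout field. This sketch only builds the request body; the job ID is whatever the installer created, and `timeout_update_payload` is a hypothetical helper.

```python
MIN_TIMEOUT_MINUTES = 30  # guidance above: 30 minutes or higher


def timeout_update_payload(job_id: int, minutes: int = MIN_TIMEOUT_MINUTES) -> dict:
    """Body for POST /api/2.1/jobs/update that raises the job timeout.

    Only the top-level fields listed in new_settings are replaced;
    the rest of the job's settings are left unchanged.
    """
    if minutes < MIN_TIMEOUT_MINUTES:
        raise ValueError(
            f"Use at least {MIN_TIMEOUT_MINUTES} minutes to avoid a timeout error"
        )
    return {"job_id": job_id, "new_settings": {"timeout_seconds": minutes * 60}}
```

POST the serialized payload to `/api/2.1/jobs/update`. A `timeout_seconds` value of `0` disables the job timeout entirely; if the generated job also sets a task-level timeout, that one would need raising as well.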

Validating installation and packaging your MATLAB code for Databricks

- You can test one integration option, Databricks Connect, quite simply by running the following in MATLAB: `spark = getDatabricksSession`, then `ds = spark.range(10)`, then `ds.show`.

- If any of these steps fail, the most likely cause is not being connected to a supported compute (DBR 14.3 LTS was used for testing), in which case you need to modify the configuration files listed under the authorization header of the `startup()` output.

- Add your .whl file to Databricks Volumes

- Create a notebook, attach the “MATLAB install cluster” to it, and import your functions from your .whl wrapper file
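Unity Catalog volumes are addressed with paths of the form `/Volumes/<catalog>/<schema>/<volume>/<file>`. The sketch below checks that a file follows the PEP 427 wheel naming scheme before building the volume path you would upload it to and later install it from. The catalog, schema, volume, and wheel names are hypothetical, as is the helper itself.

```python
import re
from pathlib import PurePosixPath

# PEP 427: {name}-{version}(-{build})?-{python}-{abi}-{platform}.whl
_WHEEL_RE = re.compile(
    r"^(?P<name>[^-]+)-(?P<version>[^-]+)(?:-(?P<build>\d[^-]*))?"
    r"-(?P<python>[^-]+)-(?P<abi>[^-]+)-(?P<platform>[^-]+)\.whl$"
)


def volume_wheel_path(catalog: str, schema: str, volume: str, wheel_file: str) -> str:
    """Return the /Volumes path for a wheel after validating its filename."""
    if _WHEEL_RE.match(wheel_file) is None:
        raise ValueError(f"Not a valid wheel filename: {wheel_file!r}")
    # Volume paths always use forward slashes, even when built on Windows.
    return str(PurePosixPath("/Volumes", catalog, schema, volume, wheel_file))
```

In the notebook, the resulting path can then be installed with, for example, `%pip install /Volumes/main/matlab/wheels/my_matlab_pkg-1.0-py3-none-any.whl` before importing the package.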

Reference Architecture of a Batch/Real-Time Use Case in Databricks Using MATLAB Models

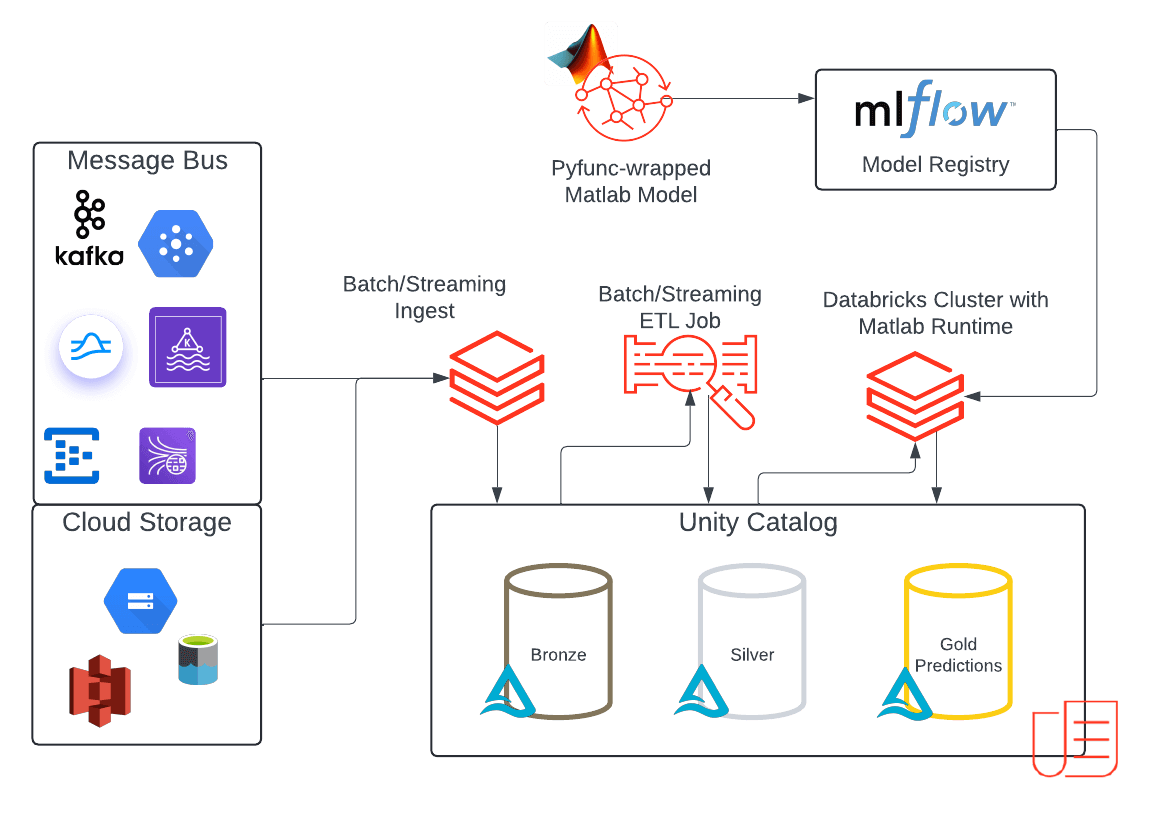

The architecture showcases a reference implementation for end-to-end ML batch or streaming use cases in Databricks that incorporate MATLAB models. This solution leverages the Databricks Data Intelligence Platform to its full potential:

- The platform enables streaming or batch data ingestion into Unity Catalog (UC).

- The incoming data is stored in a Bronze table, representing raw, unprocessed data.

- After initial processing and validation, the data is promoted to a Silver table, representing cleaned and standardized data.

- MATLAB models are packaged as .whl files so they are ready to use as custom packages in workflows and interactive clusters. These wheel files are uploaded to UC Volumes, as described previously, so access to them can be governed by UC.

- With the MATLAB model available in UC, you can load it onto your cluster as a cluster-scoped library from your Volumes path.

- Then import the MATLAB library on your cluster and create a custom MLflow pyfunc model object for prediction. Logging the model in MLflow experiments lets you save and track different model versions, along with the corresponding Python wheel versions, in a simple and reproducible way.

- Register the model in a UC schema alongside your input data; you can then manage permissions on your MATLAB model like any other custom model in UC. These permissions can differ from the ones you set on the compiled MATLAB model that was loaded into UC Volumes.

- Once registered, the models are deployed to make predictions.

- For batch and streaming: load the model into a notebook and call the predict function.

- For real time: serve the model using serverless Model Serving endpoints and query it using the REST API.

- Orchestrate your job using a workflow to schedule batch ingestion or to continuously ingest the incoming data and run inference using your MATLAB model.

- Store your predictions in the Gold table in Unity Catalog to be consumed by downstream users.

- Use Lakehouse Monitoring to monitor your output predictions.
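The pyfunc step in this architecture can be sketched as a small `mlflow.pyfunc.PythonModel` subclass that starts the MATLAB Runtime in `load_context` and calls the compiled function in `predict`. This is a minimal sketch, not the reference implementation: it assumes a MATLAB Compiler SDK package exposing `initialize()` and a function that takes scalar inputs, and the package and function names are hypothetical. The `ImportError` fallback exists only so the class can be exercised without MLflow installed.

```python
import importlib

try:
    # MLflow ships with Databricks Runtime for Machine Learning.
    from mlflow.pyfunc import PythonModel
except ImportError:
    # Fallback so the sketch can be exercised without MLflow installed.
    PythonModel = object


class MatlabModel(PythonModel):
    """Hypothetical pyfunc wrapper around a MATLAB Compiler SDK package."""

    def __init__(self, package_name: str, function_name: str):
        self.package_name = package_name    # e.g. "my_matlab_pkg" (hypothetical)
        self.function_name = function_name  # MATLAB function exported by the package
        self._handle = None                 # MATLAB Runtime handle, created lazily

    def load_context(self, context):
        # MLflow calls this once when the model is loaded. Starting the runtime
        # here keeps it out of the pickled model and avoids restarts per request.
        pkg = importlib.import_module(self.package_name)
        self._handle = pkg.initialize()

    def predict(self, context, model_input, params=None):
        if self._handle is None:
            self.load_context(context)
        fn = getattr(self._handle, self.function_name)
        # pyfunc typically passes a pandas DataFrame; plain lists of rows also work.
        if hasattr(model_input, "columns"):
            rows = model_input.values.tolist()
        else:
            rows = model_input
        return [fn(*[float(x) for x in row]) for row in rows]
```

You would then log an instance with `mlflow.pyfunc.log_model`, passing it as `python_model` and declaring the wheel as a dependency (for example through `pip_requirements`), and register it under a three-level `catalog.schema.model` name after calling `mlflow.set_registry_uri("databricks-uc")`. Unity Catalog requires a model signature, which `log_model` accepts through its `signature` argument.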

Conclusion

If you want to integrate MATLAB into your Databricks platform, we have covered the different integration options that exist today, provided an architecture pattern for end-to-end implementation, and discussed options for interactive development. By integrating MATLAB into your platform, you can take advantage of distributed compute on Spark, enhanced data access and engineering capabilities with Delta, and secure access management for your MATLAB models with Unity Catalog.

Check out these additional resources:

Everything You Wanted to Know About Big Data Processing (But Were Too Afraid to Ask) » Developer Zone – MATLAB & Simulink

Actionable Insight for Engineers and Scientists at Big Data Scale with Databricks and MathWorks

Transforming Electrical Fault Detection: The Power of Databricks and MATLAB