We’re excited to announce the General Availability of serverless compute for notebooks, jobs and Delta Live Tables (DLT) on AWS and Azure. Databricks customers already enjoy fast, simple and reliable serverless compute for Databricks SQL and Databricks Model Serving. The same capability is now available for all ETL workloads on the Data Intelligence Platform, including Apache Spark and Delta Live Tables. You write the code and Databricks provides rapid workload startup, automatic infrastructure scaling and seamless version upgrades of the Databricks Runtime. Importantly, with serverless compute you are billed only for work done, not for time spent acquiring and initializing instances from cloud providers.

Our current serverless compute offering is optimized for fast startup, scaling, and performance. Users will soon be able to express other goals such as lower cost. We’re currently offering an introductory promotional discount on serverless compute, available now until October 31, 2024. You get a 50% price reduction on serverless compute for Workflows and DLT and a 30% price reduction for Notebooks.
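To make the promotional pricing concrete, here is a minimal sketch that applies the discount rates quoted above. The function name, the list price, and the choice to treat October 31, 2024 as the last discounted day are assumptions made for illustration, not part of the announcement.

```python
from datetime import date

# Discount rates and end date come from the announcement; treating the
# end date as inclusive is an assumption.
PROMO_END = date(2024, 10, 31)
PROMO_DISCOUNT = {"workflows": 0.50, "dlt": 0.50, "notebooks": 0.30}


def promo_price(list_price: float, product: str, on: date) -> float:
    """Return the price after the introductory discount, if it still applies."""
    if on > PROMO_END:
        return list_price  # promotion has ended, full price applies
    return round(list_price * (1 - PROMO_DISCOUNT[product]), 2)


# Hypothetical list price of 100.00 currency units for each product.
for product in ("workflows", "dlt", "notebooks"):
    print(product, promo_price(100.0, product, date(2024, 9, 1)))
```

Actual serverless prices depend on your cloud, region, and contract, so treat the numbers as placeholders.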

“Cluster startup is a priority for us, and serverless Notebooks and Workflows have made a huge difference. Serverless compute for notebooks makes it easy with just a single click; we get serverless compute that seamlessly integrates into workflows. Plus, it is secure. This long-awaited feature is a game-changer. Thanks, Databricks!”

— Chiranjeevi Katta, Data Engineer, Airbus

Let’s explore the challenges serverless compute helps solve and the unique benefits it offers data teams.

Compute infrastructure is complex and costly to manage

Configuring and managing compute such as Spark clusters has long been a challenge for data engineers and data scientists. Time spent on configuring and managing compute is time not spent providing value to the business.

Choosing the right instance type and size is time-consuming and requires experimentation to determine the optimal choice for a given workload. Figuring out cluster policies, auto-scaling, and Spark configurations further complicates this task and requires expertise. Once you get clusters set up and running, you still have to spend time maintaining and tuning their performance and updating Databricks Runtime versions so you can benefit from new capabilities.

Idle time, meaning time you pay for but that is not spent processing your workloads, is another costly outcome of managing your own compute infrastructure. First, during compute initialization and scale-up, instances need to boot up, software including Databricks Runtime needs to be installed, etc. You pay your cloud provider for this time. Second, if you over-provision compute by using too many instances or instance types that have too much memory, CPU, etc., compute will be under-utilized yet you’ll still pay for all of the provisioned compute capacity.
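The two sources of idle spend can be put into a rough back-of-the-envelope model. The sketch below is illustrative only: the function name, the rates, and the idea of summarizing over-provisioning as a single utilization fraction are assumptions, not a Databricks billing formula.

```python
def classic_cost_breakdown(instances, hourly_rate, startup_min, busy_min, utilization):
    """Split the bill for a self-managed cluster into useful and idle spend.

    utilization is the fraction of provisioned capacity the workload
    actually uses while it runs (1.0 means the cluster is perfectly sized).
    """
    per_minute = instances * hourly_rate / 60
    startup_cost = per_minute * startup_min   # paid while instances boot and install software
    run_cost = per_minute * busy_min          # paid while the workload runs
    useful_cost = run_cost * utilization      # share of capacity doing real work
    idle_cost = startup_cost + (run_cost - useful_cost)
    return {"total": startup_cost + run_cost, "useful": useful_cost, "idle": idle_cost}


# Hypothetical inputs: 10 instances at 0.60 per hour, 5 minutes of startup,
# 30 minutes of work, and a cluster that is only 50% utilized.
breakdown = classic_cost_breakdown(10, 0.60, 5, 30, 0.5)
for name, value in breakdown.items():
    print(f"{name}: {value:.2f}")
```

With these made-up inputs, more than half of the bill goes to startup time and unused capacity, which is the kind of waste this section describes.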

Observing this cost and complexity across millions of customer workloads led us to innovate with serverless compute.

Serverless compute is fast, simple and reliable

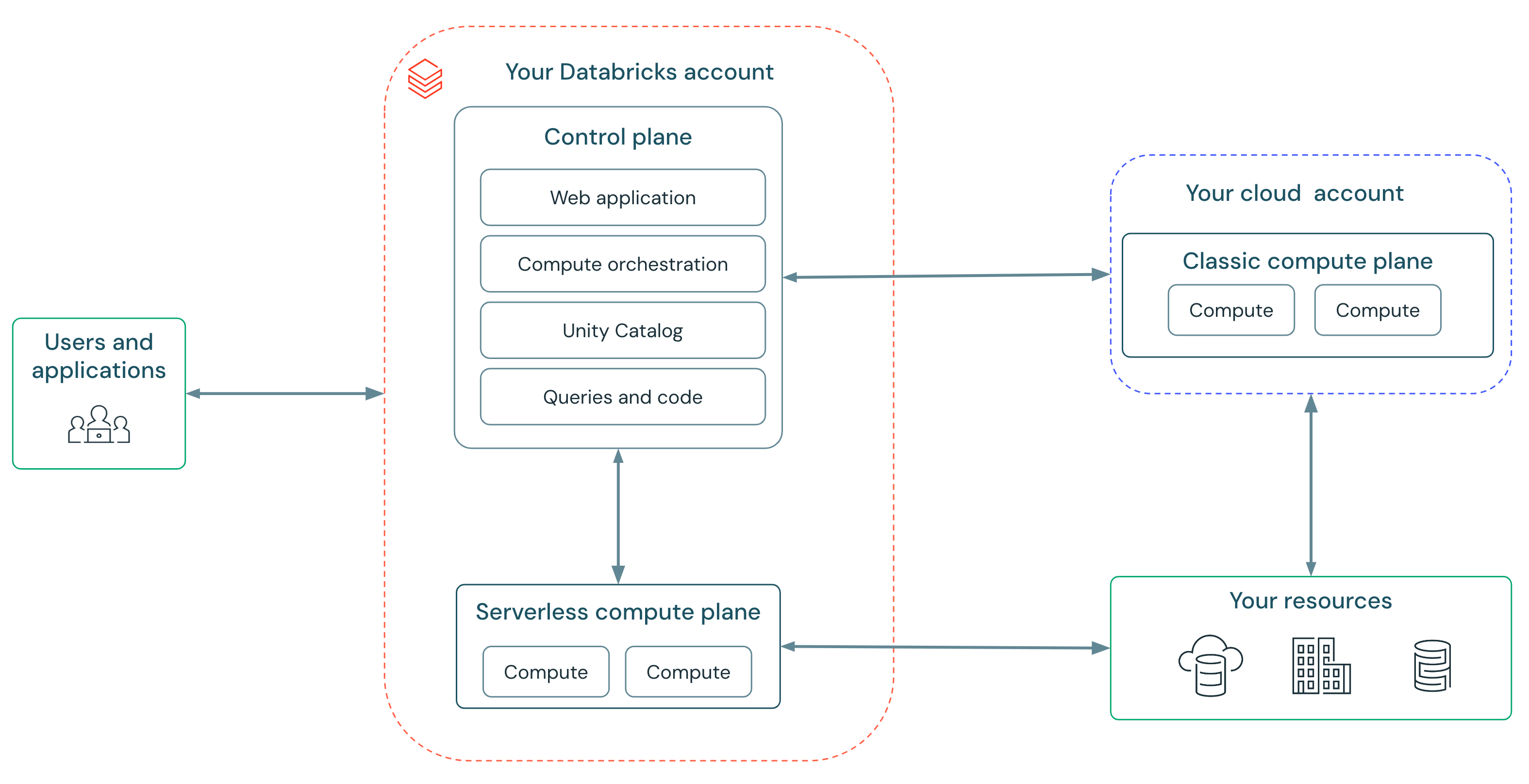

In classic compute, you give Databricks delegated permission via complex cloud policies and roles to manage the lifecycle of instances needed for your workloads. Serverless compute removes this complexity since Databricks manages a vast, secure fleet of compute on your behalf. You can just start using Databricks without any setup.

Serverless compute enables us to provide a service that is fast, simple, and reliable:

- Fast: No more waiting for clusters. Compute starts up in seconds, not minutes. Databricks runs “warm pools” of instances so that compute is ready when you are.

- Simple: No more picking instance types, cluster scaling parameters, or setting Spark configs. Serverless includes a new autoscaler which is smarter and more responsive to your workload’s needs than the autoscaler in classic compute. This means that every user is now able to run workloads without hand-holding from infrastructure experts. Databricks automatically and safely upgrades workloads to the latest Spark versions, ensuring you always get the latest performance and security benefits.

- Reliable: Databricks’ serverless compute shields customers from cloud outages with automatic instance type failover and a “warm pool” of instances that buffers against availability shortages.

“It’s very easy to move workflows from Dev to Prod without the need to choose worker types. [The] significant improvement in start-up time, combined with reduced DataOps configuration and maintenance, greatly enhances productivity and efficiency.”

— Gal Doron, Head of Data, AnyClip

Serverless compute bills for work done

We’re excited to introduce an elastic billing model for serverless compute. You are billed only when compute is assigned to your workloads and not for the time to acquire and set up compute instances.

The intelligent serverless autoscaler ensures that your workspace always has the right amount of capacity provisioned so we can respond to demand, e.g., when a user runs a command in a notebook. It automatically scales workspace capacity up and down in graduated steps to meet your needs. To ensure resources are managed wisely, we reduce provisioned capacity after a few minutes when the intelligent autoscaler predicts it is no longer needed.
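The idea of scaling in graduated steps can be illustrated with a toy model. This is not the Databricks autoscaler, whose algorithm is not described here. It replaces the predictive component with a fixed cooldown timer, and the function name, step size, and cooldown value are all hypothetical.

```python
def next_capacity(current, demand, idle_minutes, step=2, cooldown_minutes=5, minimum=0):
    """Move provisioned capacity one graduated step toward demand.

    Scale-up happens right away. Scale-down waits until the extra capacity
    has gone unused for `cooldown_minutes`, a simple stand-in for
    "after a few minutes when it is no longer needed".
    """
    if demand > current:
        return min(current + step, demand)           # step up, but do not overshoot
    if demand < current and idle_minutes >= cooldown_minutes:
        return max(current - step, demand, minimum)  # step down, but do not undershoot
    return current


# Demand jumps from 4 to 9 units: capacity climbs in steps of 2.
capacity = 4
for _ in range(3):
    capacity = next_capacity(capacity, demand=9, idle_minutes=0)
    print("scaled to", capacity)
```

Stepping rather than jumping straight to the target keeps capacity changes gradual, and the cooldown avoids releasing capacity that a user might need again a moment later.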

“Serverless compute for DLT was incredibly easy to set up and get running, and we’re already seeing major performance improvements from our materialized views. Historically going from raw data to the silver layer took us about 16 minutes, but after switching to serverless, it is only about 7 minutes. The time and cost savings are going to be immense”

— Aaron Jespen, Director IT Operations, Jetlinx

Serverless compute is easy to manage

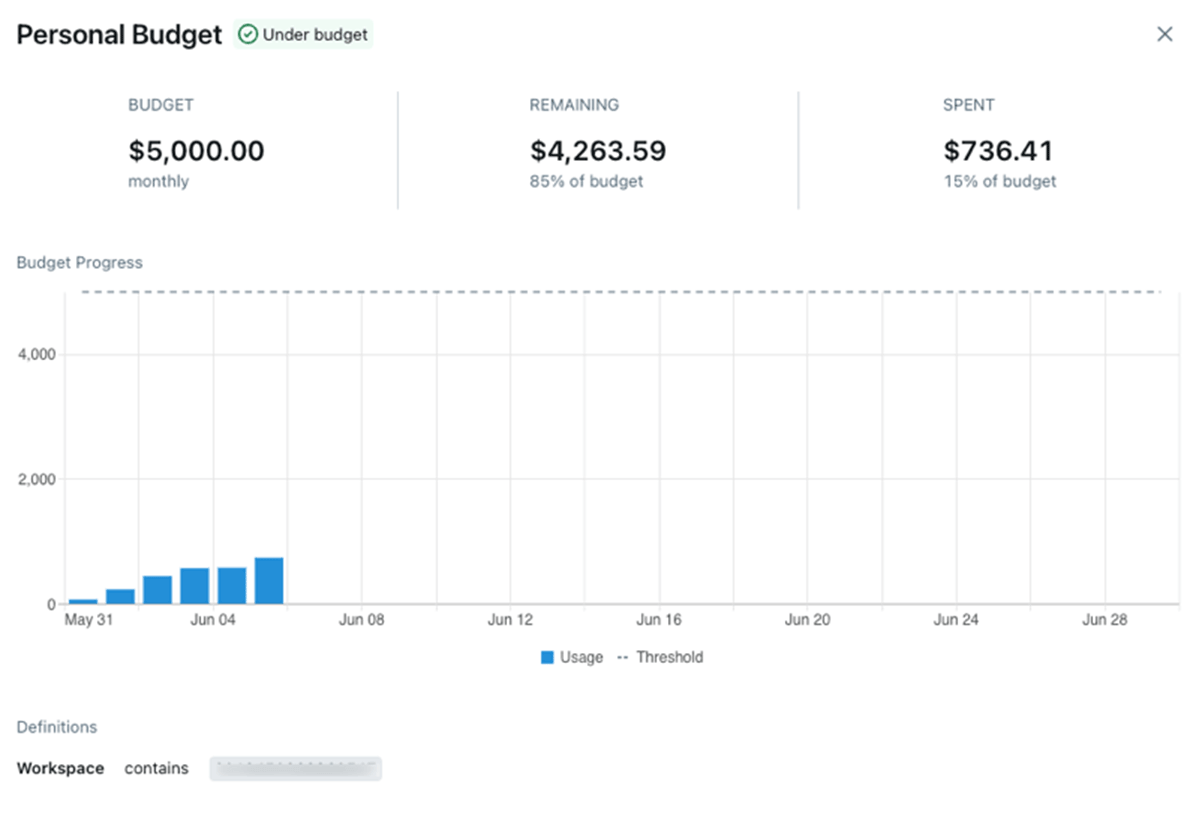

Serverless compute includes tools for administrators to manage costs and budgets. After all, simplicity should not mean budget overruns and surprising bills!

Data about the usage and costs of serverless compute is available in system tables. We provide pre-built dashboards that let you get an overview of costs and drill down into specific workloads.
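As a rough illustration of the kind of drill-down such usage data supports, the sketch below totals serverless usage per workload from a handful of in-memory records. The record fields (`workload`, `sku_name`, `usage_quantity`), the SKU names, and the sample values are assumptions made for this example. Check the system tables documentation for the real schema before adapting it.

```python
from collections import defaultdict


def serverless_usage_by_workload(rows):
    """Sum usage for serverless SKUs per workload, largest consumer first."""
    totals = defaultdict(float)
    for row in rows:
        # Assumption: serverless SKUs can be recognized by name.
        if "SERVERLESS" in row["sku_name"].upper():
            totals[row["workload"]] += row["usage_quantity"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Hypothetical usage records standing in for rows read from a system table.
sample_rows = [
    {"workload": "adhoc_notebook", "sku_name": "INTERACTIVE_SERVERLESS_COMPUTE", "usage_quantity": 3.0},
    {"workload": "nightly_etl", "sku_name": "JOBS_SERVERLESS_COMPUTE", "usage_quantity": 12.5},
    {"workload": "nightly_etl", "sku_name": "JOBS_SERVERLESS_COMPUTE", "usage_quantity": 7.5},
    {"workload": "legacy_job", "sku_name": "JOBS_COMPUTE", "usage_quantity": 40.0},
]

for workload, quantity in serverless_usage_by_workload(sample_rows).items():
    print(workload, quantity)
```

In practice the same aggregation would usually be expressed as a SQL query over the system tables, and the pre-built dashboards cover the common cases without any code.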

Administrators can use budget alerts (Preview) to group costs and set up alerts. There is a friendly UI for managing budgets.

Serverless compute is designed for modern Spark workloads

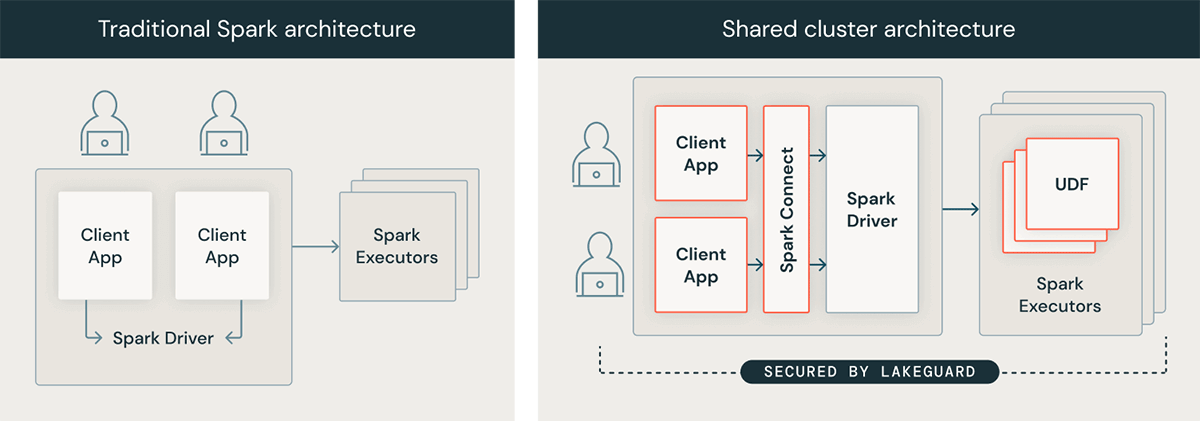

Under the hood, serverless compute uses Lakeguard to isolate user code using sandboxing techniques, an absolute necessity in a serverless environment. As a result, some workloads require code changes to continue working on serverless. Serverless compute requires Unity Catalog for secure access to data assets, hence workloads that access data without using Unity Catalog may need changes.

The easiest way to test if your workload is ready for serverless compute is to first run it on a classic cluster using shared access mode on DBR 14.3+.
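A readiness test cluster along these lines might be described as follows. This is a sketch under stated assumptions: the field names follow the general shape of a Databricks cluster specification, `USER_ISOLATION` is assumed to be the API value for shared access mode, and the cluster name and size are hypothetical. Verify all of them against the current Clusters API reference before use.

```python
def meets_minimum_dbr(spark_version: str, minimum=(14, 3)) -> bool:
    """Check a version key such as '14.3.x-scala2.12' against a minimum DBR."""
    major, minor = spark_version.split(".")[:2]
    return (int(major), int(minor)) >= minimum


# Hypothetical spec for a classic cluster used only to test serverless readiness.
test_cluster = {
    "cluster_name": "serverless-readiness-check",  # hypothetical name
    "spark_version": "14.3.x-scala2.12",           # DBR 14.3 or later
    "data_security_mode": "USER_ISOLATION",        # assumed value for shared access mode
    "num_workers": 2,                              # hypothetical size
}

if not meets_minimum_dbr(test_cluster["spark_version"]):
    raise ValueError("Use DBR 14.3 or later for the readiness test")
print("Cluster spec looks suitable for a readiness test")
```

If the workload runs cleanly on such a cluster, it is a good candidate for serverless. Failures usually point to code that bypasses Unity Catalog or depends on behavior that sandboxing restricts.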

Serverless compute is ready to use

We’re hard at work to make serverless compute even better in the coming months:

- GCP support: We are now beginning a private preview of serverless compute on GCP; stay tuned for our public preview and GA announcements.

- Private networking and egress controls: Connect to resources within your private network, and control what your serverless compute resources can access on the public Internet.

- Enforceable attribution: Ensure that all notebooks, workflows, and DLT pipelines are appropriately tagged so that you can assign cost to specific cost centers, e.g. for chargebacks.

- Environments: Admins will be able to set a base environment for the workspace with access to private repositories, specific Python and library versions, and environment variables.

- Cost vs. performance: Serverless compute is currently optimized for fast startup, scaling, and performance. Users will soon be able to express other goals such as lower cost.

- Scala support: Users will be able to run Scala workloads on serverless compute. To prepare for a smooth move to serverless once it is available, move your Scala workloads to classic compute with Shared Access mode.

To start using serverless compute today: