Note: Some of the capabilities described in this post are being rolled out gradually and may not yet be available in all workspaces. Once the rollout is complete over the next few days, the experience will match what’s shown here.

Databricks is excited to announce that table update triggers are now generally available in Lakeflow Jobs. Many data teams still rely on cron jobs to approximate when data is available, but that guesswork can lead to wasted compute and delayed insights. With table update triggers, your jobs run automatically as soon as specified tables are updated, enabling a more responsive and efficient way to orchestrate pipelines.

Trigger jobs instantly when data changes

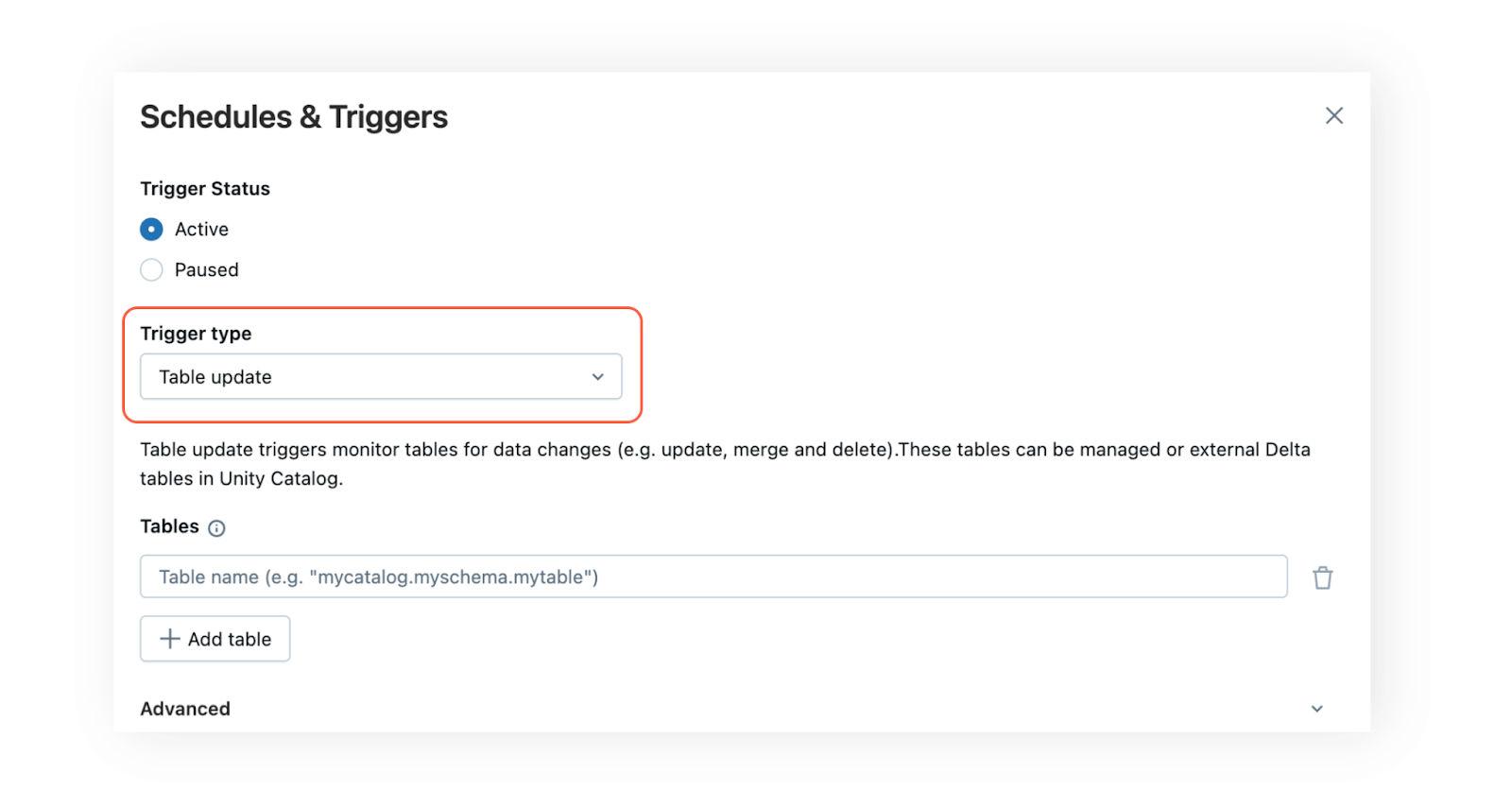

Table update triggers let you start jobs based on table updates. Your job starts as soon as data is added or updated. To configure a table update trigger in Lakeflow Jobs, add one or more tables known to Unity Catalog using the “Table update” trigger type in the Schedules & Triggers menu. A new run starts once the specified tables have been updated. If multiple tables are selected, you can choose whether the job should run after any single table is updated or only once all selected tables are updated.

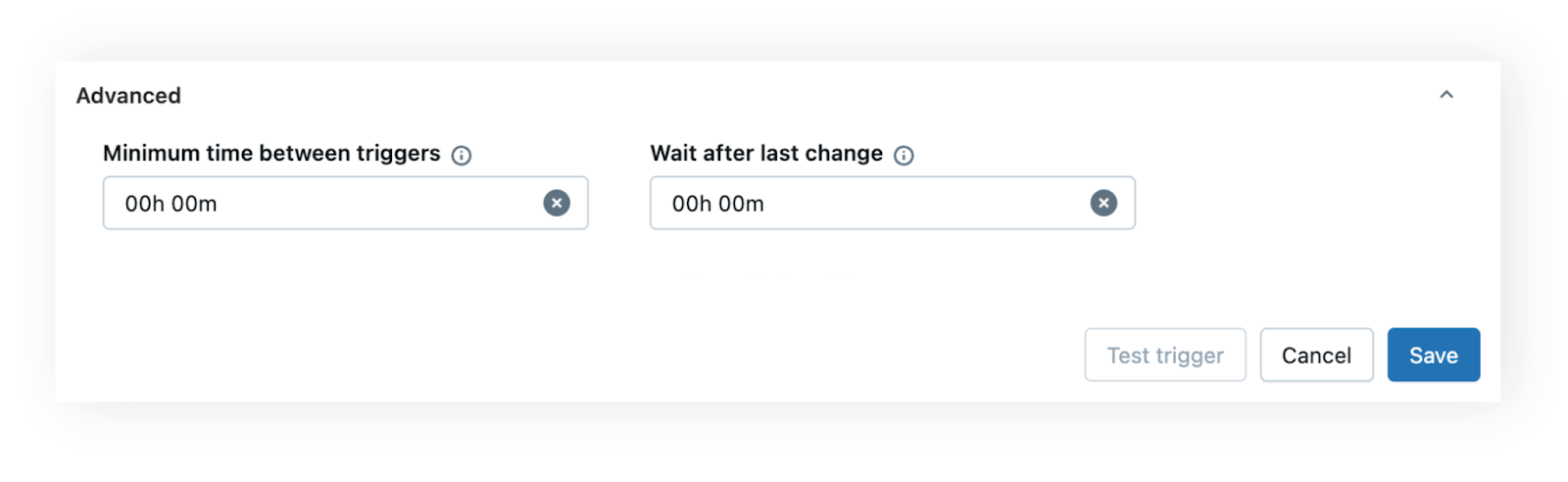
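
For teams that manage jobs as code rather than through the UI, the same choice (which tables to watch, and whether any or all of them must update) can be sketched as a settings fragment. This is a minimal sketch, not the authoritative schema: the field names `table_update`, `table_names`, and `condition`, and the values `ANY_UPDATED` and `ALL_UPDATED`, are assumptions based on how the Jobs API describes triggers, and the table names are hypothetical. Check the current Jobs API reference before relying on them.

```python
import json

# Assumed condition values; verify against the Jobs API reference.
VALID_CONDITIONS = ("ANY_UPDATED", "ALL_UPDATED")


def table_update_trigger(table_names, condition="ANY_UPDATED"):
    """Build a job settings fragment for a table update trigger.

    The structure and field names here are assumptions for illustration.
    """
    if not table_names:
        raise ValueError("at least one table name is required")
    if condition not in VALID_CONDITIONS:
        raise ValueError(f"condition must be one of {VALID_CONDITIONS}")
    return {
        "trigger": {
            "table_update": {
                "table_names": list(table_names),
                "condition": condition,
            }
        }
    }


# Hypothetical Unity Catalog tables in catalog.schema.table form.
settings = table_update_trigger(
    ["main.sales.orders", "main.sales.customers"],
    condition="ALL_UPDATED",
)
print(json.dumps(settings, indent=2))
```

With `ALL_UPDATED`, the intent is that a run starts only after every listed table has received an update; `ANY_UPDATED` corresponds to running after a single table changes.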

To handle scenarios where tables receive frequent updates or bursts of data, you can use the same advanced timing configurations available for file arrival triggers: minimum time between triggers and wait after last change.

- Minimum time between triggers is useful when a table updates frequently and you want to avoid launching jobs too often. For example, if a data ingestion pipeline updates a table several times every hour, setting a 60-minute buffer prevents the job from running more than once within that window.

- Wait after last change helps ensure all data has landed before the job starts. For instance, if an upstream system writes multiple batches to a table over a few minutes, setting a short “wait after last change” (e.g., 5 minutes) ensures the job only runs once writing is complete.

These settings give you control and flexibility, so your jobs are both timely and resource-efficient.
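
To build intuition for how the two timing settings interact, here is a toy simulation. It is a simplified mental model under stated assumptions, not the scheduling logic Lakeflow Jobs actually uses: times are plain minutes, an update that arrives before a pending run fires is folded into that run, and the function name `simulate_runs` and its parameters are hypothetical.

```python
def simulate_runs(update_times, min_gap=0, wait_after=0):
    """Return the minutes at which runs would start in this toy model.

    update_times: minutes at which the table was updated.
    min_gap:      minimum minutes between two consecutive run starts.
    wait_after:   quiet period required after the most recent update.
    """
    updates = sorted(update_times)
    runs = []
    last_run = None
    i = 0
    while i < len(updates):
        # Earliest start for the pending update: quiet period must elapse...
        fire = updates[i] + wait_after
        # ...and the minimum gap since the previous run must be respected.
        if last_run is not None:
            fire = max(fire, last_run + min_gap)
        # Updates arriving before the run starts join it and may extend
        # the quiet period.
        j = i + 1
        while j < len(updates) and updates[j] <= fire:
            fire = max(fire, updates[j] + wait_after)
            j += 1
        runs.append(fire)
        last_run = fire
        i = j
    return runs


# Frequent updates with a 60-minute buffer: at most one run per hour.
print(simulate_runs([0, 10, 20, 30, 70], min_gap=60))

# A burst of batches with a 5-minute quiet period: one run after the burst.
print(simulate_runs([0, 2, 4, 30], wait_after=5))
```

In the first call, the updates at minutes 10, 20, and 30 are absorbed into a single run at minute 60. In the second, the burst at minutes 0, 2, and 4 produces one run at minute 9, once five quiet minutes have passed since the last write.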

Reduce costs and latency by eliminating guesswork

By replacing cron schedules with real-time triggers, you reduce wasted compute and avoid delays caused by stale data. If data arrives early, the job runs immediately. If it’s delayed, you avoid wasting compute on stale data.

This is especially impactful at scale, when teams operate across time zones or manage high-volume data pipelines. Instead of overprovisioning compute or risking data staleness, you stay aligned and responsive by reacting to real-time changes in your data.

Power decentralized, event-driven pipelines

In large organizations, you might not always know where upstream data comes from or how it’s produced. With table update triggers, you can build reactive pipelines that operate independently without tight coupling to upstream schedules. For example, instead of scheduling a dashboard refresh at 8 a.m. every day, you can refresh it as soon as new data lands, ensuring your users always see the freshest insights. This is especially powerful in Data Mesh environments, where autonomy and self-service are key.

Table update triggers benefit from built-in observability in Lakeflow Jobs. Table metadata (e.g., commit timestamp or version) is exposed to downstream tasks via parameters, ensuring every job uses the same consistent snapshot of data. Since table update triggers depend on upstream table changes, understanding data dependencies is crucial. Unity Catalog’s automated lineage provides visibility, showing which jobs read from which tables. This is essential for making table update triggers reliable at scale, helping teams understand dependencies and avoid unintended downstream impact.
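
One way a downstream task might use the exposed table version is to pin its read to that exact snapshot. The sketch below only builds the query text. It assumes the table is a Delta table that supports time travel (`VERSION AS OF`), and that the version has already reached the task as an integer parameter; the helper name `snapshot_query` and the table name are hypothetical, and how the parameter is wired into the task is not shown.

```python
def snapshot_query(table_name: str, version: int) -> str:
    """Build a query that reads a fixed table version (Delta time travel).

    table_name: fully qualified name, e.g. catalog.schema.table.
    version:    table version supplied to the task as a parameter
                (the parameter wiring is assumed, not shown).
    """
    if version < 0:
        raise ValueError("version must be non-negative")
    return f"SELECT * FROM {table_name} VERSION AS OF {version}"


# Hypothetical table and version for illustration.
print(snapshot_query("main.sales.orders", 42))
```

Because every task in the run reads the same pinned version, writes that land while the job is running do not change what later tasks see.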

Table update triggers are the latest in a growing set of orchestration capabilities in Lakeflow Jobs. Combined with control flow, file arrival triggers, and unified observability, they offer a flexible, scalable, and modern foundation for more efficient pipelines.

Getting Started

Table update triggers are now available to all Databricks customers using Unity Catalog. To get started: