We recently announced the general availability of serverless compute for Notebooks, Workflows, and Delta Live Tables (DLT) pipelines. Today, we’d like to explain how your ETL pipelines built with DLT can benefit from serverless compute.

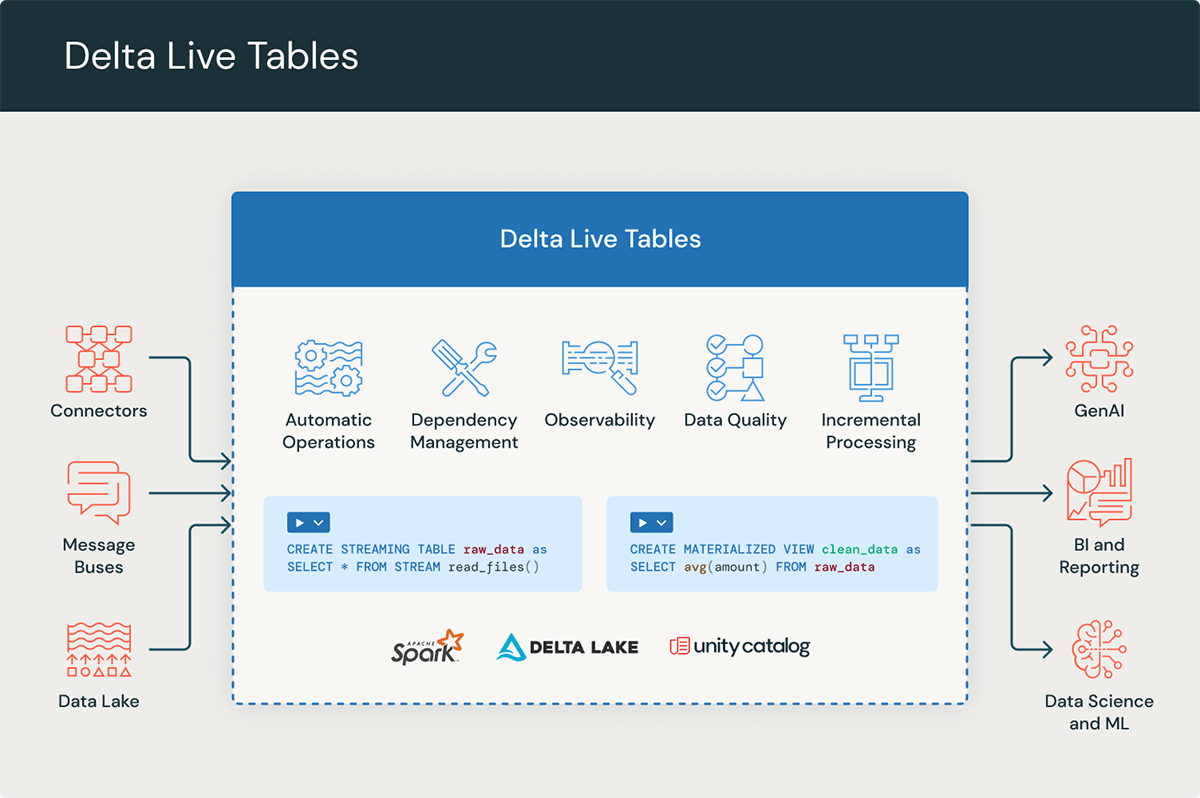

DLT pipelines make it easy to build cost-effective streaming and batch ETL workflows using a simple, declarative framework. You define the transformations for your data, and DLT pipelines automatically manage task orchestration, scaling, monitoring, data quality, and error handling.

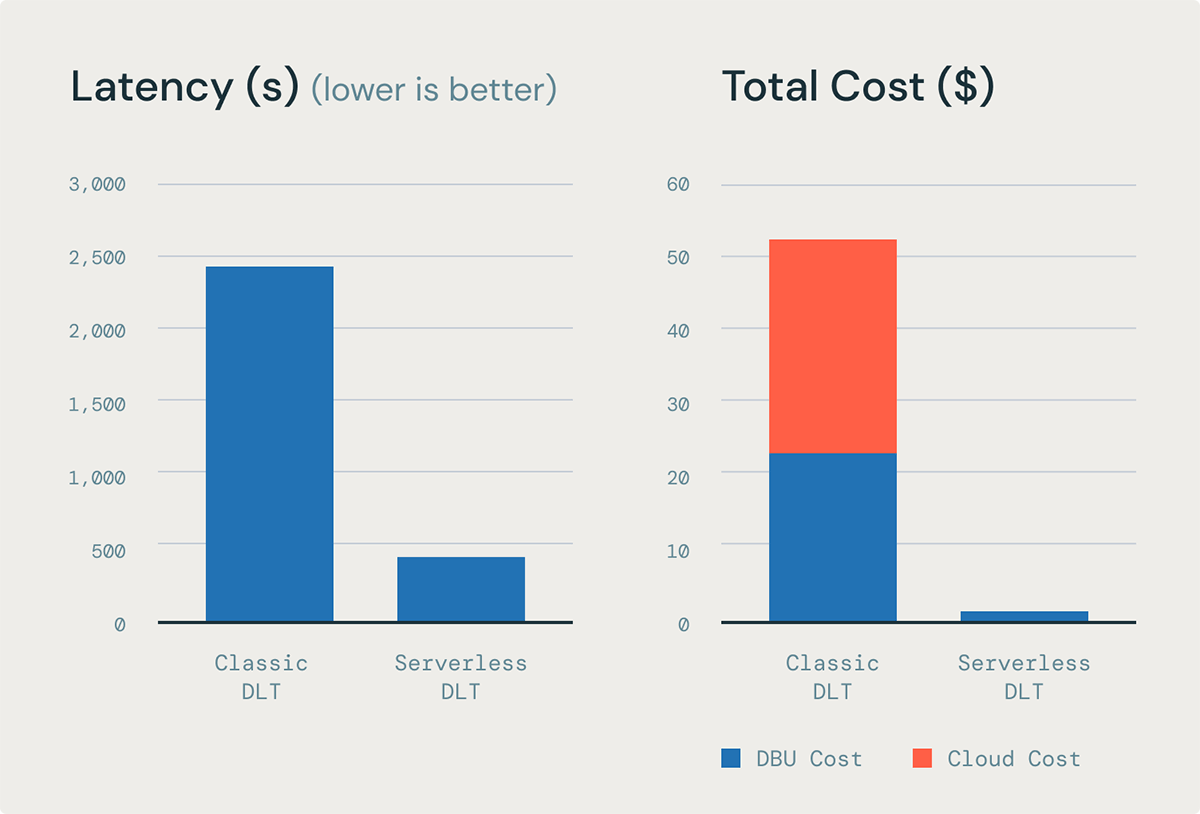

Serverless compute for DLT pipelines offers up to five times better cost-performance for data ingestion and up to 98% cost savings for complex transformations. It also provides enhanced reliability compared to DLT on classic compute. This combination leads to fast and dependable ETL at scale on Databricks. In this blog post, we’ll delve into how serverless compute for DLT achieves outstanding simplicity, performance, and the lowest total cost of ownership (TCO).

DLT pipelines on serverless compute are faster, cheaper, and more reliable

DLT on serverless compute enhances throughput, improves reliability, and reduces total cost of ownership (TCO). These gains come from its ability to perform end-to-end incremental processing throughout the entire data journey, from ingestion to transformation. Additionally, serverless DLT can support a wider range of workloads by automatically scaling compute resources vertically, which improves the handling of memory-intensive tasks.

Simplicity

DLT pipelines simplify ETL development by automating most of the operational complexity. This allows you to focus on delivering high-quality data instead of managing and maintaining pipelines.

Simple development

- Declarative Programming: Easily build batch and streaming pipelines for ingestion, transformation, and applying data quality expectations.

- Simple APIs: Handle change data capture (CDC) for SCD type 1 and type 2 formats from both streaming and batch sources.

- Data Quality: Enforce data quality with expectations and leverage powerful observability for data quality.

Simple operations

- Horizontal Autoscaling: Automatically scale pipelines horizontally with automated orchestration and retries.

- Automated Upgrades: Databricks Runtime (DBR) upgrades are handled automatically, ensuring you receive the latest features and security patches without any effort and with minimal downtime.

- Serverless Infrastructure: Vertical autoscaling of resources without needing to pick instance types or manage compute configurations, enabling even non-experts to operate pipelines at scale.

Performance

DLT on serverless compute provides end-to-end incremental processing across your entire pipeline, from ingestion to transformation. This means that pipelines running on serverless compute execute faster and have lower overall latency because data is processed incrementally for both ingestion and complex transformations. Key benefits include:

- Fast Startup: Eliminates cold starts because the serverless fleet ensures compute is always available when needed.

- Improved Throughput: Enhanced ingestion throughput with stream pipelining for task parallelization.

- Efficient Transformations: The Enzyme cost-based optimizer powers fast and efficient transformations for materialized views.

Low TCO

In DLT using serverless compute, data is processed incrementally, enabling workloads with large, complex materialized views (MVs) to benefit from reduced overall data processing times. The serverless model uses elastic billing, meaning only the actual time spent processing data is billed. This eliminates the need to pay for unused instance capacity or track instance utilization. With DLT on serverless compute, the benefits include:

- Efficient Data Processing: Incremental ingestion with streaming tables and incremental transformation with materialized views.

- Efficient Billing: Billing occurs only when compute is assigned to workloads, not for the time required to acquire and set up resources.

“Serverless DLT pipelines halve execution times without compromising costs, enhance engineering efficiency, and streamline complex data operations, allowing teams to focus on innovation rather than infrastructure in both production and development environments.”

– Cory Perkins, Sr. Data & AI Engineer, Qorvo

“We opted for DLT specifically to boost developer productivity, as well as for the embedded data quality framework and ease of operation. The availability of serverless options eases the overhead on engineering maintenance and cost optimization. This move aligns seamlessly with our overarching strategy to migrate all pipelines to serverless environments within Databricks.”

– Bala Moorthy, Senior Data Engineering Manager, Compass

Let’s look at some of these capabilities in more detail:

End-to-end incremental processing

Data processing in DLT occurs at two stages: ingestion and transformation. Ingestion is supported by streaming tables, while data transformations are handled by materialized views. Incremental data processing is crucial for achieving the best performance at the lowest cost. With incremental processing, resources are optimized for both reading and writing: only data that has changed since the last update is read, and existing data in the pipeline is touched only if necessary to achieve the desired result. This approach significantly improves cost and latency compared to typical batch-processing architectures.

Streaming tables have always supported incremental processing for ingestion from cloud files or message buses, leveraging Spark Structured Streaming technology for efficient, exactly-once delivery of events.

Now, DLT with serverless compute enables the incremental refresh of complex MV transformations, allowing for end-to-end incremental processing across the ETL pipeline in both ingestion and transformation.
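To make the difference between a full recompute and an incremental refresh concrete, here is a minimal pure-Python sketch. It is a toy model of an append-only aggregate, not a description of how DLT or Enzyme work internally; the function names and the sample rows are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def full_recompute(all_rows):
    """Rebuild the aggregate by scanning every source row."""
    totals = defaultdict(int)
    for key, amount in all_rows:
        totals[key] += amount
    return dict(totals)


def incremental_refresh(totals, new_rows):
    """Update an existing aggregate using only rows added since the last refresh."""
    for key, amount in new_rows:
        totals[key] = totals.get(key, 0) + amount
    return totals


# Hypothetical source data: (customer, amount) pairs.
history = [("a", 10), ("b", 5), ("a", 7)]
new_batch = [("b", 3), ("c", 4)]

view = full_recompute(history)               # initial build reads 3 rows
view = incremental_refresh(view, new_batch)  # refresh reads only the 2 new rows

# Both strategies produce the same result; only the amount of data read differs.
assert view == full_recompute(history + new_batch)
print(view)  # {'a': 17, 'b': 8, 'c': 4}
```

In this toy case the refresh touches two rows instead of five. The saving grows with the size of the history, which is the intuition behind incremental refresh of large materialized views.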

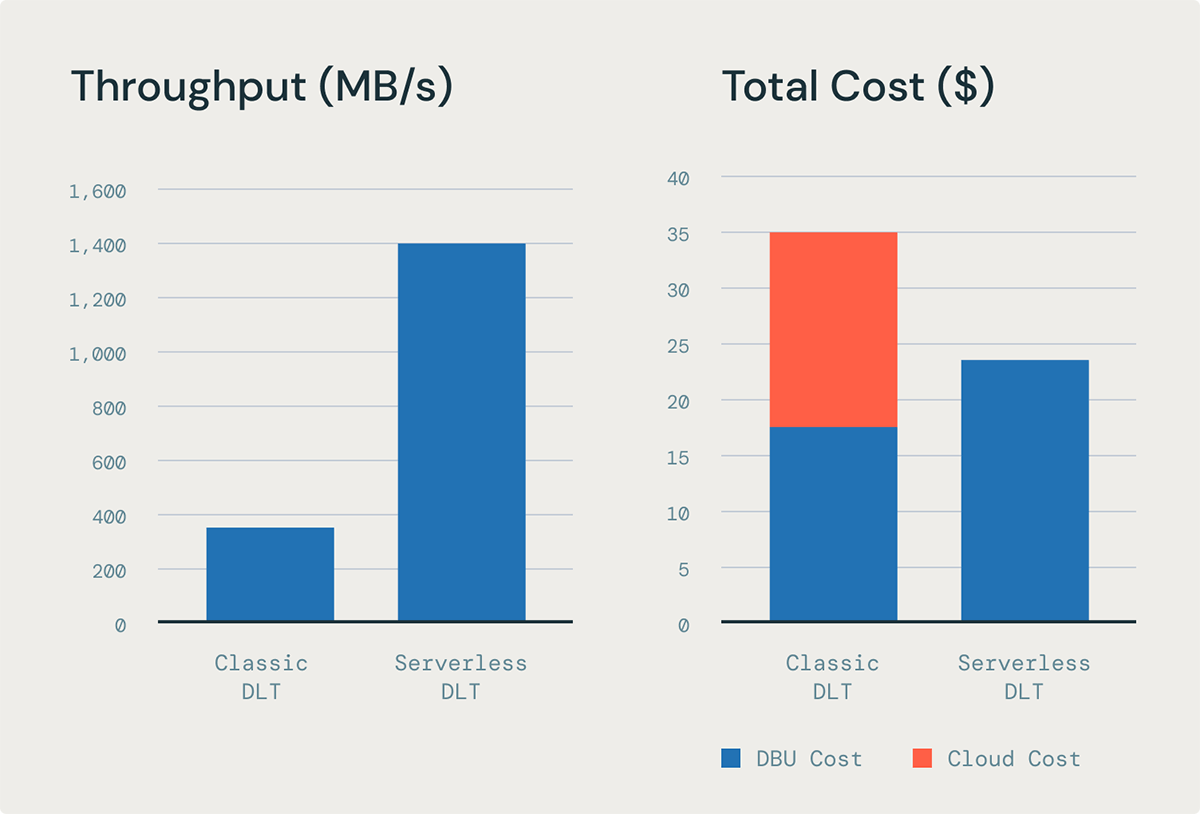

Better data freshness at lower cost with incremental refresh of materialized views

Fully recomputing large MVs can become expensive and incur high latency. Previously, to process complex transformations incrementally, users had only one option: write complicated MERGE and foreachBatch() statements in PySpark to implement incremental processing in the gold layer.

DLT on serverless compute automatically handles incremental refreshing of MVs because it includes a cost-based optimizer (“Enzyme”) that incrementally refreshes materialized views without the user needing to write complex logic. Enzyme reduces cost and significantly improves latency, speeding up ETL. This means that you can have better data freshness at a much lower cost.

Based on our internal benchmarks on a 200 billion row table, Enzyme can provide up to 6.5x better throughput and 85% lower latency than the equivalent MV refresh on DLT on classic compute.

Faster, cheaper ingestion with stream pipelining

Stream pipelining improves the throughput of loading files and events in DLT when using streaming tables. Previously, with classic compute, it was challenging to fully utilize instance resources because some tasks would finish early, leaving slots idle. Stream pipelining with DLT on serverless compute solves this by enabling Spark™ Structured Streaming (the technology that underpins streaming tables) to process micro-batches concurrently. All of this leads to significant improvements in streaming ingestion latency without increasing cost.

Based on our internal benchmarks of loading 100K JSON files using DLT, stream pipelining can provide up to 5x better price performance than the equivalent ingestion workload on a DLT classic pipeline.
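The idle-slot effect behind stream pipelining can be illustrated with a small scheduling model. The sketch below is an assumption-laden toy, not Spark's actual scheduler: task durations are hypothetical, tasks are assigned greedily to the earliest-free slot, and the only thing that changes between the two modes is whether a micro-batch must wait for the previous one to finish completely.

```python
import heapq


def makespan(task_durations, slots):
    """Finish time when tasks are assigned, in order, to the earliest-free slot."""
    free_at = [0] * slots
    heapq.heapify(free_at)
    finish = 0
    for duration in task_durations:
        start = heapq.heappop(free_at)  # earliest time any slot becomes free
        end = start + duration
        heapq.heappush(free_at, end)
        finish = max(finish, end)
    return finish


def sequential_batches(batches, slots):
    """Each micro-batch starts only after the previous one has fully finished."""
    return sum(makespan(batch, slots) for batch in batches)


def pipelined_batches(batches, slots):
    """Tasks from the next micro-batch may start as soon as any slot is free."""
    return makespan([d for batch in batches for d in batch], slots)


# Hypothetical workload: each micro-batch has one slow task and three fast ones.
batches = [[4, 1, 1, 1], [4, 1, 1, 1], [4, 1, 1, 1]]
slots = 4

print(sequential_batches(batches, slots))  # 12
print(pipelined_batches(batches, slots))   # 6
```

In the sequential case, three slots sit idle while the slow task of each batch finishes. Letting the next micro-batch use those slots halves the total time in this example; the real gain depends on the workload's task skew.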

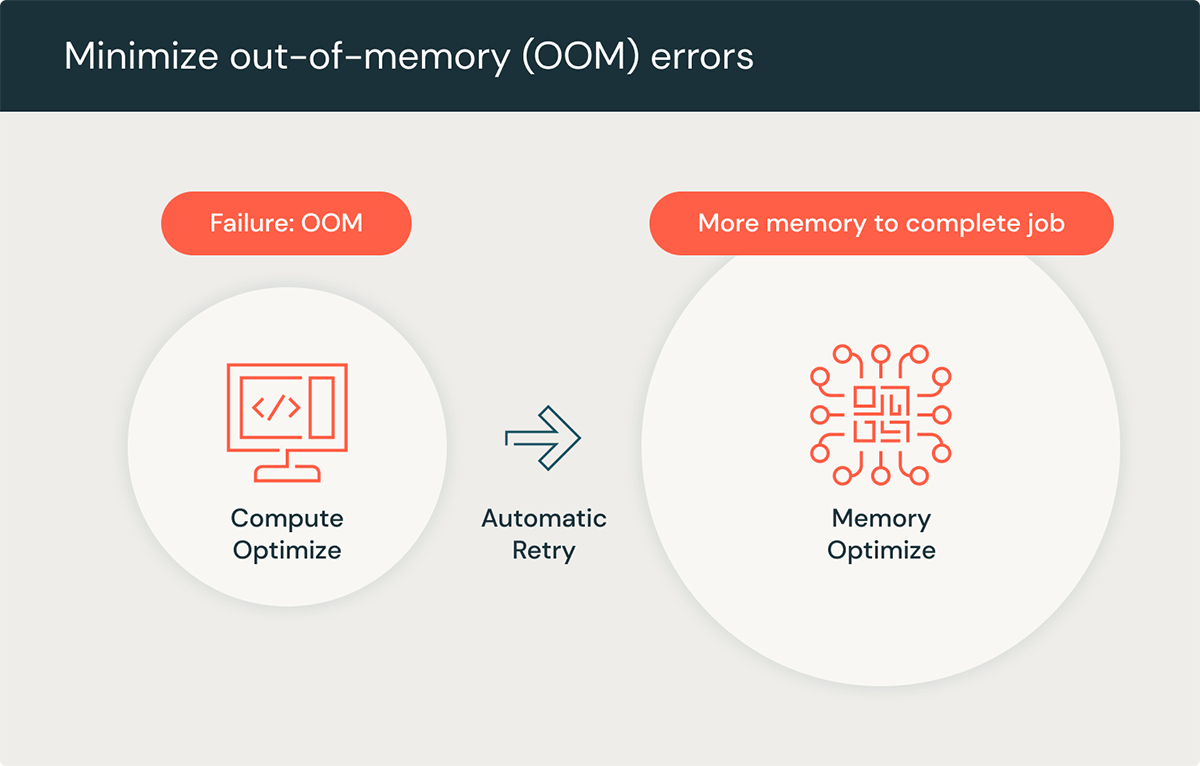

Enable memory-intensive ETL workloads with automatic vertical scaling

Choosing the right instance type for optimal performance with changing, unpredictable data volumes – especially for large, complex transformations and streaming aggregations – is challenging and often leads to overprovisioning. When transformations require more memory than is available, the result can be out-of-memory (OOM) errors and pipeline crashes. This necessitates manually increasing instance sizes, which is cumbersome, time-consuming, and results in pipeline downtime.

DLT on serverless compute addresses this with automatic vertical autoscaling of compute and memory resources. The system automatically selects the appropriate compute configuration to meet the memory requirements of your workload. Additionally, DLT will scale down by reducing the instance size if it determines that your workload requires less memory over time.
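As a rough mental model of the behavior described above, the following sketch picks the smallest configuration that fits a workload's memory needs and moves up or down as those needs change. Everything here is an assumption made for illustration: the size ladder, the function names, and the idea of choosing per update from an observed peak are hypothetical and do not reflect actual Databricks instance sizes or the real selection logic.

```python
# Hypothetical memory tiers in GB, for illustration only.
SIZES_GB = [16, 32, 64, 128]


def pick_size(required_gb, sizes=SIZES_GB):
    """Return the smallest size that fits the requirement, or raise if none does."""
    for size in sizes:
        if size >= required_gb:
            return size
    raise MemoryError(f"no available size can hold {required_gb} GB")


def plan_sizes(peak_memory_per_update):
    """Choose a size for each pipeline update from its peak memory requirement."""
    return [pick_size(peak) for peak in peak_memory_per_update]


# Hypothetical peak memory (GB) across four successive pipeline updates.
peaks = [10, 40, 90, 20]
print(plan_sizes(peaks))  # [16, 64, 128, 32]
```

The plan scales up as the requirement grows and back down once it shrinks, so the workload is neither starved of memory nor left on an oversized configuration.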

DLT on serverless compute is ready now

DLT on serverless compute is available now, and we’re continuously working to improve it. Here are some upcoming enhancements:

- Multi-Cloud Support: Currently available on Azure and AWS, with GCP support in public preview and GA announcements later this year.

- Continued Optimization for Cost and Performance: While currently optimized for fast startup, scaling, and performance, users will soon be able to prioritize goals like lower cost.

- Private Networking and Egress Controls: Connect to resources within your private network and control access to the public internet.

- Enforceable Attribution: Tag notebooks, workflows, and DLT pipelines to assign costs to specific cost centers, such as for chargebacks.

Get started with DLT on serverless compute today

To start using DLT on serverless compute today: