In brief: A group of artificial intelligence researchers has demonstrated running a powerful AI language model on a Windows 98 machine. And we’re not talking about just any old PC, but a vintage Pentium II system with a mere 128MB of RAM. The team behind the experiment is EXO Labs, an organization formed by researchers and engineers from Oxford University.

In a video shared on X, EXO Labs fired up a dusty Elonex Pentium II 350MHz system running Windows 98. Instead of playing Minesweeper or browsing with Netscape Navigator, the PC was put through its paces with something far more demanding: running an AI model.

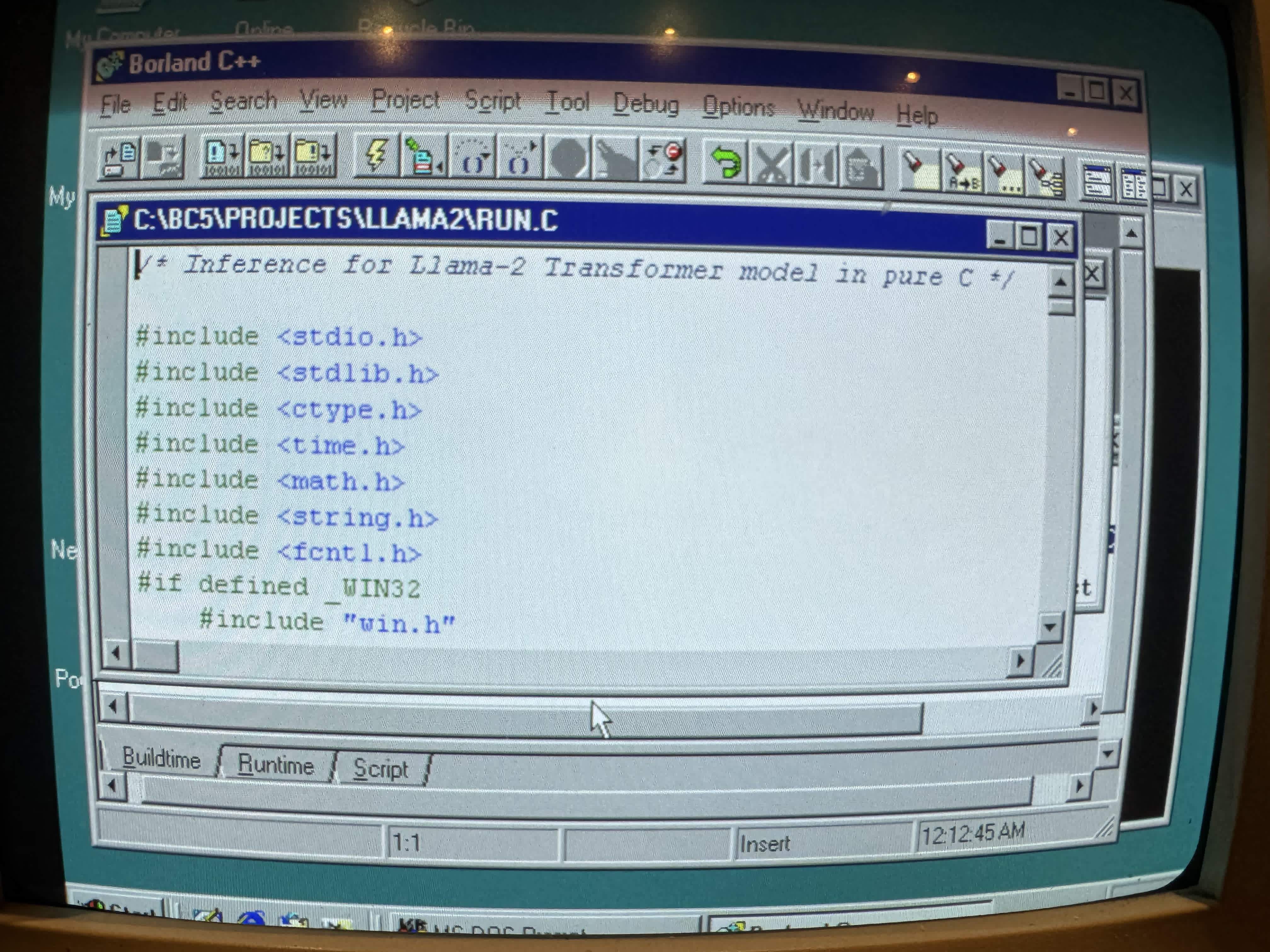

This model was based on Andrej Karpathy’s Llama2.c code. Against all odds, the computer managed to generate a coherent story on command. It did so at a decent speed, too, which is usually difficult with AI models run locally.

Speed is already a big enough challenge, but another hurdle the team had to overcome was getting modern code to compile and run on an operating system from 1998.

In the end, they managed to sustain a performance of 39.31 tokens per second running a Llama-based LLM with 260,000 parameters. Cranking up the model size significantly reduced the performance, though. For instance, the 1 billion parameter Llama 3.2 model barely managed 0.0093 tokens per second on the vintage hardware.
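To put those two throughput figures in perspective, here is a minimal sketch that converts tokens per second into waiting time. The two rates are the ones quoted above; the 100-token story length is a hypothetical value chosen only for illustration and is not something EXO Labs reported.

```python
# Throughput figures quoted in the article (tokens per second).
SMALL_MODEL_TOK_PER_SEC = 39.31    # 260K-parameter Llama-architecture model
LARGE_MODEL_TOK_PER_SEC = 0.0093   # 1B-parameter Llama 3.2 model

# Hypothetical output length, used only for illustration.
STORY_TOKENS = 100


def seconds_per_token(tokens_per_second: float) -> float:
    """Return the average time needed to generate a single token."""
    return 1.0 / tokens_per_second


def generation_time(num_tokens: int, tokens_per_second: float) -> float:
    """Return the total time in seconds to generate num_tokens tokens."""
    return num_tokens * seconds_per_token(tokens_per_second)


if __name__ == "__main__":
    for name, rate in [
        ("260K model", SMALL_MODEL_TOK_PER_SEC),
        ("1B model", LARGE_MODEL_TOK_PER_SEC),
    ]:
        per_token = seconds_per_token(rate)
        total = generation_time(STORY_TOKENS, rate)
        print(f"{name}: {per_token:.2f} s/token, "
              f"{total / 60:.1f} min for {STORY_TOKENS} tokens")

    slowdown = SMALL_MODEL_TOK_PER_SEC / LARGE_MODEL_TOK_PER_SEC
    print(f"The 1B model is roughly {slowdown:.0f}x slower per token.")
```

At the quoted rates, the small model finishes a short story in a few seconds, while the 1 billion parameter model needs well over a minute for every single token.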

As for why the team is trying so hard to run AI models that typically require powerful server hardware on such ancient machines, the goal is to develop AI models that can run on even the most modest of devices. EXO Labs’ mission is to “democratize access to AI” and prevent a handful of tech giants from monopolizing this game-changing technology.

35.9 tok/sec on Windows 98 🤯

This is a 260K LLM with Llama-architecture.

We also tried out larger models. Results in the blog post. https://t.co/QsViEQLqS9 pic.twitter.com/lRpIjERtSr

– Alex Cheema – e/acc (@alexocheema) December 28, 2024

For this, the company is developing what it calls “BitNet”, a transformer architecture that uses ternary weights to drastically reduce model size. With this architecture, a 7 billion parameter model needs just 1.38GB of storage, making it feasible to run on most budget hardware.
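The 1.38GB figure can be sanity-checked with some quick arithmetic. The sketch below assumes roughly 1.58 bits per ternary weight (an approximation of log2(3), since each weight takes one of three values) and decimal gigabytes. The article does not say how the number was derived, so treat both as assumptions; the 16-bit comparison is likewise added here only for scale.

```python
# Assumed storage cost per weight, in bits. Neither value comes from the
# article: 1.58 approximates log2(3) for a three-valued (ternary) weight,
# and 16 is the width of a common half-precision float format.
TERNARY_BITS_PER_WEIGHT = 1.58
FP16_BITS_PER_WEIGHT = 16.0

PARAMS_7B = 7_000_000_000


def model_size_gb(num_params: int, bits_per_weight: float) -> float:
    """Estimate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    total_bits = num_params * bits_per_weight
    total_bytes = total_bits / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    ternary_gb = model_size_gb(PARAMS_7B, TERNARY_BITS_PER_WEIGHT)
    fp16_gb = model_size_gb(PARAMS_7B, FP16_BITS_PER_WEIGHT)
    print(f"7B model, ternary weights: {ternary_gb:.2f} GB")
    print(f"7B model, 16-bit weights:  {fp16_gb:.2f} GB")
    print(f"Reduction factor: about {fp16_gb / ternary_gb:.1f}x")
```

Under these assumptions the ternary estimate matches the quoted 1.38GB, about a tenth of what the same model would need with 16-bit weights. The estimate covers weights only and ignores any other data stored with the model.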

BitNet is also designed to be CPU-first, avoiding the need for expensive GPUs. More impressively, the architecture can run a staggering 100 billion parameter model on a single CPU while maintaining human reading speeds of 5-7 tokens per second.

If you’re keen to join the locally-run models revolution, EXO Labs is actively seeking contributors. Just check out the full blog post to get a better idea of the mission.