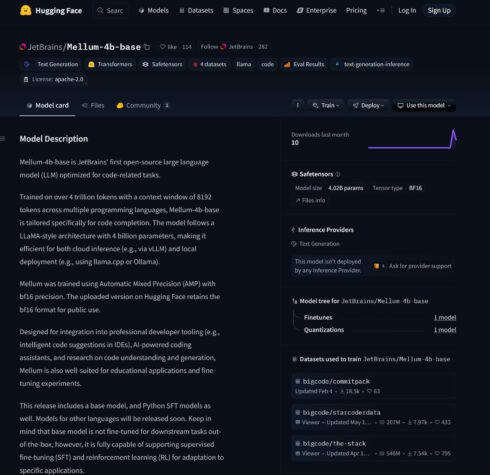

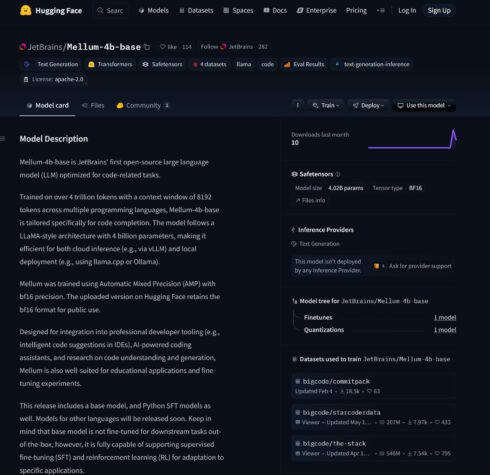

JetBrains has announced that its code completion LLM, Mellum, is now available on Hugging Face as an open source model.

According to the company, Mellum is a “focal model,” meaning that it was built purposely for a specific task, rather than trying to be good at everything. “It’s designed to do one thing really well: code completion,” Anton Semenkin, senior product manager at JetBrains, and Michelle Frost, AI advocate at JetBrains, wrote in a blog post.

Focal models tend to be cheaper to run than larger general-purpose models, which makes them more accessible to teams that don’t have the resources to run large models.

“Think of it like T-shaped skills – a concept where a person has a broad understanding across many topics (the horizontal top bar or their breadth of knowledge), but deep expertise in one specific area (the vertical stem or depth). Focal models follow this same idea: they aren’t built to handle everything. Instead, they specialize and excel at a single task where depth truly delivers value,” the authors wrote.

Mellum currently supports code completion for several popular languages: Java, Kotlin, Python, Go, PHP, C, C++, C#, JavaScript, TypeScript, CSS, HTML, Rust, and Ruby.

There are plans to grow Mellum into a family of focal models, each suited to a specific coding task, such as diff prediction.

The current version of Mellum is best suited to AI/ML researchers exploring AI’s role in software development, and to AI/ML engineers or educators who want a foundation for learning how to build, fine-tune, and adapt domain-specific language models.

“Mellum isn’t a plug-and-play solution. By releasing it on Hugging Face, we’re offering researchers, educators, and advanced teams the opportunity to explore how a purpose-built model works under the hood,” the authors wrote.