Most RAG failures originate at retrieval, not era. Textual content-first pipelines lose format semantics, desk construction, and determine grounding throughout PDF→textual content conversion, degrading recall and precision earlier than an LLM ever runs. Imaginative and prescient-RAG—retrieving rendered pages with vision-language embeddings—immediately targets this bottleneck and reveals materials end-to-end positive factors on visually wealthy corpora.

Pipelines (and the place they fail)

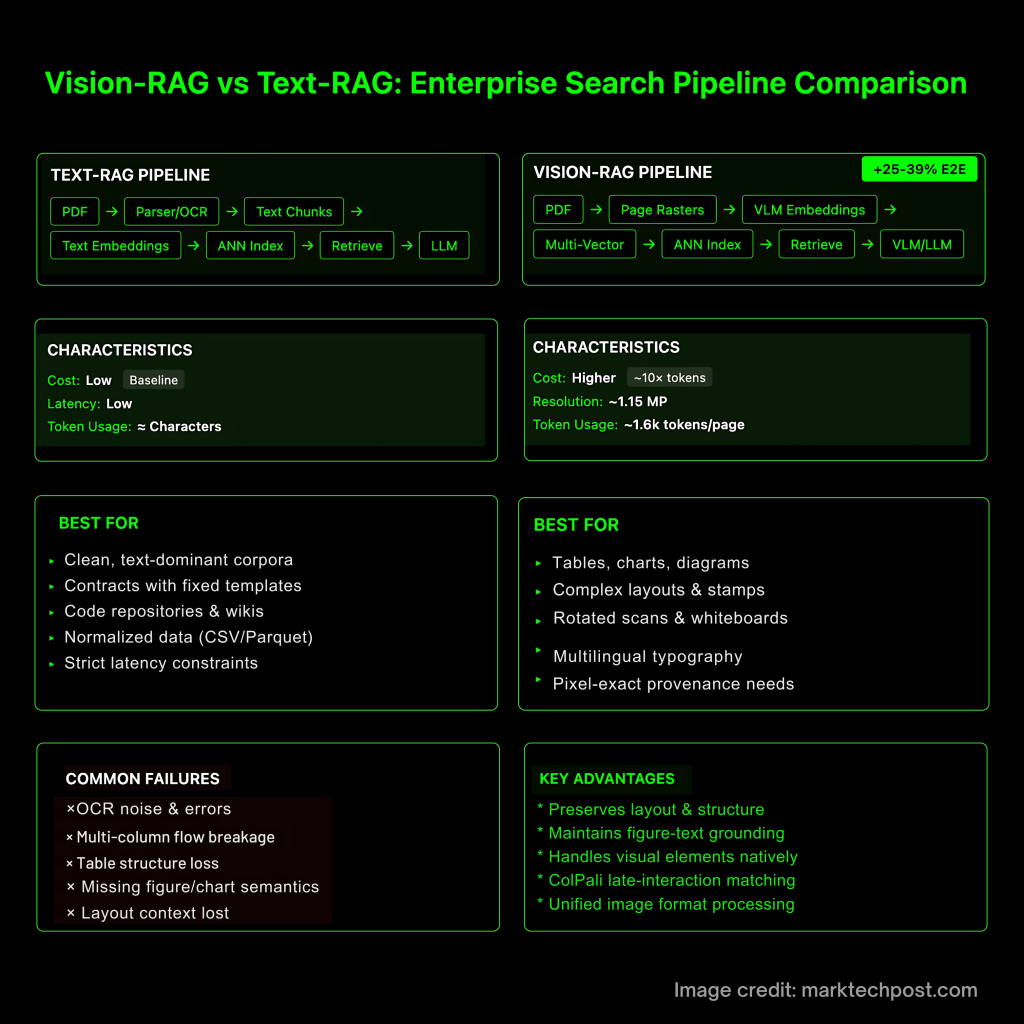

Textual content-RAG. PDF → (parser/OCR) → textual content chunks → textual content embeddings → ANN index → retrieve → LLM. Typical failure modes: OCR noise, multi-column stream breakage, desk cell construction loss, and lacking determine/chart semantics—documented by table- and doc-VQA benchmarks created to measure precisely these gaps.

Imaginative and prescient-RAG. PDF → web page raster(s) → VLM embeddings (typically multi-vector with late-interaction scoring) → ANN index → retrieve → VLM/LLM consumes high-fidelity crops or full pages. This preserves format and figure-text grounding; latest programs (ColPali, VisRAG, VDocRAG) validate the method.

What present proof helps

- Doc-image retrieval works and is less complicated. ColPali embeds web page photographs and makes use of late-interaction matching; on the ViDoRe benchmark it outperforms fashionable textual content pipelines whereas remaining end-to-end trainable.

- Finish-to-end elevate is measurable. VisRAG reviews 25–39% end-to-end enchancment over text-RAG on multimodal paperwork when each retrieval and era use a VLM.

- Unified picture format for real-world docs. VDocRAG reveals that retaining paperwork in a unified picture format (tables, charts, PPT/PDF) avoids parser loss and improves generalization; it additionally introduces OpenDocVQA for analysis.

- Decision drives reasoning high quality. Excessive-resolution assist in VLMs (e.g., Qwen2-VL/Qwen2.5-VL) is explicitly tied to SoTA outcomes on DocVQA/MathVista/MTVQA; constancy issues for ticks, superscripts, stamps, and small fonts.

Prices: imaginative and prescient context is (typically) order-of-magnitude heavier—due to tokens

Imaginative and prescient inputs inflate token counts through tiling, not essentially per-token worth. For GPT-4o-class fashions, complete tokens ≈ base + (tile_tokens × tiles), so 1–2 MP pages may be ~10× price of a small textual content chunk. Anthropic recommends ~1.15 MP caps (~1.6k tokens) for responsiveness. In contrast, Google Gemini 2.5 Flash-Lite costs textual content/picture/video on the similar per-token charge, however giant photographs nonetheless eat many extra tokens. Engineering implication: undertake selective constancy (crop > downsample > full web page).

Design guidelines for manufacturing Imaginative and prescient-RAG

- Align modalities throughout embeddings. Use encoders skilled for textual content↔picture alignment (CLIP-family or VLM retrievers) and, in apply, dual-index: low-cost textual content recall for protection + imaginative and prescient rerank for precision. ColPali’s late-interaction (MaxSim-style) is a robust default for web page photographs.

- Feed high-fidelity inputs selectively. Coarse-to-fine: run BM25/DPR, take top-k pages to a imaginative and prescient reranker, then ship solely ROI crops (tables, charts, stamps) to the generator. This preserves essential pixels with out exploding tokens below tile-based accounting.

- Engineer for actual paperwork.

• Tables: when you should parse, use table-structure fashions (e.g., PubTables-1M/TATR); in any other case desire image-native retrieval.

• Charts/diagrams: anticipate tick- and legend-level cues; decision should retain these. Consider on chart-focused VQA units.

• Whiteboards/rotations/multilingual: web page rendering avoids many OCR failure modes; multilingual scripts and rotated scans survive the pipeline.

• Provenance: retailer web page hashes and crop coordinates alongside embeddings to breed actual visible proof utilized in solutions.

| Commonplace | Textual content-RAG | Imaginative and prescient-RAG |

|---|---|---|

| Ingest pipeline | PDF → parser/OCR → textual content chunks → textual content embeddings → ANN | PDF → web page render(s) → VLM web page/crop embeddings (typically multi-vector, late interplay) → ANN. ColPali is a canonical implementation. |

| Main failure modes | Parser drift, OCR noise, multi-column stream breakage, desk construction loss, lacking determine/chart semantics. Benchmarks exist as a result of these errors are frequent. | Preserves format/figures; failures shift to decision/tiling selections and cross-modal alignment. VDocRAG formalizes “unified picture” processing to keep away from parsing loss. |

| Retriever illustration | Single-vector textual content embeddings; rerank through lexical or cross-encoders | Web page-image embeddings with late interplay (MaxSim-style) seize native areas; improves page-level retrieval on ViDoRe. |

| Finish-to-end positive factors (vs Textual content-RAG) | Baseline | +25–39% E2E on multimodal docs when each retrieval and era are VLM-based (VisRAG). |

| The place it excels | Clear, text-dominant corpora; low latency/price | Visually wealthy/structured docs: tables, charts, stamps, rotated scans, multilingual typography; unified web page context helps QA. |

| Decision sensitivity | Not relevant past OCR settings | Reasoning high quality tracks enter constancy (ticks, small fonts). Excessive-res doc VLMs (e.g., Qwen2-VL household) emphasize this. |

| Price mannequin (inputs) | Tokens ≈ characters; low-cost retrieval contexts | Picture tokens develop with tiling: e.g., OpenAI base+tiles formulation; Anthropic steerage ~1.15 MP ≈ ~1.6k tokens. Even when per-token worth is equal (Gemini 2.5 Flash-Lite), high-res pages eat much more tokens. |

| Cross-modal alignment want | Not required | Crucial: textual content↔picture encoders should share geometry for combined queries; ColPali/ViDoRe exhibit efficient page-image retrieval aligned to language duties. |

| Benchmarks to trace | DocVQA (doc QA), PubTables-1M (desk construction) for parsing-loss diagnostics. | ViDoRe (web page retrieval), VisRAG (pipeline), VDocRAG (unified-image RAG). |

| Analysis method | IR metrics plus textual content QA; could miss figure-text grounding points | Joint retrieval+gen on visually wealthy suites (e.g., OpenDocVQA below VDocRAG) to seize crop relevance and format grounding. |

| Operational sample | One-stage retrieval; low-cost to scale | Coarse-to-fine: textual content recall → imaginative and prescient rerank → ROI crops to generator; retains token prices bounded whereas preserving constancy. (Tiling math/pricing inform budgets.) |

| When to desire | Contracts/templates, code/wikis, normalized tabular information (CSV/Parquet) | Actual-world enterprise docs with heavy format/graphics; compliance workflows needing pixel-exact provenance (web page hash + crop coords). |

| Consultant programs | DPR/BM25 + cross-encoder rerank | ColPali (ICLR’25) imaginative and prescient retriever; VisRAG pipeline; VDocRAG unified picture framework. |

When Textual content-RAG continues to be the precise default?

- Clear, text-dominant corpora (contracts with fastened templates, wikis, code)

- Strict latency/price constraints for brief solutions

- Information already normalized (CSV/Parquet)—skip pixels and question the desk retailer

Analysis: measure retrieval + era collectively

Add multimodal RAG benchmarks to your harness—e.g., M²RAG (multi-modal QA, captioning, fact-verification, reranking), REAL-MM-RAG (real-world multi-modal retrieval), and RAG-Examine (relevance + correctness metrics for multi-modal context). These catch failure instances (irrelevant crops, figure-text mismatch) that text-only metrics miss.

Abstract

Textual content-RAG stays environment friendly for clear, text-only information. Imaginative and prescient-RAG is the sensible default for enterprise paperwork with format, tables, charts, stamps, scans, and multilingual typography. Groups that (1) align modalities, (2) ship selective high-fidelity visible proof, and (3) consider with multimodal benchmarks persistently get larger retrieval precision and higher downstream solutions—now backed by ColPali (ICLR 2025), VisRAG’s 25–39% E2E elevate, and VDocRAG’s unified image-format outcomes.