The speed and scalability of data used in applications, which pair closely with its cost, are critical concerns for every development team. This blog describes how we optimized Rockset’s hot storage tier to improve efficiency by more than 200%. We delve into how we architect for efficiency by leveraging new hardware, maximizing the use of available storage, implementing better orchestration techniques and using snapshots for data durability. With these efficiency gains, we were able to reduce costs while keeping the same performance and pass the savings along to users. Rockset’s new tiered pricing is as low as $0.13/GB-month, making real-time data more affordable than ever before.

Rockset’s hot storage layer

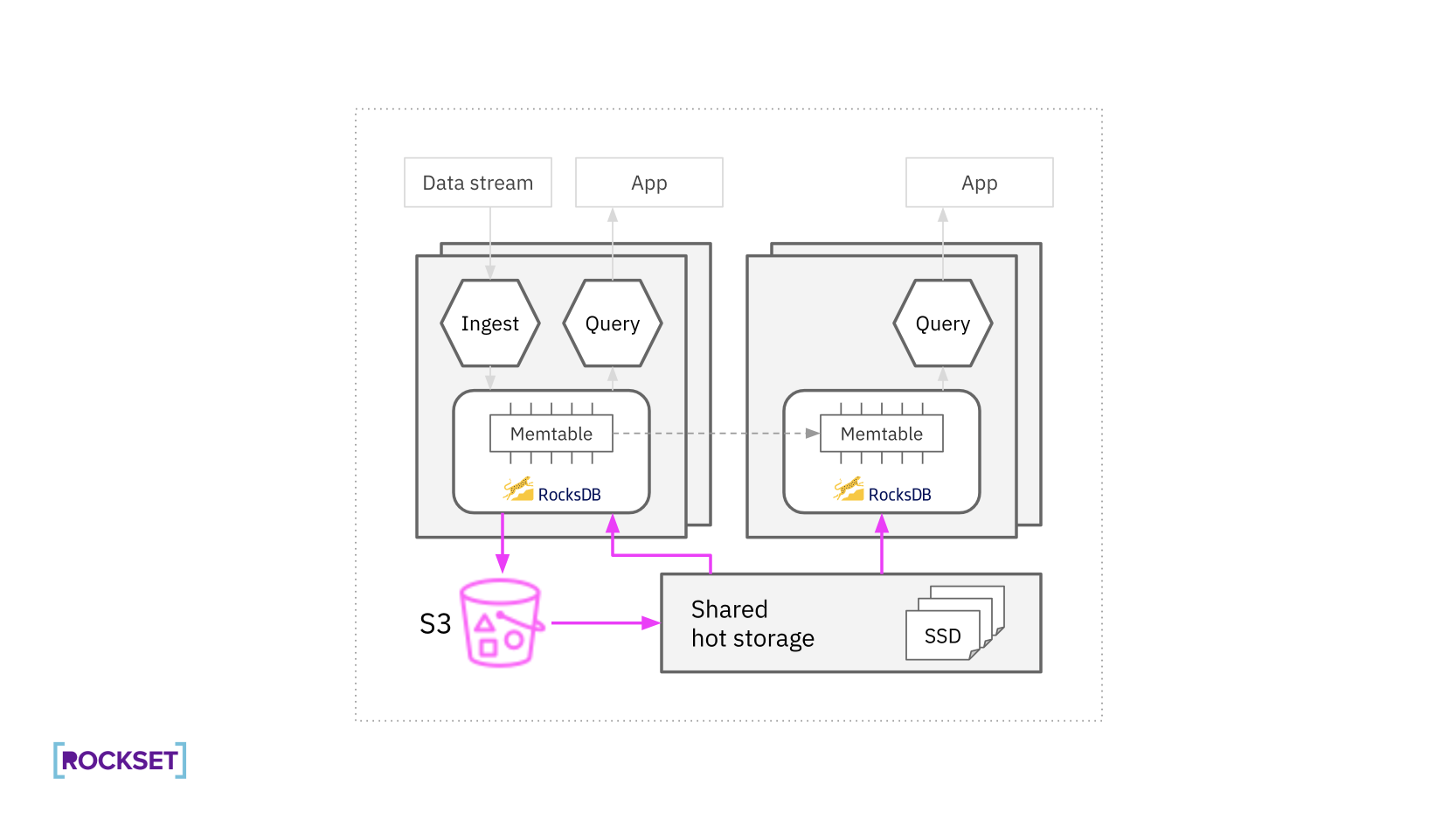

Rockset’s storage solution is an SSD-based cache layered on top of Amazon S3, designed to deliver consistent low-latency query responses. This setup effectively bypasses the latency traditionally associated with retrieving data directly from object storage and eliminates any fetching costs.

Rockset’s caching strategy boasts a 99.9997% cache hit rate, achieving near-perfect caching efficiency on top of S3. Over the past year, Rockset has embarked on a series of initiatives aimed at enhancing the cost-efficiency of its advanced caching system. We focused our efforts on accommodating the scaling needs of users, ranging from tens to hundreds of terabytes of storage, without compromising on the crucial aspect of low-latency performance.

Rockset’s novel architecture has compute-compute separation, allowing independent scaling of ingest compute from query compute. Rockset provides sub-second latency for data inserts, updates, and deletes. Storage costs, performance and availability are unaffected by ingestion compute or query compute. This unique architecture allows users to:

- Isolate streaming ingest and query compute, eliminating CPU contention.

- Run multiple apps on shared real-time data. No replicas required.

- Scale concurrency quickly. Scale out in seconds and avoid overprovisioning compute.

The combination of storage-compute and compute-compute separation resulted in users bringing onboard new workloads at larger scale, which unsurprisingly added to their data footprint. The larger data footprints challenged us to rethink the hot storage tier for cost effectiveness. Before highlighting the optimizations made, we first want to explain the rationale for building a hot storage tier.

Why Use a Hot Storage Tier?

Rockset is unique in its choice to maintain a hot storage tier. Databases like Elasticsearch rely on locally-attached storage, and data warehouses like ClickHouse Cloud use object storage to serve queries that don’t fit into memory.

When it comes to serving applications, multiple queries run on large-scale data in a short window of time, typically under a second. This can quickly cause in-memory cache misses and data fetches from either locally-attached storage or object storage.

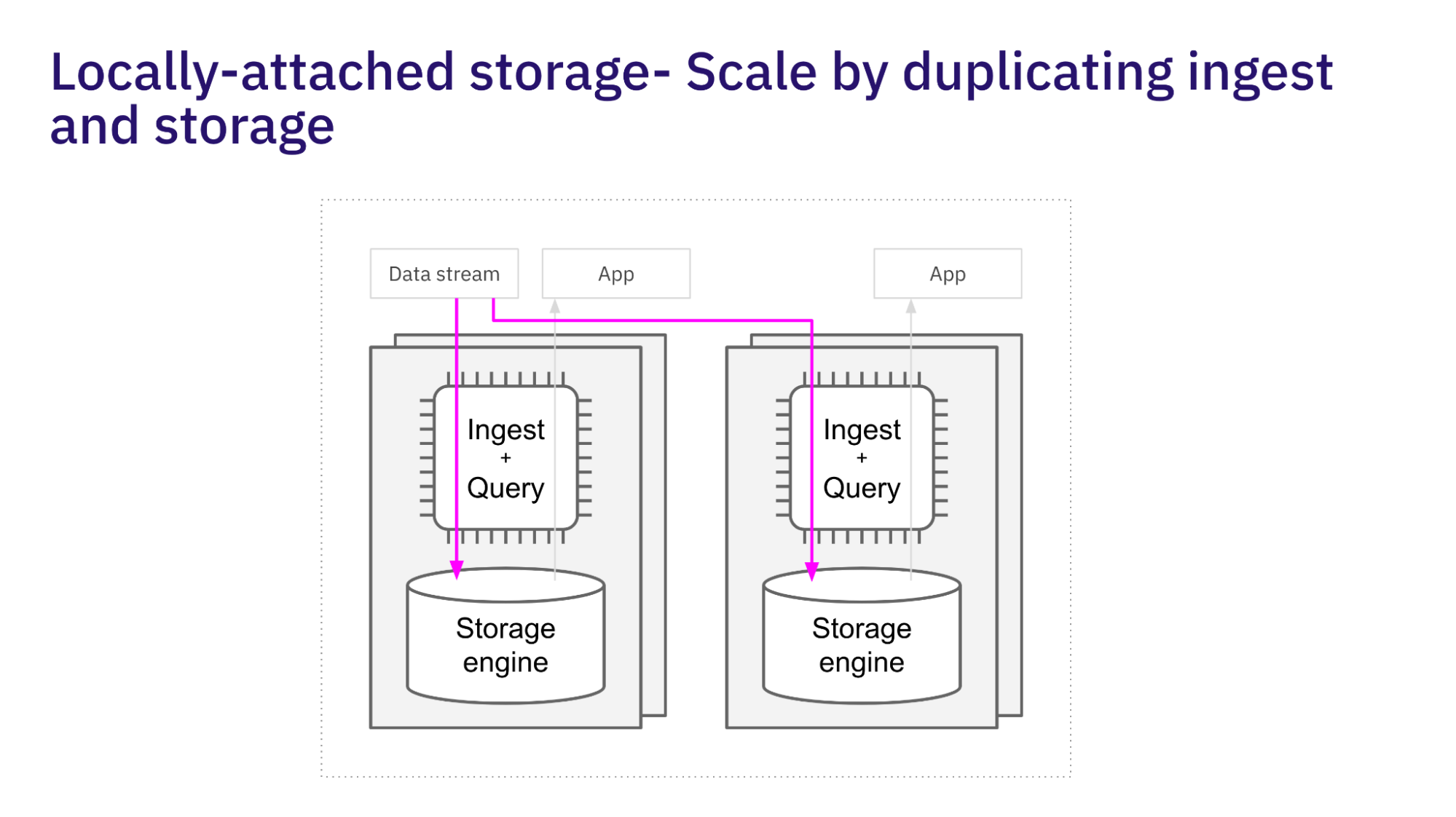

Locally-Attached Storage Limitations

Tightly coupled systems use locally-attached storage for real-time data access and fast response times. Challenges with locally-attached storage include:

- Data and queries cannot scale independently. If the storage size outpaces compute requirements, these systems end up overprovisioned for compute.

- Scaling is slow and error prone. Scaling the cluster requires copying and moving data, which is a slow process.

- High availability is maintained using replicas, which hurts disk utilization and increases storage costs.

- Every replica needs to process incoming data. This results in write amplification and duplication of ingestion work.

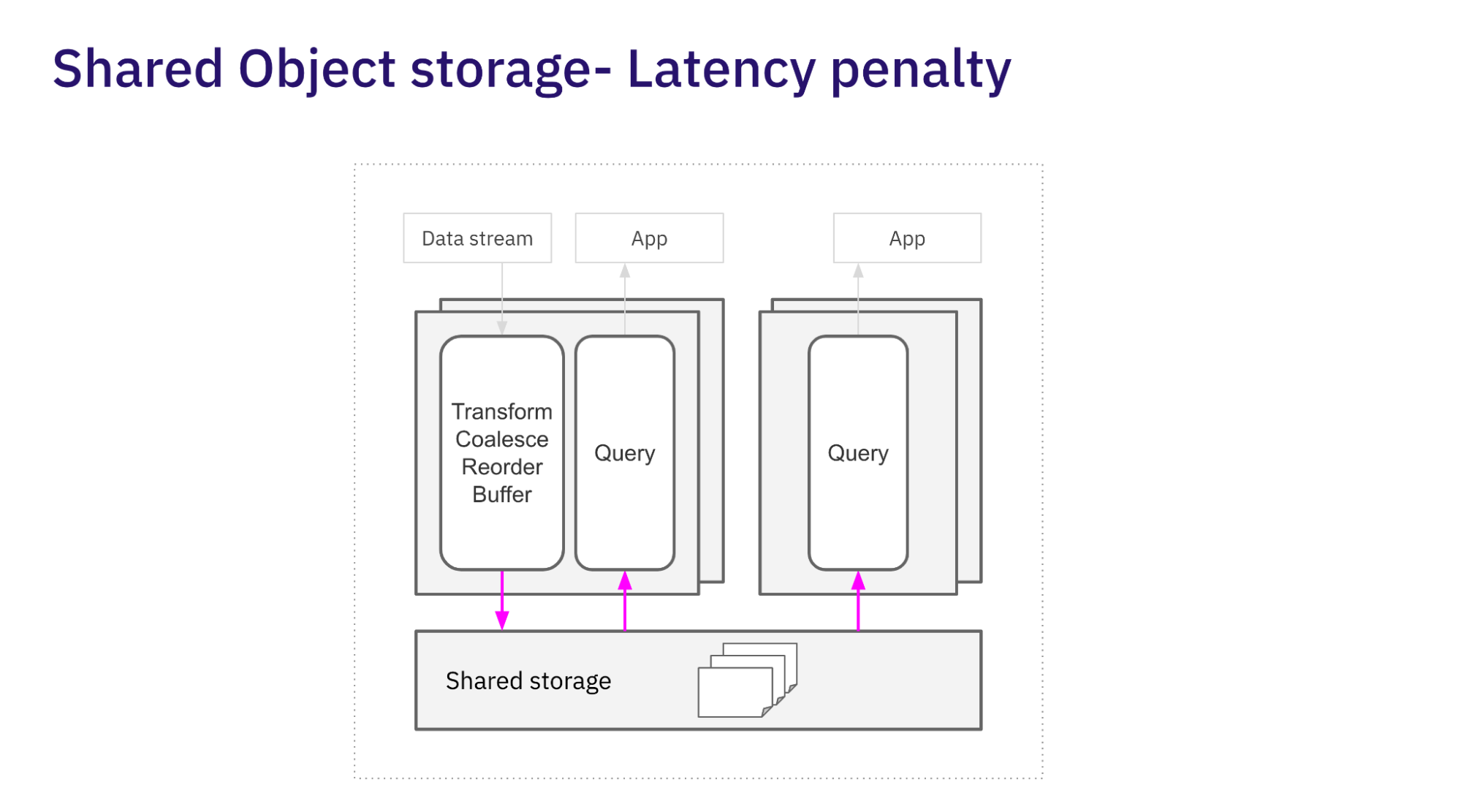

Shared Object Storage Limitations

Creating a disaggregated architecture using cloud object storage removes the contention issues of locally-attached storage, but it introduces new challenges:

- Added latency, especially for random reads and writes. Internal benchmarking comparing Rockset to S3 saw <1 ms reads from Rockset and ~100 ms reads from S3.

- Overprovisioning memory to avoid reads from object storage for latency-sensitive applications.

- High data latency, usually on the order of minutes. Data warehouses buffer ingest and compress data to optimize for scan operations, which adds time between when data is ingested and when it is queryable.
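To see why the cache hit rate matters so much given those read latencies, here is a back-of-the-envelope sketch. It assumes a simple two-tier model in which a hit costs one hot storage read and a miss costs one S3 read. The function name and the 99% comparison point are illustrative, and the 1 ms figure is used as an upper bound for the "<1 ms" reads quoted above.

```python
def expected_read_latency_ms(hit_rate, hit_ms, miss_ms):
    """Blended read latency for a two-tier cache (simplified model)."""
    return hit_rate * hit_ms + (1.0 - hit_rate) * miss_ms

HOT_READ_MS = 1.0    # upper bound for the "<1 ms" hot storage reads
S3_READ_MS = 100.0   # approximate S3 read latency from the benchmark

# Hit rate quoted earlier in this post.
blended = expected_read_latency_ms(0.999997, HOT_READ_MS, S3_READ_MS)

# Hypothetical lower hit rate, for comparison only.
blended_at_99 = expected_read_latency_ms(0.99, HOT_READ_MS, S3_READ_MS)

print(f"99.9997% hit rate: {blended:.6f} ms")
print(f"99% hit rate:      {blended_at_99:.2f} ms")
```

Under this model, a 99.9997% hit rate keeps the blended latency within a fraction of a microsecond of the hot storage latency, while a 99% hit rate would roughly double it.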

Amazon has also noted the latency of its cloud object store and recently introduced S3 Express One Zone with single-digit millisecond data access. There are several differences to call out between the design and pricing of S3 Express One Zone and Rockset’s hot storage tier. For one, S3 Express One Zone is intended to be used as a cache in a single availability zone, whereas Rockset is designed to use hot storage for fast access and S3 for durability. We also have different pricing: S3 Express One Zone prices include a per-GB cost as well as costs for put, copy, post and list requests. Rockset’s pricing is per-GB only.

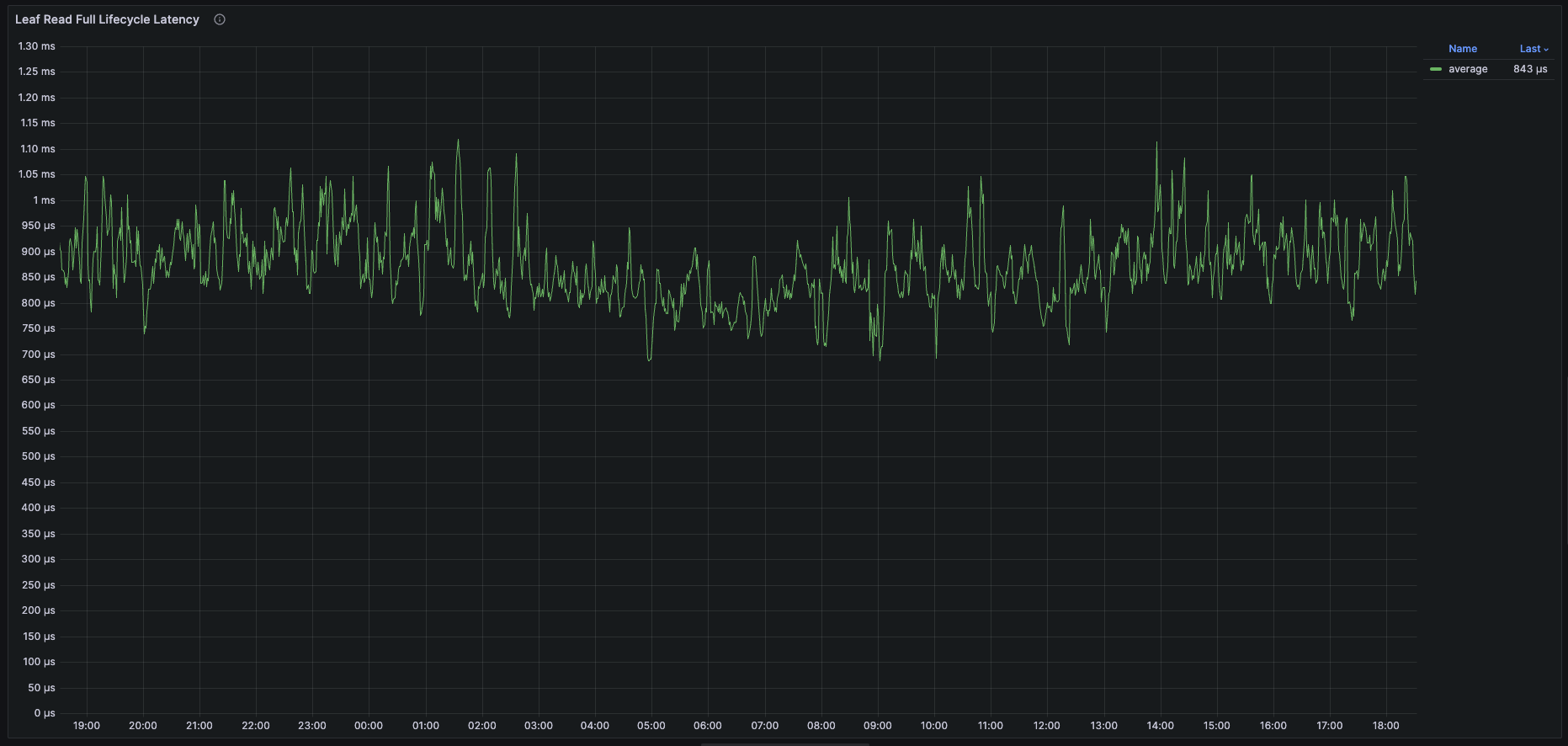

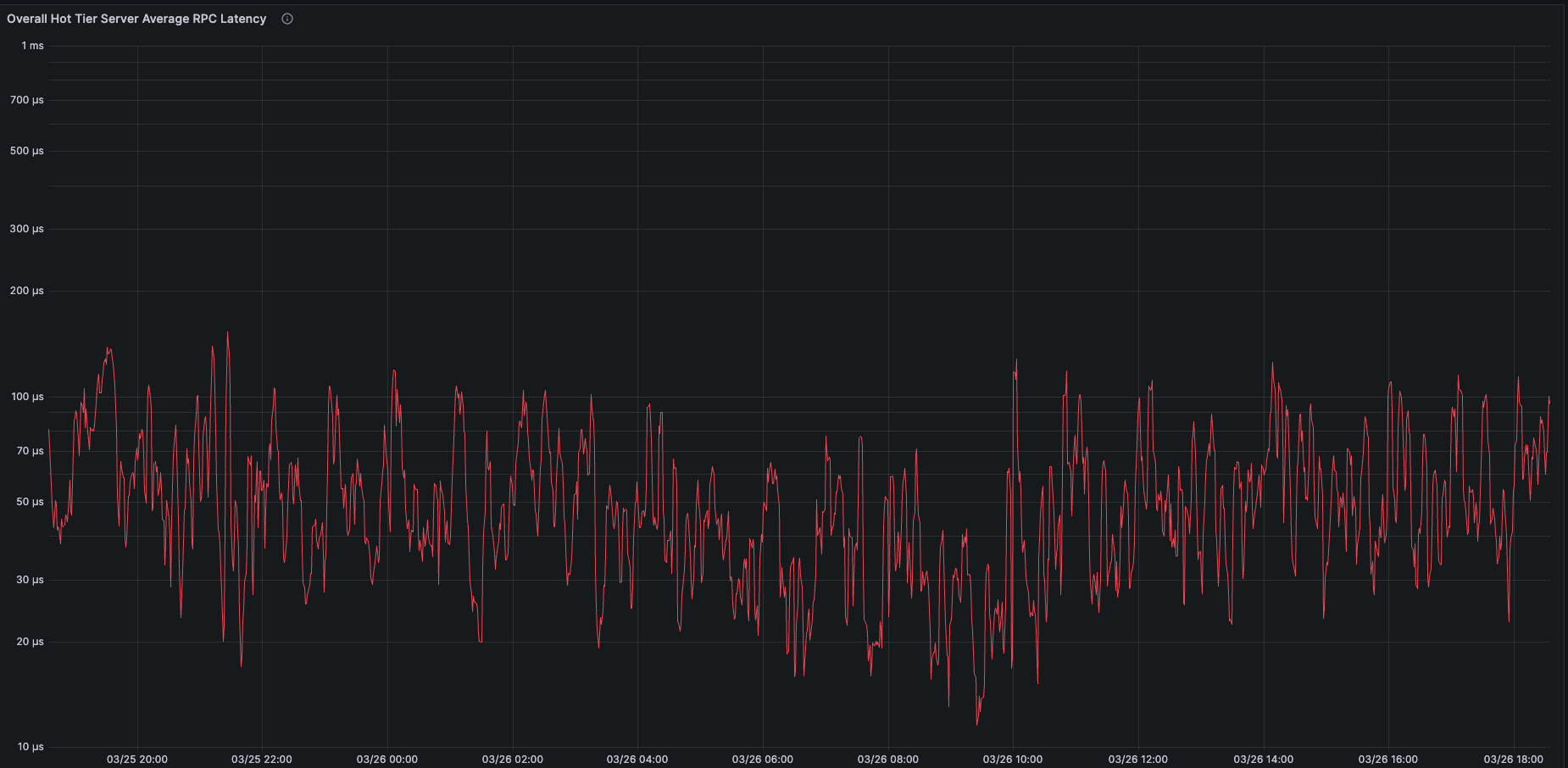

The biggest difference between S3 Express One Zone and Rockset is the performance. Looking at the graph of end-to-end latency over a 24-hour period, we see that Rockset’s mean latency between the compute node and hot storage consistently stays at 1 millisecond or below.

If we examine just server-side latency, the average read is ~100 microseconds or less.

Reducing the Cost of the Hot Storage Tier

To support tens to hundreds of terabytes cost-effectively in Rockset, we leverage new hardware profiles, maximize the use of available storage, implement better orchestration techniques and use snapshots for data recovery.

Leverage Cost-Efficient Hardware

Because Rockset separates hot storage from compute, it can choose hardware profiles that are ideally suited to hot storage. Using the latest network- and storage-optimized cloud instances, which provide the best price-performance per GB, we have been able to decrease costs by 17% and pass these savings on to customers.

Because we observed that hot storage performance on Rockset is usually bound by IOPS and network bandwidth, we found an EC2 instance with slightly lower RAM and CPU resources but the same amount of network bandwidth and IOPS. Based on production workloads and internal benchmarking, we saw similar performance on the new lower-cost hardware and were able to pass the savings on to users.

Maximize available storage

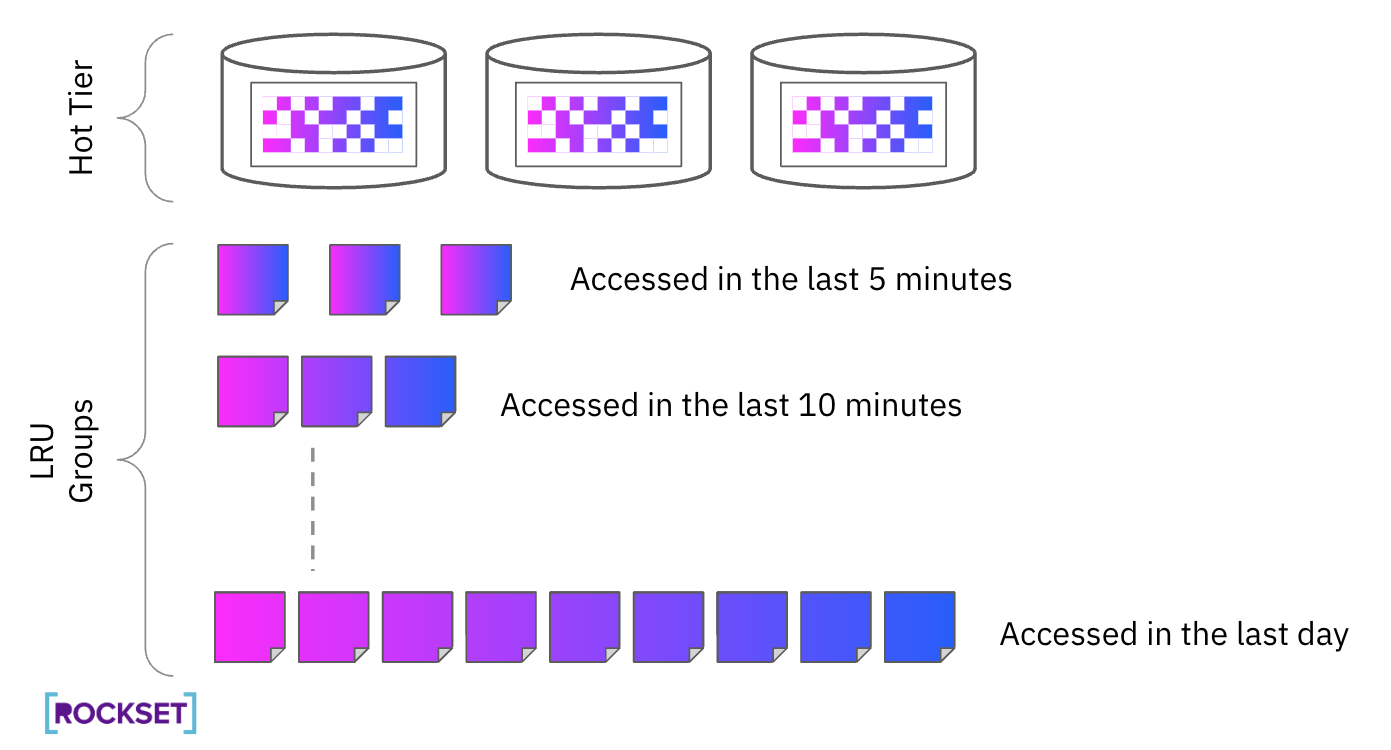

To maintain the highest performance standards, we initially designed the hot storage tier to contain two copies of each data block. This ensures that users get reliable, consistent performance at all times. When we realized two copies had too high an impact on storage costs, we challenged ourselves to rethink how to maintain performance guarantees while storing only a partial second copy.

We use an LRU (Least Recently Used) policy to ensure that the data needed for querying is readily available even if one of the copies is lost. From production testing we found that storing secondary copies for ~30% of the data is sufficient to avoid going to S3 to retrieve data, even in the case of a storage node crash.
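As a rough illustration of the idea, the sketch below tracks which blocks keep a secondary copy using an LRU policy capped at a fraction of the total block count. It is a simplified model under stated assumptions, not Rockset’s implementation: the class name `SecondaryCopySet`, its methods, and the block-count-based capacity are all hypothetical.

```python
from collections import OrderedDict

class SecondaryCopySet:
    """Decides which blocks keep a second copy, using an LRU policy.

    Hypothetical sketch: capacity is expressed as a fraction of the
    total number of blocks (the post cites ~30% as sufficient).
    """

    def __init__(self, total_blocks, fraction=0.30):
        self.capacity = max(1, round(total_blocks * fraction))
        self._lru = OrderedDict()  # block_id -> True, oldest first

    def record_access(self, block_id):
        """Mark a block as recently used, evicting the oldest if full."""
        if block_id in self._lru:
            self._lru.move_to_end(block_id)
        else:
            self._lru[block_id] = True
            if len(self._lru) > self.capacity:
                self._lru.popitem(last=False)  # drop least recently used

    def has_secondary(self, block_id):
        """True if a second copy of this block is currently retained."""
        return block_id in self._lru

# Usage: 10 blocks, so secondary copies are kept for 3 of them.
copies = SecondaryCopySet(total_blocks=10)
for block in [1, 2, 3, 1, 4]:
    copies.record_access(block)

print(copies.has_secondary(1))  # block 1 was re-accessed, so it is kept
print(copies.has_secondary(2))  # block 2 was least recently used, evicted
```

The design intent is that recently queried blocks are the ones most likely to be queried again, so they are the ones worth protecting with a second copy if a storage node is lost.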

Implement Better Orchestration Techniques

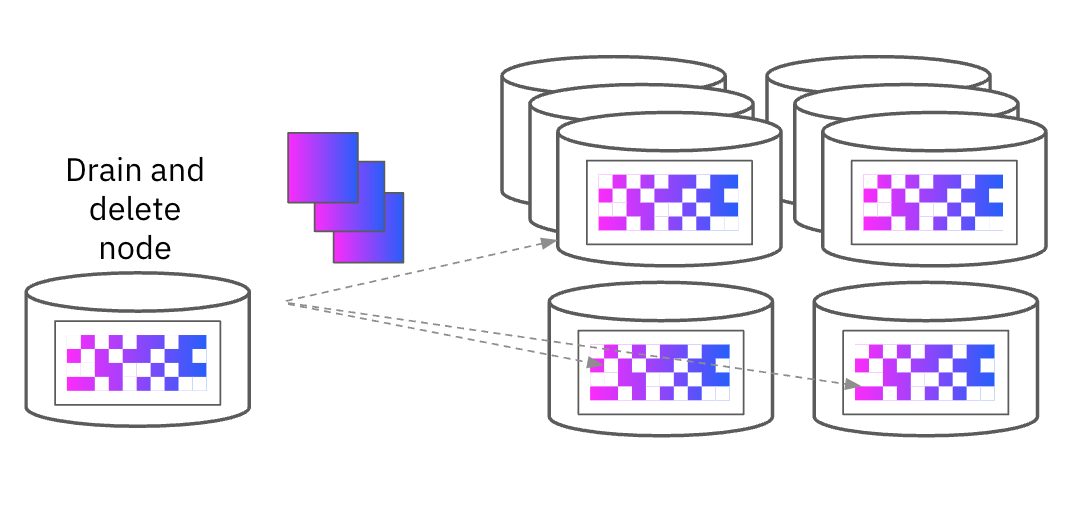

While adding nodes to the hot storage tier is straightforward, removing nodes to optimize for costs requires more orchestration. If we removed a node and relied on the S3 backup to restore data to the hot tier, users could experience added latency. Instead, we designed a “pre-draining” state where the node designated for deletion sends its data to the other storage nodes in the cluster. Once all the data is copied to the other nodes, we can safely remove the node from the cluster and avoid any performance impact. We use this same process for any upgrades to ensure consistent cache performance.
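The pre-draining flow can be sketched roughly as follows. This is a toy in-memory model, not Rockset’s orchestration code: the `HotStorageCluster` class, the `NodeState` enum, and the "send each block to the least-loaded active node" placement rule are assumptions made for illustration.

```python
from enum import Enum

class NodeState(Enum):
    ACTIVE = "active"
    PRE_DRAINING = "pre_draining"

class HotStorageCluster:
    """Toy model of a hot storage cluster (hypothetical names)."""

    def __init__(self):
        self.blocks = {}  # node_id -> set of block ids held by that node
        self.state = {}   # node_id -> NodeState

    def add_node(self, node_id, blocks=()):
        self.blocks[node_id] = set(blocks)
        self.state[node_id] = NodeState.ACTIVE

    def remove_node(self, node_id):
        """Drain a node onto its peers, then remove it from the cluster."""
        targets = [n for n, s in self.state.items()
                   if s is NodeState.ACTIVE and n != node_id]
        if not targets:
            raise RuntimeError("no active node left to receive data")

        # The node stops being a placement target but still holds its data.
        self.state[node_id] = NodeState.PRE_DRAINING

        # Copy every block to the currently least-loaded active node.
        for block in sorted(self.blocks[node_id]):
            target = min(targets, key=lambda n: len(self.blocks[n]))
            self.blocks[target].add(block)

        # Only after all blocks are copied is the node actually removed,
        # so no read has to fall back to the S3 backup.
        del self.blocks[node_id]
        del self.state[node_id]

# Usage: drain node "c" without losing any hot data.
cluster = HotStorageCluster()
cluster.add_node("a", [1, 2])
cluster.add_node("b", [3])
cluster.add_node("c", [4, 5, 6])
cluster.remove_node("c")

remaining = set().union(*cluster.blocks.values())
print(sorted(remaining))
```

The key property is the ordering: data is copied first and the node is removed last, so the set of blocks available in the hot tier never shrinks during a scale-down or upgrade.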

Use Snapshots for Data Recovery

Initially, S3 was configured to archive every update, insertion and deletion of documents in the system for recovery purposes. However, as Rockset’s usage expanded, this approach led to storage bloat in S3. To address this, we implemented a strategy based on snapshots, which reduced the volume of data stored in S3. Snapshots allow Rockset to create a low-cost frozen copy of data that can be restored later. Snapshots don’t duplicate the entire dataset; instead, they only record the changes since the previous snapshot. This reduced the storage required for data recovery by 40%.
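To make the "only record the changes since the previous snapshot" idea concrete, here is a minimal sketch that models a dataset as a dictionary of documents. It is an illustration of incremental snapshots in general, not Rockset’s snapshot format; `take_snapshot`, `restore`, and the `_DELETED` marker are hypothetical names.

```python
_DELETED = object()  # marker for documents removed since the last snapshot

def take_snapshot(previous_state, current_state):
    """Return only the changes between two dataset states."""
    delta = {}
    for key, value in current_state.items():
        if previous_state.get(key, _DELETED) != value:
            delta[key] = value           # new or updated document
    for key in previous_state:
        if key not in current_state:
            delta[key] = _DELETED        # deleted document
    return delta

def restore(snapshots):
    """Rebuild the dataset by replaying snapshots in order."""
    state = {}
    for delta in snapshots:
        for key, value in delta.items():
            if value is _DELETED:
                state.pop(key, None)
            else:
                state[key] = value
    return state

# Usage: three versions of a small dataset.
v1 = {"a": 1, "b": 2}
v2 = {"a": 1, "b": 3, "c": 4}
v3 = {"a": 1, "c": 4}

s1 = take_snapshot({}, v1)   # first snapshot holds everything
s2 = take_snapshot(v1, v2)   # only "b" (updated) and "c" (added)
s3 = take_snapshot(v2, v3)   # only the deletion of "b"

restored = restore([s1, s2, s3])
print(restored)
```

Each snapshot after the first stores only what changed, which is why the storage needed for recovery grows with the rate of change rather than with the size of the dataset.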

Hot storage at 100s of TBs scale

The hot storage layer at Rockset was designed to provide predictable query performance for in-application search and analytics. It creates a shared storage layer that any compute instance can access.

With the new hot storage pricing as low as $0.13/GB-month, Rockset is able to support workloads in the 10s to 100s of terabytes affordably. We’re continuously looking to make hot storage more affordable and pass along cost savings to customers. So far, we have optimized Rockset’s hot storage tier to improve efficiency by more than 200%.

You can learn more about the Rockset storage architecture using RocksDB on the engineering blog and also see storage pricing for your workload in the pricing calculator.