We’re excited to announce that Mosaic AI Model Training now supports the full context length of 131K tokens when fine-tuning the Meta Llama 3.1 model family. With this new capability, Databricks customers can build even higher-quality Retrieval Augmented Generation (RAG) or tool use systems by using long context length enterprise data to create specialized models.

The size of an LLM’s input prompt is determined by its context length. Our customers are often limited by short context lengths, especially in use cases like RAG and multi-document analysis. Meta Llama 3.1 models have a long context length of 131K tokens. For comparison, The Great Gatsby is ~72K tokens. Llama 3.1 models enable reasoning over an extensive corpus of data, reducing the need for chunking and re-ranking in RAG or enabling more tool descriptions for agents.

Fine-tuning allows customers to use their own enterprise data to specialize existing models. Recent techniques such as Retrieval Augmented Fine-tuning (RAFT) combine fine-tuning with RAG to teach the model to ignore irrelevant information in the context, improving output quality. For tool use, fine-tuning can specialize models to better use novel tools and APIs that are specific to a customer’s enterprise systems. In both cases, fine-tuning at long context lengths enables models to reason over a large amount of input information.

The Databricks Data Intelligence Platform enables our customers to securely build high-quality AI systems using their own data. To make sure our customers can leverage state-of-the-art Generative AI models, it is important to support features like efficiently fine-tuning Llama 3.1 on long context lengths. In this blog post, we elaborate on some of our recent optimizations that make Mosaic AI Model Training a best-in-class service for securely building and fine-tuning GenAI models on enterprise data.

Long Context Length Fine-tuning

Long sequence length training poses a challenge primarily because of its increased memory requirements. During LLM training, GPUs need to store intermediate results (i.e., activations) in order to calculate gradients for the optimization process. As the sequence length of training examples increases, so does the memory required to store these activations, potentially exceeding GPU memory limits.
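To make the scaling concrete, here is a rough back-of-the-envelope sketch. It assumes each transformer layer keeps a fixed number of `[seq_len, hidden_size]` tensors alive for the backward pass. The `tensors_per_layer` constant and the model dimensions are illustrative assumptions, not measurements of our training stack, and real footprints depend on kernels, checkpointing, and precision.

```python
def activation_bytes(seq_len, hidden_size, num_layers,
                     bytes_per_value=2, tensors_per_layer=16):
    """Rough activation-memory estimate for one training sequence.

    Assumes every layer stores `tensors_per_layer` tensors of shape
    [seq_len, hidden_size]. `tensors_per_layer` is a made-up constant
    chosen only to illustrate linear growth in seq_len.
    """
    return seq_len * hidden_size * num_layers * tensors_per_layer * bytes_per_value


# Hypothetical model dimensions, for illustration only.
hidden_size, num_layers = 4096, 32

for seq_len in (8_192, 32_768, 131_072):
    gib = activation_bytes(seq_len, hidden_size, num_layers) / 2**30
    print(f"{seq_len:>7} tokens -> ~{gib:.0f} GiB of activations")
```

Under these assumptions the estimate grows linearly with sequence length, so a 16x longer sequence needs roughly 16x the activation memory, which is why a single GPU runs out of room well before the full context length.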

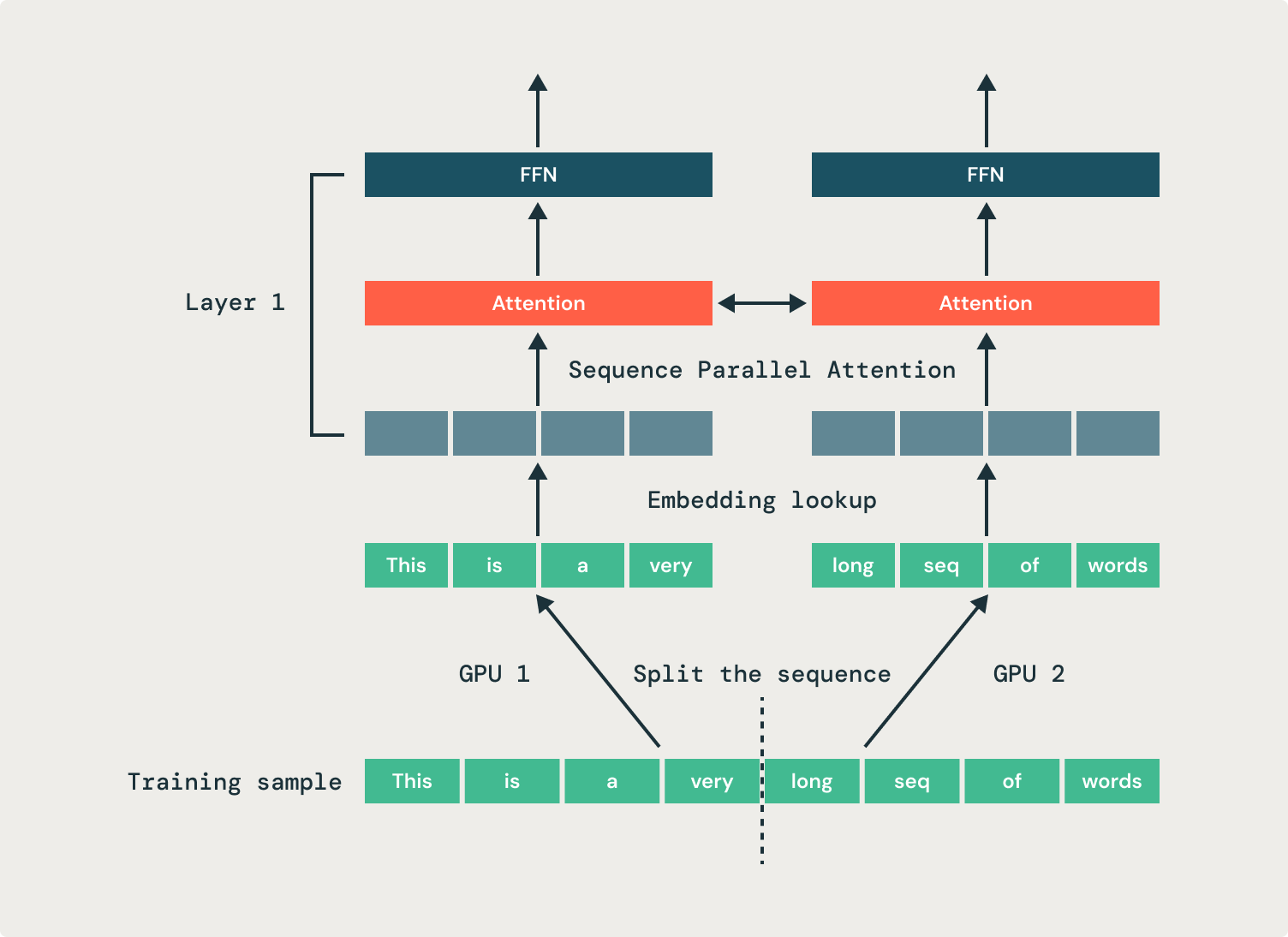

We solve this by employing sequence parallelism, where we split a single sequence across multiple GPUs. This approach distributes the activation memory for a sequence across multiple GPUs, reducing the GPU memory footprint for fine-tuning jobs and improving training efficiency. In the example shown in Figure 1, two GPUs each process half of the same sequence. We use our open source StreamingDataset’s replication feature to share samples across groups of GPUs.
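The data layout can be sketched in a few lines of plain Python. This is only an illustration of the idea: consecutive ranks form a group that receives the same sample, and each rank in the group keeps one contiguous slice of it. The helper names `shard_sequence` and `local_chunk` are hypothetical, and the sketch does not call or reproduce the StreamingDataset implementation.

```python
def shard_sequence(tokens, sp_degree):
    """Split one sequence into `sp_degree` equal, contiguous chunks."""
    if len(tokens) % sp_degree != 0:
        raise ValueError("sequence length must be divisible by sp_degree")
    chunk = len(tokens) // sp_degree
    return [tokens[r * chunk:(r + 1) * chunk] for r in range(sp_degree)]


def local_chunk(samples, rank, sp_degree):
    """Return the slice of data that a given rank would hold.

    Ranks are grouped in blocks of `sp_degree`: every rank in a group sees
    the same sample (replication), then keeps only its own chunk of it.
    """
    sample = samples[rank // sp_degree]      # same sample for the whole group
    return shard_sequence(sample, sp_degree)[rank % sp_degree]


# Two toy "sequences" of 8 token ids each, spread over 4 simulated GPUs.
samples = [list(range(0, 8)), list(range(100, 108))]
for rank in range(4):
    print(rank, local_chunk(samples, rank, sp_degree=2))
```

With a sequence parallelism degree of 2, ranks 0 and 1 each hold half of the first sample and ranks 2 and 3 each hold half of the second, mirroring the two-GPU example in Figure 1.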

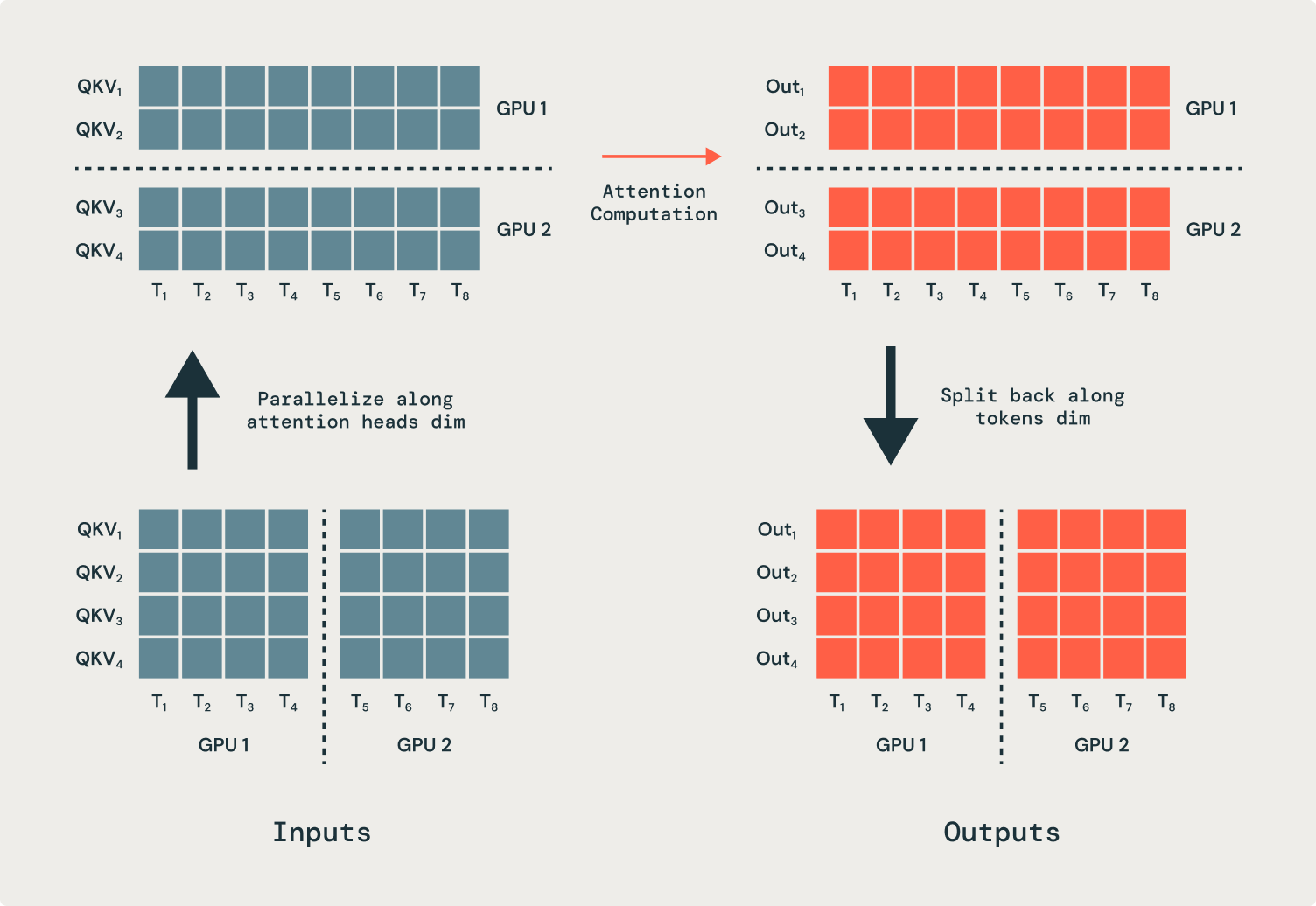

All operations in a transformer are independent of the sequence dimension, with one crucial exception: attention. As a result, the attention operation has to be modified to input and output partial sequences. We parallelize attention heads across many GPUs, which necessitates communication operations (all-to-alls) to move tokens to the correct GPUs for processing. Prior to the attention operation, each GPU has part of every sequence, but each attention head must operate on a full sequence. In the example shown in Figure 2, the first GPU gets sent all the inputs for just the first attention head, and the second GPU gets sent all the inputs for the second attention head. After the attention operation, the outputs are sent back to their original GPUs.
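The exchange pattern can be checked with a small single-process simulation. The sketch below is not our training code: it models each GPU as a Python list, models the all-to-all collective as a transpose of send buffers, and assumes plain causal multi-head attention with the same number of query, key, and value heads. All function names are hypothetical.

```python
import math
import random


def attention(q, k, v):
    """Causal softmax attention for ONE head; q, k, v are [seq][dim] lists."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for i, qi in enumerate(q):
        scores = [scale * sum(a * b for a, b in zip(qi, k[j])) for j in range(i + 1)]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(weights[j] * v[j][d] for j in range(i + 1)) / total
                    for d in range(len(v[0]))])
    return out


def all_to_all(send):
    """Simulated collective: send[src][dst] becomes recv[dst][src]."""
    n = len(send)
    return [[send[src][dst] for src in range(n)] for dst in range(n)]


def seq_to_head(shards, num_gpus):
    """Per GPU: [local_seq][all_heads] -> [full_seq][local_heads]."""
    hpg = len(shards[0][0]) // num_gpus  # heads per GPU
    send = [[[tok[dst * hpg:(dst + 1) * hpg] for tok in shard]
             for dst in range(num_gpus)] for shard in shards]
    return [[tok for chunk in per_src for tok in chunk] for per_src in all_to_all(send)]


def head_to_seq(full, num_gpus):
    """Per GPU: [full_seq][local_heads] -> [local_seq][all_heads]."""
    chunk = len(full[0]) // num_gpus
    send = [[x[dst * chunk:(dst + 1) * chunk] for dst in range(num_gpus)] for x in full]
    return [[sum((src_chunk[t] for src_chunk in per_src), []) for t in range(chunk)]
            for per_src in all_to_all(send)]


def multi_head(q, k, v):
    """Run attention independently per head on [seq][heads][dim] inputs."""
    heads = range(len(q[0]))
    per_head = [attention([t[h] for t in q], [t[h] for t in k], [t[h] for t in v])
                for h in heads]
    return [[per_head[h][t] for h in heads] for t in range(len(q))]


def sequence_parallel_attention(q, k, v, num_gpus):
    chunk = len(q) // num_gpus

    def shard(x):  # each simulated GPU starts with a slice of the sequence
        return [x[r * chunk:(r + 1) * chunk] for r in range(num_gpus)]

    fq, fk, fv = (seq_to_head(shard(x), num_gpus) for x in (q, k, v))
    # Each GPU now sees the full sequence, but only for its own heads.
    outs = [multi_head(fq[r], fk[r], fv[r]) for r in range(num_gpus)]
    local = head_to_seq(outs, num_gpus)  # send outputs back to the original GPUs
    return [tok for shard_out in local for tok in shard_out]


random.seed(0)
S, H, D, P = 8, 4, 3, 2  # tokens, heads, head dim, simulated GPUs


def rand_tensor():
    return [[[random.gauss(0, 1) for _ in range(D)] for _ in range(H)] for _ in range(S)]


q, k, v = rand_tensor(), rand_tensor(), rand_tensor()
parallel = sequence_parallel_attention(q, k, v, P)
reference = multi_head(q, k, v)
max_diff = max(abs(a - b) for pt, rt in zip(parallel, reference)
               for ph, rh in zip(pt, rt) for a, b in zip(ph, rh))
print("max abs difference vs. single-device attention:", max_diff)
```

The two all-to-alls only rearrange which device holds which slice, so the sequence-parallel result should match attention computed on a single device. In a real multi-GPU job the same rearrangement would be performed by a collective communication call rather than a list transpose.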

With sequence parallelism, we’re able to provide full-context-length Llama 3.1 fine-tuning, enabling custom models to understand and reason across a large context.

Optimizing Fine-tuning Performance

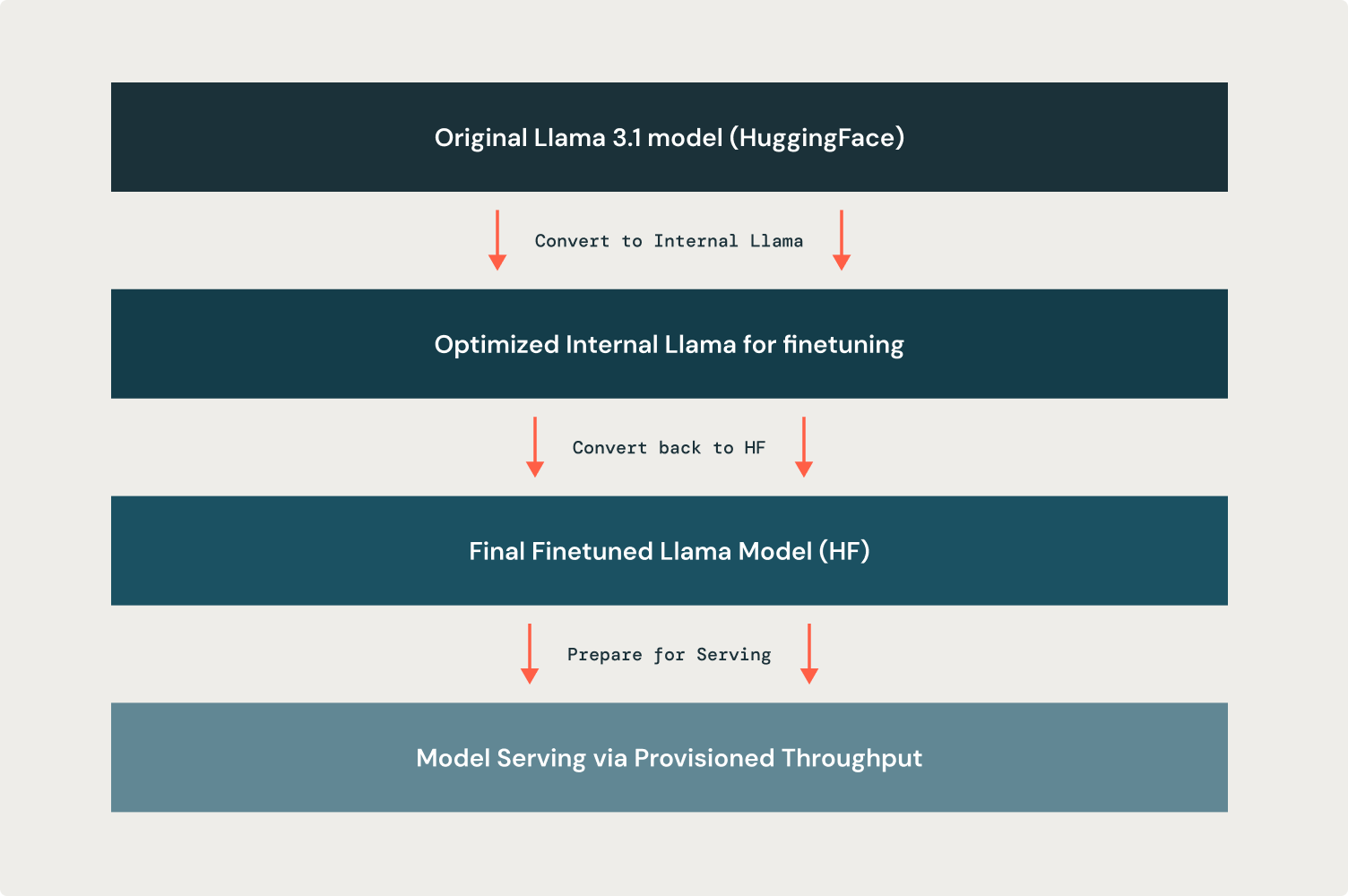

Custom optimizations like sequence parallelism for fine-tuning require us to have fine-grained control over the underlying model implementation. Such customization isn’t possible solely with the existing Llama 3.1 modeling code in HuggingFace. However, for ease of serving and external compatibility, the final fine-tuned model needs to be a Llama 3.1 HuggingFace model checkpoint. Therefore, our fine-tuning solution must be highly optimizable for training, but also able to produce an interoperable output model.

To achieve this, we convert HuggingFace Llama 3.1 models into an equivalent internal Llama representation prior to training. We’ve extensively optimized this internal representation for training efficiency, with improvements such as efficient kernels, selective activation checkpointing, effective memory use, and sequence ID attention masking. As a result, our internal Llama representation enables sequence parallelism while yielding up to 40% higher training throughput and requiring a 40% smaller memory footprint. These improvements in resource utilization translate to better models for our customers, since the ability to iterate quickly helps enable better model quality.
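As one illustration of the techniques listed above, sequence ID attention masking is commonly used when several short examples are packed into a single training sequence: each token carries the ID of the example it came from, and attention is restricted to earlier tokens with the same ID. The sketch below shows that general idea in plain Python. It is an assumption about the technique’s usual form, not a description of our internal kernels, and `sequence_id_causal_mask` is a hypothetical helper.

```python
def sequence_id_causal_mask(sequence_ids):
    """Build a boolean attention mask for packed sequences.

    mask[i][j] is True when token i may attend to token j, i.e. when j is
    not in the future (causal) and both tokens belong to the same example.
    """
    n = len(sequence_ids)
    return [[j <= i and sequence_ids[i] == sequence_ids[j] for j in range(n)]
            for i in range(n)]


# Two examples packed into one row: three tokens of example 0, two of example 1.
mask = sequence_id_causal_mask([0, 0, 0, 1, 1])
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

The printed pattern is block-diagonal and lower-triangular: tokens of the second example never attend to tokens of the first, even though they share a packed row.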

When training is finished, we convert the model from the internal representation back to HuggingFace format, ensuring that the saved artifact is immediately ready for serving via our Provisioned Throughput offering. Figure 3 shows this entire pipeline.

Next Steps

Get started fine-tuning Llama 3.1 today via the UI or programmatically in Python. With Mosaic AI Model Training, you can efficiently customize high-quality and open source models for your business needs, and build data intelligence. Read our documentation (AWS, Azure) and visit our pricing page to get started with fine-tuning LLMs on Databricks.