In today's data-driven world, forms are everywhere, and form data extraction has become essential. Forms collect information efficiently but often require manual processing. That's where intelligent document processing (IDP) comes in.

IDP leverages OCR, AI, and ML to automate form processing, making data extraction faster and more accurate than traditional methods. It isn't always straightforward, since complex layouts and designs can make it challenging. But with the right tools, you can extract data from online and offline forms effectively and with fewer errors.

Take PDF forms, for example. They're great for collecting contact information, but extracting that data can be difficult and expensive. Extraction tools solve this, letting you import names, emails, and other details into Excel, CSV, JSON, or other structured formats.

This blog post explores different scenarios and techniques for extracting data from forms using OCR and deep learning.

Form data extraction transforms raw form data into actionable insights. This process doesn't just read forms; it understands them. It uses advanced algorithms to identify, capture, and categorize information from various form types.

Key components include:

- Optical Character Recognition (OCR): Converts images of text into machine-readable text.

- Intelligent Character Recognition (ICR): Recognizes handwritten characters.

- Natural Language Processing (NLP): Understands the context and meaning of the extracted text.

- Machine Learning: Improves accuracy over time by learning from new data.

These technologies work together to extract data and understand it. In healthcare, for example, an AI-powered extraction tool can process patient intake forms, distinguishing between symptoms, medications, and medical history. It can flag potential drug interactions or alert staff to critical information, all while accurately populating the hospital's database.

Types of Forms and Data That Can Be Extracted

Form data extraction can be applied to a wide variety of document types and adapts to numerous industries. Here are some common examples:

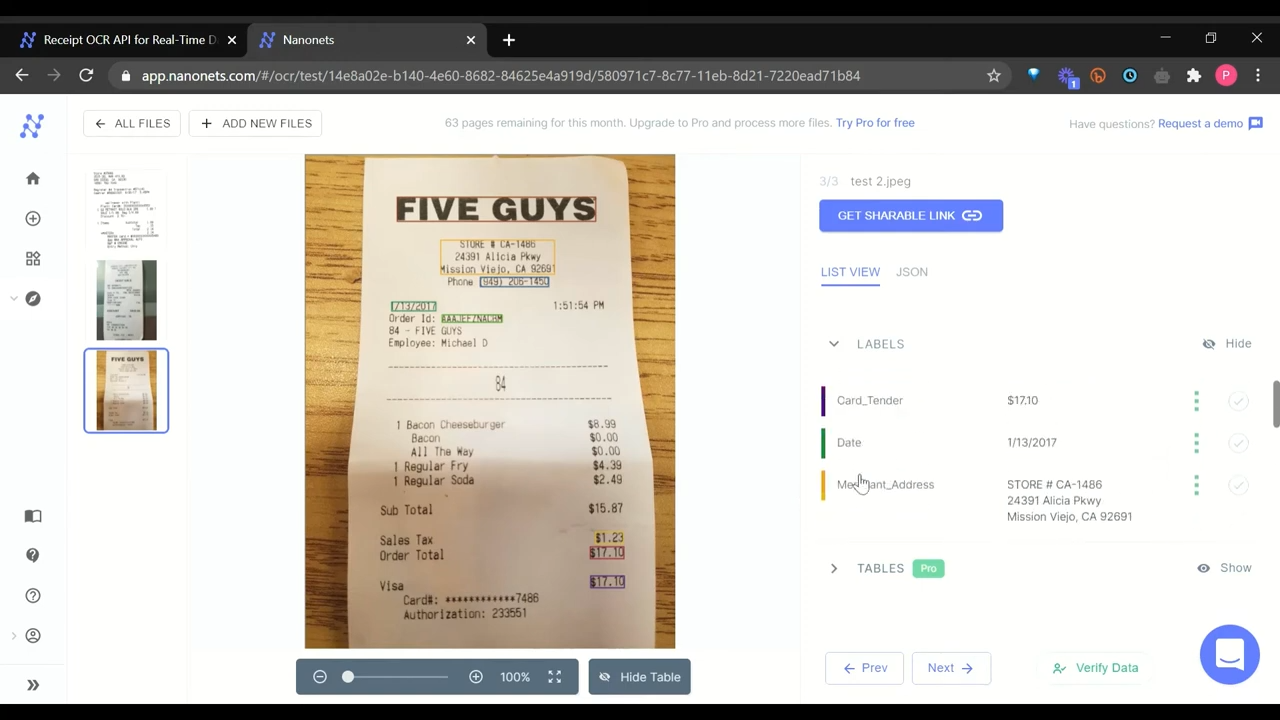

- Invoices and Receipts: Businesses can automatically extract total amounts, item details, dates, and vendor information, streamlining their accounts payable processes.

- Applications and Surveys: HR departments and market researchers can quickly capture personal information, preferences, and responses to questions.

- Medical Forms: Healthcare providers can efficiently extract patient details, medical history, and insurance information, improving patient care and billing accuracy.

- Legal Documents: Law firms can identify key clauses, dates, and parties involved in contracts or agreements, saving valuable time in document review.

- Financial Statements: Banks and financial institutions can extract account numbers, transaction details, and balances, enhancing their analysis and reporting capabilities.

- Tax Forms: Accounting firms can capture income details, deductions, and tax calculations, speeding up tax preparation.

- Employment Records: HR departments can extract employee information, job details, and performance data, facilitating better workforce management.

- Shipping and Logistics Forms: Logistics companies can capture order details, addresses, and tracking information, optimizing their supply chain operations.

The extracted data can include text (both typed and handwritten), numbers, dates, checkbox selections, signatures, and even barcodes or QR codes. Modern automated form processing systems can handle both structured forms with fixed layouts and semi-structured documents where information appears in varying locations.

This broad applicability is what makes form data extraction so valuable across industries. But such diversity also brings challenges, which we'll explore next.

Tired of manual data entry?

Automatically extract data from forms with high accuracy and streamline your workflow, so you can focus on growing your business while we handle the tedious work.

Data extraction presents a fascinating challenge. It's an image recognition problem, but it also has to account for the text present in the image and the layout of the form. This combination makes building an extraction algorithm harder.

In this section, we'll explore the common hurdles faced when building form data extraction algorithms:

- Data Diversity: Forms come in countless layouts and designs. Extraction tools must handle various fonts, languages, and structures, making it difficult to create a one-size-fits-all solution.

- Lack of Training Data: Deep learning algorithms rely on large amounts of data to achieve state-of-the-art performance. Finding consistent and reliable datasets is crucial for any form data extraction tool. For example, when dealing with multiple form templates, the algorithm has to understand a wide range of forms, which requires training on a robust dataset.

- Handling Fonts, Languages, and Layouts: The variety of typefaces, designs, and templates can make accurate recognition difficult. Limiting the font collection to a particular language and type makes processing smoother. In multilingual cases, handling characters from several languages needs careful preparation.

- Orientation and Skew: Scanned images can appear skewed, which reduces the model's accuracy. Techniques like projection profile methods or the Fourier transform can help address this. Although orientation and skew might seem like simple errors, they can significantly impact accuracy when dealing with large volumes of forms.

- Data Security: When extracting data from various sources, security measures are crucial; otherwise, you risk compromising sensitive information. This is particularly important when working with ETL scripts and online APIs for data extraction.

- Table Extraction: Extracting data from tables within forms can be complex. Ideally, a form extraction algorithm should handle both form data and table data efficiently. This often requires separate algorithms, which can increase computational costs.

- Post-Processing and Exporting Output: The extracted data often requires further processing to filter the results into a more structured format. Organizations may need to rely on third-party integrations or develop APIs to automate this process, which can be time-consuming.
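To make the projection profile idea from the skew item concrete, here is a minimal NumPy sketch. It assumes the page has already been binarized (ink pixels nonzero), and the function name and default search range are our own illustrative choices. For each candidate angle it projects the ink pixels onto the axis perpendicular to lines tilted by that angle. When the angle matches the text lines, ink piles into a few tall bins, so the sum of squared bin counts peaks.

```python
import numpy as np


def estimate_skew(binary: np.ndarray, max_angle: float = 10.0, step: float = 0.1) -> float:
    """Estimate the skew angle (degrees) of a binary page image.

    `binary` is a 2D array whose nonzero pixels are ink. A positive result
    means text lines descend to the right (image y axis points down).
    """
    ys, xs = np.nonzero(binary)
    if ys.size == 0:
        return 0.0  # blank page: nothing to measure
    n_steps = int(round(2 * max_angle / step)) + 1
    best_angle, best_score = 0.0, -1.0
    for angle in np.linspace(-max_angle, max_angle, n_steps):
        theta = np.deg2rad(angle)
        # Coordinate of each ink pixel along the axis perpendicular to lines tilted by `angle`
        rows = ys * np.cos(theta) - xs * np.sin(theta)
        profile = np.bincount(np.round(rows - rows.min()).astype(int))
        # Aligned lines concentrate ink into few bins, maximizing the sum of squares
        score = float(np.sum(profile.astype(np.float64) ** 2))
        if score > best_score:
            best_angle, best_score = float(angle), score
    return best_angle
```

The page can then be rotated by the estimated angle with your imaging library of choice. Check the sign convention of its rotation function, because libraries differ on which direction is positive.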

By addressing these challenges, intelligent document processing systems can significantly improve the accuracy and efficiency of form data extraction, turning complex documents into valuable, actionable data.

Achieve consistent data extraction

Accurately extract data from diverse form structures, regardless of layout or format, ensuring consistent results and eliminating errors.

Now imagine you could easily process loan applications, tax forms, and medical records, each with its own structure, without creating separate rules for each format.

Within seconds, all the relevant data (names, addresses, financial details, medical information) is extracted, organized into a structured format, and populated into your database. That's what automated form processing can help you achieve.

Let's look at its other key benefits:

- Increased Efficiency: Process hundreds of forms in minutes, not hours. Reallocate staff to high-value tasks like data analysis or customer service.

- Improved Accuracy: Reduce data errors by eliminating manual entry. Ensure critical information like patient data or financial figures is captured correctly.

- Cost Savings: Cut data processing costs significantly. Eliminate expenses related to paper storage and manual data entry.

- Enhanced Data Accessibility: Instantly retrieve specific information from thousands of forms. Enable real-time reporting and faster decision-making.

- Scalability: Handle sudden spikes in form volume without hiring temporary staff. Process 10 or 10,000 forms with the same system and similar turnaround times.

- Improved Compliance: Maintain consistent data handling across all forms. Generate audit trails automatically for regulatory compliance.

- Better Customer Experience: Reduce wait times for form-dependent processes like loan approvals or insurance claims from days to hours.

- Environmental Impact: Cut paper usage significantly. Reduce physical storage needs and the associated costs.

- Integration Capabilities: Automatically populate CRM, ERP, or other business systems with extracted data. Eliminate manual data transfer between systems.

These benefits show how automated form processing can turn document handling from a bottleneck into a strategic advantage.

Handling Different Types of Form Data

Every form presents unique challenges for data extraction, from handwritten entries to intricate table structures. Let's explore four real-world scenarios that show how advanced extraction techniques deal with handwriting, checkboxes, changing layouts, and complex tables.


Scenario #1: Handwriting Recognition for Offline Forms

Offline (paper) forms are common in daily life. Manually digitizing them is tedious and expensive, which is why deep learning algorithms are needed. Handwritten documents are particularly challenging due to the complexity and variability of handwritten characters.

Handwriting recognition algorithms learn to read and interpret handwritten text. The process involves scanning images of handwritten words and converting them into data that can be processed and analyzed. The algorithm builds a character map based on strokes and recognizes the corresponding letters to extract the text.

Scenario #2: Checkbox Identification on Forms

Checkboxes are used to gather information from users in input fields. They're common in lists and tables that ask users to select one or more items. Modern algorithms can automate data extraction even from checkboxes.

The primary goal is to identify input regions using computer vision techniques. These involve detecting horizontal and vertical lines, applying filters, finding contours, and detecting edges in the images. Once the input region is identified, it's easier to extract the checkbox state, whether marked or unmarked.
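Once a checkbox region has been located, deciding whether it is marked can be as simple as measuring how much ink sits inside it. The sketch below uses this fill-ratio heuristic, a common simple approach rather than the method of any particular product. It assumes a binarized image (ink = 1) and a box `(x, y, w, h)` produced by the earlier line/contour detection step; `margin` and `threshold` are illustrative values you would tune on your own forms.

```python
import numpy as np


def is_checked(binary: np.ndarray, box: tuple, margin: int = 3, threshold: float = 0.15) -> bool:
    """Decide whether a detected checkbox is marked.

    `binary` is a 2D array with ink pixels set to 1; `box` is (x, y, w, h).
    The box border is always ink, so we shrink the box by `margin` pixels
    and measure what fraction of the interior is filled.
    """
    x, y, w, h = box
    inner = binary[y + margin : y + h - margin, x + margin : x + w - margin]
    if inner.size == 0:
        return False  # box too small to have an interior
    fill_ratio = float(np.count_nonzero(inner)) / inner.size
    return fill_ratio > threshold
```

Thin marks such as a light tick cover less area than a filled box, so the threshold should be calibrated against the marking styles your users actually produce.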

Scenario #3: Form Layouts That Change Over Time

Form layouts can change depending on the form type and context. It's therefore essential to build an algorithm that can handle many differently structured documents and intelligently extract content based on form labels.

One popular approach is to use Graph Convolutional Networks (GCNs). In a GCN, each word's representation is updated from its neighbors in a graph built from the page layout, so the learned features follow the document's actual spatial structure instead of a fixed grid. This makes GCNs suitable for recognizing patterns across diverse form layouts.

Scenario #4: Table Cell Detection

Some forms contain tables made of cells, the rectangular areas where the data is stored. An ideal extraction algorithm should identify every kind of cell (headers, rows, or columns) and its boundaries in order to extract the data inside.

Popular techniques for table extraction include the Stream and Lattice algorithms. Lattice detects ruling lines and cell boundaries using simple morphological operations on the image, while Stream infers the table structure from the whitespace between words.
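The Lattice idea can be illustrated with a simplified NumPy sketch. Instead of the morphological opening a real implementation would use, it treats any row or column that is mostly ink as a ruling line, then takes the rectangles between consecutive lines as cells. It assumes the image is already binarized (ink = 1), cropped to a fully ruled table, and free of merged cells; `detect_cells` and `_runs` are hypothetical helper names.

```python
import numpy as np


def _runs(mask: np.ndarray) -> list:
    """Return the center index of each run of consecutive True values."""
    idx = np.flatnonzero(mask)
    if idx.size == 0:
        return []
    # Thick lines produce several adjacent indices; split where the gap exceeds 1
    groups = np.split(idx, np.flatnonzero(np.diff(idx) > 1) + 1)
    return [int(g.mean()) for g in groups]


def detect_cells(binary: np.ndarray, min_fraction: float = 0.8) -> list:
    """Find table cells from ruling lines in a binary image (ink = 1).

    A row (column) counts as a horizontal (vertical) line when at least
    `min_fraction` of its pixels are ink. Cells are returned as
    (x0, y0, x1, y1) rectangles between consecutive lines.
    """
    h, w = binary.shape
    row_lines = _runs(binary.sum(axis=1) >= min_fraction * w)
    col_lines = _runs(binary.sum(axis=0) >= min_fraction * h)
    cells = []
    for y0, y1 in zip(row_lines, row_lines[1:]):
        for x0, x1 in zip(col_lines, col_lines[1:]):
            cells.append((x0, y0, x1, y1))
    return cells
```

Each returned rectangle can then be cropped and passed to OCR individually, which keeps the text of neighboring cells from merging.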

These scenarios highlight the diverse challenges in form data extraction. Each task demands advanced algorithms and flexible solutions. As technology progresses, extraction processes keep getting more efficient and accurate. Ultimately, the goal is to build intelligent systems that can handle any document type, layout, or format and seamlessly extract valuable information.

Form data extraction has its origins in the pre-computer era of manual form processing. As technology advanced, so did our ability to handle forms efficiently.

Today's form data extraction software is highly accurate and fast, and it delivers data in an organized, structured way. Let's briefly discuss the different types of form data extraction techniques.

- Rule-based Form Data Extraction: This technique automatically extracts data from forms that follow a particular template. It works by examining fields on the page and deciding which to extract based on surrounding text, labels, and other contextual clues. These algorithms are usually developed and automated using ETL scripts or web scraping. However, they often fail completely on layouts they were not written for.

- Template Matching for Digital Images: Similar to rule-based extraction, template matching takes a more visual approach. It uses predefined visual templates to locate and extract data from forms with fixed layouts. This is effective for processing highly similar forms, such as standardized applications or surveys, but it requires careful template creation and regular maintenance.

- Form Data Extraction using OCR: OCR is a common starting point for most data extraction problems. It works by analyzing the pixels of an image containing text and matching them to the corresponding characters. However, OCR can struggle with handwritten text or complex layouts, for example when characters are close together or overlap, such as "a" and "e." As a result, OCR alone may not work well for extracting data from offline forms.

- NER for Form Data Extraction: Named Entity Recognition (NER) identifies and classifies predefined entities in text. It's useful for extracting information from forms where people enter names, addresses, comments, and so on. Modern NER systems are typically built on pre-trained language models.

- Deep Learning for Form Data Extraction: Recent advances in deep learning have led to breakthrough results, with models achieving top performance across various formats. Training deep neural networks on large datasets enables them to learn complex patterns and relationships, such as identifying entities like names, emails, and IDs from the labels in form images. However, building a highly accurate model requires significant expertise and experimentation.
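A rule of the kind described in the first item, "the value sits just to the right of its label", can be written in a few lines on top of OCR word boxes. The sketch assumes each word is a dict with `text`, `left`, `top`, `width`, and `height` keys (the same field names pytesseract's `image_to_data` uses). `value_right_of_label` is a hypothetical helper, and the pixel tolerances are illustrative. Its brittleness is the point: move the value below the label and the rule silently returns `None`.

```python
def value_right_of_label(words, label, max_gap=200, line_tolerance=10):
    """Return the nearest word to the right of `label` on the same line, or None.

    `words` is a list of dicts with 'text', 'left', 'top', 'width', 'height'.
    A trailing colon on the label word is ignored, and matching is case-insensitive.
    """
    for anchor in words:
        if anchor["text"].rstrip(":").lower() != label.lower():
            continue
        anchor_right = anchor["left"] + anchor["width"]
        candidates = [
            w for w in words
            if w is not anchor
            and abs(w["top"] - anchor["top"]) <= line_tolerance   # same text line
            and 0 <= w["left"] - anchor_right <= max_gap          # to the right, nearby
        ]
        if candidates:
            return min(candidates, key=lambda w: w["left"])["text"]
    return None
```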

Building on these deep learning advances, Intelligent Document Processing (IDP) has emerged as a comprehensive approach to form data extraction. IDP combines OCR, AI, and ML to automate form processing, making data extraction faster and more accurate than traditional methods.

It can handle both structured and unstructured documents, adapt to various layouts, and continuously improve its performance through machine learning. For businesses dealing with diverse document types, IDP offers a scalable way to streamline document-heavy processes.

Want to extract data from printed or handwritten forms?

Try the Nanonets form data extractor for free and automate the export of information from any form!

There are many libraries available for extracting data from forms. But what if you want to extract data from an image of a form? That's where Tesseract OCR (Optical Character Recognition) comes in.

Tesseract is an open-source OCR engine originally developed at HP and later maintained by Google. With Tesseract OCR, you can convert scanned documents such as paper invoices, receipts, and checks into searchable, editable digital files. It supports many languages and can recognize characters in various image formats. Tesseract is commonly combined with other libraries that preprocess images before the text is extracted.

Want to try it yourself? Here's how:

- Install Tesseract on your local machine.

- Choose between the Tesseract CLI or Python bindings for running the OCR.

- If you use Python, consider Python-tesseract, a wrapper for Google's Tesseract-OCR Engine.

Python-tesseract can read all image types supported by the Pillow and Leptonica imaging libraries, including jpeg, png, gif, bmp, tiff, and others. It can also be used as a stand-alone invocation script for Tesseract if needed.

Let's take a practical example. Say you have a receipt containing form data. Here's how you can identify the location of the text using computer vision and Tesseract:

```python
import cv2
import pytesseract
from pytesseract import Output

img = cv2.imread('receipt.jpg')

# Boxes for every detected block, paragraph, line, and word
d = pytesseract.image_to_data(img, output_type=Output.DICT)

# Draw the boxes on a copy so the original image stays clean for OCR
annotated = img.copy()
n_boxes = len(d['level'])
for i in range(n_boxes):
    (x, y, w, h) = (d['left'][i], d['top'][i], d['width'][i], d['height'][i])
    cv2.rectangle(annotated, (x, y), (x + w, y + h), (0, 0, 255), 2)

cv2.imshow('img', annotated)  # window name first, then the image
cv2.waitKey(0)
cv2.destroyAllWindows()
```

In the output, we can see that the program was able to identify all the text within the form. Now, let's apply OCR to extract all the information. We can do this with the `image_to_string` function. `lang='deu'` selects German, which requires Tesseract's German language data to be installed.

```python
extracted_text = pytesseract.image_to_string(img, lang='deu')
print(extracted_text)
```

Output:

```
Berghotel
Grosse Scheidegg
3818 Grindelwald
Familie R.Müller
Rech.Nr. 4572 30.07.2007/13:29: 17
Bar Tisch 7/01
2xLatte Macchiato &ä 4.50 CHF 9,00
1xGloki a 5.00 CH 5.00
1xSchweinschnitzel ä 22.00 CHF 22.00
IxChässpätz 1 a 18.50 CHF 18.50
Total: CHF 54.50
Incl. 7.6% MwSt 54.50 CHF: 3.85
Entspricht in Euro 36.33 EUR
Es bediente Sie: Ursula
MwSt Nr. : 430 234
Tel.: 033 853 67 16
Fax.: 033 853 67 19
E-mail: grossescheidegs@b luewin. Ch
```
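`image_to_string` returns one flat string. When you also need positions, the dictionary `d` from the earlier `image_to_data` call can be regrouped into lines yourself, dropping low-confidence words along the way. The sketch below keys words by Tesseract's own `block_num`, `par_num`, and `line_num` fields. The helper name and the 60-point confidence cutoff are our own illustrative choices.

```python
from collections import defaultdict


def group_words_into_lines(data, min_conf=60.0):
    """Group an image_to_data(..., output_type=Output.DICT) result into text lines.

    Entries with empty text (block/paragraph/line rows) or with confidence
    below `min_conf` are skipped. Returns one string per detected line.
    """
    lines = defaultdict(list)
    for i, text in enumerate(data["text"]):
        if not text.strip() or float(data["conf"][i]) < min_conf:
            continue
        key = (data["block_num"][i], data["par_num"][i], data["line_num"][i])
        lines[key].append((data["left"][i], text))
    # Order lines by Tesseract's identifiers and words within a line left to right
    return [" ".join(t for _, t in sorted(words)) for _, words in sorted(lines.items())]
```

For example, `group_words_into_lines(d)` gives a list of line strings that still keeps low-quality fragments out of downstream parsing.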

Here we were able to extract all the information from the form. In most cases, however, OCR alone isn't enough, because the extracted data is completely unstructured. That's why users rely on key-value pair extraction, which pulls out only specific entities such as IDs, dates, tax amounts, and so on.
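For a single, known layout like the receipt above, a handful of regular expressions can already turn the OCR text into key-value pairs. The patterns below are illustrative and tuned only to this receipt's wording. Any change in labels, language, or OCR noise breaks them, which is exactly why learned approaches are used for varied layouts.

```python
import re

# Illustrative patterns for the receipt above; each captures a single value.
PATTERNS = {
    "invoice_no": r"Rech\.Nr\.\s*(\d+)",
    "date": r"(\d{2}\.\d{2}\.\d{4})",
    "total_chf": r"Total:\s*CHF\s*([\d.,]+)",
    "vat_rate": r"Incl\.\s*([\d.]+)%\s*MwSt",
    "phone": r"Tel\.:\s*([\d ]+\d)",
}


def extract_fields(text: str) -> dict:
    """Apply each pattern to the OCR text; fields that do not match map to None."""
    fields = {}
    for name, pattern in PATTERNS.items():
        match = re.search(pattern, text)
        fields[name] = match.group(1) if match else None
    return fields
```

Calling `extract_fields(extracted_text)` returns a dictionary that can be dumped straight to JSON or written as a CSV row.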

Hand-written rules can do this for one fixed layout, but doing it reliably across varied layouts is where deep learning comes in. In the next section, let's look at how different deep learning techniques can be used to build information extraction algorithms.

Experience unparalleled OCR accuracy

By combining OCR with AI, Nanonets delivers superior accuracy, even with handwriting, low-quality scans, and complex layouts. You can intelligently process and enhance images, ensuring reliable data extraction from even the most challenging forms.

Let's explore three cutting-edge deep learning approaches to form data extraction: Graph Convolutional Networks (GCNs), LayoutLM, and Form2Seq. We'll break down how these techniques work and why they handle real-world form processing challenges better than traditional approaches.

1. Graph Convolution for Multimodal Information Extraction from Visually Rich Documents

Graph Convolutional Networks (Graph CNNs) are a class of convolutional neural networks that can learn highly non-linear features from graph-structured data while preserving the node and edge structure. They take a graph as input and generate "feature maps" for its nodes and edges. The resulting features can be used for graph classification, clustering, or community detection.
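The core operation of a graph convolution is easy to state in code. The sketch below implements the widely used normalized propagation rule ReLU(D^-1/2 (A + I) D^-1/2 H W) popularized by Kipf and Welling. The model discussed in this section defines its own graph convolution (including edge features), so treat this as a generic illustration of how node features are mixed with their neighbors', not as that paper's layer.

```python
import numpy as np


def gcn_layer(adjacency: np.ndarray, features: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H W).

    adjacency: (n, n) symmetric 0/1 matrix A; features: (n, f_in) node
    features H; weights: (f_in, f_out) learnable projection W.
    """
    a_hat = adjacency + np.eye(adjacency.shape[0])      # add self-loops
    deg_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))     # diagonal of D^-1/2 as a vector
    norm = a_hat * deg_inv_sqrt[:, None] * deg_inv_sqrt[None, :]
    return np.maximum(norm @ features @ weights, 0.0)   # aggregate, project, ReLU
```

Stacking a few such layers lets information flow from each node to neighbors several hops away, while the symmetric normalization keeps high-degree nodes from dominating.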

GCNs provide a powerful way to extract information from large, visually rich documents like invoices and receipts. To process such documents, each image is first transformed into a graph of nodes and edges. Each word (or text segment) on the image becomes its own node, and its visual and layout details are encoded in the node's feature vector.
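Turning a page into a graph can be sketched as follows. This is a simplified, hypothetical construction for illustration: one node per word box, an edge from each word to its `k` nearest neighbors by box-center distance, and node features made of the box coordinates scaled by the page extent. Published models use richer edge rules and features, so treat the specific choices here as assumptions.

```python
import numpy as np


def build_word_graph(boxes: np.ndarray, k: int = 2):
    """Build (adjacency, features) from word boxes.

    boxes: (n, 4) float array of (x0, y0, x1, y1), one row per word.
    Each word is linked to its k nearest neighbors by center distance;
    features are the box coordinates divided by the page extent.
    """
    centers = np.column_stack([(boxes[:, 0] + boxes[:, 2]) / 2, (boxes[:, 1] + boxes[:, 3]) / 2])
    dist = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)                      # never pick a word as its own neighbor
    n = len(boxes)
    adjacency = np.zeros((n, n))
    for i in range(n):
        for j in np.argsort(dist[i])[: min(k, n - 1)]:
            adjacency[i, j] = adjacency[j, i] = 1.0      # keep the graph symmetric
    extent = boxes[:, 2:].max(axis=0)                   # right/bottom-most coordinate as page size proxy
    features = boxes / np.concatenate([extent, extent])
    return adjacency, features
```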

The model first encodes each text segment in the document into a graph embedding. This captures the visual and textual context surrounding each text element, along with its position within a block of text. It then combines these graph embeddings with text embeddings to create an overall representation of the document's structure and content.

The model learns to assign higher weights to text that is likely to be an entity, based on its location relative to other text and the context in which it appears within a larger block of text. Finally, it applies a standard BiLSTM-CRF model for entity extraction. The results show that this approach outperforms the baseline model (BiLSTM-CRF) by a wide margin.

2. LayoutLM: Pre-training of Textual content and Format for Doc Picture Understanding

The architecture of the LayoutLM model is heavily inspired by BERT and incorporates image embeddings from a Faster R-CNN. LayoutLM's input embeddings combine text and 2D position embeddings, which are then combined with the image embeddings generated by the Faster R-CNN model.
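The 2D position embeddings are looked up from each word's bounding box. The Hugging Face implementation of LayoutLM expects these boxes to be normalized to a 0-1000 scale before they are passed to the model. A minimal helper for that step might look like this (`normalize_box` is our own name, and the pixel box would come from your OCR step):

```python
def normalize_box(box, page_width, page_height):
    """Scale an (x0, y0, x1, y1) pixel box to LayoutLM's 0-1000 coordinate grid."""
    x0, y0, x1, y1 = box
    return [
        int(1000 * x0 / page_width),
        int(1000 * y0 / page_height),
        int(1000 * x1 / page_width),
        int(1000 * y1 / page_height),
    ]
```

Normalizing this way makes the position embeddings independent of scan resolution, so the same word in the same place gets the same coordinates at 150 or 300 DPI.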

The Masked Visual-Language Model (MVLM) and Multi-label Document Classification (MDC) are the main pre-training tasks for LayoutLM. The model is versatile enough for tasks that require layout understanding, such as form and receipt extraction, document image classification, and even visual question answering.

LayoutLM was pre-trained on the IIT-CDIP Test Collection 1.0, which contains over 6 million documents and more than 11 million scanned document images, totalling over 12GB of data. The model significantly outperformed several state-of-the-art pre-trained models on form understanding, receipt understanding, and scanned document image classification tasks.

3. Form2Seq: A Framework for Higher-Order Form Structure Extraction

Form2Seq is a framework that focuses on extracting structures from input text using positional sequences. Unlike traditional seq2seq frameworks, Form2Seq leverages the relative spatial positions of the structures rather than their order.

The method first classifies low-level elements, which enables better processing and organization. These elements fall into 10 types, such as field captions and list items. Next, lower-level elements are grouped into higher-order constructs such as Text Fields, ChoiceFields, and ChoiceGroups.

Forms use these constructs as information collection mechanisms to achieve a better user experience. Form2Seq arranges the constituent elements in a linear sequence following natural reading order and feeds their spatial and textual representations to a Seq2Seq framework. The Seq2Seq framework then makes a prediction for each element of the sequence based on its context, which lets it use more information and arrive at a better understanding of the task at hand.
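The input-preparation step described above, putting elements into natural reading order and pairing each with its spatial features, can be sketched as follows. This covers only the linearization, not the Seq2Seq model itself. It assumes each element is a dict with `text` and a pixel `box`; the helper names, the line-grouping tolerance, and the normalized-box feature are hypothetical simplifications.

```python
def reading_order(elements, line_tolerance=10):
    """Sort form elements top-to-bottom, then left-to-right.

    Each element is a dict with 'text' and 'box' = (x0, y0, x1, y1).
    Elements whose top edge is within `line_tolerance` pixels of the current
    line's first element are treated as the same line (no column detection).
    """
    ordered, line = [], []
    for el in sorted(elements, key=lambda e: (e["box"][1], e["box"][0])):
        if line and abs(el["box"][1] - line[0]["box"][1]) > line_tolerance:
            ordered.extend(sorted(line, key=lambda e: e["box"][0]))
            line = []
        line.append(el)
    ordered.extend(sorted(line, key=lambda e: e["box"][0]))
    return ordered


def to_sequence(elements, page_width, page_height):
    """Pair each element's text with its page-normalized box, in reading order."""
    scale = (page_width, page_height) * 2  # (w, h, w, h)
    return [
        (e["text"], tuple(round(v / s, 3) for v, s in zip(e["box"], scale)))
        for e in reading_order(elements)
    ]
```

Multi-column forms would need column detection before this step, since the simple line grouping here reads straight across columns.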

The model achieved an accuracy of 90% on the classification task, higher than that of segmentation-based baseline models. The reported F1 scores on the grouping tasks were 86.01% and 61.63%. The framework also achieved state-of-the-art results on the ICDAR dataset for table structure recognition.

Scale your data extraction effortlessly

Nanonets leverages neural networks and parallel processing so you can handle growing volumes of forms without compromising speed or accuracy.

Now that we've explored advanced techniques like Graph CNNs, LayoutLM, and Form2Seq, the next step is to consider best practices for implementing form data extraction in real-world scenarios.

Here are some key considerations:

Data Preparation

Build a diverse dataset of form images that covers various layouts and styles.

- Include samples of all form types you expect to process

- Consider augmenting your dataset with synthetic examples to increase diversity

Pre-processing

Implement robust image preprocessing to handle variations in quality and format.

- Develop methods for denoising, deskewing, and normalizing input images

- Standardize input formats to streamline subsequent processing steps
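Binarization is a typical normalization step before deskewing or checkbox and table detection. OpenCV provides Otsu's method through `cv2.threshold` with the `cv2.THRESH_OTSU` flag. The NumPy sketch below shows what that method computes, assuming an 8-bit grayscale input: it picks the threshold that maximizes the between-class variance of the "ink" and "background" pixel groups.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return the Otsu threshold (0-255) for an 8-bit grayscale image."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                    # weight of the dark class for each threshold
    mu = np.cumsum(prob * np.arange(256))      # cumulative mean intensity
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    # Thresholds that leave one class empty give 0/0; treat them as no separation
    between = np.nan_to_num(between, nan=0.0, posinf=0.0, neginf=0.0)
    return int(np.argmax(between))


def binarize(gray: np.ndarray) -> np.ndarray:
    """Map dark (ink) pixels to 1 and background to 0 using Otsu's threshold."""
    return (gray <= otsu_threshold(gray)).astype(np.uint8)
```

A single global threshold works well for clean scans. Unevenly lit phone photos usually need an adaptive (local) threshold instead.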

Model Selection

Choose an appropriate model based on your specific use case and available resources.

- Consider factors like form complexity, required accuracy, and processing speed

- Evaluate trade-offs between model sophistication and computational requirements

Fine-tuning

Adapt pre-trained models to your specific domain for better performance.

- Use transfer learning to leverage pre-trained models effectively

- Iteratively refine your model on domain-specific data to improve accuracy

Post-processing

Implement error-checking and validation steps to ensure accuracy.

- Develop rule-based checks to catch common errors or inconsistencies

- Consider a human-in-the-loop approach for critical or low-confidence extractions
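A human-in-the-loop setup usually starts with a routing rule. The sketch assumes your extractor reports a confidence in [0, 1] for each field. It sends a field to manual review when that confidence falls below a threshold or when the field is on a list of critical names that should always be checked. The `Field` class, the threshold, and the field names are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Field:
    name: str
    value: str
    confidence: float  # extractor's confidence in [0, 1]


def route_fields(fields, threshold=0.85, critical=frozenset()):
    """Split extracted fields into auto-accepted and human-review lists.

    A field needs review if its confidence is below `threshold` or its
    name is in `critical`.
    """
    accepted, review = [], []
    for f in fields:
        if f.confidence < threshold or f.name in critical:
            review.append(f)
        else:
            accepted.append(f)
    return accepted, review
```

Corrections made by reviewers are also a natural source of labeled data for the feedback loop described under Continuous Improvement.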

Scalability

Design your pipeline to handle large volumes of forms efficiently.

- Implement batch processing and parallel computation where possible

- Optimize your infrastructure to handle peak loads without compromising performance
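Batching and parallelism can be combined with the standard library alone. pytesseract runs the `tesseract` binary as a separate process, so a thread pool is enough to keep several OCR jobs busy at once. For CPU-heavy pure-Python steps, `ProcessPoolExecutor` would be the usual swap. `process_in_batches` is a hypothetical helper, and `extract` stands for whatever per-document function your pipeline uses.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def process_in_batches(paths: Iterable[str], extract: Callable[[str], dict],
                       batch_size: int = 32, max_workers: int = 4) -> List[dict]:
    """Run `extract` over documents batch by batch, in parallel within each batch.

    Batching bounds how much work is in flight at once; results keep input order.
    """
    paths = list(paths)
    results: List[dict] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for start in range(0, len(paths), batch_size):
            batch = paths[start:start + batch_size]
            results.extend(pool.map(extract, batch))
    return results
```

For example, `process_in_batches(paths, lambda p: {"path": p, "text": pytesseract.image_to_string(p)})` would OCR a folder of form images four at a time.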

Continuous Improvement

Regularly update and retrain your models with new data.

- Establish a feedback loop to capture and learn from errors or edge cases

- Stay informed about advances in form extraction techniques and incorporate them as appropriate.

These best practices can help maximize the effectiveness of your form data extraction system and ensure it delivers accurate results at scale. However, implementing them can be complex and resource-intensive.

That's where specialized solutions like Nanonets' AI-based OCR come in. The platform incorporates many of these best practices, offering a robust, out-of-the-box solution for form data extraction.

Why Nanonets AI-Based OCR is the Best Option

Although OCR software can convert scanned images of text into formatted digital files such as PDFs, DOCs, and PPTs, it isn't always accurate. Nanonets offers best-in-class, deep-learning-based OCR that tackles the limitations of conventional methods head-on. The platform offers superior accuracy when creating editable files from scanned documents, helping you streamline your workflow and boost productivity.

1. Tackling Your Accuracy Woes

Imagine processing invoices with high accuracy, regardless of font styles or document quality. Nanonets' system is designed to handle:

- Diverse fonts and styles

- Skewed or low-quality scans

- Documents with noise or graphical elements

By reducing errors, you could save countless hours of double-checking and corrections.

2. Adapting to Your Diverse Document Types

Does your work involve a mix of forms, from printed to handwritten? Nanonets' AI-based OCR aims to be your all-in-one solution, offering:

- Efficient table extraction

- Handwriting recognition

- The ability to process various unstructured data formats

Whether you're dealing with resumes, financial statements, or medical forms, the system is built to adapt to your needs.

3. Seamlessly Fitting Into Your Workflow

Think about how much time you spend converting extracted data. Nanonets is designed with your workflow in mind, offering:

- Export options to JSON, CSV, Excel, or directly to databases

- API integration for automated processing

- Compatibility with existing business systems

This flexibility aims to make the transition from raw document to usable data smooth and simple.

4. Enhancing Your Document Security

Handling sensitive information? Nanonets' advanced features aim to add an extra layer of security:

- Fraud checks on financial or confidential data

- Detection of edited or blurred text

- Secure processing compliant with data protection standards

These features are designed to give you peace of mind when handling confidential documents.

5. Growing With Your Business

As your business evolves, so should your OCR solution. Nanonets' AI is built to:

- Learn and improve from each processed document

- Automatically tune itself based on identified errors

- Adapt to new document types without extensive reprogramming

This means the system can become more attuned to your specific document challenges over time.

6. Transforming Your Document Processing Experience

Imagine reducing your document processing time by up to 90%. By addressing common pain points in OCR technology, Nanonets aims to give you a solution that not only saves time but also improves accuracy. Whether you're in finance, healthcare, legal, or any other document-heavy industry, Nanonets' AI-based OCR system is designed to transform how you handle document-based information.

The Next Steps

Form data extraction has evolved from simple OCR to sophisticated AI-driven techniques, changing how businesses handle document processing workflows. As you implement these methods, focus on data quality, choose the right models for your needs, and keep refining your approach.

Schedule a demo with us today to see how Nanonets can streamline your workflows, improve accuracy, and save valuable time. With Nanonets, you can process diverse document types, from invoices to medical records, with ease and precision.