Have you ever come across a situation where you wanted your chatbot to use a tool and then answer? Sounds complicated, right? MCP (Model Context Protocol) gives you a way to connect your LLM to external tools easily, so the LLM can call those tools whenever it needs them. In this tutorial, we’ll walk through converting a simple web app built with FastAPI into an MCP server, using FastAPI-MCP.

FastAPI with MCP

FastAPI is a simple Python framework for building web applications and APIs. It is designed to be both easy to use and fast. Think of FastAPI as a smart waiter who takes your order (the HTTP request), goes to the kitchen (the database or server), and then brings back your food (the response) and serves it to you. It’s a great tool for building web backends, services for mobile apps, and more.

MCP is an open standard protocol from Anthropic that lets LLMs communicate with external data sources and tools. Think of MCP as a toolkit that hands the model the right tool for a given task. We’ll be using MCP to create a server.
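Under the hood, MCP messages are JSON-RPC 2.0 objects. As a minimal sketch, a client asking a server to run a tool sends a `tools/call` request shaped like the one built below. The tool name `get_weather_weather_get` and its arguments are hypothetical values chosen for illustration; only the standard library is used.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",        # MCP uses JSON-RPC 2.0 framing
        "id": request_id,        # lets the client match the server's response
        "method": "tools/call",  # MCP method for invoking a tool
        "params": {
            "name": tool_name,       # which tool the server should run
            "arguments": arguments,  # should match the tool's input schema
        },
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only
payload = build_tool_call(1, "get_weather_weather_get", {"stateCode": "CA", "city": "San Diego"})
print(payload)
```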

Now, what if these capabilities were given to your LLM? It would make your life much easier! That’s why integrating FastAPI with MCP helps so much: FastAPI takes care of serving data from different sources, and MCP takes care of delivering that context to your LLM. By using FastAPI with an MCP server, we can expose tools deployed over the web as LLM tools and let the LLM do our work more efficiently.

In the image above, we can see an MCP server connected to an API endpoint. This API endpoint can be a FastAPI endpoint or any other third-party API service available on the internet.

What Is FastAPI-MCP?

FastAPI-MCP is a library that lets you convert any FastAPI application into an MCP server whose endpoints LLMs like ChatGPT or Claude can understand and use as tools. With FastAPI-MCP, you can wrap your FastAPI endpoints so that they become plug-and-play tools in an LLM-based AI ecosystem.

If you want to know how to work with MCP, read this article: How to Use MCP?

What APIs Can Be Converted into MCP Using FastAPI-MCP?

With FastAPI-MCP, any FastAPI endpoint can be converted into an MCP tool for LLMs. This includes:

- GET endpoints: exposed as MCP tools the LLM can call to read data.
- POST, PUT, DELETE endpoints: exposed as MCP tools the LLM can call to perform actions.
- Custom utility functions: can be exposed as additional MCP tools by adding them as FastAPI endpoints.

FastAPI-MCP is an easy-to-use library that automatically discovers these endpoints and converts them into MCP tools. It also preserves their request schemas and documentation.
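To make the idea of "preserving the schema" concrete, here is a simplified sketch of how an OpenAPI operation could be turned into an MCP tool definition, which carries a `name`, a `description`, and a JSON Schema `inputSchema`. This illustrates the concept only and is not fastapi-mcp’s actual implementation; the `weather_operation` dict is a hand-written, hypothetical fragment resembling what FastAPI would generate for the weather endpoint built later in this tutorial.

```python
def operation_to_tool(operation: dict) -> dict:
    """Convert a simplified OpenAPI operation object into an MCP tool definition."""
    properties = {}
    required = []
    for param in operation.get("parameters", []):
        # Each query parameter becomes a property of the tool's input schema
        properties[param["name"]] = {
            **param.get("schema", {}),
            "description": param.get("description", ""),
        }
        if param.get("required"):
            required.append(param["name"])
    return {
        "name": operation["operationId"],
        "description": operation.get("summary", ""),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


# Hypothetical OpenAPI fragment for a GET /weather endpoint
weather_operation = {
    "operationId": "get_weather_weather_get",
    "summary": "Get Weather",
    "parameters": [
        {"name": "stateCode", "in": "query", "required": True,
         "schema": {"type": "string"}, "description": "State code (e.g., 'CA' for California)"},
        {"name": "city", "in": "query", "required": True,
         "schema": {"type": "string"}, "description": "City name (e.g., 'Los Angeles')"},
    ],
}

tool = operation_to_tool(weather_operation)
print(tool["name"], tool["inputSchema"]["required"])
```

The LLM never sees the Python function itself, only this kind of schema, which is why clear parameter descriptions in FastAPI pay off on the MCP side.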

Hands-on Using FastAPI-MCP

Let’s look at a simple example of how to convert a FastAPI endpoint into an MCP server. First, we’ll create a FastAPI endpoint, and then we’ll convert it into an MCP server using fastapi-mcp.

Configuring FastAPI

1. Install the dependencies

Prepare your environment by installing the required packages. `mcp-proxy` is used later to let Cursor connect to the server.

```bash
pip install fastapi fastapi-mcp uvicorn httpx mcp-proxy
```

2. Import the required dependencies

Create a new file named ‘main.py’, then import the following dependencies in it.

```python
from fastapi import FastAPI, HTTPException, Query
import httpx
from fastapi_mcp import FastApiMCP
```

3. Define the FastAPI App

Let’s define a FastAPI app named “Weather Updates API”.

```python
app = FastAPI(title="Weather Updates API")
```

4. Defining the routes and functions

Now, we’ll define the routes for our app, which specify which function each endpoint executes. Here, we’re building a weather update app using the free weather.gov API from the US National Weather Service (NWS), which doesn’t require an API key. We just need to call https://api.weather.gov/points/{lat},{lon} with the correct latitude and longitude. That response includes a link to the forecast for the location, which we then fetch in a second request.
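The two-step NWS flow can also be sketched on its own with only the standard library, independent of the FastAPI app. This is a minimal sketch, assuming the response layout the tutorial’s endpoint relies on (`properties.forecast` in the points response, `properties.periods` in the forecast response); `points_url`, `forecast_url_from_points`, and `fetch_json` are hypothetical helper names. The NWS API documentation asks clients to send an identifying `User-Agent` header, so the sketch sets one; its value is a placeholder.

```python
import json
import urllib.request

NWS_BASE = "https://api.weather.gov"


def points_url(lat: float, lon: float) -> str:
    """Step 1: build the /points URL (4 decimals, matching the stored coordinates)."""
    return f"{NWS_BASE}/points/{lat:.4f},{lon:.4f}"


def forecast_url_from_points(points_payload: dict) -> str:
    """Step 2: the /points response links to the forecast for that location."""
    return points_payload["properties"]["forecast"]


def fetch_json(url: str) -> dict:
    """GET a URL and decode the JSON body (performs a real network request)."""
    # Placeholder User-Agent; replace with your own app name and contact
    req = urllib.request.Request(url, headers={"User-Agent": "weather-mcp-demo (you@example.com)"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


# Usage (requires network access):
# points = fetch_json(points_url(32.7157, -117.1611))
# forecast = fetch_json(forecast_url_from_points(points))
# print(forecast["properties"]["periods"][0]["detailedForecast"])
```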

We defined a get_weather function that takes a city name and a state code as arguments, looks up the city’s coordinates in the CITY_COORDINATES dictionary, and then calls the base URL with those coordinates. The state code is only echoed back in the response.

```python
# Predefined latitude and longitude for major cities (for simplicity)
# In a production app, you might use a geocoding service like Nominatim or Google Geocoding API
CITY_COORDINATES = {
    "Los Angeles": {"lat": 34.0522, "lon": -118.2437},
    "San Francisco": {"lat": 37.7749, "lon": -122.4194},
    "San Diego": {"lat": 32.7157, "lon": -117.1611},
    "New York": {"lat": 40.7128, "lon": -74.0060},
    "Chicago": {"lat": 41.8781, "lon": -87.6298},
    # Add more cities as needed
}


@app.get("/weather")
async def get_weather(
    stateCode: str = Query(..., description="State code (e.g., 'CA' for California)"),
    city: str = Query(..., description="City name (e.g., 'Los Angeles')"),
):
    """
    Retrieve today's weather from the National Weather Service API based on city and state
    """
    # Get coordinates (latitude, longitude) for the given city
    if city not in CITY_COORDINATES:
        raise HTTPException(
            status_code=404,
            detail=f"City '{city}' not found in predefined list. Please use another city.",
        )

    coordinates = CITY_COORDINATES[city]
    lat, lon = coordinates["lat"], coordinates["lon"]

    # URL for the NWS API points endpoint
    base_url = f"https://api.weather.gov/points/{lat},{lon}"

    try:
        async with httpx.AsyncClient() as client:
            # First, get the gridpoint information for the given location
            gridpoint_response = await client.get(base_url)
            gridpoint_response.raise_for_status()
            gridpoint_data = gridpoint_response.json()

            # Retrieve the forecast data using the gridpoint information
            forecast_url = gridpoint_data["properties"]["forecast"]
            forecast_response = await client.get(forecast_url)
            forecast_response.raise_for_status()
            forecast_data = forecast_response.json()

            # Return today's forecast (the first forecast period)
            today_weather = forecast_data["properties"]["periods"][0]
            return {
                "city": city,
                "state": stateCode,
                "date": today_weather["startTime"],
                "temperature": today_weather["temperature"],
                "temperatureUnit": today_weather["temperatureUnit"],
                "forecast": today_weather["detailedForecast"],
            }
    except httpx.HTTPStatusError as e:
        # Pass NWS HTTP errors through with their original status code
        raise HTTPException(
            status_code=e.response.status_code,
            detail=f"NWS API error: {e.response.text}",
        )
    except Exception as e:
        # Anything else (network failure, unexpected response shape) becomes a 500
        raise HTTPException(
            status_code=500,
            detail=f"Internal server error: {str(e)}",
        )
```

5. Set up the MCP Server

Let’s now convert this FastAPI app into an MCP server using the fastapi-mcp library. The process is very simple: we just add a few lines of code, and fastapi-mcp automatically converts the endpoints into MCP tools, picking up their schemas and documentation. Place these lines after the route definitions, since fastapi-mcp collects the endpoints when the FastApiMCP object is created.

```python
mcp = FastApiMCP(
    app,
    name="Weather Updates API",
    description="API for retrieving today's weather from weather.gov",
)

# Mount the MCP server on the FastAPI app (served under /mcp by default)
mcp.mount()
```

6. Starting the app

Now, add the next on the finish of your Python file.

```python
if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8000)
```

Then go to the terminal and run the main.py file.

```bash
python main.py
```

Your FastAPI app should now start successfully and be reachable at http://127.0.0.1:8000.

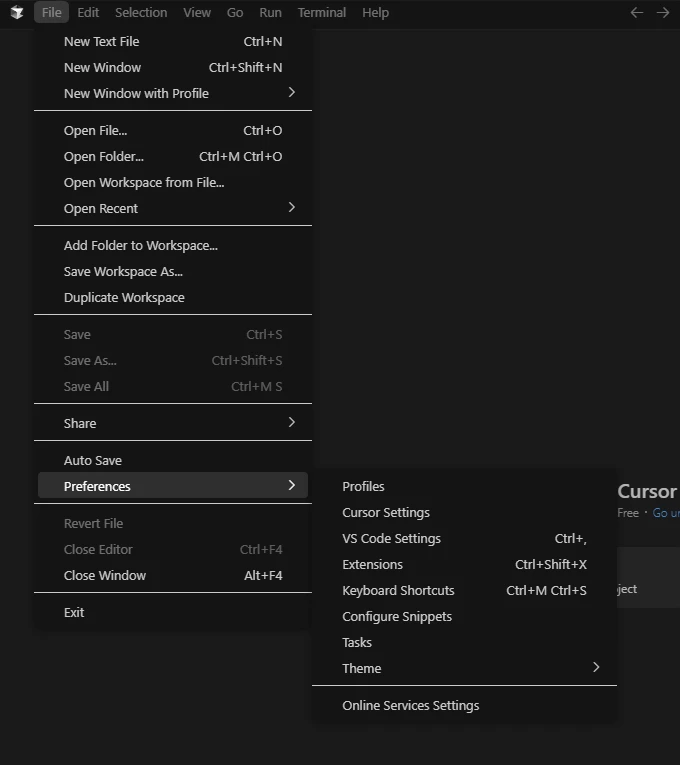
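Before involving an LLM, it can help to check the REST endpoint directly, either through FastAPI’s interactive docs at http://127.0.0.1:8000/docs or with a small script. A minimal sketch using only the standard library, assuming the server from this tutorial is listening on `http://127.0.0.1:8000`; `weather_request_url` and `check_weather` are hypothetical helper names.

```python
import json
import urllib.request
from urllib.parse import urlencode

BASE_URL = "http://127.0.0.1:8000"  # assumes the uvicorn settings used above


def weather_request_url(state_code: str, city: str) -> str:
    """Build the /weather URL with URL-encoded query parameters."""
    query = urlencode({"stateCode": state_code, "city": city})
    return f"{BASE_URL}/weather?{query}"


def check_weather(state_code: str, city: str) -> dict:
    """Call the running FastAPI app and return the decoded JSON response."""
    with urllib.request.urlopen(weather_request_url(state_code, city), timeout=15) as resp:
        return json.load(resp)


# Usage (requires the app to be running):
# print(check_weather("CA", "San Diego"))
```

`urlencode` takes care of the space in “San Diego”, which would otherwise produce an invalid URL.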

Configuring Cursor

Let’s configure the Cursor IDE for testing our MCP server.

- Obtain Cursor from right here https://www.cursor.com/downloads.

- Set up it, enroll and get to the house display screen.

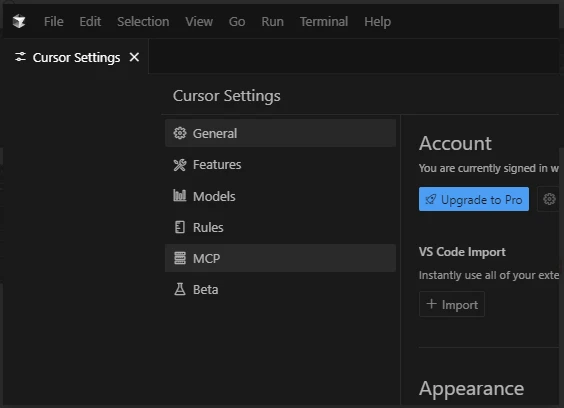

- Now go to the File from the header toolbar. and click on on Preferences after which on Cursor Settings.

- From the cursor settings, click on on MCP.

- On the MCP tab, click on on Add new world MCP Server.

It’ll open a mcp.json file. Paste the next code into it and save the file.

{

"mcpServers": {

"Nationwide Park Service": {

"command": "mcp-proxy",

"args": ["http://127.0.0.1:8000/mcp"]

}

}

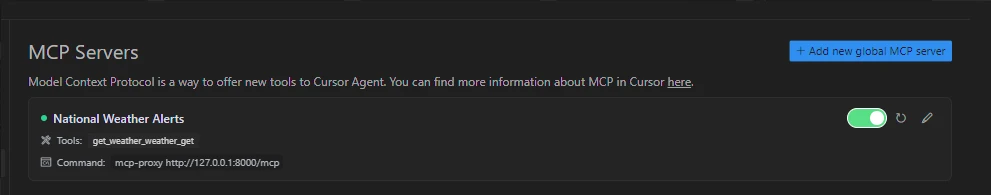

}- Again on the Cursor Settings, it’s best to see the next:

If you see this on your screen, your server is running and connected to the Cursor IDE. If it shows errors, try the restart button in the right corner.
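You may notice that the tool listed under the server has a long name. As far as we understand fastapi-mcp, it names each tool after the endpoint’s OpenAPI `operationId`, and FastAPI’s default operation ID joins the function name, the route path, and the HTTP method. The sketch below re-implements that default naming rule in plain Python for illustration (`default_operation_id` is a hypothetical name, not an import from FastAPI). If you prefer a shorter tool name, FastAPI lets you set one explicitly, for example `@app.get("/weather", operation_id="get_weather")`.

```python
import re


def default_operation_id(func_name: str, path: str, method: str) -> str:
    """Mimic FastAPI's default operation ID: name + path, non-word chars -> '_', plus method."""
    operation_id = re.sub(r"\W", "_", f"{func_name}{path}")
    return f"{operation_id}_{method.lower()}"


print(default_operation_id("get_weather", "/weather", "GET"))
```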

We have now successfully set up the MCP server in the Cursor IDE. Now, let’s test the server.

Testing the MCP Server

Our MCP server can retrieve weather updates. We just have to ask Cursor for the weather in one of the predefined cities, and it will fetch it for us using the MCP server.

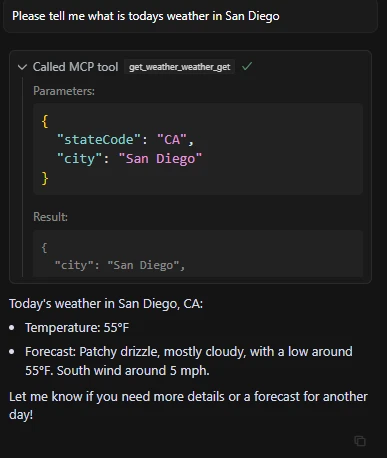

Query: “Please tell me what’s today’s weather in San Diego”

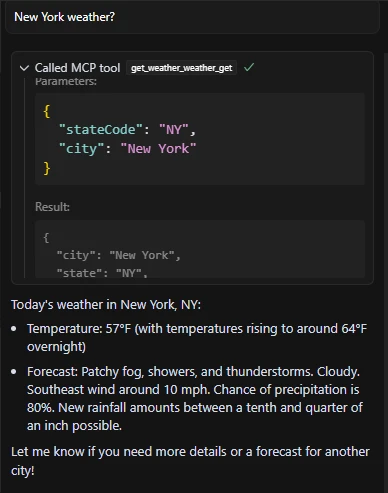

Query: “New York weather?”

We can see from the outputs that our MCP server is working well. We just need to ask for the weather details, and the model decides on its own whether to use the MCP server. In the second query we asked vaguely, “New York weather?”, and it was able to infer the intent from our previous prompt and used the appropriate MCP tool to answer.
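Once the model decides to use a tool, the client sends a `tools/call` request and the server routes it to the matching handler. The toy dispatcher below illustrates that round trip; it is not how fastapi-mcp is implemented. The registry, the tool name, and the `fake_weather` stub (which returns canned data instead of calling the weather endpoint) are all hypothetical. Following the MCP tool result format, a successful call returns a `content` list of typed items plus an `isError` flag, and this sketch answers an unknown tool name with a JSON-RPC "Invalid params" error.

```python
import json
from typing import Callable


def fake_weather(stateCode: str, city: str) -> dict:
    """Hypothetical stub standing in for the real /weather endpoint."""
    return {"city": city, "state": stateCode, "forecast": "Sunny (stubbed data)"}


# Registry mapping tool names to handlers (tool name is illustrative)
TOOLS: dict[str, Callable[..., dict]] = {"get_weather_weather_get": fake_weather}


def handle_request(raw: str) -> dict:
    """Route a JSON-RPC `tools/call` request to the registered handler."""
    request = json.loads(raw)
    params = request["params"]
    handler = TOOLS.get(params["name"])
    if handler is None:
        # Unknown tool: answer with a JSON-RPC "Invalid params" error
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"},
        }
    output = handler(**params.get("arguments", {}))
    # Successful call: wrap the handler's output as a single text content item
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False},
    }


request = json.dumps({
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {"name": "get_weather_weather_get",
               "arguments": {"stateCode": "NY", "city": "New York"}},
})
response = handle_request(request)
print(response["result"]["content"][0]["text"])
```

The model then reads the returned text and writes its answer, which is why a follow-up like “New York weather?” can be resolved with a single tool call.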

Conclusion

MCP lets LLMs extend their answering capabilities by giving them access to external tools, and FastAPI offers an easy way to build those tools. In this guide, we combined both technologies using the fastapi-mcp library. With this library, we can convert any FastAPI application into an MCP server, which helps LLMs and AI agents get the latest information from those APIs. There is no need to hand-write a custom tool definition for every new task, because fastapi-mcp generates the tools from the existing endpoints. MCP changed how LLMs connect to external tools, and pairing FastAPI with MCP now makes it much easier to give LLMs access to existing APIs.
