TL;DR

DeepSeek models, including DeepSeek-R1 and DeepSeek-V3.1, are accessible directly through the Clarifai platform. You can get started without a separate DeepSeek API key or endpoint.

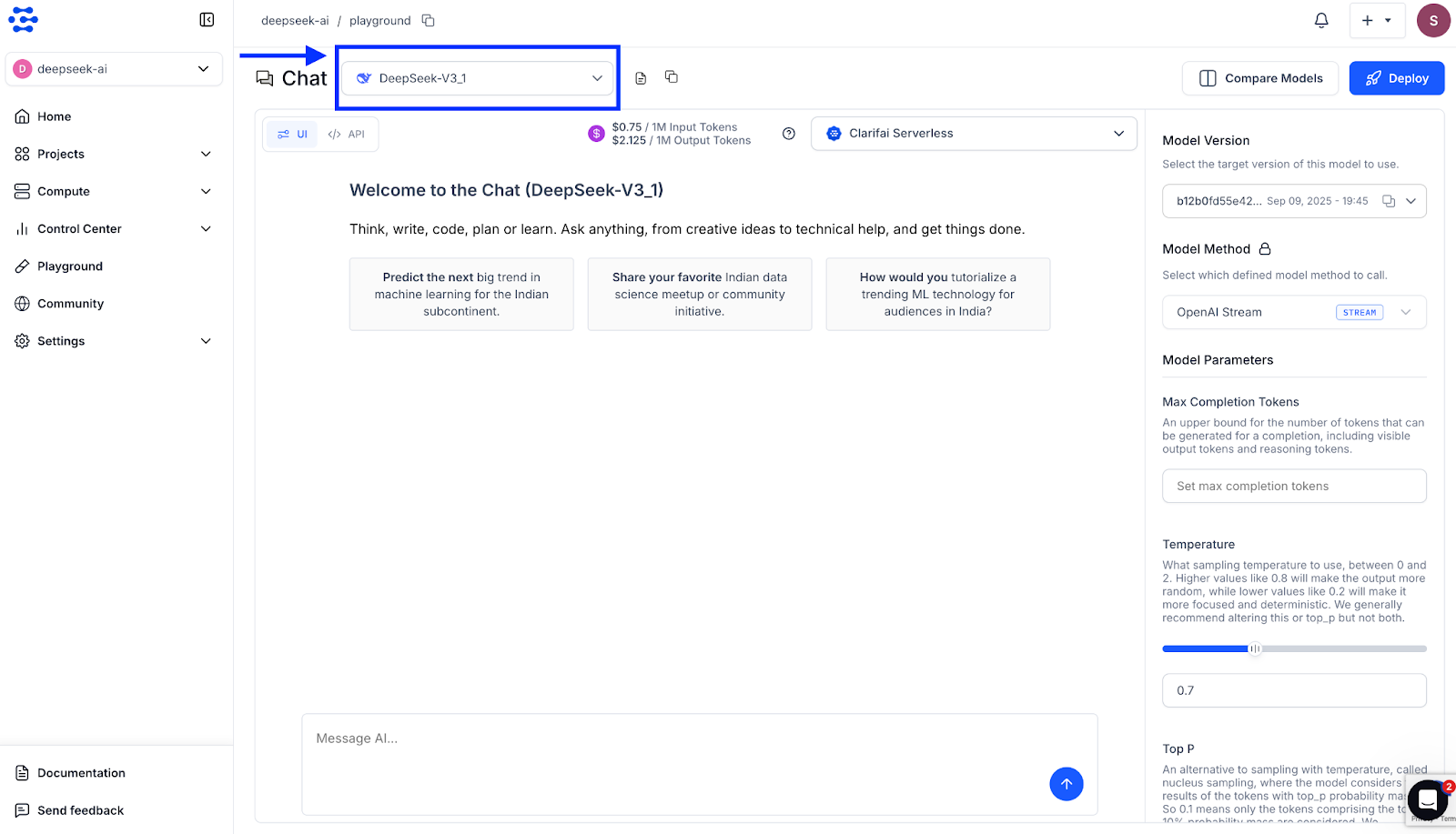

- Experiment in the Playground: Sign up for a Clarifai account and open the Playground. This lets you test prompts interactively, adjust parameters, and understand the model's behavior before integration.

- Integrate via API: Integrate models through Clarifai's OpenAI-compatible endpoint by specifying the model URL and authenticating with your Personal Access Token (PAT).

https://api.clarifai.com/v2/ext/openai/v1

Authenticate with your Personal Access Token (PAT) and specify the model URL, such as the one for DeepSeek-R1 or DeepSeek-V3.1.

Clarifai handles all hosting, scaling, and orchestration, letting you focus purely on building your application and using the model's reasoning and chat capabilities.

DeepSeek in 90 Seconds—What and Why

DeepSeek encompasses a range of large language models (LLMs) designed with different architectural strategies to optimize performance across various tasks. While some models employ a Mixture-of-Experts (MoE) approach, others use dense architectures to balance efficiency and capability.

1. DeepSeek-R1

DeepSeek-R1 is a reasoning-focused model trained with large-scale reinforcement learning (RL); its outputs are also used to distill smaller models. It is built on the DeepSeek-V3 base, a Mixture-of-Experts transformer that uses Multi-Head Latent Attention (MLA) to improve context handling and reduce memory overhead. This design lets the model achieve high performance on tasks requiring deep reasoning, such as mathematics and logic.

2. DeepSeek-V3

DeepSeek-V3 is a Mixture-of-Experts model that combines a small number of dense layers with MoE layers. In the MoE layers, a router activates only a few specialized experts for each token, so only a fraction of the total parameters is used per token. This architecture keeps inference efficient across a broad spectrum of applications, from general conversation to complex reasoning.
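To make the expert-activation idea concrete, here is a toy sketch of top-k routing in plain Python. It is an illustration only, not DeepSeek's router: the stand-in expert functions, the hand-picked gate scores, and the `route_top_k` name are all made up for the example.

```python
import math

def softmax(scores):
    """Turn raw gate scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(x, gate_scores, experts, k=2):
    """Run only the k highest-scoring experts and mix their outputs.

    Hypothetical toy router: real MoE layers operate on vectors and
    learn the gate scores; here the scores are passed in directly.
    """
    # Indices of the k largest gate scores (ties keep their original order).
    top = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)[:k]
    # Renormalize the selected scores so the mixing weights sum to 1.
    weights = softmax([gate_scores[i] for i in top])
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top

# Four stand-in "experts"; only two of them run for a given input.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
output, used = route_top_k(3.0, gate_scores=[0.1, 2.0, -1.0, 2.0], experts=experts, k=2)
print(used, output)
```

The point of the sketch is that the two unselected experts are never called, which is where the compute savings of sparse activation come from.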

3. Distilled Models

To provide more accessible options, DeepSeek offers distilled versions of its models, such as DeepSeek-R1-Distill-Qwen-7B. These models are smaller but retain much of the reasoning and coding capability of their larger counterparts. For instance, DeepSeek-R1-Distill-Qwen-7B is based on the Qwen 2.5 architecture and has been fine-tuned on reasoning data generated by DeepSeek-R1, achieving strong performance in mathematical reasoning and general problem-solving tasks.

How to Access DeepSeek on Clarifai

DeepSeek models can be accessed on Clarifai in three ways: through the Clarifai Playground UI, through the OpenAI-compatible API, or with the Clarifai SDK. Each method provides a different level of control and flexibility, allowing you to experiment, integrate, and deploy models according to your development workflow.

Clarifai Playground

The Playground provides a fast, interactive environment to test prompts and explore model behavior.

You can select any DeepSeek model, including DeepSeek-R1, DeepSeek-V3.1, or the distilled versions available in the community. You can enter prompts, adjust parameters such as temperature and streaming, and immediately see the model's responses. The Playground also lets you compare multiple models side by side to test and evaluate their responses.

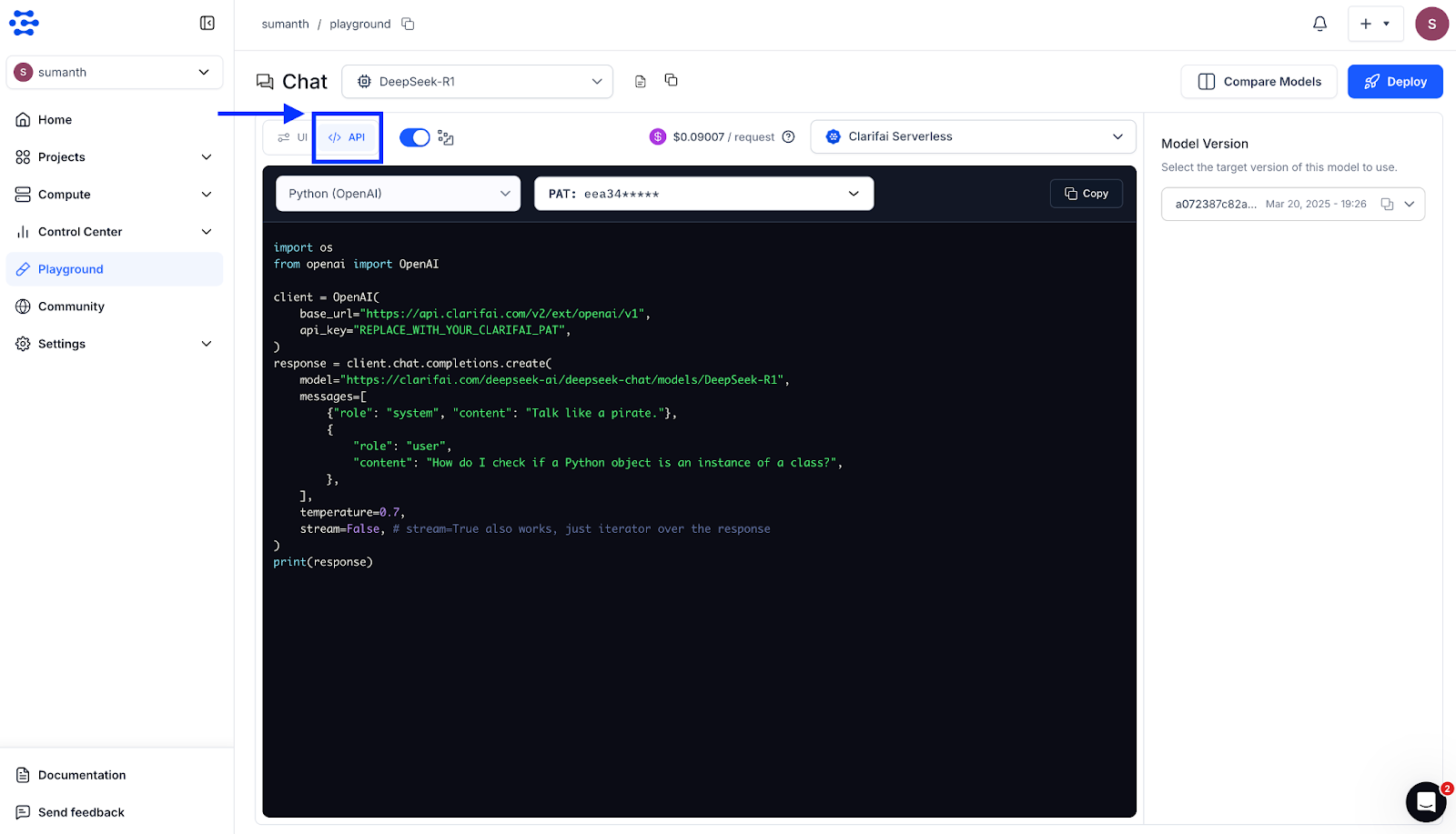

Within the Playground itself, you can open the API section to access code snippets in multiple languages, including cURL, Java, JavaScript, Node.js, the OpenAI-compatible API, the Clarifai Python SDK, PHP, and more.

You can select the language you need, copy the snippet, and integrate it directly into your applications. For more details on testing models and using the Playground, see the Clarifai Playground Quickstart.

Try it: The Clarifai Playground is the fastest way to test prompts. Navigate to the model page and click "Test in Playground".

Via the OpenAI-Compatible API

Clarifai provides a drop-in replacement for the OpenAI API, allowing you to use the same Python or TypeScript client libraries you are familiar with while pointing them at Clarifai's OpenAI-compatible endpoint. Once your PAT is set as an environment variable, you can call any Clarifai-hosted DeepSeek model by specifying the model URL.

Python Example

import os
from openai import OpenAI

# Point the OpenAI client at Clarifai's OpenAI-compatible endpoint
client = OpenAI(
    base_url="https://api.clarifai.com/v2/ext/openai/v1",
    api_key=os.environ["CLARIFAI_PAT"],
)

# The model is identified by its Clarifai model URL
response = client.chat.completions.create(
    model="https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-R1",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Tell me a three sentence bedtime story about a unicorn."},
    ],
    max_completion_tokens=100,
    temperature=0.7,
)

print(response.choices[0].message.content)

TypeScript Example

import OpenAI from "openai";

// Point the OpenAI client at Clarifai's OpenAI-compatible endpoint
const client = new OpenAI({
  baseURL: "https://api.clarifai.com/v2/ext/openai/v1",
  apiKey: process.env.CLARIFAI_PAT,
});

const response = await client.chat.completions.create({
  model: "https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-R1",
  messages: [
    { role: "system", content: "You are a helpful assistant." },
    { role: "user", content: "Who are you?" },
  ],
});

console.log(response.choices?.[0]?.message.content);

Clarifai Python SDK

Clarifai's Python SDK simplifies authentication and model calls, allowing you to interact with DeepSeek models using concise Python code. After setting your PAT, you can initialize a model client and make predictions with just a few lines.

import os
from clarifai.client import Model

# Initialize a model client from the Clarifai model URL
model = Model(
    url="https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-V3_1",
    pat=os.environ["CLARIFAI_PAT"],
)

# Send a prompt with sampling parameters; thinking mode is turned off here
response = model.predict(
    prompt="What is the future of AI?",
    max_tokens=512,
    temperature=0.7,
    top_p=0.95,
    thinking="False",
)

print(response)

Vercel AI SDK

For modern web applications, the Vercel AI SDK provides a TypeScript toolkit for interacting with Clarifai models. It supports the OpenAI-compatible provider, which makes integration straightforward.

import { createOpenAICompatible } from "@ai-sdk/openai-compatible";
import { generateText } from "ai";

// Create an OpenAI-compatible provider that targets Clarifai
const clarifai = createOpenAICompatible({
  name: "clarifai", // provider label expected by createOpenAICompatible
  baseURL: "https://api.clarifai.com/v2/ext/openai/v1",
  apiKey: process.env.CLARIFAI_PAT,
});

const model = clarifai("https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-R1");

const { text } = await generateText({
  model,
  messages: [
    { role: "system", content: "You are a helpful assistant." },
    { role: "user", content: "What is photosynthesis?" },
  ],
});

console.log(text);

This SDK also supports streaming responses, tool calling, and other advanced features.

In addition to the above, DeepSeek models can also be accessed via cURL, PHP, Java, and other languages. For a complete list of integration methods, supported providers, and advanced usage examples, refer to the documentation.

Advanced Inference Patterns

DeepSeek models on Clarifai support advanced inference features that make them suitable for production-grade workloads. You can enable streaming for low-latency responses and tool calling to let the model interact dynamically with external systems or APIs. Both capabilities work through Clarifai's OpenAI-compatible API.

Streaming Responses

Streaming returns model output token by token, improving responsiveness in real-time applications such as chat interfaces or dashboards. The example below shows how to stream responses from a DeepSeek model hosted on Clarifai.

import os
from openai import OpenAI

# Initialize the OpenAI-compatible client for Clarifai
client = OpenAI(
    base_url="https://api.clarifai.com/v2/ext/openai/v1",
    api_key=os.environ["CLARIFAI_PAT"],
)

# Create a chat completion request with streaming enabled
response = client.chat.completions.create(
    model="https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-V3_1",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Explain how transformers work in simple terms."},
    ],
    max_completion_tokens=150,
    temperature=0.7,
    stream=True,
)

print("Assistant's Response:")

# Print each piece of text as soon as it arrives
for chunk in response:
    if chunk.choices and chunk.choices[0].delta and chunk.choices[0].delta.content is not None:
        print(chunk.choices[0].delta.content, end="")

print("\n")

Streaming lets you render partial responses as they arrive instead of waiting for the entire output, which reduces perceived latency.
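In practice you often want both the live output and the complete text afterward, for example to store it in chat history. The helper below is a minimal sketch of that pattern. `collect_stream` and `fake_chunk` are hypothetical names, and the stand-in chunks only mimic the `choices[0].delta.content` shape used in the loop above so the snippet runs without a network call.

```python
from types import SimpleNamespace

def collect_stream(chunks, on_token=None):
    """Join streamed delta text into one string, optionally echoing each piece."""
    parts = []
    for chunk in chunks:
        # Some chunks carry no choices or no text (for example, the final one).
        if not chunk.choices:
            continue
        delta = chunk.choices[0].delta
        text = getattr(delta, "content", None)
        if text is None:
            continue
        if on_token is not None:
            on_token(text)
        parts.append(text)
    return "".join(parts)

def fake_chunk(text):
    """Build a stand-in object shaped like a streamed chat completion chunk."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])

# Stand-in stream; with a real client, pass the stream=True response instead.
stream = [
    fake_chunk("Hello"),
    fake_chunk(None),
    SimpleNamespace(choices=[]),
    fake_chunk(", world"),
]
full_text = collect_stream(stream, on_token=lambda t: print(t, end="", flush=True))
print()
```

Passing a callback keeps the rendering concern (printing, pushing to a websocket, and so on) separate from the accumulation logic.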

Tool Calling

Tool calling enables a model to invoke external functions during inference, which is especially useful for building AI agents that interact with APIs, fetch live data, or perform dynamic reasoning. DeepSeek-V3.1 supports tool calling, allowing your agents to make context-aware decisions. Below is an example of defining and using a tool with a DeepSeek model.

import os
from openai import OpenAI

# Initialize the OpenAI-compatible client for Clarifai
client = OpenAI(
    base_url="https://api.clarifai.com/v2/ext/openai/v1",
    api_key=os.environ["CLARIFAI_PAT"],
)

# Define a simple function the model can call
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Returns the current temperature for a given location.",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "City and country, for example 'New York, USA'",
                    }
                },
                "required": ["location"],
                "additionalProperties": False,
            },
        },
    }
]

# Create a chat completion request with tool calling enabled
response = client.chat.completions.create(
    model="https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-V3_1",
    messages=[
        {"role": "user", "content": "What is the weather like in New York today?"}
    ],
    tools=tools,
    tool_choice="auto",
)

# Print the tool call proposed by the model
tool_calls = response.choices[0].message.tool_calls
print("Tool calls:", tool_calls)
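The request above only returns the tool call the model proposes; your code still has to run the function and send the result back. The sketch below shows one way to handle that dispatch step. The `get_weather` body, the `TOOL_REGISTRY` mapping, and `run_tool_calls` are hypothetical, and the stand-in tool call mimics the `id`, `function.name`, and `function.arguments` fields of an OpenAI-style response so the snippet runs offline.

```python
import json
from types import SimpleNamespace

def get_weather(location):
    """Hypothetical tool body; a real one would query a weather service."""
    return {"location": location, "temperature_c": 21}

# Maps tool names from the schema to local Python callables.
TOOL_REGISTRY = {"get_weather": get_weather}

def run_tool_calls(tool_calls):
    """Execute each proposed call and build `tool` messages for the follow-up request."""
    messages = []
    for call in tool_calls or []:
        func = TOOL_REGISTRY.get(call.function.name)
        if func is None:
            result = {"error": f"unknown tool: {call.function.name}"}
        else:
            # Arguments arrive as a JSON string, not a dict.
            args = json.loads(call.function.arguments or "{}")
            result = func(**args)
        messages.append({
            "role": "tool",
            "tool_call_id": call.id,
            "content": json.dumps(result),
        })
    return messages

# Stand-in for response.choices[0].message.tool_calls
fake_call = SimpleNamespace(
    id="call_1",
    function=SimpleNamespace(
        name="get_weather",
        arguments='{"location": "New York, USA"}',
    ),
)
tool_messages = run_tool_calls([fake_call])
print(tool_messages)
```

To complete the round trip, append the assistant message that contained the tool calls, followed by these `tool` messages, to the conversation and call `client.chat.completions.create` again so the model can write its final answer.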

For more advanced inference patterns, including multi-turn reasoning, structured output generation, and extended examples of streaming and tool calling, refer to the documentation.

Which DeepSeek Model Should I Choose?

Clarifai hosts multiple DeepSeek variants. Choosing the right one depends on your task:

- DeepSeek-R1: use for reasoning and complex code. It excels at mathematical proofs, algorithm design, debugging, and logical inference. Expect slower responses and higher token usage because of its extended "thinking mode."

- DeepSeek-V3.1: use for general chat and lightweight coding. V3.1 is a hybrid: it can switch between non-thinking mode (faster, cheaper) and thinking mode (deeper reasoning) within a single model. Ideal for summarization, Q&A, and everyday assistant tasks.

- Distilled models (R1-Distill-Qwen-7B, etc.): smaller versions of the base models that offer lower latency and cost with slightly reduced reasoning depth. Use them when speed matters more than maximum performance.
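The guidance above can be captured in a small lookup so the choice lives in one place. This is only a sketch: the task labels and the `pick_model` helper are made up for illustration, and the two URLs are the ones used in the earlier examples. No distilled-model URL appears in this guide, so that entry is left out and would need to be copied from the model's page on Clarifai.

```python
R1_URL = "https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-R1"
V3_1_URL = "https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-V3_1"

# Hypothetical task labels mapped to the model suggested for each.
MODEL_BY_TASK = {
    "math": R1_URL,
    "debugging": R1_URL,
    "algorithm_design": R1_URL,
    "chat": V3_1_URL,
    "summarization": V3_1_URL,
    "qa": V3_1_URL,
}

def pick_model(task):
    """Return the model URL for a task label, defaulting to V3.1 for general use."""
    return MODEL_BY_TASK.get(task, V3_1_URL)

print(pick_model("math"))
print(pick_model("summarization"))
```

The returned string can be passed directly as the `model` argument in the OpenAI-compatible examples.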

At the time of writing, DeepSeek-OCR has just been announced and is not yet available on Clarifai. Keep an eye on Clarifai's model catalog for updates.

Frequently Asked Questions (FAQs)

Q1: Do I need a DeepSeek API key?

No. When using Clarifai, you only need a Clarifai Personal Access Token. Do not use or expose a DeepSeek API key unless you are calling DeepSeek directly (which this guide does not cover).

Q2: How do I switch between models in code?

Change the model value to the Clarifai URL of the model you want, such as https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-R1 for R1 or https://clarifai.com/deepseek-ai/deepseek-chat/models/DeepSeek-V3_1 for V3.1, as in the examples above.

Q3: What parameters can I tweak?

You can adjust temperature, top_p, and max_tokens to control randomness, sampling breadth, and output length. For streaming responses, set stream=True. Tool calling requires defining a tool schema.

Q4: Are there rate limits?

Clarifai enforces soft rate limits per PAT. Implement exponential backoff and avoid retrying 4XX errors. For high throughput, contact Clarifai to increase quotas.
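A retry wrapper along these lines is one way to apply that advice. It is a sketch under assumptions: `call_with_backoff` and `ApiError` are hypothetical names, and treating HTTP 429 and 5XX as retryable while failing fast on every other 4XX code is a common convention rather than documented Clarifai behavior.

```python
import random
import time

class ApiError(Exception):
    """Hypothetical error type carrying an HTTP status code."""
    def __init__(self, status_code):
        super().__init__(f"HTTP {status_code}")
        self.status_code = status_code

def is_retryable(status_code):
    # Assumption: 429 (rate limited) and 5XX are transient; other 4XX are caller errors.
    return status_code == 429 or 500 <= status_code < 600

def call_with_backoff(func, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call func(), retrying transient failures with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return func()
        except ApiError as err:
            last_attempt = attempt == max_attempts - 1
            if last_attempt or not is_retryable(err.status_code):
                raise
            # Delay doubles each attempt; jitter spreads out simultaneous retries.
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)

# Demo with a stand-in request that is rate limited twice, then succeeds.
attempts = {"count": 0}

def flaky_request():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ApiError(429)
    return "ok"

delays = []  # record delays instead of actually sleeping
result = call_with_backoff(flaky_request, sleep=delays.append)
print(result, attempts["count"], len(delays))
```

With the OpenAI Python client, the same wrapper could catch the client's status-code exception instead of `ApiError`; note that the client also retries some failures on its own, controlled by its `max_retries` setting.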

Q5: Is my data secure?

Clarifai processes requests in secure environments and complies with major data-protection standards. Store your PAT securely and avoid including sensitive data in prompts unless necessary.

Q6: Can I fine-tune DeepSeek models?

DeepSeek models are MIT-licensed. Clarifai plans to offer private hosting and fine-tuning for enterprise customers in the near future. Until then, you can download and fine-tune the open-source models on your own infrastructure.

Conclusion

You now have a fast, standard way to integrate DeepSeek models, including R1, V3.1, and distilled variants, into your applications. Clarifai handles all infrastructure, scaling, and orchestration. No separate DeepSeek key or complex setup is required. Try the models today through the Clarifai Playground or API and integrate them into your applications.