In modern enterprises, the exponential growth of data means organizational knowledge is distributed across multiple formats, ranging from structured data stores such as data warehouses to multi-format data stores like data lakes. Information is often redundant, and analyzing it requires combining data across multiple formats, including written documents, streamed data feeds, audio and video. This makes gathering information for decision making a challenge. Employees are unable to quickly and efficiently search for the information they need, or to collate results across formats. A “Knowledge Management System” (KMS) allows businesses to collate this information in one place, but not necessarily to search through it accurately.

Meanwhile, ChatGPT has led to a surge of interest in using Generative AI (GenAI) to address this problem. Customizing Large Language Models (LLMs) is a great way for businesses to implement “AI”; LLMs are invaluable to both businesses and their employees in helping to contextualize organizational knowledge.

However, training models requires huge hardware resources, significant budgets and specialist teams. A number of technology vendors offer API-based services, but there are doubts around security and transparency, with concerns spanning ethics, user experience and data privacy.

Open LLMs, i.e. models whose code and datasets have been shared with the community, have been a game changer in enabling enterprises to adapt LLMs. However, pre-trained LLMs tend to perform poorly on enterprise-specific information searches. Additionally, organizations need to evaluate the performance of these LLMs in order to improve them over time. These two factors have led to the development of an ecosystem of tooling for managing LLM interactions (e.g. LangChain) and LLM evaluations (e.g. TruLens), but this tooling can be much more complex to manage at enterprise level.

The Solution

The Cloudera platform provides enterprise-grade machine learning and, combined with Ollama, an open source tool for running LLMs locally, offers an easy path to building a customized KMS with the familiar ChatGPT style of querying. The interface allows accurate, business-wide querying that is fast and easy to scale, with access to the data sets provided by Cloudera’s platform.

The business context for this KMS can be provided by Retrieval-Augmented Generation (RAG), which helps contextualize LLMs to a specific domain. This keeps the responses from a KMS specific and helps avoid vague or fabricated responses, known as hallucinations.
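RAG works in two steps: retrieve passages relevant to the question from the organization’s own data, then add them to the prompt so the LLM answers from that context. The sketch below shows the retrieve-and-augment half using only the Python standard library. It is a simplified illustration, not the implementation behind the application shown here: the bag-of-words cosine similarity stands in for the embedding model and vector database (such as ChromaDB) a real KMS would use, and the function names and documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that is not a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    # Augment the user's question with the retrieved passages.
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n"
        f"Context:\n{joined}\n\nQuestion: {query}"
    )


# Hypothetical internal documents standing in for a company knowledge base.
docs = [
    "Expense claims must be submitted within 30 days of purchase.",
    "The office canteen opens at 8am on weekdays.",
    "Travel above the approved budget needs manager sign-off.",
]
question = "How many days do I have to submit expense claims?"
prompt = build_prompt(question, retrieve(question, docs, k=1))
print(prompt)
```

The resulting prompt would then be sent to the LLM (for example llama3 served by Ollama) for the generation step. Instructing the model to answer only from the supplied context is what ties its response to the organization’s data rather than to whatever it absorbed during pre-training.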

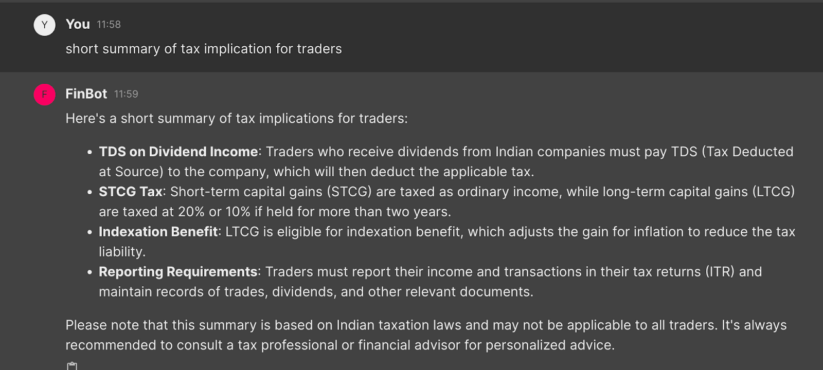

The image above shows a KMS built using the llama3 model from Meta. This application is contextualized to finance in India. In the image, the KMS explains that the summary is based on Indian taxation laws, even though the user has not explicitly asked for an answer related to India. This contextualization is possible thanks to RAG.

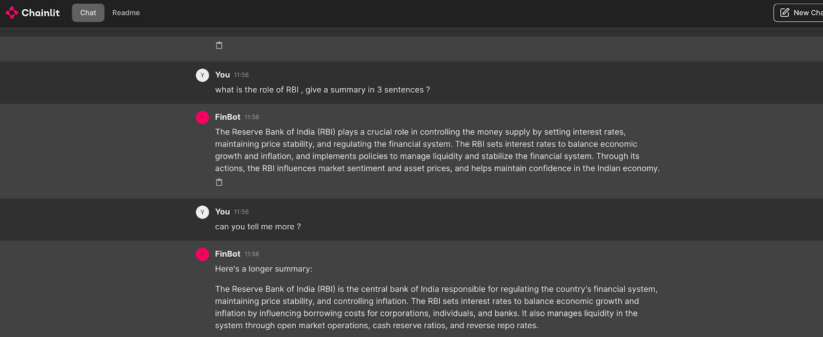

Ollama provides optimization and extensibility to easily set up private, self-hosted LLMs, thereby addressing enterprise security and privacy needs. With just a few lines of code, developers can integrate other frameworks in the GenAI ecosystem, such as LangChain and LlamaIndex for prompt framing, vector databases such as ChromaDB or Pinecone, and evaluation frameworks such as TruLens. GenAI-specific frameworks such as Chainlit also allow these applications to be “smart” by retaining memory between questions.

In the image above, the application first produces a summary and then understands the follow-up question “can you tell me more” by remembering what it answered earlier.
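Memory between questions usually comes down to resending the conversation history with each new question. The sketch below shows that idea against Ollama’s REST chat endpoint (`POST /api/chat`), assuming Ollama is running locally on its default port 11434 with the `llama3` model pulled. The `ChatSession` class is hypothetical; frameworks such as Chainlit or LangChain provide their own memory abstractions, which this does not reproduce.

```python
import json
import urllib.request
from typing import Optional

# Assumption: a local Ollama server on its default port.
OLLAMA_URL = "http://localhost:11434/api/chat"


class ChatSession:
    """Hypothetical helper that keeps message history so follow-ups have context."""

    def __init__(self, model: str = "llama3", system: Optional[str] = None):
        self.model = model
        self.messages: list[dict] = []
        if system:
            self.messages.append({"role": "system", "content": system})

    def build_payload(self, question: str) -> dict:
        # Send the full history plus the new question on every turn;
        # this is what lets the model resolve "can you tell me more".
        return {
            "model": self.model,
            "messages": self.messages + [{"role": "user", "content": question}],
            "stream": False,  # ask for one complete JSON response
        }

    def ask(self, question: str) -> str:
        payload = self.build_payload(question)
        req = urllib.request.Request(
            OLLAMA_URL,
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
        )
        with urllib.request.urlopen(req) as resp:
            reply = json.loads(resp.read())["message"]
        # Record both sides of the exchange so the next turn sees them.
        self.messages.append({"role": "user", "content": question})
        self.messages.append(reply)
        return reply["content"]
```

With a server running, calling `session.ask("Summarize the policy.")` followed by `session.ask("Can you tell me more?")` sends the first question and answer along with the second, so the model can interpret the follow-up. The trade-off is that the prompt grows with every turn, which is why production frameworks often truncate or summarize older history.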

However, the question remains: how do we evaluate the performance of our GenAI application and control hallucinated responses?

Traditionally, models are measured by comparing predictions with reality, also known as “ground truth.” For example, if my weather prediction model predicted that it would rain today and it did rain, a human can evaluate the result and say that the prediction matched the ground truth. For GenAI models operating in private environments and at scale, such human evaluations can be impractical or impossible.
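For categorical predictions like the weather example, comparing against ground truth is mechanical: count the exact matches. The sketch below uses a hypothetical week of forecasts to illustrate this. It also shows why the approach breaks down for GenAI: a free-text answer rarely matches a reference string exactly, and in a private KMS there is often no reference answer at all.

```python
def accuracy(predictions: list[str], ground_truth: list[str]) -> float:
    # Fraction of predictions that exactly match the observed outcome.
    if not ground_truth or len(predictions) != len(ground_truth):
        raise ValueError("need equal-length, non-empty predictions and ground truth")
    matches = sum(p == t for p, t in zip(predictions, ground_truth))
    return matches / len(ground_truth)


# Hypothetical week of forecasts versus what actually happened.
forecast = ["rain", "sun", "rain", "sun", "sun", "rain", "sun"]
observed = ["rain", "sun", "sun", "sun", "sun", "rain", "rain"]
print(f"{accuracy(forecast, observed):.2f}")  # 5 of 7 match → 0.71
```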

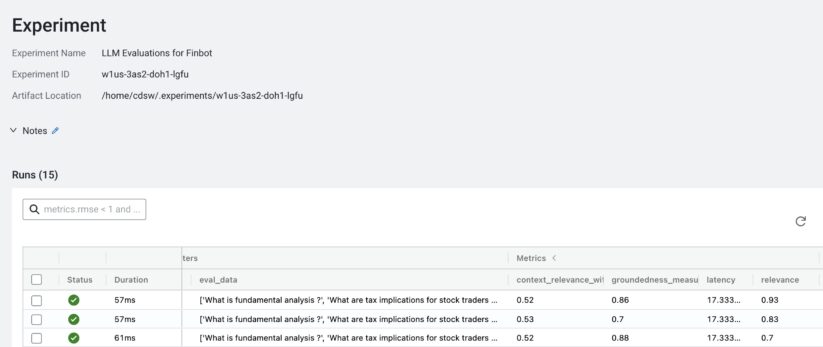

Open source evaluation frameworks, such as TruLens, provide different metrics for evaluating LLMs. Based on the question asked, the GenAI application is scored on answer relevance, context relevance and groundedness. TruLens therefore provides a way to apply metrics in order to evaluate and improve a KMS.

The image above shows the earlier metrics being saved in the Cloudera platform for LLM performance evaluation.
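To make the groundedness metric more concrete, the sketch below scores how much of an answer is supported by the retrieved context. It is a crude lexical proxy chosen for illustration only: TruLens computes its scores with its own feedback functions (often LLM-based), and this code does not reproduce them. The stopword list, the 0.5 threshold and the function names are all hypothetical.

```python
import re

# Hypothetical, deliberately small stopword list.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "in", "and", "or",
             "for", "on", "with", "be", "must", "it", "this", "that"}


def content_words(text: str) -> set[str]:
    # Lowercased word tokens with common function words removed.
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def groundedness(answer: str, context: str, threshold: float = 0.5) -> float:
    """Fraction of answer sentences whose content words mostly appear in the context."""
    ctx = content_words(context)
    # Split the answer into sentences at ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = content_words(sentence)
        if words and len(words & ctx) / len(words) >= threshold:
            supported += 1
    return supported / len(sentences)


context = "Expense claims must be submitted within 30 days of purchase."
answer = "Expense claims must be submitted within 30 days. Late claims earn a bonus."
print(groundedness(answer, context))  # first sentence supported, second not → 0.5
```

The unsupported second sentence is exactly the kind of hallucination such a metric is meant to flag. Tracking scores like this over time, as in the image above, is what makes it possible to measure whether changes to retrieval or prompts actually improve the KMS.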

With the Cloudera platform, businesses can build AI applications powered by open source LLMs of their choice. The Cloudera platform also provides scalability, allowing growth from proof of concept to deployment for a large number of users and data sets. AI is democratized through cross-functional user access, meaning robust machine learning on hybrid platforms can be accessed securely by many people throughout the enterprise.

Ultimately, Ollama and Cloudera provide enterprise-grade access to localized LLMs, making it possible to scale GenAI applications and build robust Knowledge Management Systems.

Find out more about Cloudera and Ollama on GitHub, or sign up for Cloudera’s limited-time “Quick Start” package here.