In this tutorial, we walk through building a compact but fully functional Cipher-based workflow. We start by securely capturing our Gemini API key in the Colab UI without exposing it in code. We then implement a dynamic LLM selection function that automatically switches between OpenAI, Gemini, or Anthropic based on which API key is available. The setup phase ensures Node.js and the Cipher CLI are installed. After that, we programmatically generate a cipher.yml configuration to enable a memory agent with long-term recall. We create helper functions to run Cipher commands directly from Python, store key project decisions as persistent memories, and retrieve them on demand. Finally, we spin up Cipher in API mode for external integration. Check out the FULL CODES here.

```python
import os, getpass

# Prompt for the key without echoing it, so it never appears in the notebook source.
os.environ["GEMINI_API_KEY"] = getpass.getpass("Enter your Gemini API key: ").strip()

import subprocess, tempfile, pathlib, textwrap, time, requests, shlex

def choose_llm():
    # Return (provider, model, name of the env var holding the key) for the first key found.
    if os.getenv("OPENAI_API_KEY"):
        return "openai", "gpt-4o-mini", "OPENAI_API_KEY"
    if os.getenv("GEMINI_API_KEY"):
        return "gemini", "gemini-2.5-flash", "GEMINI_API_KEY"
    if os.getenv("ANTHROPIC_API_KEY"):
        return "anthropic", "claude-3-5-haiku-20241022", "ANTHROPIC_API_KEY"
    raise RuntimeError("Set one API key before running.")
```

We start by entering our Gemini API key with getpass so it stays hidden in the Colab UI. We then define a choose_llm() function that checks our environment variables and automatically selects the appropriate LLM provider, model, and key variable based on what is available. The checks run in a fixed order, so if OPENAI_API_KEY is also set, OpenAI takes priority over Gemini.
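Because choose_llm() reads os.environ directly, its priority order is awkward to test without changing real environment variables. The following is a minimal sketch, assuming we are free to refactor: the environment is passed in as a mapping and the providers live in an ordered table. The names PROVIDERS and choose_llm_from are hypothetical and are not part of Cipher.

```python
from typing import Mapping, Tuple

# Same priority order as choose_llm(): OpenAI, then Gemini, then Anthropic.
PROVIDERS = [
    ("openai", "gpt-4o-mini", "OPENAI_API_KEY"),
    ("gemini", "gemini-2.5-flash", "GEMINI_API_KEY"),
    ("anthropic", "claude-3-5-haiku-20241022", "ANTHROPIC_API_KEY"),
]

def choose_llm_from(env: Mapping[str, str]) -> Tuple[str, str, str]:
    """Return (provider, model, key_env) for the first provider whose key is set and non-empty."""
    for provider, model, key_env in PROVIDERS:
        if env.get(key_env):  # an empty string counts as "not set", like os.getenv() in choose_llm()
            return provider, model, key_env
    raise RuntimeError("Set one API key before running.")

print(choose_llm_from({"GEMINI_API_KEY": "x", "ANTHROPIC_API_KEY": "y"}))
```

In the notebook, calling `choose_llm_from(os.environ)` behaves like the original function. Adding a provider then means adding one row to the table.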

```python
def run(cmd, check=True, env=None):
    # Echo the command, run it through the shell, and show everything it printed.
    print("▸", cmd)
    p = subprocess.run(cmd, shell=True, text=True, capture_output=True, env=env)
    if p.stdout:
        print(p.stdout)
    if p.stderr:
        print(p.stderr)
    if check and p.returncode != 0:
        raise RuntimeError(f"Command failed: {cmd}")
    return p
```

We create a run() helper that executes shell commands and prints both stdout and stderr for visibility. When check is enabled, it raises an error if the command fails. This makes our workflow execution more transparent and reliable.
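run() passes its command string to a shell, which is convenient for chaining (`&&`) but means quoting mistakes become shell bugs. The following is a sketch of a shell-free alternative, assuming commands can be expressed as argument lists. It uses the standard library's `CompletedProcess.check_returncode()`, which raises `subprocess.CalledProcessError` instead of a bare RuntimeError. The name run_argv is hypothetical.

```python
import shlex
import subprocess
import sys
from typing import Sequence, Union

def run_argv(cmd: Union[str, Sequence[str]], check: bool = True) -> subprocess.CompletedProcess:
    """Run a command without a shell. A string is split the way a POSIX shell would split it."""
    argv = shlex.split(cmd) if isinstance(cmd, str) else list(cmd)
    print(">", shlex.join(argv))
    p = subprocess.run(argv, text=True, capture_output=True)
    if p.stdout:
        print(p.stdout)
    if p.stderr:
        print(p.stderr)
    if check:
        # Raises subprocess.CalledProcessError (carrying returncode, stdout, stderr) on failure.
        p.check_returncode()
    return p

result = run_argv([sys.executable, "-c", "print('hello')"])
```

The trade-off is that without a shell, operators like `&&` and pipes are not interpreted. A chained command such as the apt-get line in ensure_node_and_cipher() would need two separate calls.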

```python
def ensure_node_and_cipher():
    # check=False: an apt-get failure is printed but does not stop the workflow.
    run("sudo apt-get update -y && sudo apt-get install -y nodejs npm", check=False)
    # The npm install must succeed (check defaults to True).
    run("npm install -g @byterover/cipher")
```

We define ensure_node_and_cipher() to install Node.js, npm, and the Cipher CLI globally. This ensures our environment has all the required dependencies before we run any Cipher-related commands.
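Re-running the notebook repeats the apt-get and npm installs every time. The following is a small sketch, assuming we only want to skip installation when the executables are already on PATH. It uses the standard library's `shutil.which`. It checks presence only, not versions. The helper name missing_tools is hypothetical.

```python
import shutil
from typing import Iterable, List

def missing_tools(names: Iterable[str]) -> List[str]:
    """Return the executables from `names` that cannot be found on PATH."""
    return [name for name in names if shutil.which(name) is None]

needed = missing_tools(["node", "npm", "cipher"])
if needed:
    print("Would install:", needed)
else:
    print("Node.js, npm and cipher already available; skipping install.")
```

Inside ensure_node_and_cipher(), the two run() calls could be guarded with `if missing_tools([...])`, so only the absent pieces are installed.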

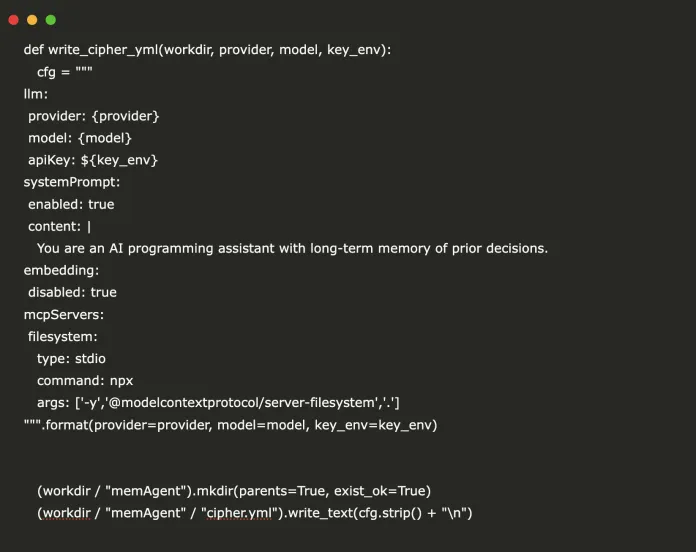

```python
def write_cipher_yml(workdir, provider, model, key_env):
    # "${key_env}" renders as e.g. "$GEMINI_API_KEY": the YAML references the
    # environment variable rather than embedding the secret itself.
    cfg = """
llm:
  provider: {provider}
  model: {model}
  apiKey: ${key_env}
systemPrompt:
  enabled: true
  content: |
    You are an AI programming assistant with long-term memory of prior decisions.
embedding:
  disabled: true
mcpServers:
  filesystem:
    type: stdio
    command: npx
    args: ['-y','@modelcontextprotocol/server-filesystem','.']
""".format(provider=provider, model=model, key_env=key_env)
    (workdir / "memAgent").mkdir(parents=True, exist_ok=True)
    (workdir / "memAgent" / "cipher.yml").write_text(cfg.strip() + "\n")
```

We implement write_cipher_yml() to generate a cipher.yml configuration file inside a memAgent folder. The function sets the chosen LLM provider, model, and API key variable. It also enables a system prompt describing the assistant's long-term memory, disables embeddings, and registers a filesystem MCP server for file operations.
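A detail worth checking in write_cipher_yml() is how `apiKey: ${key_env}` interacts with `str.format`. Only `{key_env}` is a format field. The `$` is literal, so the file receives a variable reference rather than the key itself. As the tutorial's config assumes, Cipher is expected to resolve that reference from the environment. The following trimmed-down sketch renders just the llm section to confirm this. The template name and render_llm_block are hypothetical.

```python
import pathlib
import tempfile
import textwrap

# Hypothetical trimmed-down template: only the llm section of cipher.yml.
LLM_TEMPLATE = textwrap.dedent("""\
    llm:
      provider: {provider}
      model: {model}
      apiKey: ${key_env}
    """)

def render_llm_block(provider: str, model: str, key_env: str) -> str:
    # str.format fills {key_env}; the "$" before it is literal text, so the
    # output references the variable (e.g. $GEMINI_API_KEY), not the secret.
    return LLM_TEMPLATE.format(provider=provider, model=model, key_env=key_env)

workdir = pathlib.Path(tempfile.mkdtemp(prefix="cipher_cfg_"))
cfg_path = workdir / "memAgent" / "cipher.yml"
cfg_path.parent.mkdir(parents=True, exist_ok=True)
cfg_path.write_text(render_llm_block("gemini", "gemini-2.5-flash", "GEMINI_API_KEY"))
print(cfg_path.read_text())
```

Keeping only the variable name in the file means the generated cipher.yml can be inspected or shared without leaking the key entered through getpass.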

```python
def cipher_once(text, env=None, cwd=None):
    # shlex.quote keeps the whole prompt a single shell argument.
    cmd = f'cipher {shlex.quote(text)}'
    p = subprocess.run(cmd, shell=True, text=True, capture_output=True, env=env, cwd=cwd)
    print("Cipher says:\n", p.stdout or p.stderr)
    return p.stdout.strip() or p.stderr.strip()
```

We define cipher_once() to run a single Cipher CLI command with the provided text. The function captures and displays the output and returns the response, letting us interact with Cipher programmatically from Python.
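The shlex.quote call in cipher_once() matters because our prompts contain semicolons. Unquoted, the shell would treat `;` as the end of the cipher command and try to run the rest as a separate command. The following sketch isolates the quoting step. It shows that splitting the result the way a POSIX shell would yields exactly two tokens. The helper name build_cipher_cmd is hypothetical.

```python
import shlex

def build_cipher_cmd(prompt: str) -> str:
    """Quote the prompt so the shell hands it to cipher as one argument."""
    return f"cipher {shlex.quote(prompt)}"

prompt = "Store decision: use pydantic for config validation; pytest fixtures for testing."
cmd = build_cipher_cmd(prompt)
print(cmd)
# A POSIX shell would see exactly two words: the program and the whole prompt.
print(shlex.split(cmd))
```

An equivalent design avoids the shell entirely by passing a list, `subprocess.run(["cipher", text], ...)`. In that case no quoting is needed at all.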

```python
def start_api(env, cwd):
    # Launch Cipher's API server in the background.
    proc = subprocess.Popen("cipher --mode api", shell=True, env=env, cwd=cwd,
                            stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True)
    # Poll the health endpoint: up to 30 attempts, one second apart.
    for _ in range(30):
        try:
            r = requests.get("http://127.0.0.1:3000/health", timeout=2)
            if r.ok:
                print("API /health:", r.text)
                break
        except requests.RequestException:
            pass  # server not accepting connections yet
        time.sleep(1)
    return proc
```

We create start_api() to launch Cipher in API mode as a subprocess. It then repeatedly polls the /health endpoint until the server responds, so the API server is ready before we continue. If the server never answers, the loop gives up after 30 attempts and returns the process anyway.
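start_api() does not tell its caller whether the health check ever succeeded. The following sketch makes the readiness wait explicit. It returns True or False and uses a wall-clock deadline. It relies only on the standard library (`urllib.request`) instead of requests, and a tiny local stub server stands in for Cipher. The `/health` path mirrors the tutorial's code. wait_for_health and the stub handler are hypothetical names.

```python
import threading
import time
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

def wait_for_health(url: str, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll `url` until it answers HTTP 200 or `timeout` seconds have passed."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=2) as resp:
                if resp.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            pass  # refused connection, timeout, or non-2xx status (HTTPError)
        time.sleep(interval)
    return False

class _StubHealth(BaseHTTPRequestHandler):
    """Stand-in for the Cipher API: 200 on /health, 404 elsewhere."""
    def do_GET(self):
        body = b"ok" if self.path == "/health" else b""
        self.send_response(200 if body else 404)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # keep the demo output quiet
        pass

server = ThreadingHTTPServer(("127.0.0.1", 0), _StubHealth)  # port 0 = any free port
threading.Thread(target=server.serve_forever, daemon=True).start()
port = server.server_address[1]
ready = wait_for_health(f"http://127.0.0.1:{port}/health", timeout=5)
missing = wait_for_health(f"http://127.0.0.1:{port}/missing", timeout=1, interval=0.2)
server.shutdown()
server.server_close()
print("ready:", ready, "missing:", missing)
```

Against the real server, one would call `wait_for_health("http://127.0.0.1:3000/health")` after Popen and decide what to do on False, for example terminating the process and raising an error.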

```python
def main():
    provider, model, key_env = choose_llm()
    ensure_node_and_cipher()
    workdir = pathlib.Path(tempfile.mkdtemp(prefix="cipher_demo_"))
    write_cipher_yml(workdir, provider, model, key_env)
    env = os.environ.copy()

    # Store two project decisions as memories, then ask Cipher to recall them.
    cipher_once("Store decision: use pydantic for config validation; pytest fixtures for testing.", env, str(workdir))
    cipher_once("Remember: follow conventional commits; enforce black + isort in CI.", env, str(workdir))
    cipher_once("What did we standardize for config validation and Python formatting?", env, str(workdir))

    # Start the API server briefly, then shut it down.
    api_proc = start_api(env, str(workdir))
    time.sleep(3)
    api_proc.terminate()

if __name__ == "__main__":
    main()
```

In main(), we select the LLM provider, install dependencies, and create a temporary working directory with a cipher.yml configuration. We then store key project decisions in Cipher's memory and query them back. Finally, we start the Cipher API server briefly before shutting it down, demonstrating both CLI-based and API-based interactions.
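main() ends with `api_proc.terminate()` and never waits for the process. The server may therefore still be shutting down, or may have ignored the signal, when the cell finishes. The following sketch shows a common teardown pattern using only standard `subprocess.Popen` methods. The pattern is to terminate, wait with a timeout, and fall back to kill. A sleeping Python child stands in for the Cipher server. The helper name stop_process is hypothetical.

```python
import subprocess
import sys

def stop_process(proc: subprocess.Popen, grace: float = 5.0) -> int:
    """Ask the process to exit, then force-kill it if it is still running after `grace` seconds."""
    if proc.poll() is None:          # still running
        proc.terminate()             # SIGTERM on POSIX
        try:
            proc.wait(timeout=grace)
        except subprocess.TimeoutExpired:
            proc.kill()              # SIGKILL on POSIX
            proc.wait()
    return proc.returncode

demo = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
print("exit code:", stop_process(demo, grace=2))
```

Because start_api() launches the server with `shell=True`, the signal may reach a `/bin/sh` wrapper rather than cipher itself, depending on the shell. Passing an argument list such as `["cipher", "--mode", "api"]` to Popen sidesteps that uncertainty.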

In conclusion, we now have a working Cipher environment that securely manages API keys, selects the appropriate LLM provider automatically, and configures a memory-enabled agent entirely through Python automation. Our implementation covers decision logging, memory retrieval, and a live API endpoint, all orchestrated in a notebook/Colab-friendly workflow. This makes the setup reusable for other AI-assisted development pipelines. We can store and query project knowledge programmatically while keeping the environment lightweight and easy to redeploy.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost. The platform stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. It boasts over 2 million monthly views, illustrating its popularity among audiences.