Introduction

Large Language Models, or LLMs, have been all the rage since the advent of ChatGPT in 2022. This is largely due to the success of the transformer architecture and the availability of terabytes' worth of text data on the internet. Despite their popularity, LLMs are fundamentally limited to working only with text.

A VLM is worth 1000 LLMs

Vision Language Models, or VLMs, are AI models that use both images and textual data to perform tasks that fundamentally need both of them. With how good LLMs have become, building quality VLMs has become the next logical step towards Artificial General Intelligence.

In this article, let's understand the fundamentals of VLMs with a focus on how to build one. Throughout this article, we will cover the latest papers in the research and will provide relevant links to the papers.

To give an overview, in the following sections we will cover the following topics:

- The applications of VLMs

- The historical background of VLMs, including their origins and the factors contributing to their rise.

- A taxonomy of different VLM architectures, with examples for each category.

- An overview of key components involved in training VLMs, including notable papers that applied these components.

- A review of datasets used to train various VLMs, highlighting what made them unique.

- Evaluation benchmarks used to test model performance, explaining why certain evaluations are important for specific applications.

- State-of-the-art VLMs in relation to these benchmarks.

- A section focusing on VLMs for document understanding and the leading models for extracting information from documents.

- Finally, we will conclude with key things to consider when picking a VLM for your use case.

A few disclaimers:

VLMs work with text and images, and there is a class of models called Image Generators that do the following:

- Image Generation from text/prompt: Generate images from scratch that follow a description

- Image Generation from text and image: Generate images that resemble a given image but are modified as per the description

While these are still technically considered VLMs, we will not be talking about them since the research involved is fundamentally different. Our coverage will be limited to VLMs that generate text as output.

There is another class of models, known as Multimodal LLMs (MLLMs). Although they sound similar to VLMs, MLLMs are a broader category that can work with various combinations of image, video, audio, and text modalities. In other words, VLMs are just a subset of MLLMs.

Finally, the figures for model architectures and benchmarks have been taken from the respective papers mentioned just before each figure.

Applications of VLMs

Here are some simple applications that only VLMs can solve:

Image Captioning: Automatically generate text describing the images

Dense Captioning: Generating multiple captions that focus on describing all the salient features/objects in the image

Instance Detection: Detecting objects with bounding boxes in an image

Visual Question Answering (VQA): Asking questions (text) and answering them (text) about an image

Image Retrieval or Text-to-Image discovery: Finding images that match a given text description (kind of the opposite of Image Captioning)

Zero-Shot Image Classification: The key difference between this task and regular image classification is that it aims to categorize new classes without requiring additional training.

Synthetic Data Generation: Given the capabilities of LLMs and VLMs, we've developed numerous ways to generate high-quality synthetic data by leveraging variations in the image and text outputs of these models. Exploring these methods alone could be enough for an entire thesis! This is usually done to train more capable VLMs for other tasks.
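Zero-shot classification shows how a pair of aligned image and text encoders is used at inference time. Each class name is turned into a text prompt, and the class whose prompt embedding is most similar to the image embedding wins, so no training on those classes is needed. Below is a minimal sketch. `encode_text` is a hypothetical stand-in for a real text encoder such as CLIP's, and the "a photo of a {}." template is the style of prompt popularized by CLIP.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def zero_shot_classify(image_embedding, class_names, encode_text, template="a photo of a {}."):
    """Return the class whose prompt embedding is closest to the image embedding.

    encode_text is a hypothetical stand-in for a text encoder aligned with the
    image encoder; the classes never need to appear in any training labels.
    """
    prompts = [template.format(name) for name in class_names]
    scores = [cosine(image_embedding, encode_text(p)) for p in prompts]
    best = max(range(len(class_names)), key=lambda i: scores[i])
    return class_names[best], scores


# Toy "text encoder": a lookup table of made-up prompt embeddings.
toy_text_embeddings = {
    "a photo of a cat.": [0.9, 0.1, 0.0],
    "a photo of a dog.": [0.1, 0.9, 0.0],
    "a photo of a car.": [0.0, 0.1, 0.9],
}
label, scores = zero_shot_classify([0.2, 0.95, 0.05], ["cat", "dog", "car"], toy_text_embeddings.get)
print(label)  # dog
```

Adding a new class only requires writing a new prompt. That is what separates this from a classifier with a fixed output layer.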

Variations of the above applications can be used in medical, industrial, educational, finance, e-commerce and many other domains where there are large volumes of images and text to work with. We have listed some examples below:

- Automating radiology report generation via dense image captioning in medical diagnostics.

- Defect detection in the manufacturing and automotive industries using zero-shot/few-shot image classification.

- Document retrieval in financial/legal domains.

- Image search in e-commerce can be turbocharged with VLMs by allowing search queries to be as nuanced as possible.

- Summarizing and answering questions based on diagrams in the education, research, legal and financial domains.

- Creating detailed descriptions of products and their specifications in e-commerce.

- Generic chatbots that can answer users' questions based on images.

- Assisting the visually impaired by describing their current scene and any text in it. VLMs can provide relevant contextual information about the user's environment, significantly improving their navigation and interaction with the world.

- Fraud detection in the journalism and finance industries by flagging suspicious articles.

History

The earliest VLMs appeared in the mid-2010s. Two of the most successful attempts were Show and Tell and Visual Question Answering. The reason these two papers succeeded is also the fundamental concept behind what makes a VLM work: facilitate effective communication between visual and textual representations by adjusting image embeddings from a visual backbone to make them compatible with a text backbone. The field as such never really took off, due to the lack of large amounts of data and of good architectures.

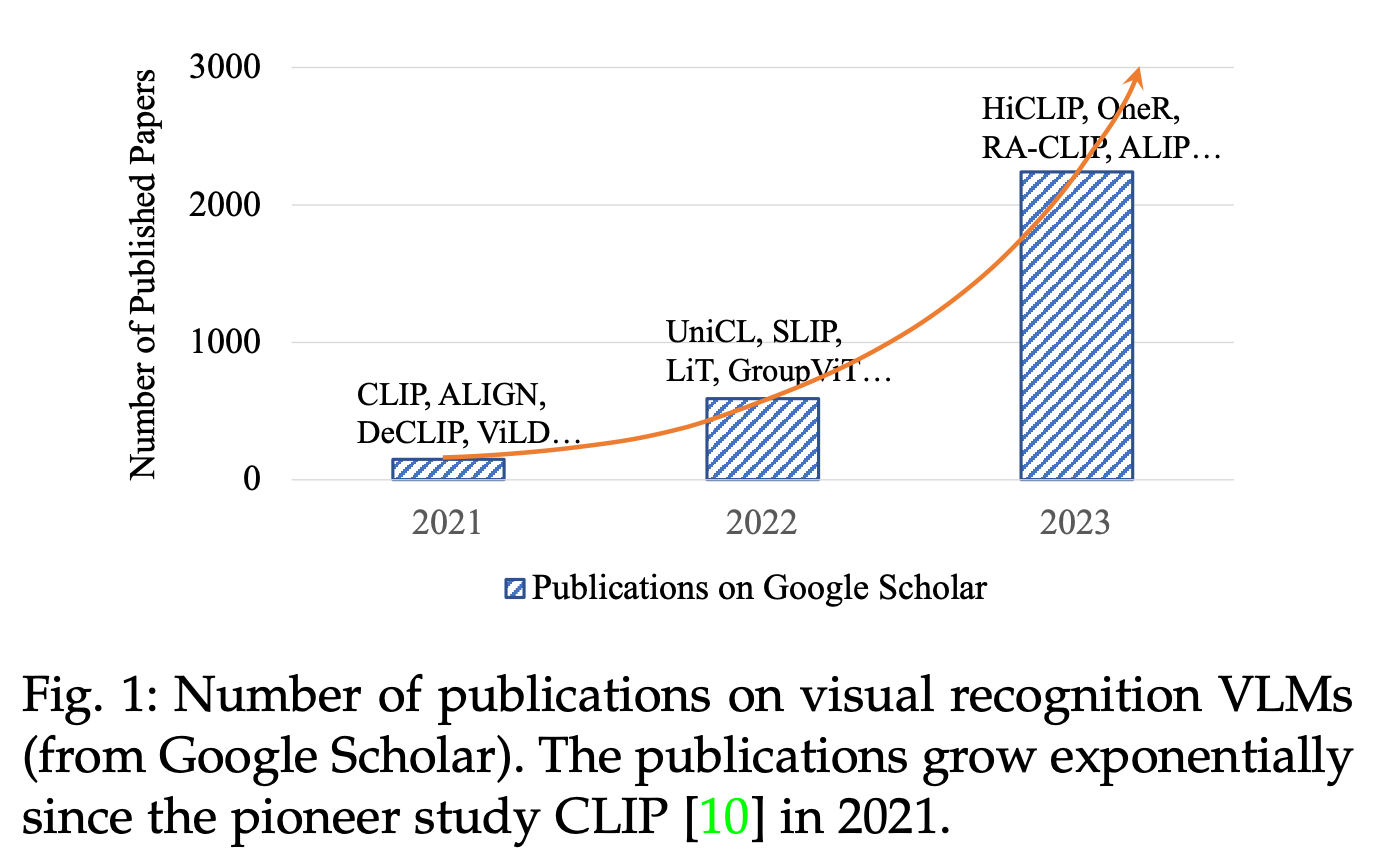

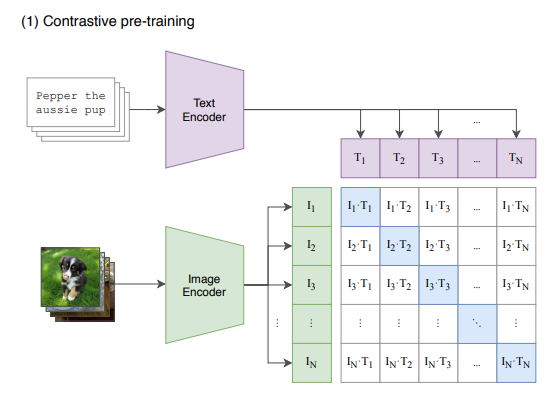

The first promising model of the 2020s was CLIP. It addressed the shortage of training data by leveraging the vast number of images on the internet that are accompanied by captions and alt-text. Unlike traditional supervised models, which treat each image-caption pair as a single data point, CLIP used contrastive learning to transform the problem into a one-image-to-many-texts comparison task. This approach effectively multiplied the number of data points to train on, enabling more effective training.
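To make the one-image-to-many-texts idea concrete, here is a minimal, dependency-free sketch of a CLIP-style symmetric contrastive (InfoNCE) loss over a batch of N image/text embedding pairs. Every image is scored against all N captions in the batch and vice versa, so each positive pair is contrasted with N-1 negatives in each direction. The function names, the fixed temperature of 0.07 and the toy vectors are illustrative assumptions. CLIP itself learns the temperature, and real implementations operate on GPU tensors.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cross_entropy(logits, target):
    """Numerically stable cross-entropy of one row of logits against a target index."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE: pair i is the positive, every other pair in the batch a negative."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine(image_i, text_j) / temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image -> text: each row is an N-way classification over the captions.
    loss_i2t = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text -> image: each column is an N-way classification over the images.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[0.9, 0.1], [0.1, 0.9]]
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]
print(clip_contrastive_loss(images, aligned_texts) < clip_contrastive_loss(images, swapped_texts))  # True
```

Minimizing this loss pulls matching pairs together and pushes every other pairing in the batch apart. This is why larger batches supply more negatives for free.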

When CLIP was released, the transformer architecture had already demonstrated its versatility and reliability across various domains, solidifying its status as a go-to choice for researchers. However, transformers were still mostly focused on text-related tasks. ViT was the landmark paper that proved transformers could also be used for image tasks. The release of LLMs, together with the promise of ViT, effectively paved the way for the modern VLMs that we know.

Here is an image showing the number of VLM publications over the past few years.

VLM Architectures

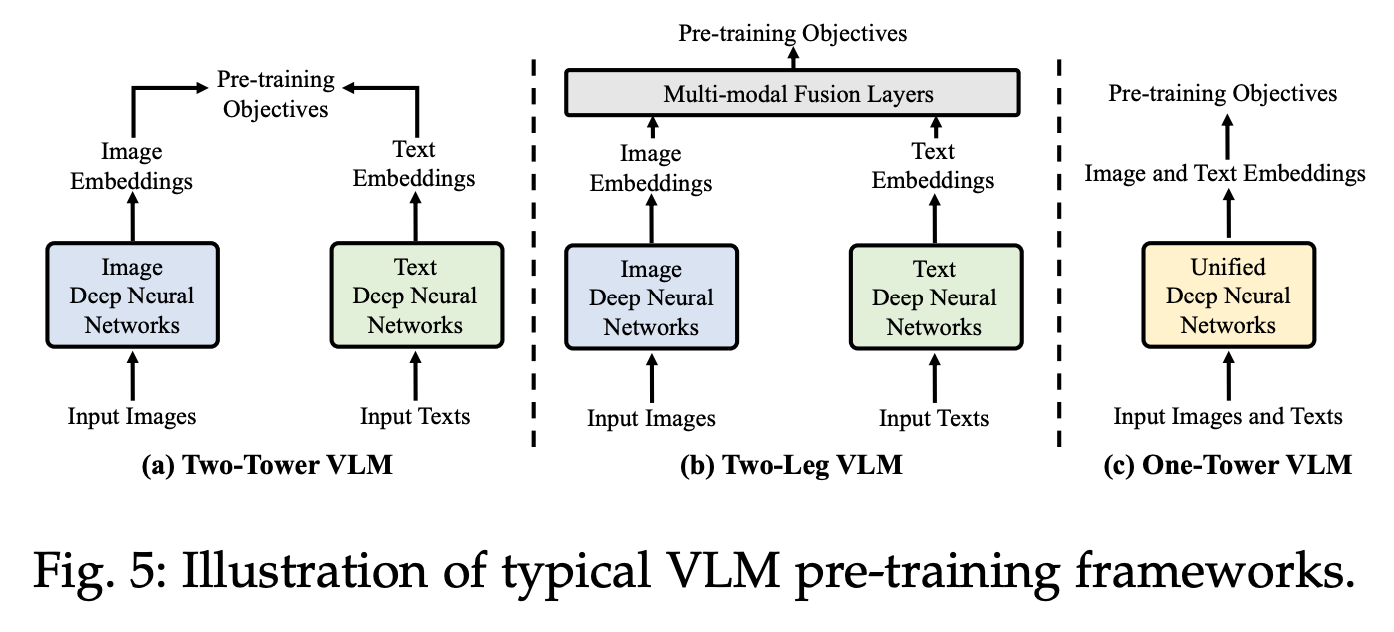

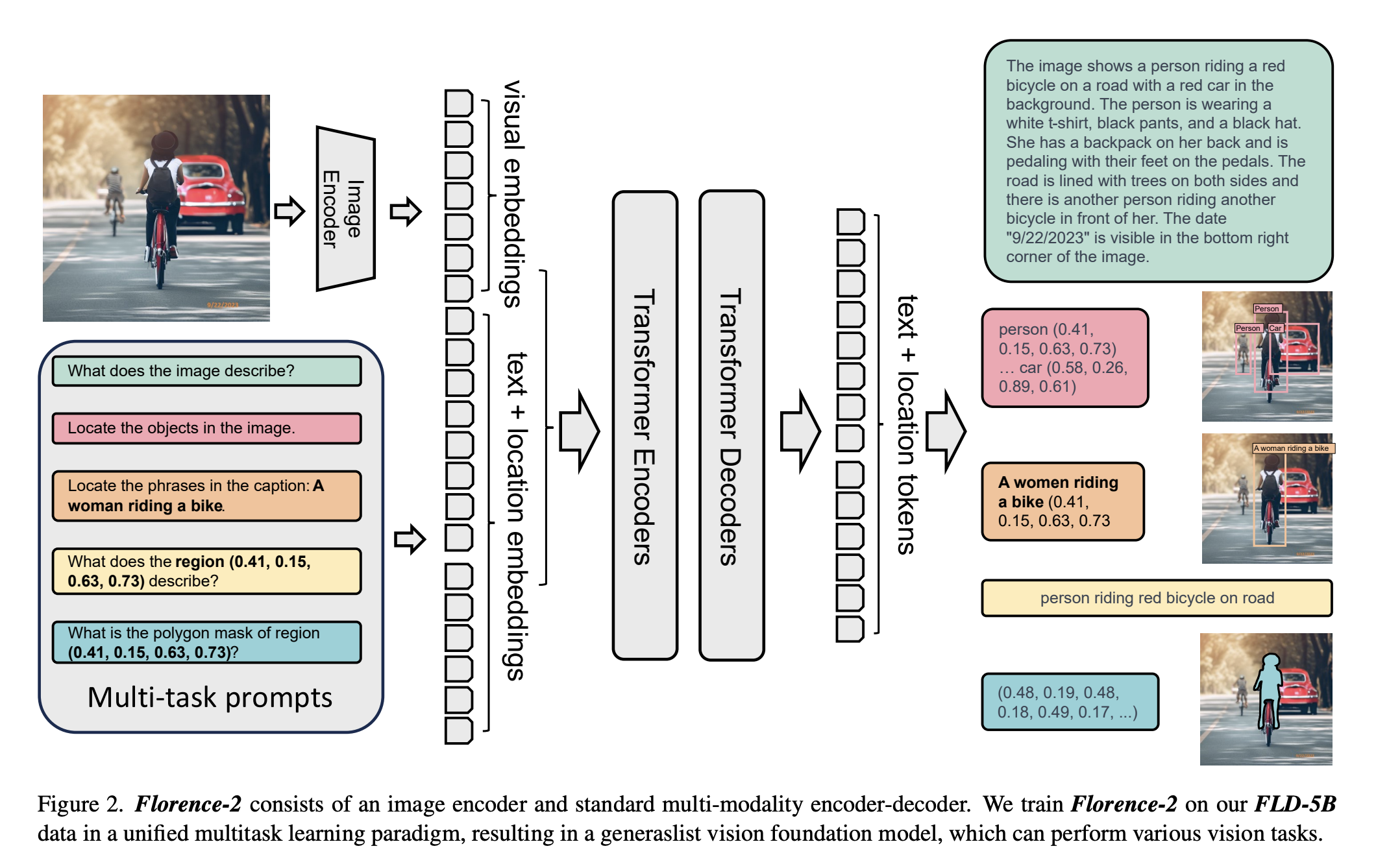

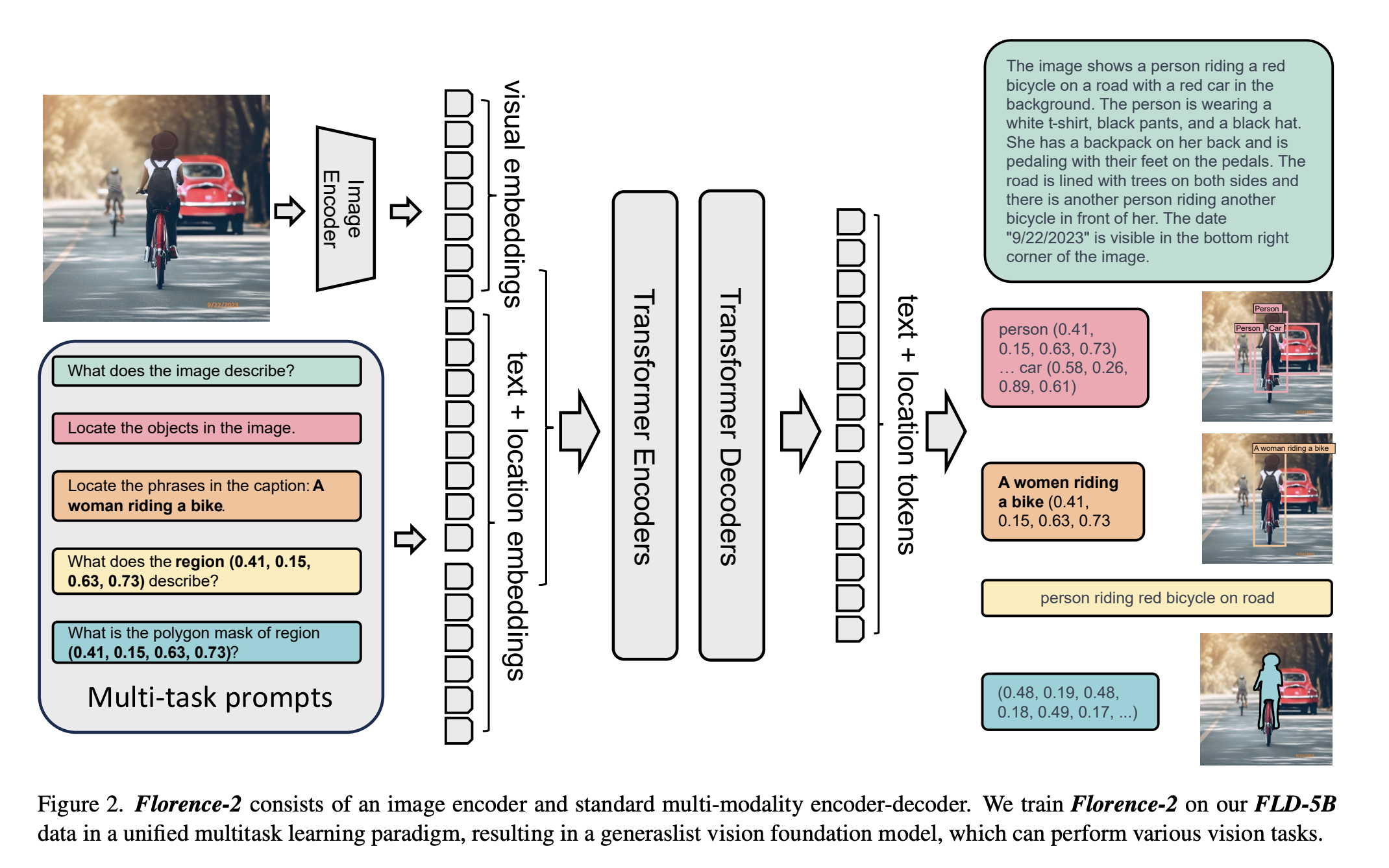

As discussed in the section above, one of the most important aspects of a good VLM is how it brings image embeddings into the text embedding space. The architectures are usually of three types:

- Two-Tower VLM, where the only connection between the vision and text networks is at the final layer. CLIP is the classic example of this.

- Two-Leg VLM, where a single LLM takes text tokens along with tokens from a vision encoder.

- Unified VLM, where the backbone attends to visual and textual inputs at the same time.

Keep in mind that there is no hard taxonomy and there will be exceptions. With that said, the following are the common approaches with which VLMs have shown promise:

- Shallow/Early Fusion

- Late Fusion

- Deep Fusion

Let's discuss each of them below.

Shallow/Early fusion

A common feature of the architectures in this section is that the connection between vision inputs and language happens early in the process. Typically, this means the vision inputs are minimally transformed before entering the text domain, hence the term "shallow". Once a well-aligned vision encoder is established, it can effectively handle multiple image inputs, a capability that even sophisticated models often struggle to achieve!

Let's cover the two types of early fusion methods below.

Vision Encoder

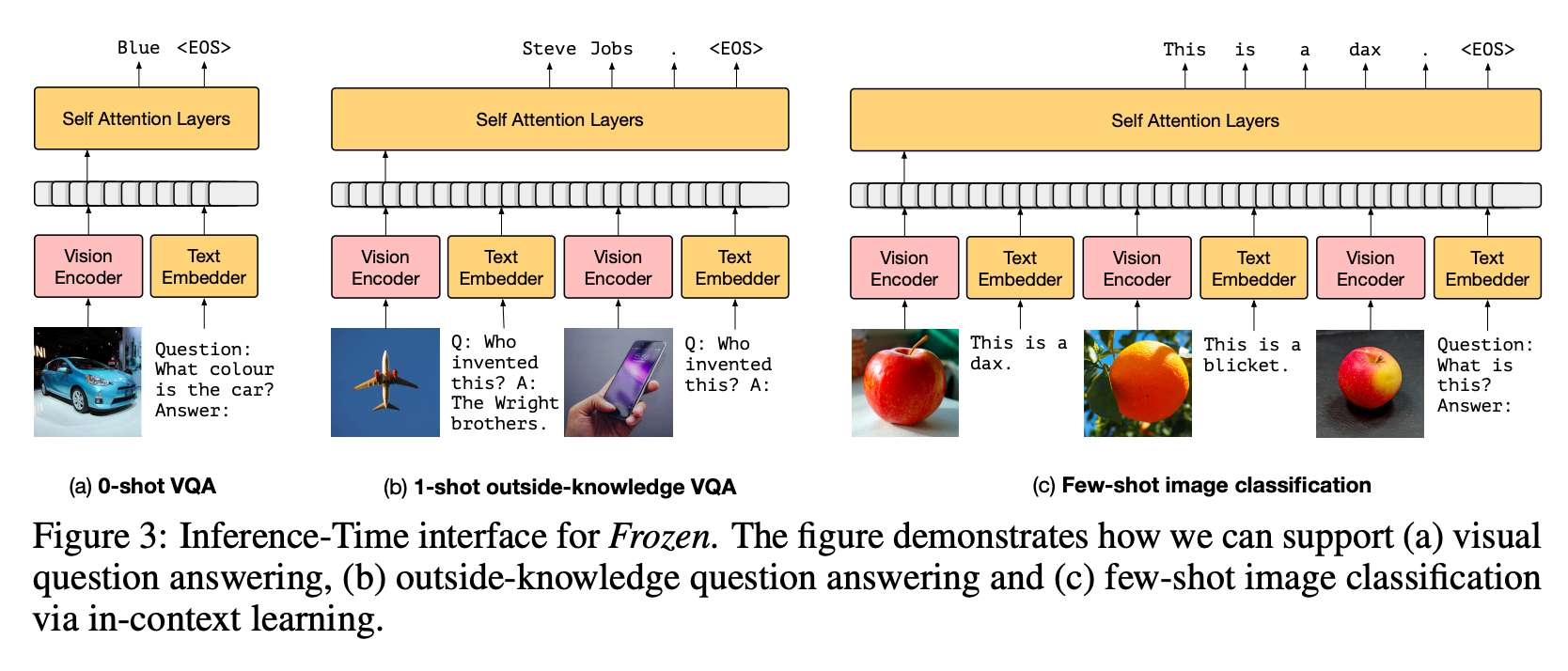

This is one of the simplest approaches. Make sure your vision encoder's outputs are compatible with the LLM's inputs, then train only the vision encoder while keeping the LLM frozen.

The architecture is essentially an LLM (specifically a decoder-only transformer) with a branch for an image encoder. It's quick to code and easy to understand, and it usually doesn't require writing any new layers.

These architectures use the same loss as LLMs (i.e., the usual next-token prediction).

Frozen is an example of such an implementation. In addition to training the vision encoder, the method employs prefix-tuning, which involves attaching a static token to all visual inputs. This setup lets the vision encoder adjust itself based on the LLM's response to the prefix.
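A minimal, generic sketch of how an early-fusion training example can be assembled follows. It assumes the vision encoder already emits vectors of the LLM's embedding width. The image embeddings are placed in front of the text-token embeddings, and the usual next-token loss is computed only at positions whose target is a text token. The names `build_multimodal_example`, `embed_table` and `IGNORE_INDEX` are hypothetical. The -100 value mirrors a common PyTorch convention but is just a plain constant here, and this is not Frozen's exact recipe.

```python
import math

IGNORE_INDEX = -100  # label meaning "skip this position in the loss"


def build_multimodal_example(image_embeds, text_token_ids, embed_table):
    """Prefix image embeddings to text embeddings and build next-token labels.

    image_embeds:   vectors from the (trainable) vision encoder, already LLM-sized
    text_token_ids: token ids of the caption or prompt
    embed_table:    the frozen LLM's token-embedding lookup (dict or list)
    """
    text_embeds = [embed_table[t] for t in text_token_ids]
    inputs = list(image_embeds) + text_embeds
    # Position p is trained to predict the token at position p + 1. Image
    # positions have no vocabulary token of their own, but the last image
    # position does predict the first text token.
    targets = [IGNORE_INDEX] * len(image_embeds) + list(text_token_ids)
    labels = targets[1:] + [IGNORE_INDEX]
    return inputs, labels


def next_token_loss(logits, labels):
    """Mean cross-entropy over positions whose label is not IGNORE_INDEX."""
    total, count = 0.0, 0
    for row, label in zip(logits, labels):
        if label == IGNORE_INDEX:
            continue
        m = max(row)
        total += m + math.log(sum(math.exp(z - m) for z in row)) - row[label]
        count += 1
    return total / count
```

Because the LLM stays frozen, the gradient of this loss only updates the vision encoder. The encoder is pushed to produce embeddings the LLM can "read" as a prefix.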

Vision Projector/Adapter

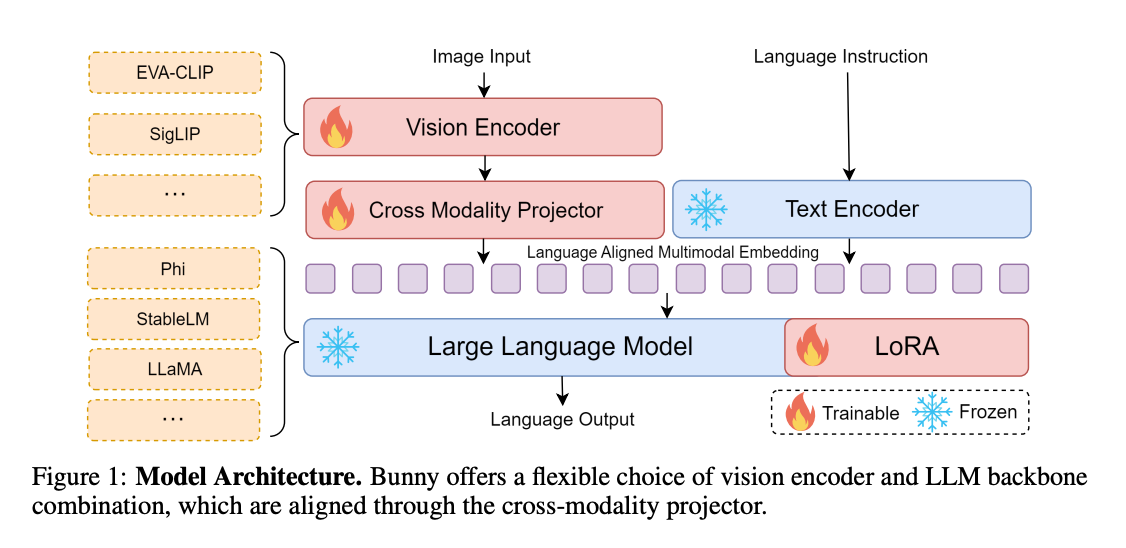

The problem with using just a vision encoder is that it is difficult to ensure the vision encoder's outputs are compatible with the LLM, which limits the number of possible (vision, LLM) pairs. It is easier to place an intermediate layer between the vision and LLM networks that makes the vision output compatible with the LLM. With this projector inserted between them, any vision embeddings can be aligned for any LLM's comprehension. This architecture offers comparable or greater flexibility than training a vision encoder. One now has the option to freeze both the vision and LLM networks, which also speeds up training because adapters are typically compact.

The projectors can be as simple as an MLP, i.e., several linear layers interleaved with non-linear activation functions. Some such models are:

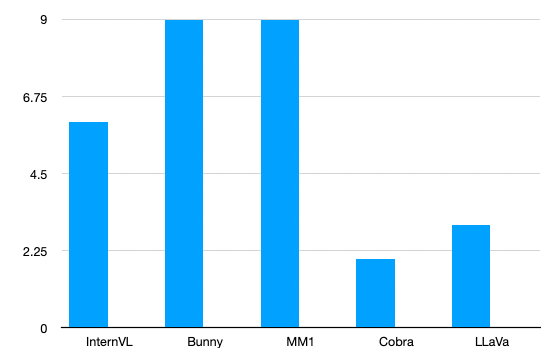

- LLaVA family of models – A deceptively simple architecture that gained prominence for its emphasis on training with high-quality synthetic data.

- Bunny – An architecture that supports multiple vision and language backbones. It uses LoRA to train the LLM component as well.

- MM1 uses mixture-of-experts (MoE) models: a 3B-MoE with 64 experts that replaces a dense layer with a sparse layer every 2 layers, and a 7B-MoE with 32 experts that replaces a dense layer with a sparse layer every 4 layers. By leveraging MoEs and curating its datasets, MM1 produces a strong family of models that are both very efficient and accurate.

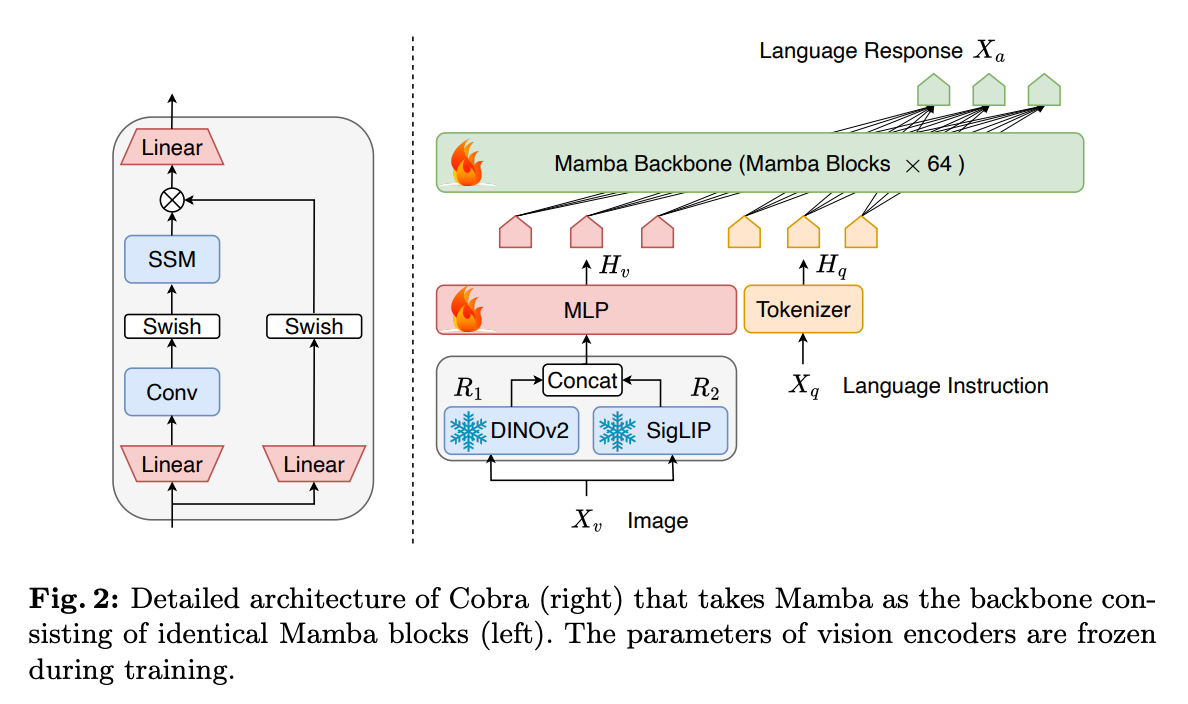

- Cobra – Uses the Mamba architecture instead of the usual transformers.
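Here is a dependency-free sketch of the simplest projector mentioned above: a two-layer MLP (Linear, GELU, Linear) that maps each vision-encoder patch embedding to the LLM's embedding width. LLaVA-1.5 popularized a two-layer MLP projector of this shape. The dimensions, the uniform initialization and the class names here are illustrative assumptions, not any specific model's configuration.

```python
import math
import random


def gelu(x):
    """GELU activation (tanh approximation)."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


class Linear:
    """A plain fully connected layer: y = W x + b."""

    def __init__(self, in_dim, out_dim, rng):
        scale = 1.0 / math.sqrt(in_dim)
        self.weight = [[rng.uniform(-scale, scale) for _ in range(in_dim)] for _ in range(out_dim)]
        self.bias = [0.0] * out_dim

    def __call__(self, x):
        return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(self.weight, self.bias)]


class MLPProjector:
    """Maps vision embeddings (vision_dim) into the LLM embedding space (llm_dim)."""

    def __init__(self, vision_dim, llm_dim, seed=0):
        rng = random.Random(seed)
        self.fc1 = Linear(vision_dim, llm_dim, rng)
        self.fc2 = Linear(llm_dim, llm_dim, rng)

    def __call__(self, patch_embeddings):
        # Each patch embedding becomes one "visual token" the LLM can consume.
        return [self.fc2([gelu(h) for h in self.fc1(p)]) for p in patch_embeddings]


projector = MLPProjector(vision_dim=4, llm_dim=6)
visual_tokens = projector([[0.1, 0.2, 0.3, 0.4]] * 3)  # 3 patches -> 3 tokens of width 6
```

In the setup described above, only these projector weights would be trained at first, with both the vision encoder and the LLM frozen.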

Projectors can also be specialized or complex, as the following architectures show:

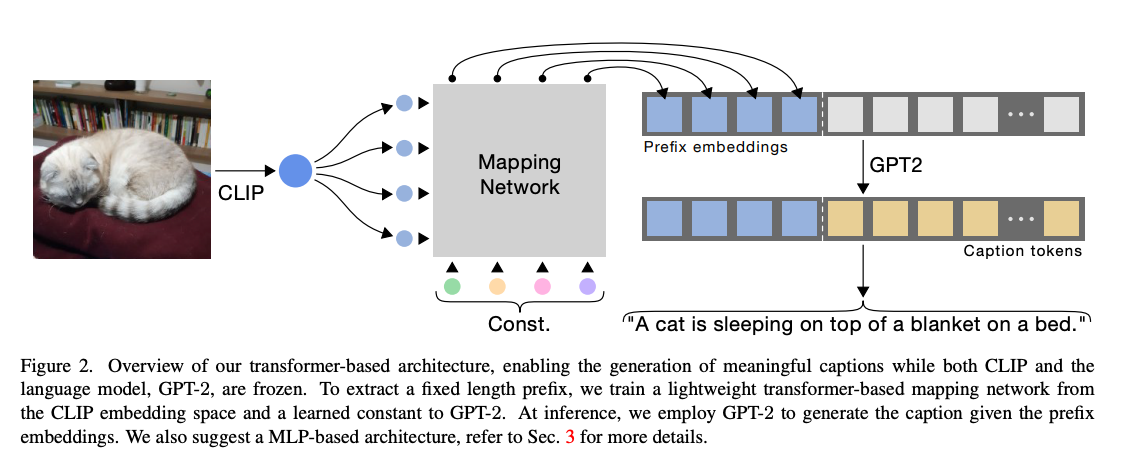

- ClipCap – Here the "vision encoder" is essentially a combination of CLIP's vision encoder and a transformer encoder.

- BLIP-2 uses a Q-Former as its adapter for stronger grounding of content with respect to images.

- MobileVLM v2 uses a lightweight, point-wise-convolution-based projector and MobileLLaMA, a small language model (SLM), instead of an LLM, focusing on prediction speed.
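Query-based adapters such as BLIP-2's Q-Former share one core idea: a small, fixed set of learned query vectors cross-attends to the image features, so the LLM always receives the same number of visual tokens no matter how many patches the image has. BLIP-2 uses 32 such queries. Below is a single-head sketch of only that cross-attention step, with no learned key/value projections. A real Q-Former stacks many transformer layers with self-attention, projections and multiple heads, none of which are shown, and the function names here are illustrative.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, image_features):
    """Single-head scaled dot-product cross-attention.

    queries:        num_queries x d learned vectors (a fixed count)
    image_features: num_patches x d outputs of a frozen vision encoder
    returns:        num_queries x d, one summary vector per query
    """
    d = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in image_features]
        weights = softmax(scores)
        # Weighted average of the image features (keys double as values here).
        outputs.append([sum(w * f[i] for w, f in zip(weights, image_features)) for i in range(d)])
    return outputs
```

Whether the image yields 5 patches or 500, the output has exactly `len(queries)` rows. This is what keeps the LLM's input length under control.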

One can use multiple projectors as well:

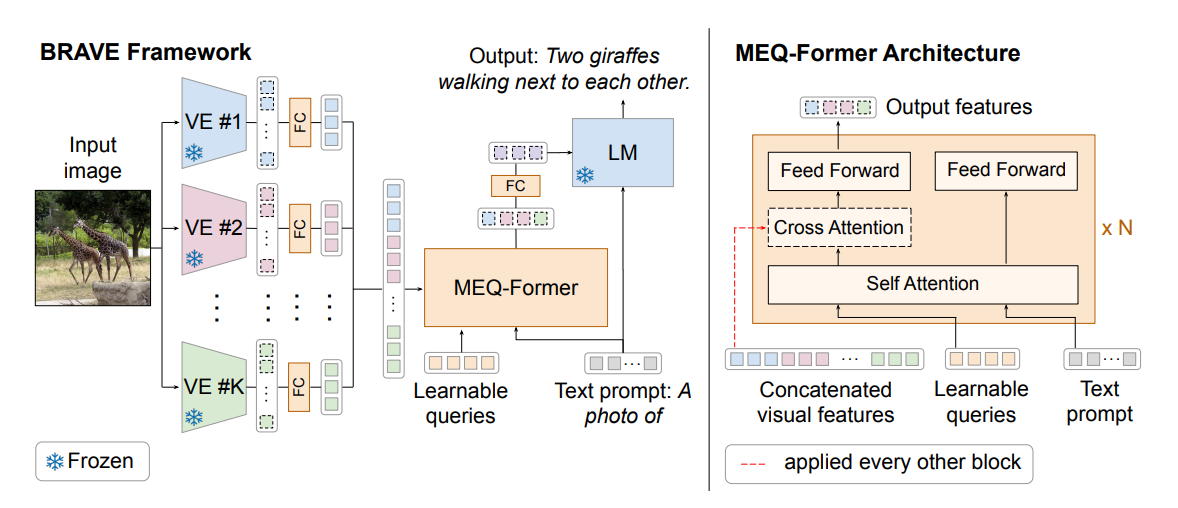

- BRAVE uses up to 5 vision encoders and an adapter called MEQ-Former that concatenates all the vision inputs into one before sending them to the VLM.

- Honeybee – Proposes two specialized projectors, called C-Abstractor and D-Abstractor, that focus on preserving locality and on being able to output a flexible number of tokens.

- DeepSeek-VL also uses multiple encoders to preserve both high-level and low-level details in the image. However, in this case the LLM is also trained, leading to deep fusion, which we will cover in a later section.

Late Fusion

These architectures keep the vision and text models completely disjoint. The only place where text and vision embeddings come together is the loss computation, and this loss is typically a contrastive loss.

- CLIP is the classic example: text and images are encoded separately and compared via a contrastive loss that adjusts the encoders.

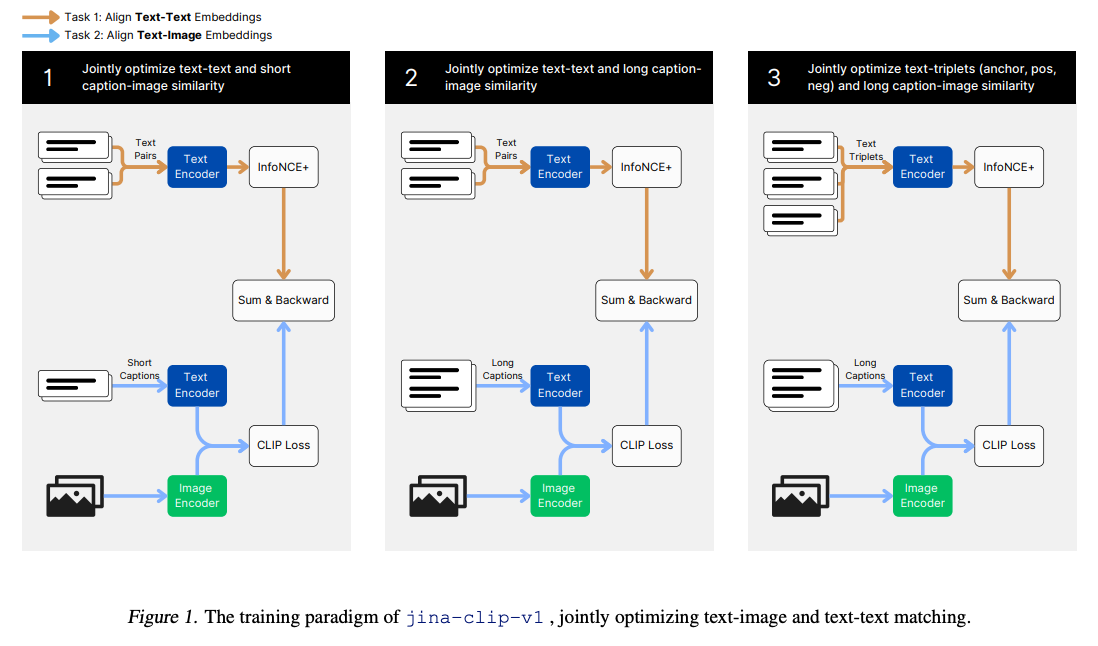

- Jina CLIP puts a twist on the CLIP architecture by jointly optimizing the CLIP loss (i.e., image-text contrast) along with a text-text contrast, where text pairs are deemed similar if and only if they have similar semantic meaning. There's a lesson to be learned here: use additional objective functions to make the alignment more accurate.

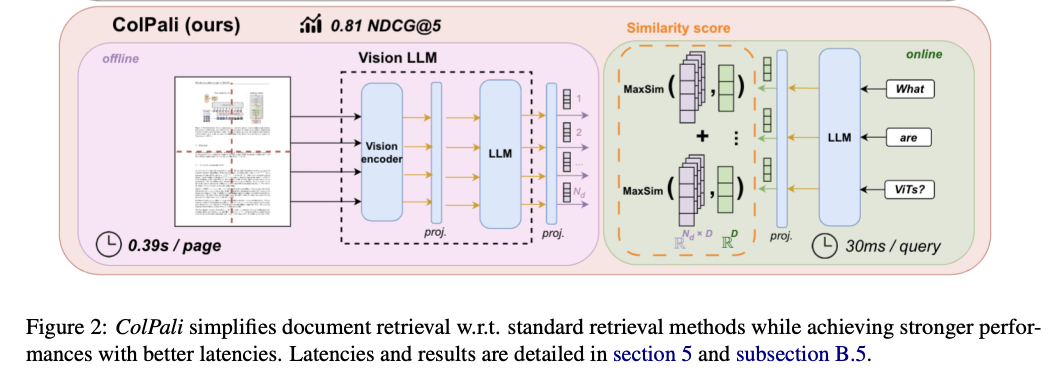

- ColPali is another example of late fusion, specifically trained for document retrieval. However, it differs slightly from CLIP in that it uses a vision encoder combined with a large language model (LLM) for vision embeddings, while relying solely on the LLM for text embeddings.

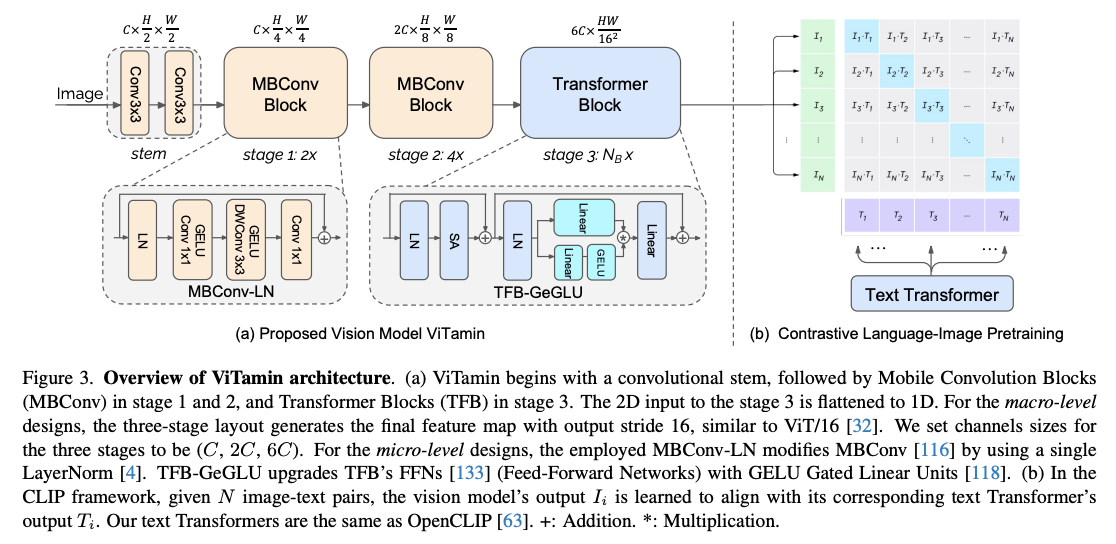

- ViTamin trains a vision tower that concatenates convolution and transformer blocks to get the best of both worlds.
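In late-fusion models the two sides only meet when scoring, so retrieval reduces to comparing embeddings. CLIP compares one image vector with one text vector. ColPali instead keeps one vector per query token and one per page patch, and scores them ColBERT-style ("late interaction"): each query token takes its maximum similarity over all patches of the page, and these maxima are summed. The sketch below shows only that scoring rule on toy vectors. The function names and inputs are illustrative.

```python
def maxsim_score(query_token_embs, page_patch_embs):
    """ColBERT-style late-interaction score between one query and one document page."""
    return sum(
        max(sum(a * b for a, b in zip(q, p)) for p in page_patch_embs)
        for q in query_token_embs
    )


def rank_pages(query_token_embs, pages):
    """Return page indices sorted from most to least relevant."""
    scores = [maxsim_score(query_token_embs, page) for page in pages]
    return sorted(range(len(pages)), key=lambda i: scores[i], reverse=True)
```

Because page embeddings do not depend on the query, they can be computed once and indexed. Only this cheap max-and-sum step runs at query time.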

Deep Fusion

These architectures typically attend to image features in the deeper layers of the network, allowing richer cross-modal knowledge transfer. Training usually spans all the modalities. These models generally take longer to train but may offer better efficiency and accuracy. Often the architectures are similar to Two-Leg VLMs with the LLM unfrozen.

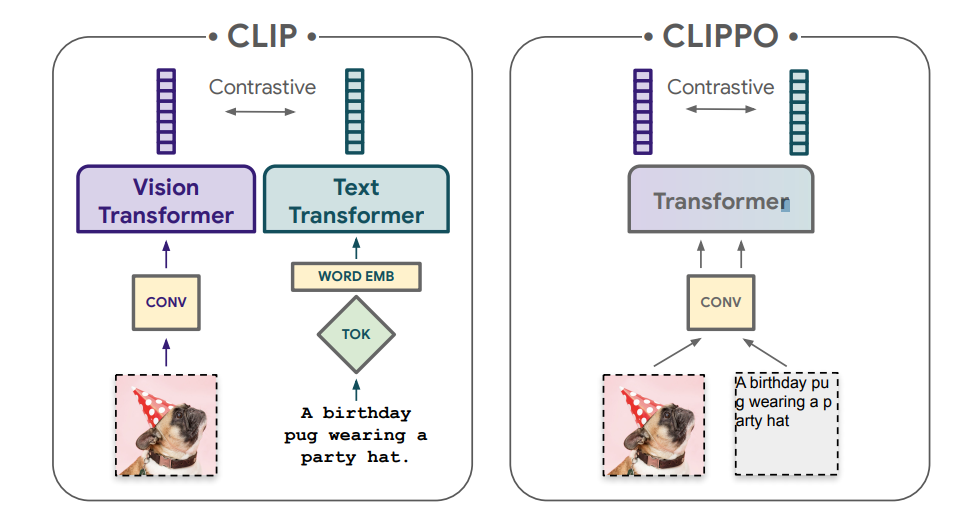

- CLIPPO is a variation of CLIP that uses a single encoder for both text and images.

- Single-tower Transformer trains a single transformer from scratch, enabling multiple VLM applications at once.

- DINO uses a localization loss together with a cross-modality transformer to perform zero-shot object detection, i.e., to predict classes that were not present in training.

- KOSMOS-2 treats bounding boxes as inputs/outputs alongside text and image tokens, baking object detection into the language model itself.

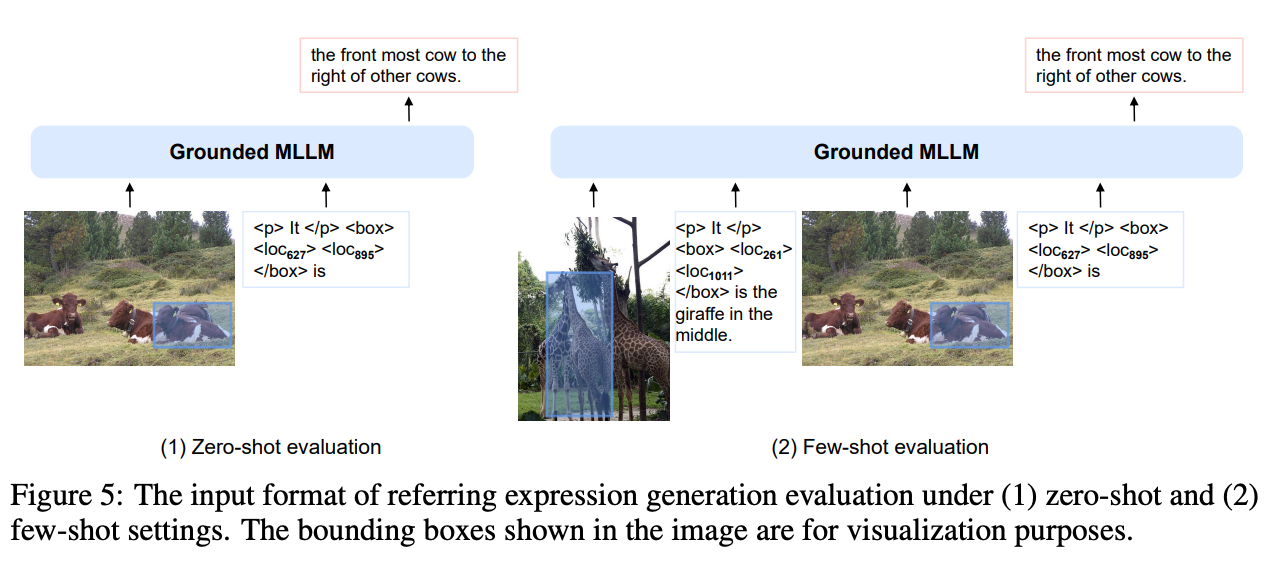

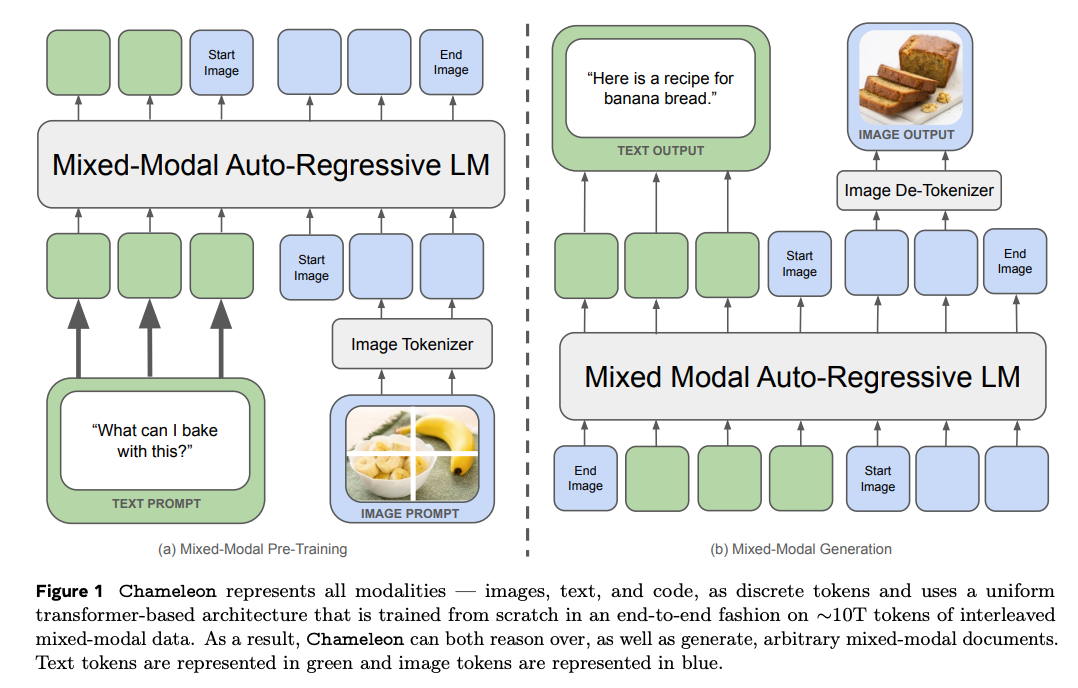

- Chameleon treats images natively as tokens by using a quantizer, leading to a text-vision agnostic architecture.

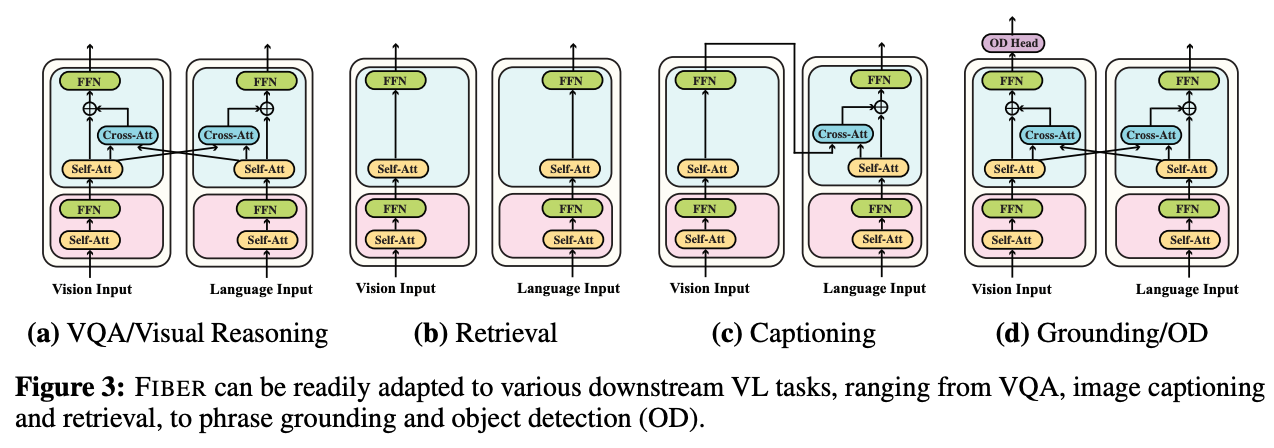

- FIBER uses dynamic cross-attention modules, switching them on or off to perform different tasks.

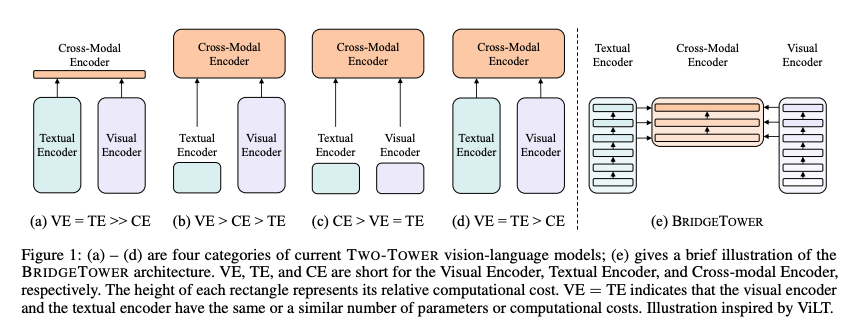

- BridgeTower creates a separate cross-modal encoder with a "bridge layer" that cross-attends both text-on-vision and vision-on-text tokens to capture a richer interaction.

- Flamingo – The vision tokens are computed with a modified version of ResNet and a specialized layer called the Perceiver Resampler, which is similar to DETR. It then densely fuses vision with text by cross-attending vision tokens with language tokens, using a Chinchilla LLM as the frozen backbone.

- MoE-LLaVA uses the mixture-of-experts technique to handle both vision and text tokens. It trains the model in two stages: first only the FFNs are trained, and later the LLM.
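MM1 and MoE-LLaVA both rely on sparse mixture-of-experts layers. The sketch below shows the generic top-k routing idea, assuming a linear router and toy experts. A router scores every expert for each token, only the k best experts are actually run, and their outputs are mixed with router weights renormalized over the chosen experts. The value k=2 and all names are illustrative assumptions. Neither paper's exact routing, load-balancing loss or expert shapes are reproduced.

```python
import math


def top_k_moe(token, router_weights, experts, k=2):
    """Route one token vector through the k highest-scoring experts.

    router_weights: one weight vector per expert (a linear router, no bias)
    experts:        list of callables, each mapping a vector to a vector
    """
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    chosen = sorted(range(len(experts)), key=lambda e: logits[e], reverse=True)[:k]
    # Softmax over the selected experts only, so their gates sum to 1.
    m = max(logits[e] for e in chosen)
    exps = {e: math.exp(logits[e] - m) for e in chosen}
    total = sum(exps.values())
    output = [0.0] * len(token)
    for e in chosen:
        gate = exps[e] / total
        expert_out = experts[e](token)  # only the k chosen experts are evaluated
        output = [o + gate * y for o, y in zip(output, expert_out)]
    return output, chosen
```

The parameter count grows with the number of experts, but the compute per token only grows with k. This is the efficiency argument behind using MoE layers in VLMs.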

VLM Training

Training a VLM is a complex process that can involve multiple objectives, each tailored to improve performance on a variety of tasks. Below, we'll explore:

- Objectives – the common objectives used during training and pre-training of VLMs, and

- Training Best Practices – best practices such as pre-training, fine-tuning, and instruction tuning, which help optimize these models for real-world applications.

Objectives

There is a rich interplay between images and text. Given the variety of architectures and tasks in VLMs, there is no single way to train these models. Let's cover the common objectives used for training/pre-training VLMs:

- Contrastive Loss: This aims to adjust the embeddings so that the distance between matching pairs is minimized while the distance between non-matching pairs is maximized. It's particularly useful because matching pairs are easy to obtain. On top of that, a batch of N matching pairs provides N(N-1) non-matching pairs for free, so the number of negative samples available for training grows quadratically with batch size.

- CLIP and all its variations are classic examples of training with a contrastive loss, where the match happens between the embeddings of (image, text) pairs. InternVL, BLIP-2 and SigLIP are other notable examples.

- SLIP demonstrates that adding an image-to-image contrastive (self-supervised) loss for the vision encoder alongside CLIP's image-text loss helps a great deal in improving overall performance.

- Florence modifies the contrastive loss by including the image label and a hash of the text, calling it Unified-CL.

- ColPali uses two contrastive losses, one for image-text pairs and one for text-text pairs.

- Generative Loss – This category of losses treats the VLM as a generator and is usually used for zero-shot and language generation tasks.

- Masked Language Modeling – You train a text encoder to predict intermediate tokens given the surrounding context. FIBER is just one example among hundreds.

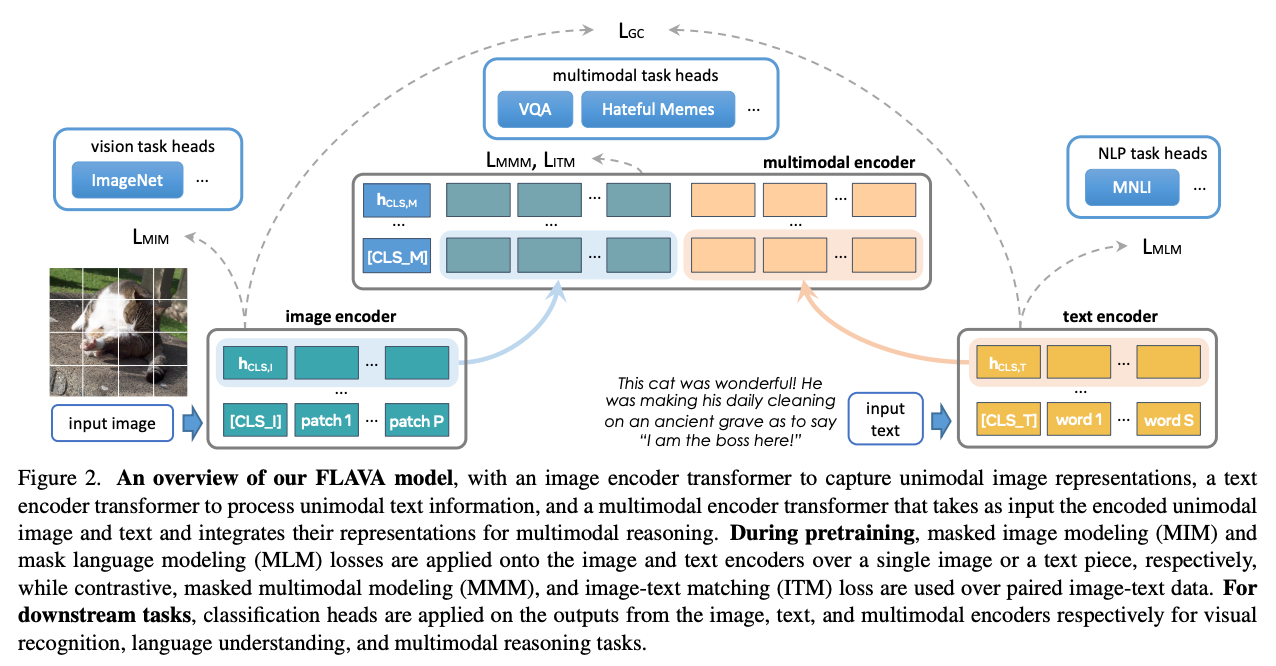

- Masked Image Modeling – You train a transformer to predict image tokens by masking them in the input, forcing the model to learn with limited data. LayoutLM, SegCLIP's use of MAE and FLAVA's use of BEiT are examples of this loss.

- Masked Image+Text Modeling – As the name suggests, one can use a two-leg architecture to mask image and text tokens simultaneously, so that the network learns as many cross-domain interactions as possible with limited data. FLAVA is one such example.

- Niche Cross-Modality Alignments – Note that one can always come up with good objective functions, given the richness of the landscape. For example:

- BLIP-2 created an Image-grounded Text Generation loss.

- LayoutLM uses something called Word-Patch Alignment to roughly identify where a word appears in the document.
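The masked-modeling objectives above share one data-preparation step: hide a random subset of positions and ask the model to reconstruct only those. Here is a minimal sketch, assuming a generic `MASK` placeholder. The same function works on text token ids (masked language modeling) or on image patch indices (masked image modeling). The 15% and 75% ratios in the usage lines are the values popularized by BERT and MAE respectively. They are typical defaults, not what every model above uses, and BERT's extra random-replacement trick is omitted.

```python
import random

MASK = "[MASK]"  # hypothetical placeholder for a hidden text token or image patch
IGNORE = None    # target value for positions the loss should skip


def mask_sequence(items, mask_ratio, seed=0):
    """Replace a random mask_ratio of positions with MASK.

    Returns the corrupted sequence and per-position targets: the original item
    at masked positions and IGNORE elsewhere, so the loss only covers what was hidden.
    """
    rng = random.Random(seed)
    n_mask = max(1, round(len(items) * mask_ratio))
    masked_positions = set(rng.sample(range(len(items)), n_mask))
    corrupted = [MASK if i in masked_positions else x for i, x in enumerate(items)]
    targets = [x if i in masked_positions else IGNORE for i, x in enumerate(items)]
    return corrupted, targets


tokens = ["a", "dog", "on", "a", "red", "sofa"]
mlm_input, mlm_targets = mask_sequence(tokens, mask_ratio=0.15)   # MLM-style
patches = list(range(16))                                         # 4x4 grid of patch ids
mim_input, mim_targets = mask_sequence(patches, mask_ratio=0.75)  # MAE-style
```

Masked image+text modeling, as in FLAVA, would apply this to both sequences at once before feeding them to the joint model.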

Training Best Practices

Training VLMs effectively requires more than just choosing the right objectives; it also involves following established best practices to ensure optimal performance. In this section, we'll dive into key practices such as pre-training on large datasets, fine-tuning for specialized tasks, instruction tuning for chatbot capabilities, and using techniques like LoRA to train large language models efficiently. We'll also cover methods for handling complex visual inputs, such as multiple resolutions and adaptive image cropping.

Pre-training

Here, only the adapter/projector layer is trained, with as much data as possible (typically millions of image-text pairs). The goal is to align the image encoder with the text decoder, and the focus is very much on the quantity of data. Typically, this task is unsupervised in nature and uses either a contrastive loss or the next-token prediction loss, while adjusting the input text prompt to make sure the language model understands the image context well.

Fine-tuning

Depending on the architecture, some or all of the adapter, text, and vision components are unfrozen after step 1 and trained. Training is much slower because of the large number of trainable parameters. For this reason, the number of data points is reduced to a fraction of the data used in the first step, and every data point is ensured to be of the highest quality.

Instruction Tuning

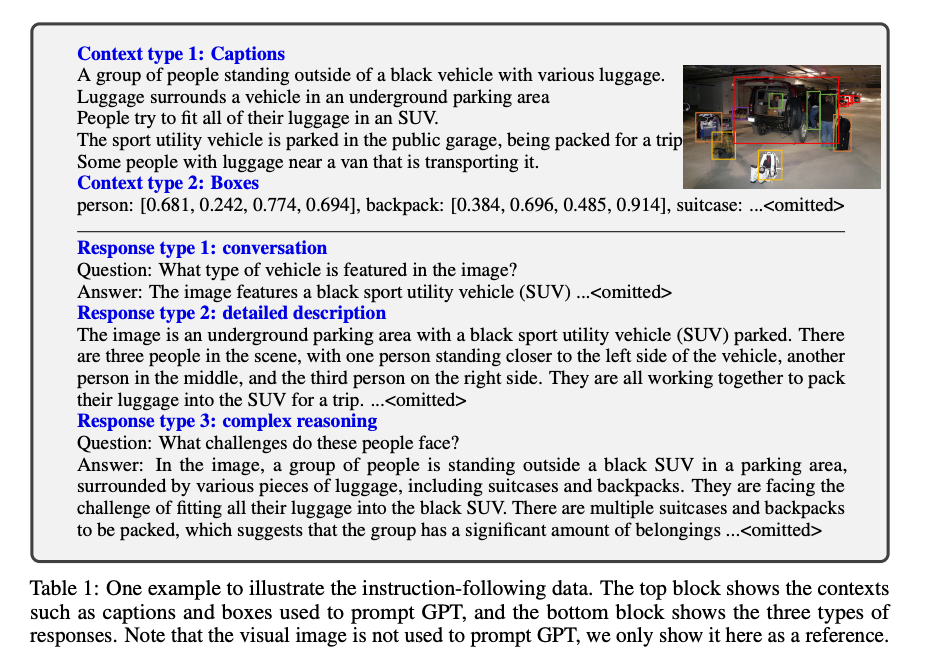

This can be the second or third training step, depending on the implementation. Here the data is curated into the form of instructions, specifically to produce a model that can be used as a chatbot. Usually, the available data is converted into instruction format using an LLM. LLaVA and Vision-Flan are a couple of examples.

Using LoRAs

As discussed, the second stage of training might involve unfreezing the LLM. This is very costly, since LLMs are huge. An efficient alternative that avoids this drawback is Low-Rank Adaptation (LoRA). LoRA keeps the LLM's weights frozen and adds small trainable low-rank matrices alongside them, so that while the LLM is adjusted as a whole, only a small fraction of its parameters is actually trained.
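Here is a minimal sketch of the LoRA idea for a single weight matrix, written without any deep-learning library. The frozen weight W is left untouched, and a trainable low-rank update B·A of rank r is added alongside it, scaled by alpha/r. B starts at zero, so at the beginning of training the layer behaves exactly like the original. The class name and the rank and alpha values are illustrative. Libraries such as Hugging Face PEFT wrap this pattern for real models.

```python
import random


def matvec(matrix, vec):
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x), with W frozen and only A, B trainable."""

    def __init__(self, frozen_weight, rank=4, alpha=8, seed=0):
        rng = random.Random(seed)
        out_dim, in_dim = len(frozen_weight), len(frozen_weight[0])
        self.weight = frozen_weight  # frozen: out_dim x in_dim, never updated
        self.lora_a = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(rank)]  # r x in_dim
        self.lora_b = [[0.0] * rank for _ in range(out_dim)]  # out_dim x r, zero init
        self.scaling = alpha / rank

    def __call__(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.lora_b, matvec(self.lora_a, x))
        return [b + self.scaling * u for b, u in zip(base, update)]

    def trainable_parameters(self):
        return len(self.lora_a) * len(self.lora_a[0]) + len(self.lora_b) * len(self.lora_b[0])
```

For a 4096 x 4096 projection, rank 8 trains 2 x 8 x 4096 = 65,536 parameters instead of 16,777,216, about 0.4% of the full matrix.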

Multiple Resolutions

VLMs struggle when input images contain a lot of dense information, as in object/crowd counting or word recognition in OCR. Here are some papers that try to address this:

- The simplest way is to resize the image to multiple resolutions, take all the crops from each resolution, feed them to the vision encoder, and pass the results to the LLM as tokens. This was proposed in Scaling on Scales (S2) and is used by the Bunny family of models, one of the top performers across all tasks.

- LLaVA-UHD tries to find the best way to slice the image into grids before feeding it to the vision encoder.
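Here is a sketch of the crop-enumeration step behind the multi-scale approach. It assumes a vision encoder with a fixed 224x224 input and scales of 1x, 2x and 3x: the image is resized to each scale, and each resized version is cut into encoder-sized tiles. How the resulting features are then merged (pooled, concatenated, or passed as extra tokens) differs between methods and is not shown. The function name and values are illustrative.

```python
def multiscale_crop_boxes(base_size=224, scales=(1, 2, 3)):
    """List (scale, left, top, right, bottom) boxes for every encoder-sized tile.

    At scale s the image is assumed to be resized to a square of side
    s * base_size and split into an s x s grid, so every tile matches the
    encoder's input size.
    """
    boxes = []
    for s in scales:
        for row in range(s):
            for col in range(s):
                left, top = col * base_size, row * base_size
                boxes.append((s, left, top, left + base_size, top + base_size))
    return boxes


boxes = multiscale_crop_boxes()
print(len(boxes))  # 14 encoder passes: 1 + 4 + 9
```

The cost grows with the square of the largest scale. This is one reason approaches like LLaVA-UHD try to choose the slicing more carefully.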

Training Datasets

Now that we know the best practices, let's look at some of the datasets available for both training and fine-tuning.

There are broadly two categories of datasets for VLMs. The first category focuses on quantity and exists primarily to make a good amount of unsupervised pre-training possible. The second category emphasizes specializations that enhance niche or application-specific capabilities, such as being domain-specific, instruction-oriented, or enriched with additional modalities like bounding boxes.

Below are some of these datasets, along with the qualities that have helped elevate VLMs to where they are.

| Dataset | Number of Image-Text Pairs | Description |
|---|---|---|
| WebLI (2022) | 12B | One of the largest datasets, built on web-crawled images in 109 languages. Unfortunately, it is not a public dataset |
| LAION-5B (2022) | 5.5B | A collection of image and alt-text pairs from the internet. One of the largest publicly available datasets, used by many implementations to pre-train VLMs from scratch |
| FLD-5B (2023) | 5B | Dense captions, OCR and grounding information (both segmentation masks and polygons) make it ideal for building a unified model for all applications via multitask learning, and help with strong generalization |
| COYO (2022) | 700M | Another giant dataset that filters out uninformative pairs through an image-level and text-level filtering process |
| LAION-COCO (2022) | 600M | A subset of LAION-5B with generated synthetic captions, since alt-texts are not always accurate |
| OBELICS (2023) | 141M | Interleaved format, i.e., web documents in which images and text alternate. Best for instruction pre-training and fine-tuning |
| MMC4 (Interleaved) (2023) | 101M | Similar interleaved format to the above. Uses a linear assignment algorithm with CLIP features to place images into longer bodies of text |
| Yahoo Flickr Creative Commons 100 Million (YFCC100M) (2016) | 100M | One of the earliest large-scale datasets |
| Wikipedia-based Image Text (2021) | 37M | Unique for its association of encyclopedic knowledge with images |
| Conceptual Captions (CC12M) (2021) | 12M | Focuses on a larger and more diverse set of concepts, as opposed to other datasets that often cover real-world events/objects |
| RedCaps (2021) | 12M | Collected from Reddit, this dataset's captions reflect real-world, user-generated content across various categories, adding authenticity and variability compared to other datasets |
| Visual Genome (2017) | 5.4M | Has detailed annotations, including object detection, relationships, and attributes within scenes, making it ideal for scene understanding and dense captioning tasks |
| Conceptual Captions (CC3M) (2018) | 3.3M | Not a subset of CC12M; more appropriate for fine-tuning |
| Bunny-pretrain-LAION-2M (2024) | 2M | Emphasizes the quality of visual-text alignment |
| ShareGPT4V-PT (2024) | 1.2M | Derived from the ShareGPT platform; the captions were generated by a model that was trained on GPT-4V captions |
| SBU Captions (2011) | 1M | Sourced from Flickr, this dataset is useful for casual, everyday image-text relationships |
| COCO Captions (2016) | 1M | Five independent human-generated captions are provided for each image |
| Localized Narratives (2020) | 870k | Contains localized, object-level descriptions, making it suitable for tasks like image grounding |
| ALLaVA-Caption-4V (2024) | 715k | Captions were generated by GPT-4V; focuses on image captioning and visual reasoning |
| LLaVA-1.5-PT (2024) | 558k | Yet another dataset generated by calling GPT-4 on images. The focus is on quality prompts for visual reasoning and dense captioning |
| DocVQA (2021) | 50k | A document-based VQA dataset where the questions focus on document content, making it important for information extraction tasks in the financial, legal, or administrative domains |

Evaluation Benchmarks

In this section, let's explore key benchmarks for evaluating vision-language models (VLMs) across a range of tasks. From visual question answering to document-specific challenges, these benchmarks assess models' abilities in perception, reasoning, and information extraction. We'll highlight popular datasets like MMMU, MME, and MathVista, which are designed to prevent biases and ensure comprehensive testing.

Visual Question Answering

MMMU – 11.5k questions – 2024

The Massive Multi-discipline Multimodal Understanding and Reasoning (MMMU) benchmark is one of the most popular benchmarks for evaluating VLMs. It covers a wide variety of domains to ensure that the VLMs that score well on it generalize.

Perception, knowledge, and reasoning are the three skills this benchmark assesses. The evaluation is carried out in a zero-shot setting: models must generate accurate answers without fine-tuning or few-shot demonstrations on the benchmark.

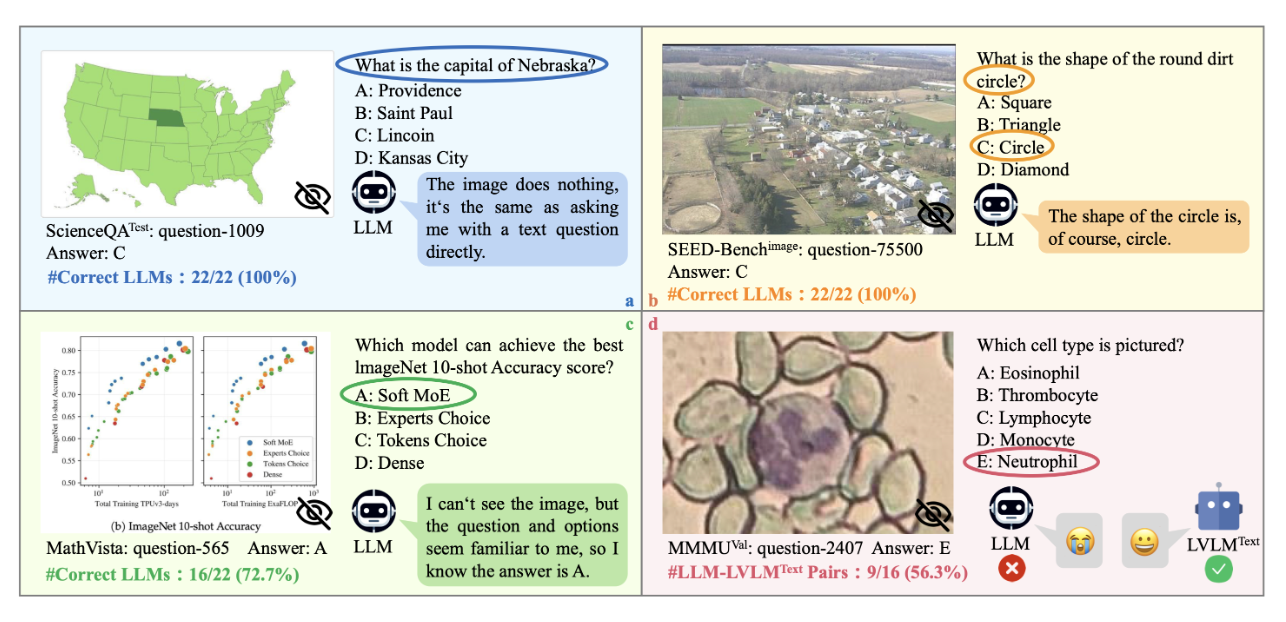

MMMU-Pro is a new version of MMMU that improves on it by adding more challenging questions and filtering out data points that could have been solved from the text input alone.

MME – < 1000 images – 2024

This dataset focuses on quality by handpicking images and creating its own annotations. None of the examples are available anywhere on the internet, which ensures that VLMs have not accidentally been trained on any of them.

There are 14 subtasks in the benchmark, with around 50 images each. Every task has yes/no answers only. Example tasks include existence of objects, recognition of famous objects/people, and text translation. Every image has 2 questions: one framed positively, expected to get a "YES" from the VLM, and one framed negatively, expected to get a "NO". Every subtask is its own benchmark. There are two sub-aggregate benchmarks, one for cognition and one for perception, which are the sums of the respective subtask group's accuracies. The final benchmark is the sum of all the benchmarks.

An example set of questions for the Cognition (reasoning) task in the dataset
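The scoring scheme above can be sketched as follows. One detail the description simplifies: for each subtask, the MME paper computes a per-question accuracy and a stricter per-image "accuracy+" (both questions about an image must be answered correctly), and the subtask score is their sum, so each subtask is worth up to 200 points. The input format, function names and the "answer starts with yes/no" parsing rule are assumptions for illustration.

```python
def mme_subtask_score(results):
    """results: list of (answer_to_yes_question, answer_to_no_question), one per image.

    Ground truth is "yes" for the first question and "no" for the second.
    Parsing rule (an assumption): an answer counts if it starts with that word.
    """
    def is_correct(pred, truth):
        return pred.strip().lower().startswith(truth)

    per_image = [(is_correct(y, "yes"), is_correct(n, "no")) for y, n in results]
    n_images = len(per_image)
    accuracy = 100.0 * sum(a + b for a, b in per_image) / (2 * n_images)   # per question
    accuracy_plus = 100.0 * sum(a and b for a, b in per_image) / n_images  # per image
    return accuracy + accuracy_plus


def mme_total(subtask_results, perception_tasks, cognition_tasks):
    """Sum subtask scores into perception, cognition and overall totals."""
    scores = {name: mme_subtask_score(r) for name, r in subtask_results.items()}
    perception = sum(scores[t] for t in perception_tasks)
    cognition = sum(scores[t] for t in cognition_tasks)
    return perception, cognition, perception + cognition
```

The paired questions penalize a model that simply answers "yes" to everything. Such a model gets 50% accuracy but 0% accuracy+.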

MMStar – 1500 – 2024

This dataset is drawn from 6 VQA datasets and has been thoroughly filtered for high quality, ensuring the following:

- None of the samples can be answered by LLMs using only text-based world knowledge.

- In no instance does the question itself contain the answer, which would make the image superfluous.

- None of the samples can be recalled directly, as textual questions and answers, from LLMs' training corpora.

Much like MME, this dataset favors quality over quantity.

MathVista – 6.1k problems – 2024

Collected and curated from 31 different sources, this benchmark contains mathematics-specific questions spanning multiple reasoning types, task types, grade levels and contexts.

Each answer is either one of several choices or a number, which makes evaluation easy.
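Because each answer is either a choice or a number, scoring can be a simple normalize-and-compare step once the model's final answer has been extracted as a short string. The sketch below assumes that extraction has already happened. The function name and tolerance values are illustrative assumptions, and this is not the benchmark's official evaluation script.

```python
import math


def answers_match(prediction, answer, choices=None, rel_tol=1e-6, abs_tol=1e-9):
    """Compare an extracted prediction with the ground-truth answer.

    Multiple-choice: accept either the option letter (A, B, ...) or the option text.
    Numeric: compare as floats within a small tolerance.
    """
    pred = prediction.strip()
    if choices is not None:
        letters = [chr(ord("A") + i) for i in range(len(choices))]
        if pred.upper() in letters:
            pred = choices[letters.index(pred.upper())]
        return pred.lower() == str(answer).strip().lower()
    try:
        return math.isclose(float(pred), float(answer), rel_tol=rel_tol, abs_tol=abs_tol)
    except ValueError:
        return False  # non-numeric prediction for a numeric question
```

Comparing numbers as floats means "3.50" and "3.5" are treated as the same answer, which a plain string comparison would miss.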

MathVerse is a similar but distinct dataset that focuses more specifically on 2D geometry, 3D geometry and analytical functions.

AI2D – 15k – 2016

Focused squarely on science diagrams, this benchmark tests a VLM's understanding of several high-level concepts. A model must parse not only the pictures themselves but also their relative positions, the arrows connecting them, and the text presented for each component. The dataset contains 5,000 grade-school science diagrams with over 150,000 rich annotations, their ground-truth syntactic parses, and more than 15,000 corresponding multiple-choice questions.

ScienceQA – 21k – 2022

Yet another domain-specific dataset, this one tests the Chain of Thought (CoT) paradigm by evaluating elaborate explanations along with multiple-choice answers. It also evaluates chat-like capability by sending multiple texts and images to the VLM.

MM-Vet v2 – 200 questions – 2024

One of the most popular benchmarks, and also the smallest, this dataset assesses a model's recognition, knowledge, spatial awareness, language generation, OCR, and math capabilities by evaluating both single-image-single-text and chat-like scenarios. Once again, InternVL has one of the highest scores among open-source options.

VisDial – 120k images, 1.2M data points – 2020

Derived from COCO, this dataset evaluates a VLM chatbot's response to a series of image + text inputs followed by a question.

LLaVA-NeXT-Interleave – 17k – 2024

This benchmark evaluates how capable a model is when given multiple input images. It combines 9 new and 13 existing datasets, including Muir-Bench and ReMI.

Different datasets

Listed below are a number of extra visible query answering benchmarks which have easy (sometimes one phrase/phrase) questions and solutions and have particular space of focus

- SEED (19k, 2023) – A number of Selection questions of each pictures and movies

- VQA (2M, 2015) – One of many first datasets. Covers a variety of daily eventualities

- GQA (22M, 2019) – has compositional query answering, i.e., questions that relate a number of objects in a picture.

- VisWiz (8k, 2020) – is a dataset that was generated by blind individuals who every took a picture and recorded a spoken query about it, along with 10 crowdsourced solutions per visible query.
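Datasets with several crowdsourced answers per question, such as VQA and VizWiz, are commonly scored with the consensus "VQA accuracy": an answer counts as fully correct if at least three annotators gave it, averaged over every subset that leaves one annotator out. The sketch below is a simplified version that only lowercases and strips answers; the official evaluation also normalizes punctuation, articles and number words, which is omitted here.

```python
from itertools import combinations
from typing import Sequence

# Simplified consensus metric; answer normalization is reduced to lowercasing (an assumption).


def vqa_accuracy(prediction: str, human_answers: Sequence[str]) -> float:
    """Consensus accuracy against several crowdsourced answers.

    min(#matching annotators / 3, 1), averaged over every subset that
    leaves out exactly one annotator (designed for the 10-answer setting).
    """
    if not human_answers:
        raise ValueError("need at least one human answer")
    pred = prediction.strip().lower()
    answers = [a.strip().lower() for a in human_answers]
    subsets = list(combinations(answers, len(answers) - 1))
    scores = [min(sum(a == pred for a in subset) / 3.0, 1.0) for subset in subsets]
    return sum(scores) / len(scores)


answers = ["red"] * 3 + ["dark red"] * 7
print(round(vqa_accuracy("Red", answers), 2))  # 0.9
```

Three matching annotators out of ten yield 0.9 rather than 1.0, because in the three subsets that drop a matching annotator only two matches remain.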

Other Vision Benchmarks

Note that any vision task becomes a VLM task simply by stating the requirement in the form of text.

- For example, any image classification dataset can be used for zero-shot image classification by adding a text prompt such as "identify the salient object in the image". ImageNet is still one of the best and original datasets for this purpose, and almost all VLMs perform decently on this task.

- POPE is a curious dataset that exemplifies how one can create complexity from simple building blocks. Its questions ask about the presence or absence of objects in an image: an object detection model first finds the objects that are present, and negation is then used to create a negative (absence) sample set. It is also used to determine whether a VLM is hallucinating.
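The POPE recipe can be sketched in a few lines: one "yes" probe per detected object, an equal number of "no" probes for objects sampled from a vocabulary, and yes/no metrics on the model's replies. This is a minimal sketch assuming the detected objects are already available as a set; it implements only random negative sampling, the question template is illustrative, and the function names are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Set

# Sketch of POPE-style probe construction; template and sampling scheme are assumptions.


def build_pope_questions(present: Set[str], vocabulary: Sequence[str],
                         seed: int = 0) -> List[Dict[str, str]]:
    """Balanced yes/no probes for one image.

    `present` holds the objects found in the image (e.g. by a detector); an equal
    number of absent objects is sampled at random from `vocabulary` as negatives.
    """
    rng = random.Random(seed)
    absent = sorted(set(vocabulary) - present)
    negatives = rng.sample(absent, k=min(len(present), len(absent)))
    probes = [(obj, "yes") for obj in sorted(present)] + [(obj, "no") for obj in negatives]
    return [{"question": f"Is there a {obj} in the image?", "label": label}
            for obj, label in probes]


def pope_metrics(predictions: Sequence[str], labels: Sequence[str]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 with "yes" as the positive class, plus the yes-ratio."""
    pairs = list(zip(predictions, labels))
    tp = sum(p == "yes" and y == "yes" for p, y in pairs)
    fp = sum(p == "yes" and y == "no" for p, y in pairs)
    fn = sum(p == "no" and y == "yes" for p, y in pairs)
    tn = sum(p == "no" and y == "no" for p, y in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        # On a balanced set, a yes-ratio far above 0.5 suggests the model is hallucinating objects.
        "yes_ratio": sum(p == "yes" for p, _ in pairs) / len(pairs),
    }


for probe in build_pope_questions({"dog", "car"}, ["dog", "car", "cat", "tree", "bus"]):
    print(probe)
```

A hallucinating model tends to answer "yes" to the negative probes, which shows up as low precision together with a high yes-ratio.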

VLM Benchmarks specific to Documents

- Document classification – RVL-CDIP is a 16-class dataset with classes such as Letter, Email, Form, Invoice, etc. DocFormer is a good baseline.

- Semantic Entity Recognition – FUNSD, EPHOIE and CORD are all variations on printed documents, and they evaluate models on the F1 score of their respective classes. LiLT is a strong baseline.

- Multi-language Semantic Entity Recognition – Similar to the above, except that the documents are in more than one language. XFUND is a dataset with documents in 7 languages and roughly 100k images. LiLT is again among the top performers, as it can be paired with a pretrained multilingual language model.

- OCRBench – a well-rounded dataset of questions and answers for images containing text, whether natural scenes or documents. It has 5 tasks in total, ranging from OCR to VQA across varying scene and task complexities. InternVL2 is a strong baseline for this benchmark, showing all-round performance.

- DocVQA – essentially VQA for documents, usually with one-sentence or one-phrase questions and one-word answers.

- ViDoRe – focuses entirely on document retrieval, covering documents with text, figures, infographics and tables in the medical, business, scientific and administrative domains, in both English and French. ColPali is a good out-of-the-box model for this benchmark and task.
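ColPali represents each page image as many patch embeddings and scores a query with ColBERT-style late interaction (MaxSim): every query token is matched to its most similar patch, and those maxima are summed. The sketch below shows only this scoring step on toy 2-dimensional vectors; producing the embeddings requires the model itself, and the helper names are hypothetical.

```python
from typing import List, Sequence

# Late-interaction (MaxSim) scoring only; the embeddings here are toy values, not model outputs.
Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def late_interaction_score(query_tokens: Sequence[Vector], page_patches: Sequence[Vector]) -> float:
    """MaxSim: match every query token to its most similar page patch, then sum the maxima."""
    return sum(max(dot(q, p) for p in page_patches) for q in query_tokens)


def rank_pages(query_tokens: Sequence[Vector], pages: Sequence[Sequence[Vector]]) -> List[int]:
    """Return page indices ordered from most to least relevant."""
    scores = [late_interaction_score(query_tokens, page) for page in pages]
    return sorted(range(len(pages)), key=lambda i: scores[i], reverse=True)


query = [[1.0, 0.0], [0.0, 1.0]]
pages = [
    [[1.0, 0.0], [0.0, 0.2]],  # strongly matches the first query token only
    [[0.5, 0.5], [0.0, 1.0]],  # matches both tokens reasonably well
]
print(rank_pages(query, pages))  # [1, 0]
```

Keeping one vector per patch, rather than pooling a page into a single vector, is what lets a query token latch onto a small table cell or figure label.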

State of the Art

It is important for the reader to know that across the dozens of papers the author went through, one common observation was that OpenAI’s GPT-4 family and Google’s Gemini family of models appear to be the top performers, with one or two exceptions here and there. Open source models are still catching up to proprietary ones. This can be attributed to more focused research, more human hours and more proprietary data at their disposal in private settings, where large volumes of data can be generated, modified and annotated. That said, let’s list the best open source models and point out the criteria that led to their success.

Firstly, the LLaVA family of models is a close second across tasks. LLaVA-OneVision, its latest iteration, currently leads on MathVista, demonstrating strong language and logic comprehension.

The MM1 set of models also performs well on a variety of benchmarks, primarily due to its dataset curation and its use of mixture of experts in the decoders.
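A mixture-of-experts layer replaces a single dense block with several expert networks plus a learned router that sends each token to only a few of them, so capacity grows without every parameter being used for every token. The following minimal sketch assumes top-k softmax gating, a common scheme but not necessarily MM1's exact router; the experts are toy functions and all names are hypothetical.

```python
import math
from typing import Callable, List, Sequence

# Toy top-k gated mixture of experts; the gating scheme and experts are illustrative assumptions.


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(token: List[float], gate_weights: Sequence[Sequence[float]],
              experts: Sequence[Callable[[List[float]], List[float]]], k: int = 2) -> List[float]:
    """Route one token to its top-k experts and mix their outputs by renormalized gate scores."""
    # One router logit per expert: dot product of the token with that expert's gate vector.
    logits = [sum(w * x for w, x in zip(row, token)) for row in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected experts only, so their weights sum to 1.
    weights = softmax([logits[i] for i in top])
    out = [0.0] * len(token)
    for w, i in zip(weights, top):
        y = experts[i](token)  # unselected experts are never evaluated
        out = [o + w * v for o, v in zip(out, y)]
    return out


experts = [lambda v: [2 * x for x in v], lambda v: [-x for x in v], lambda v: [0.0 for _ in v]]
gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
print(moe_layer([2.0, 1.0], gate, experts, k=1))  # [4.0, 2.0]
```

With `k=1` only the highest-scoring expert runs; larger `k` trades extra compute for smoother mixing between experts.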

Overall, the best performers on the majority of the tasks were InternVL2, InternVL2-8B and Bunny-3B for large, medium and tiny models, respectively.

A couple of things are common across all of these models:

- the use of curated data for training, and

- processing image inputs at high resolution or through multiple image encoders, to ensure that details at every level of granularity are accurately captured.

One of the simplest ways to extract information from documents is to first use OCR to convert the image into layout-aware text and then feed it to an LLM along with a prompt describing the desired information. This bypasses the need for a VLM entirely by using OCR as a proxy for the image encoder. However, this approach has several problems, such as its dependency on OCR quality and the loss of any ability to parse visual cues.

LMDX is one such example, which converts OCR text into layout-aware text before sending it to an LLM.
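One generic way to produce layout-aware text is to group OCR words into lines by their vertical position and pad each line with spaces so that words land roughly at their horizontal position on the page. The sketch below is an illustration of that idea, not LMDX's own encoding. It assumes the OCR engine returns `(text, x0, y0, x1, y1)` boxes in pixels, and `char_width` and `line_tolerance` are hypothetical parameters you would tune per document set.

```python
from typing import List, Sequence, Tuple

# Generic character-grid serialization of OCR output (illustrative; not LMDX's format).
Word = Tuple[str, float, float, float, float]  # (text, x0, y0, x1, y1) in pixels


def layout_text(words: Sequence[Word], char_width: float = 10.0,
                line_tolerance: float = 5.0) -> str:
    """Render OCR words as plain text whose spacing roughly mirrors the page layout."""
    ordered = sorted(words, key=lambda w: (w[2], w[1]))  # top-to-bottom, then left-to-right
    lines: List[List[Word]] = []
    for word in ordered:
        # A word joins the current line if its top edge is close to that line's first word.
        if lines and abs(word[2] - lines[-1][0][2]) <= line_tolerance:
            lines[-1].append(word)
        else:
            lines.append([word])
    rendered = []
    for line in lines:
        row = ""
        for text, x0, _, _, _ in sorted(line, key=lambda w: w[1]):
            gap = int(x0 // char_width) - len(row)
            # Pad to the word's column, keeping at least one space between neighbouring words.
            row += " " * (max(1, gap) if row else max(0, gap)) + text
        rendered.append(row)
    return "\n".join(rendered)


words = [
    ("Invoice", 0, 0, 70, 12), ("No.", 100, 2, 130, 14),
    ("Total:", 0, 40, 60, 52), ("$42.00", 200, 41, 260, 53),
]
print(layout_text(words))
```

The resulting string can be pasted into an LLM prompt; keeping "Total:" and "$42.00" on the same line is exactly the kind of cue that plain, reading-order OCR text tends to lose.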

DONUT was one of the original VLMs to use an encoder-decoder architecture for OCR-free information extraction on documents.

The vision encoder is a Swin Transformer, which is well suited to capturing both low-level and high-level information from the image. BART is used as the decoder and is trained to perform multiple tasks such as classification, information extraction and document parsing.

DiT uses a self-supervised training scheme with Masked Image Modelling and a discrete VAE to learn image features. During fine-tuning, it uses an R-CNN variant as the head for object detection tasks such as word detection, table detection and layout analysis.
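In BEiT-style masked image modelling, which DiT follows, some patches are replaced by a shared [MASK] embedding, and the encoder is trained to predict the discrete visual token (from a dVAE tokenizer) at each masked position. This sketch shows only the masking and target-selection bookkeeping. Uniform random masking at a 40% ratio is a simplifying assumption (BEiT itself uses blockwise masking), and the token ids below are made up rather than produced by a real tokenizer.

```python
import random
from typing import List, Sequence, Set, Tuple

# Masking and target selection for masked image modelling (simplified: uniform random masking).


def choose_masked_patches(num_patches: int, mask_ratio: float = 0.4, seed: int = 0) -> Set[int]:
    """Pick the patch positions to hide from the encoder."""
    rng = random.Random(seed)
    return set(rng.sample(range(num_patches), round(num_patches * mask_ratio)))


def corrupt(patches: Sequence[List[float]], masked: Set[int],
            mask_embedding: List[float]) -> List[List[float]]:
    """Replace masked patch embeddings with a shared [MASK] embedding before the encoder."""
    return [mask_embedding if i in masked else patch for i, patch in enumerate(patches)]


def mim_targets(visual_tokens: Sequence[int], masked: Set[int]) -> List[Tuple[int, int]]:
    """Loss targets: the visual token id at each masked position only."""
    return [(i, visual_tokens[i]) for i in sorted(masked)]


# A 224x224 image cut into 16x16 patches gives a 14x14 grid of 196 patches.
masked = choose_masked_patches(196)
fake_tokens = [1000 + i for i in range(196)]  # placeholder for dVAE token ids
print(len(masked), mim_targets(fake_tokens, masked)[:3])
```

Computing the loss only on masked positions forces the encoder to infer hidden regions from visible context, which is what makes the learned features useful for downstream document tasks.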

LLaVA-NeXT is one of the latest models in the LLaVA family. It was trained on a variety of text-rich document data in addition to natural images to boost its performance on documents.

InternVL is one of the latest SOTA models, trained with an 8k context window on data that includes long texts, interleaved images for chat ability, medical data and videos.

The LayoutLM family of models uses a two-leg architecture on documents, where the bounding boxes are used to create 2D embeddings (called layout embeddings) for word tokens, leading to a richer, spatially aware representation of the text. A new pretraining objective called Word-Patch Alignment is introduced to teach the model which image patch a word belongs to.
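The layout-embedding idea can be sketched as follows: bounding boxes are rescaled onto a 0-1000 integer grid, each coordinate indexes an embedding table, and the resulting vectors are added to the word's text embedding. This is a toy illustration under stated assumptions: the tables are random placeholders rather than learned weights, the dimension is tiny, and real LayoutLM variants add further position terms. The class and function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

# Toy 2D layout embedding; tables are random placeholders, not trained LayoutLM weights.
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels


def normalize_box(box: Box, page_width: float, page_height: float) -> Tuple[int, int, int, int]:
    """Scale pixel coordinates onto a 0-1000 integer grid."""
    def scale(value: float, size: float) -> int:
        return min(1000, max(0, int(1000 * value / size)))
    x0, y0, x1, y1 = box
    return (scale(x0, page_width), scale(y0, page_height),
            scale(x1, page_width), scale(y1, page_height))


class LayoutEmbedding:
    """One lookup table for x coordinates and one for y; the four corner lookups are summed."""

    def __init__(self, dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.x_table = [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(1001)]
        self.y_table = [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(1001)]

    def __call__(self, box: Tuple[int, int, int, int]) -> List[float]:
        x0, y0, x1, y1 = box
        rows = [self.x_table[x0], self.y_table[y0], self.x_table[x1], self.y_table[y1]]
        return [sum(values) for values in zip(*rows)]


def token_input(word_embedding: Sequence[float], layout_embedding: Sequence[float]) -> List[float]:
    """A word token's input is its text embedding plus the embedding of its box."""
    return [w + p for w, p in zip(word_embedding, layout_embedding)]


box = normalize_box((50, 100, 150, 120), page_width=500, page_height=1000)
print(box)  # (100, 100, 300, 120)
embed = LayoutEmbedding(dim=8)
print(token_input([0.1] * 8, embed(box)))
```

Normalizing to a fixed grid makes the same relative position map to the same embedding regardless of the scan resolution.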

LiLT was trained with cross-modality interaction between its text and layout streams. It uses layout embeddings both as input and in its pretraining objectives, leading to a richer, spatially aware interaction of words with each other. It also uses a couple of unique losses.

DeepSeek-VL is one of the latest models; it uses modern backbones and builds its dataset from publicly available sources, taking variety, complexity and domain coverage into account.

TextMonkey is yet another recent model, which feeds images through an overlapped cropping strategy and uses text grounding objectives to achieve high accuracy on documents.
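Overlapped cropping splits a high-resolution page into fixed-size crops that share a margin with their neighbours, so text cut at one crop boundary appears whole in the adjacent crop. The sketch below computes the crop boxes only; the 448-pixel crop size and 64-pixel overlap are assumed example values, not TextMonkey's actual configuration, and pages smaller than one crop are assumed to be padded.

```python
from typing import List, Tuple

# Crop-box computation for overlapped cropping; crop size and overlap are assumed values.


def overlapping_crops(width: int, height: int, crop: int = 448,
                      overlap: int = 64) -> List[Tuple[int, int, int, int]]:
    """Return (x0, y0, x1, y1) crops that tile the page, sharing `overlap` pixels with neighbours."""
    if not 0 <= overlap < crop:
        raise ValueError("overlap must be in [0, crop)")
    stride = crop - overlap

    def starts(length: int) -> List[int]:
        if length <= crop:
            return [0]  # a single crop; the page is assumed to be padded up to `crop`
        positions = list(range(0, length - crop + 1, stride))
        # Add a final crop flush with the edge so no pixels are dropped.
        if positions[-1] != length - crop:
            positions.append(length - crop)
        return positions

    return [(x, y, x + crop, y + crop) for y in starts(height) for x in starts(width)]


print(len(overlapping_crops(1344, 896)))  # 12
```

Each crop would then be passed through the vision encoder at its native resolution, which keeps small document text legible compared with downscaling the whole page.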

Things to Consider for Training Your Own VLM

Let’s try to summarize the findings from the papers we have covered in the form of a run-book.

- Know your task well

- Are you training only for visual question answering? Or does the model need additional capabilities such as image retrieval or grounding of objects?

- Does it only need single-image prompts, or should it have chat-like functionality?

- Should it be real-time, or can your client wait?

- Questions like these can decide whether your model should be large, medium or small in size.

Coming up with answers to these questions will also help you zone in on a specific set of architectures, datasets, training paradigms, evaluation benchmarks and, ultimately, the papers you should focus on reading.

- Pick the current SOTA models and test them on your dataset by posing your questions as zero-shot or one-shot prompts. When your data is generic, the model will likely work with good prompt engineering.

- If your dataset is complex or niche and you need to train on it, you need to know how big your dataset is. Knowing this will help you decide whether to train from scratch or just fine-tune an existing model.

- If your dataset is too small, use synthetic data generation to multiply its size. You can use an existing model such as GPT, Gemini, Claude or InternVL. Make sure your synthetic data is carefully assessed for quality.

- Before training, make sure the loss is well thought out. Try to design as many objective functions as you can so that the task is well represented by the loss. A good loss can take your model to the next level: one of the best CLIP variants is nothing but CLIP with an added loss, and LayoutLM and BLIP-2 each use three losses for training. Take inspiration from them, as additional loss terms computed on shared features usually add only modest training cost.

- You also need to pick or design your benchmark from those mentioned in the benchmarks section, and come up with actual business metrics. Don’t rely solely on the loss function or the evaluation benchmark to decide whether a VLM is usable in a production setting. Your business is always unique, and no benchmark can be a proxy for customer satisfaction.

- If you are fine-tuning

- Train only the adapters first.

- In the second stage, train the vision encoder and the LLM using LoRA.

- Make sure your data is of the highest quality, as even a single bad example can hinder the progress of a hundred good ones.

- If you are training from scratch

- Pick backbones that perform strongly in your domain.

- Use the multi-resolution techniques mentioned earlier to capture details at all levels of the image.

- Use multiple vision encoders.

- Use mixture of experts for the LLM if your data is known to be complex.

- As an advanced practitioner, one can:

- First train very large models (50B+ parameters) and distill what they learn into a smaller model.

- Try multiple architectures – much like the Bunny family of models, one can train different combinations of vision and LLM components to end up with a family of models and find the right architecture.
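The second fine-tuning stage in the run-book relies on LoRA, which freezes a pretrained weight matrix W and learns a low-rank update, so a layer computes x W + (alpha / r) x A B with only A and B trainable. Below is a minimal pure-Python sketch of one LoRA-adapted linear layer following that formulation, with B initialized to zero so training starts exactly from the pretrained model. The rank and alpha values are assumed examples; in practice you would use a library such as Hugging Face PEFT rather than hand-rolling this.

```python
import random
from typing import List

# Minimal LoRA linear layer (row-vector convention); rank and alpha are example values.
Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LoRALinear:
    """y = x W + (alpha / r) * x A B, with W frozen and only A, B trainable."""

    def __init__(self, weight: Matrix, rank: int = 4, alpha: float = 8.0, seed: int = 0):
        rng = random.Random(seed)
        d_in, d_out = len(weight), len(weight[0])
        self.weight = weight  # frozen pretrained weight, shape (d_in, d_out)
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(rank)] for _ in range(d_in)]  # (d_in, r)
        self.B = [[0.0] * d_out for _ in range(rank)]  # (r, d_out), zero so the update starts at 0
        self.scale = alpha / rank

    def __call__(self, x: Matrix) -> Matrix:
        base = matmul(x, self.weight)
        update = matmul(matmul(x, self.A), self.B)
        return [[b + self.scale * u for b, u in zip(rb, ru)] for rb, ru in zip(base, update)]

    def trainable_parameters(self) -> int:
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
layer = LoRALinear(W, rank=2, alpha=4.0)
print(layer([[1.0, 2.0, 3.0]]))        # [[4.0, 5.0]]: identical to the frozen layer at init
print(layer.trainable_parameters())   # 10, versus 6 frozen weights in this toy case
```

The savings only appear at realistic sizes: for a 4096 x 4096 projection with rank 8, A and B hold about 65k parameters against roughly 16.8M in W. After training, (alpha / r) A B can be added into W so inference costs nothing extra.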

Conclusion

In just a short while, we reviewed over 50 arXiv papers, predominantly from 2022 to August 2024. Our focus was on understanding the core components of Vision-Language Models (VLMs), identifying available models and datasets for document extraction, examining the metrics that indicate a high-quality VLM, and identifying what you need to know to use a VLM effectively.

VLMs are one of the most rapidly advancing fields, and even after examining this extensive body of work, we have only scratched the surface. While numerous new techniques will undoubtedly emerge, we have laid a solid foundation for understanding what makes a VLM effective and how to develop one, if needed.