We have all used LLMs in various capacities to carry out a multitude of tasks. But how often have you used one for something specific to your culture? That's where all that processing power hits a brick wall. The English-centric nature of most large language models makes them exclusive to an audience familiar with the language.

AI4Bharat is here to change that. Their latest offering, Indic LLM-Arena, aspires to provide an open-source ecosystem for Indian language AI. This article serves as a guide to what Indic LLM-Arena offers and what its plans are for the future.

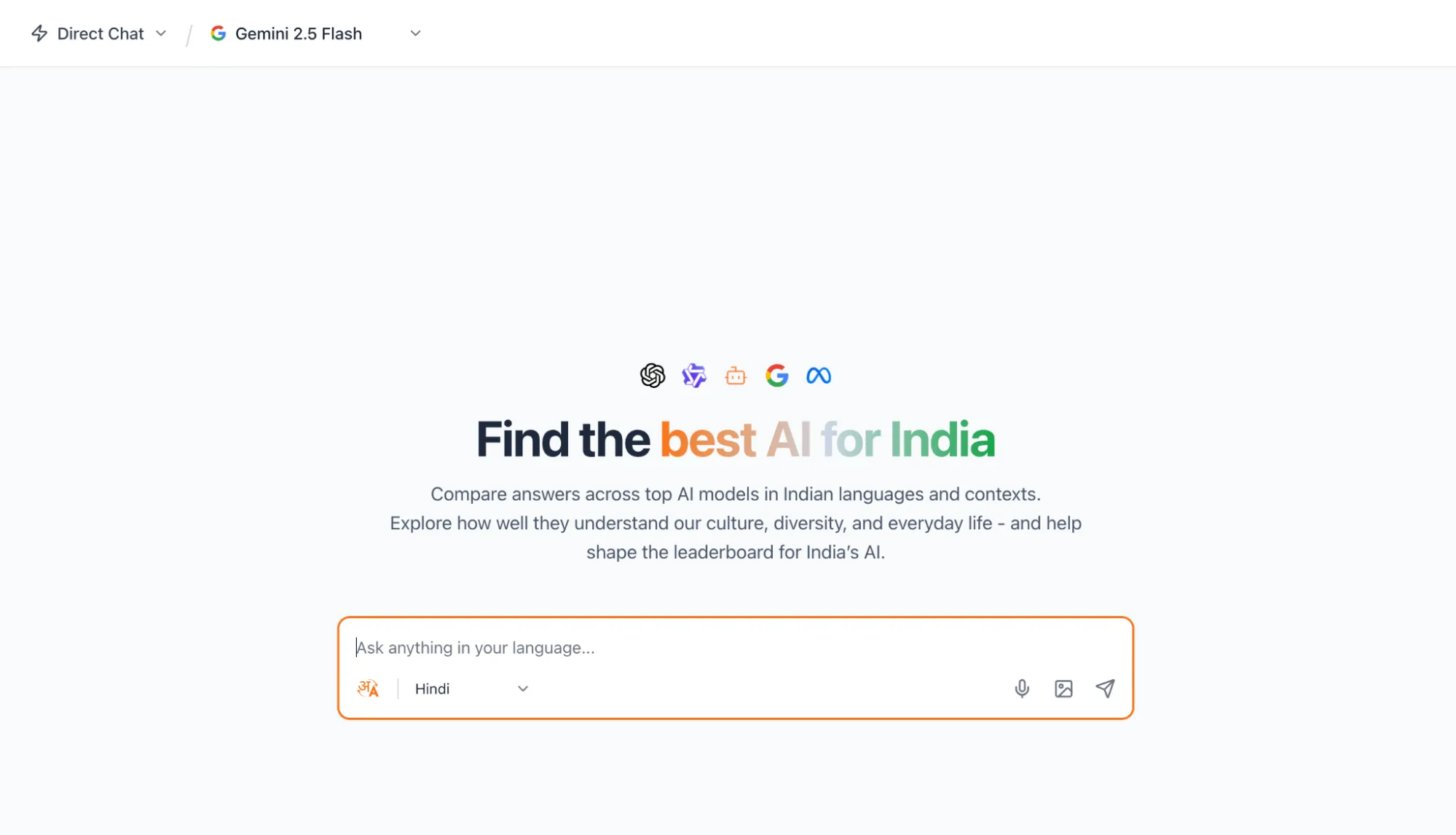

What is Indic LLM-Arena?

As the name suggests, Indic LLM-Arena is an Indianized version of LMArena, the industry standard for LLM benchmarks. An initiative by AI4Bharat (IIT Madras), supported by Google Cloud, the Indic LLM-Arena leaderboard is designed to benchmark LLMs on the three pillars that affect the Indian experience: language, context, and safety.

The Gaps in Current LLM Evaluation

The current leaderboards, while essential for gauging the progress of models, fail to capture the realities of our country. The gap exists across the following dimensions:

1. The Language Gap

The gap isn't merely due to a lack of support for vernacular languages. It is also partly due to a lack of knowledge about how Indic languages are actually used in communication, and to limited success in code-switching scenarios. Even models trained specifically on regional languages fail to perform satisfactorily as soon as the prompt isn't monolingual.
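To make "code-switching" concrete, here is a minimal sketch that flags a prompt as mixed-script when its letters come from more than one writing system. It is only an illustration: the function names `scripts_in` and `is_code_switched` are hypothetical, and it relies on the first word of each character's Unicode name as a rough script label. It will not catch romanized Hindi or Punjabi typed entirely in Latin letters, which is also a common form of code-switching.

```python
import unicodedata


def scripts_in(text: str) -> set[str]:
    """Return rough script labels (e.g. 'LATIN', 'DEVANAGARI') for the letters in text."""
    scripts = set()
    for ch in text:
        if not ch.isalpha():
            # Skip spaces, digits, punctuation, and combining vowel signs.
            continue
        name = unicodedata.name(ch, "")
        if name:
            # The first word of a Unicode character name is its script, by convention.
            scripts.add(name.split()[0])
    return scripts


def is_code_switched(text: str) -> bool:
    """Treat a prompt as code-switched if it mixes two or more scripts."""
    return len(scripts_in(text)) > 1


if __name__ == "__main__":
    print(scripts_in("वासु नाम का क्या अर्थ है"))          # a single script
    print(is_code_switched("मुझे ChatGPT से help चाहिए"))  # Devanagari mixed with Latin
```

A check like this could be used to sort your own test prompts into monolingual and mixed-script sets before comparing how a model handles each.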

2. The Cultural Gap

India isn't a monolith. There is no one-size-fits-all, pan-India response. This is due to the multicultural and multi-ethnic environment that India fosters. A culturally aware model would provide answers that are appropriate for the given language or region, a capability currently lacking in models.

3. The Safety & Fairness Gap

A model's safety and fairness system needs to be taught the kinds of risks that actually show up in India. That includes regional prejudices, communal misinformation, and the quieter ways caste stereotypes slip in. Off-the-shelf safety tests don't capture these realities, so the training has to account for them directly.

How to Access?

You can access Indic LLM-Arena at their official chat interface: https://arena.ai4bharat.org/#/chat

Make sure to create an account; otherwise you will be limited to the Random option, in which you can only compare two models, with one response per chat.

Hands-On: Testing the Interface

To get a grip on everything Indic LLM-Arena has to offer, we will put to the test the three main modes that the site operates in:

- Direct chat

- Compare models

- Random

You can toggle between the modes using the chat mode dropdown.

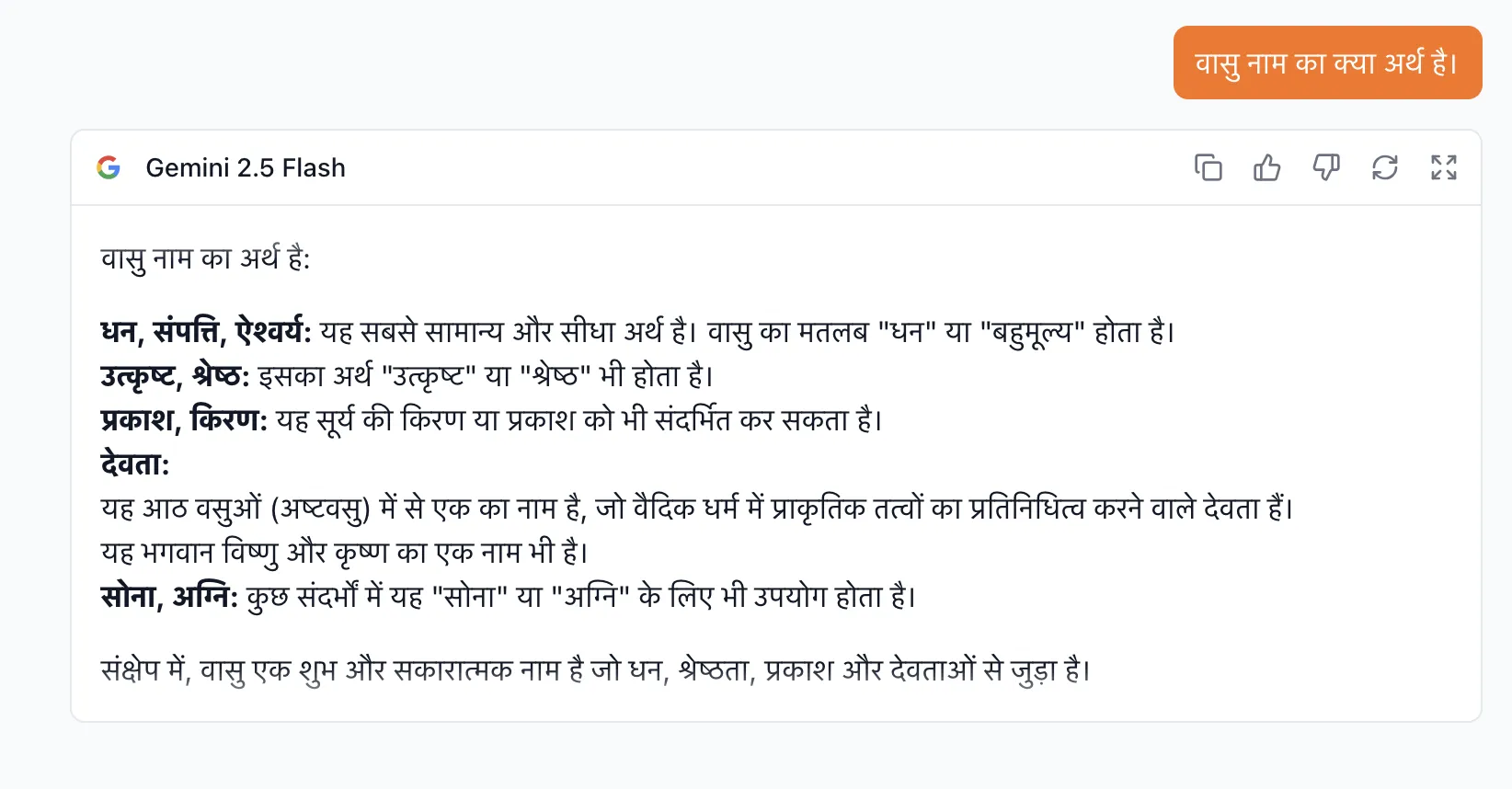

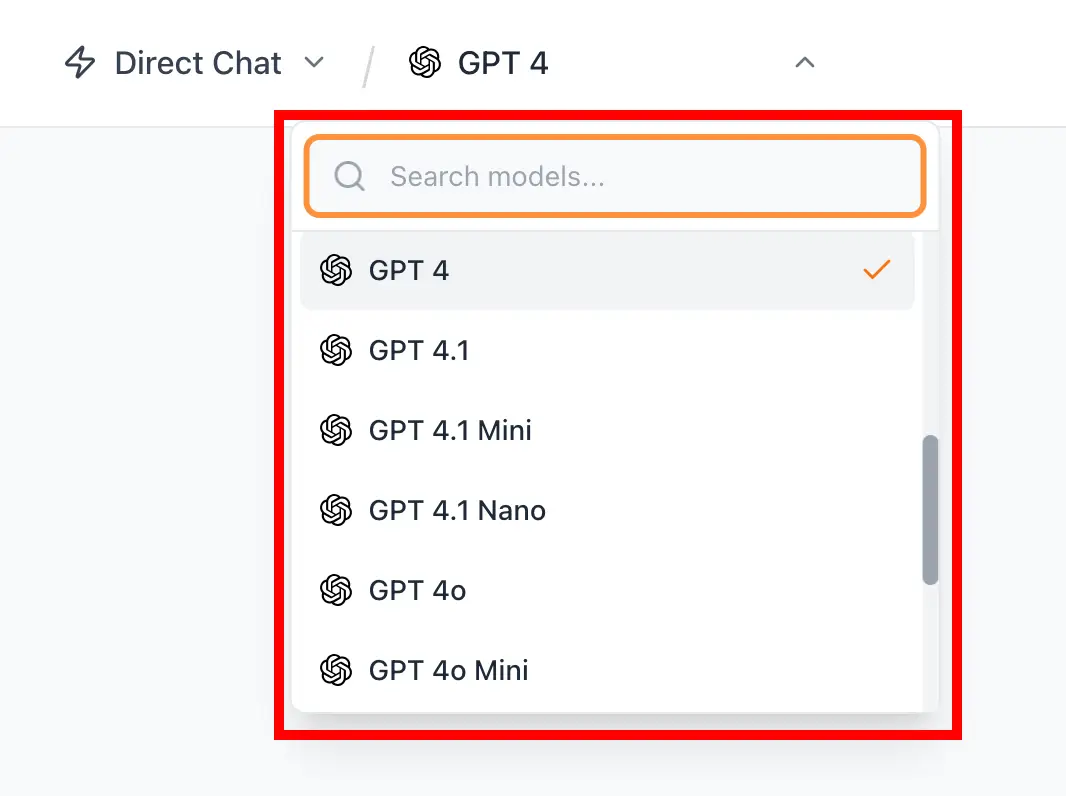

Direct Chat

For this test, I will give a prompt in Hindi to see how well the model responds. I'll ask the question “What does the name Vasu mean?” using the Gemini 2.5 Flash model.

Prompt: “वासु नाम का क्या अर्थ है”

Response:

Review: Promising stuff indeed! The response provided was in plain Hindi, with appropriate text emphasis. The information provided is factually correct, as can be corroborated from the Wikipedia page for the name.

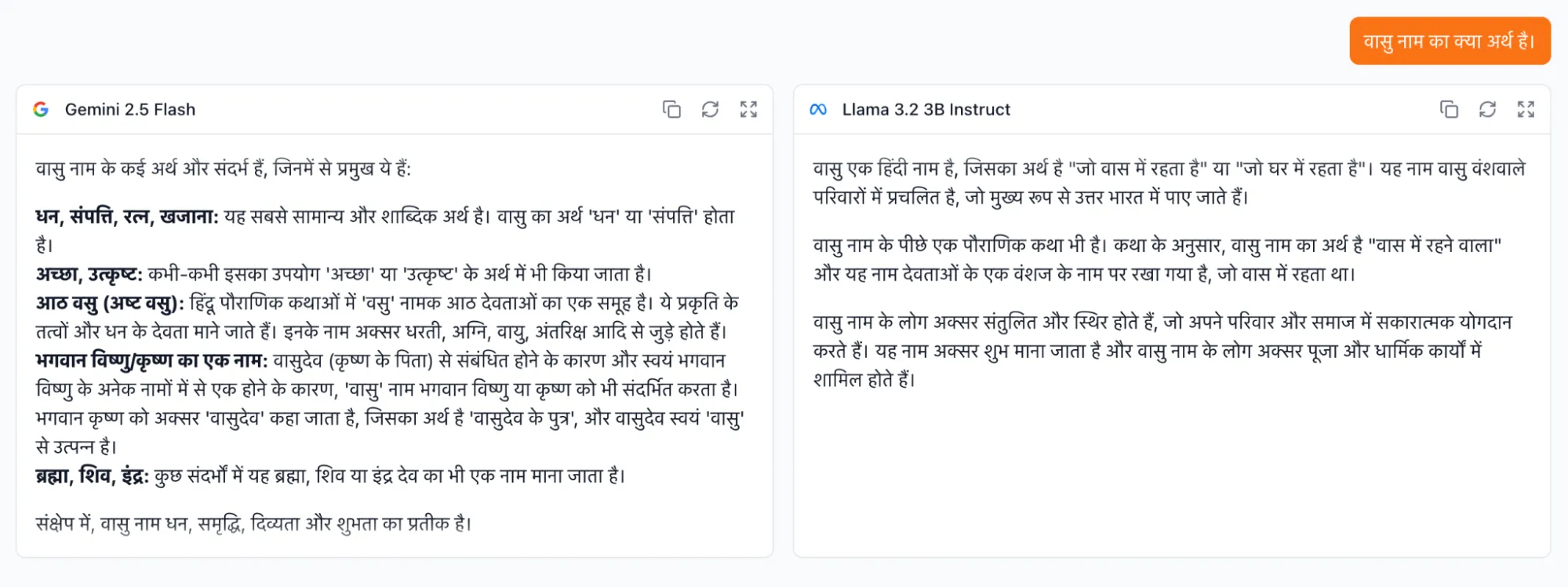

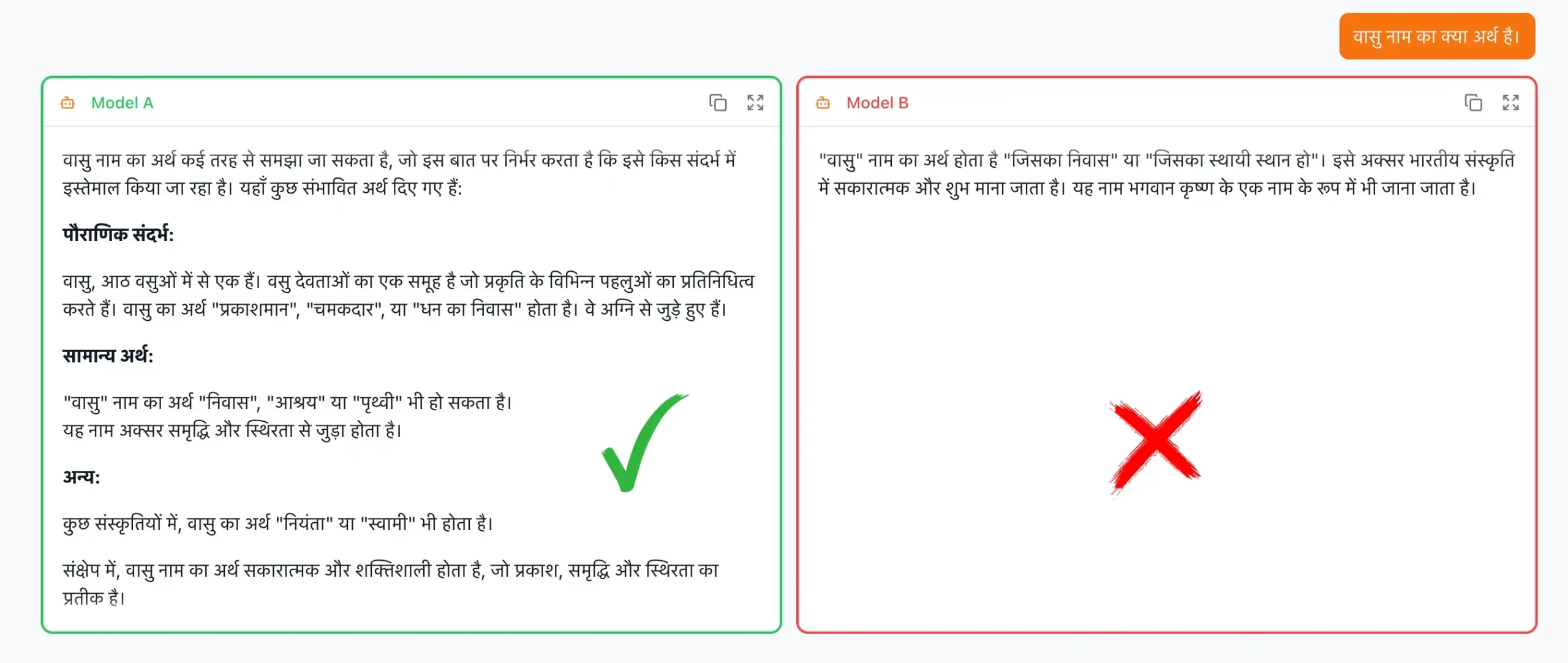

Compare Models

For this test, I will give the same prompt as in the previous task to the models Gemini 2.5 Flash and Llama 3.2 3B Instruct.

Prompt: “वासु नाम का क्या अर्थ है”

Response:

Review: This one was intriguing. Now that we can put two models side by side, the difference in response speed is conspicuous. Gemini 2.5 Flash was able to give an elaborate response in less than half the time it took Llama 3.2 3B to do the same. The responses were completely in Hindi.

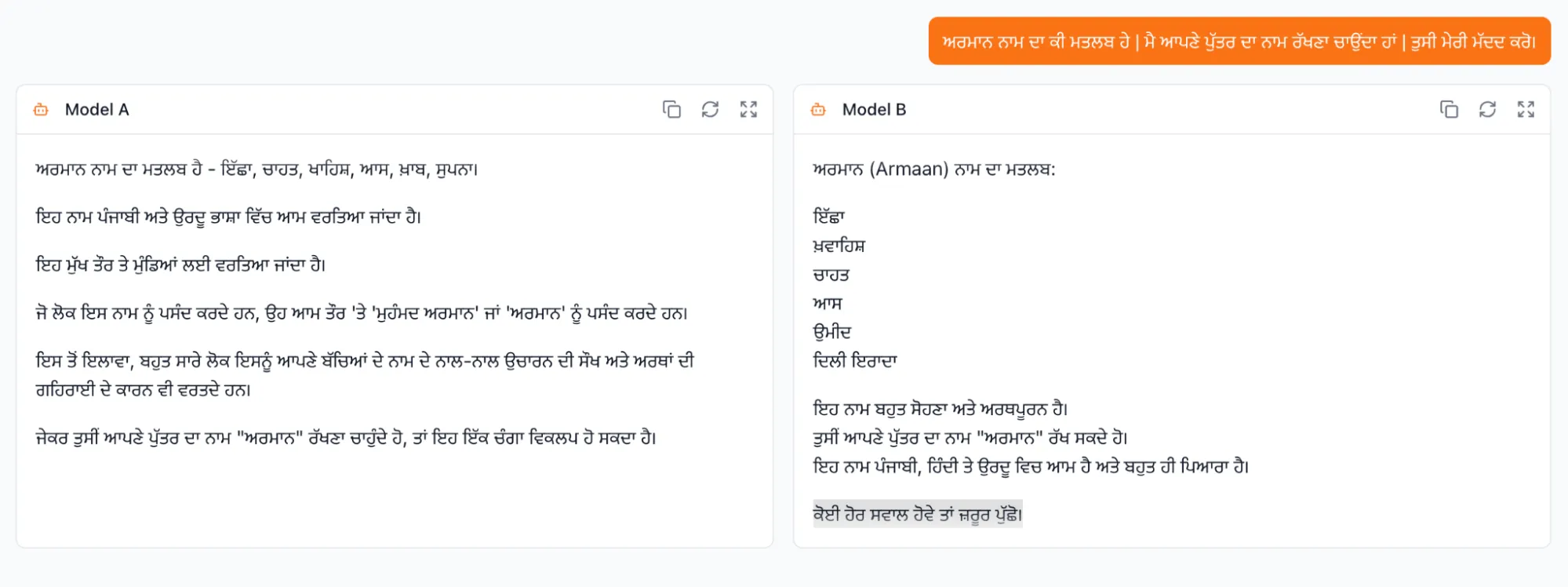

Random

For this test, I will give a prompt in Punjabi to two models (completely unknown) to see how well they respond. I'll ask the question “What does the name Armaan mean? I want to name my son Armaan. Please help me.”

Prompt: “ਅਰਮਾਨ ਨਾਮ ਦਾ ਕੀ ਮਤਲਬ ਹੇ | ਮੈ ਆਪਣੇ ਪੁੱਤਰ ਦਾ ਨਾਮ ਰੱਖਣਾ ਚਾਉਂਦਾ ਹਾਂ | ਤੁਸੀ ਮੇਰੀ ਮੱਦਦ ਕਰੋ।”

Response:

Review: The response provided was in Punjabi and was factually correct based on the Wikipedia page for the name. The two models that responded took some time to frame the response completely. This could be attributed to regional languages being somewhat more computation-heavy than English.
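One rough way to see why Indic text can be heavier to process is to look at how many bytes each character takes in UTF-8. The sketch below is an illustration under assumptions, not a measurement of the platform: `utf8_bytes_per_char` is a hypothetical helper, and bytes per character is only a proxy, since each model's tokenizer decides how many tokens a prompt actually costs. Server load and model size also affect response time.

```python
def utf8_bytes_per_char(text: str) -> float:
    """Average number of UTF-8 bytes per non-space character in text."""
    chars = [ch for ch in text if not ch.isspace()]
    if not chars:
        return 0.0
    return sum(len(ch.encode("utf-8")) for ch in chars) / len(chars)


if __name__ == "__main__":
    english = "What does the name Vasu mean"
    hindi = "वासु नाम का क्या अर्थ है"
    print(utf8_bytes_per_char(english))  # 1.0 (ASCII letters are 1 byte each)
    print(utf8_bytes_per_char(hindi))    # 3.0 (Devanagari code points are 3 bytes each)
```

Devanagari and Gurmukhi characters each occupy three bytes in UTF-8, so a tokenizer that falls back to byte-level pieces for under-represented scripts may emit more tokens for the same sentence. That is one plausible contributor to the slower responses, not a confirmed cause.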

Verdict

The three modes of LLM-Arena offered enough variety to keep my interest. Whether it's a blind model test, a comparison between favorites, or just the regular prompt-response routine, the platform has a lot on display. I could tell the difference in response times between English and vernacular queries. This further highlights the struggles of conventional LLMs in processing Indian languages. LLM-Arena provides a unified platform for testing newer models as well as a leaderboard for the best models.

But LLM-Arena isn't without its flaws. Here are some problems that I faced while using it:

- Context-less transliteration: Transliteration, while an amazing feature in itself, lacks context and has some latency. This makes it hard to write code-switched queries, as the tool has a hard time reconciling the vernacular language (that we had chosen) with loan words (like ChatGPT).

- Lack of model representation: The models offered as of now are different variants from four LLM families, namely ChatGPT (10), Gemini (5), Qwen (1), and Meta (3). There are two problems with this:

  - A lot of the heavy hitters like DeepSeek, Claude, and many more aren't available.

  - Local LLMs like Sarvam-1, which are language models specifically optimized for Indian languages, have no representation.

- UI Problems: The UI isn't without its flaws. I encountered the following issue while using the UI:

The Future

LLM-Arena is an open letter to people wanting to improve the proficiency of models in dealing with the languages of India. As mentioned by the team, the leaderboard is being curated as more and more data is provided to them by users like us. So, we can help in this process by offering our two cents about our own experiences of using these models. This would aid in the fine-tuning of these models and, in turn, make the models more accessible to people across the country.
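To give a feel for how individual votes can add up to a leaderboard, here is a minimal sketch of an Elo-style rating update, a common way to rank models from pairwise preferences. This is an assumption for illustration only: the article's source does not say how Indic LLM-Arena aggregates votes, and the function names, the starting rating of 1000, and the `k` factor of 32 are all hypothetical choices.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that model A is preferred over model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return updated (rating_a, rating_b) after one vote.

    score_a is 1.0 if the user preferred A, 0.0 if B, and 0.5 for a tie.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


if __name__ == "__main__":
    # Two hypothetical models start level; one user prefers model A.
    print(elo_update(1000.0, 1000.0, score_a=1.0))  # (1016.0, 984.0)
```

Under a scheme like this, a single vote only nudges the ratings, which is why a leaderboard needs many votes across many languages before its ordering becomes stable.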

The requirement of English will soon be obviated as initiatives such as Indic LLM-Arena come into the picture. By addressing localized challenges, providing alternatives to established names, and voicing regional concerns, it is a step in the right direction towards making AI more accessible and personalized.

Cast Your Vote

Indic LLM-Arena is entirely dependent on the feedback of its users: us! To make it the platform it aspires to be and to push the envelope when it comes to Indianized LLMs, we have to provide our inputs to the site. Visit their official page to contribute.


Frequently Asked Questions

A. It tests how well models handle Indian languages, cultural context, and safety concerns, giving a more realistic picture of performance for Indian users.

A. Direct Chat lets you test a single model, Compare Models shows side-by-side responses, and Random offers blind comparisons without knowing which model replied.

A. Some major models are missing, transliteration can lag, and a few interface issues still show up, though the platform is actively improving.
