We’re excited to announce that the ability to access AWS S3 data on Azure Databricks through Unity Catalog, enabling cross-cloud data governance, is now Generally Available. As the industry’s only unified and open governance solution for all data and AI assets, Unity Catalog empowers organizations to govern data wherever it lives, ensuring security, compliance, and interoperability across clouds. With this release, teams can directly configure and query AWS S3 data from Azure Databricks without needing to migrate or copy datasets. This makes it easier to standardize policies, access controls, and auditing across both ADLS and S3 storage.

In this blog, we’ll cover two key topics:

- How Unity Catalog enables cross-cloud data governance

- How to access and work with AWS S3 data from Azure Databricks

What is cross-cloud data governance on Unity Catalog?

As enterprises adopt hybrid and cross-cloud architectures, they often face fragmented access controls, inconsistent security policies, and duplicated governance processes. This complexity increases risk, drives up operational costs, and slows innovation.

Cross-cloud data governance with Unity Catalog simplifies this by extending a single permission model, centralized policy enforcement, and comprehensive auditing across data stored in multiple clouds, such as AWS S3 and Azure Data Lake Storage, all managed from within the Databricks Platform.

Key benefits of cross-cloud data governance on Unity Catalog include:

- Unified governance – Manage access policies, security controls, and compliance standards from one place without juggling siloed systems

- Frictionless data access – Securely discover, query, and analyze data across clouds in a single workspace, eliminating silos and reducing complexity

- Stronger security and compliance – Gain centralized visibility, tagging, lineage, data classification, and auditing across all your cloud storage

By bridging governance across clouds, Unity Catalog gives teams a single, secure interface to manage and maximize the value of all their data and AI assets, wherever they live.

How it works

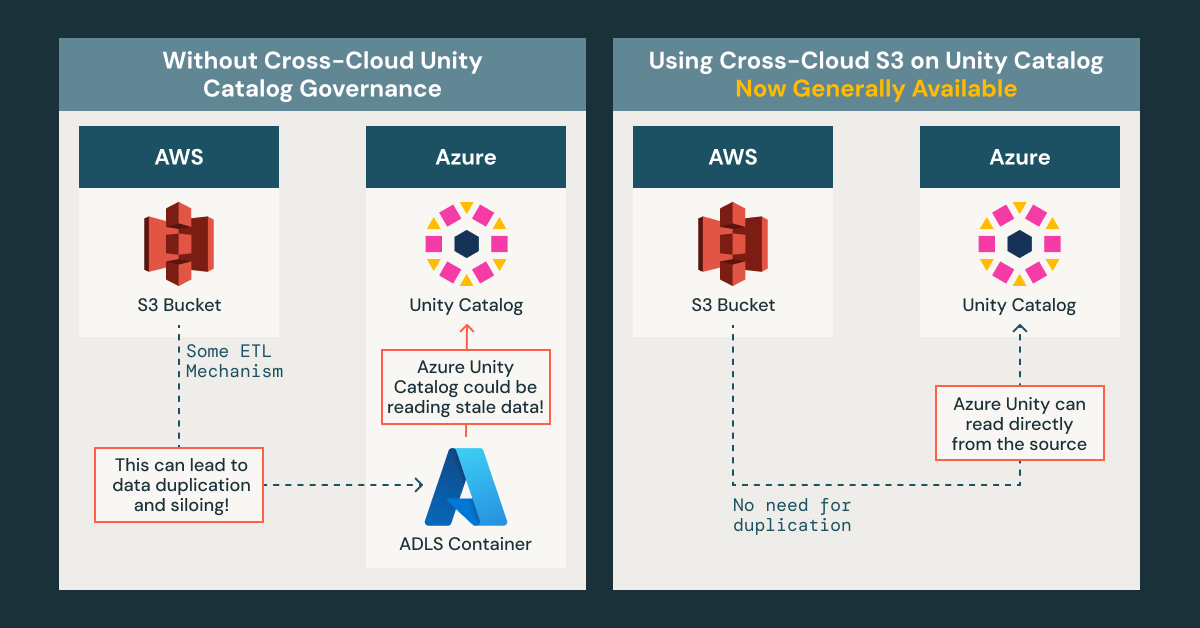

Previously, Unity Catalog on Azure Databricks only supported storage locations in ADLS. If you had data stored in an AWS S3 bucket but needed to access and process it with Unity Catalog on Azure Databricks, the traditional approach required extracting, transforming, and loading (ETL) that data into an ADLS container. That process is both costly and time-consuming, and it also increases the risk of maintaining duplicate, outdated copies of data.

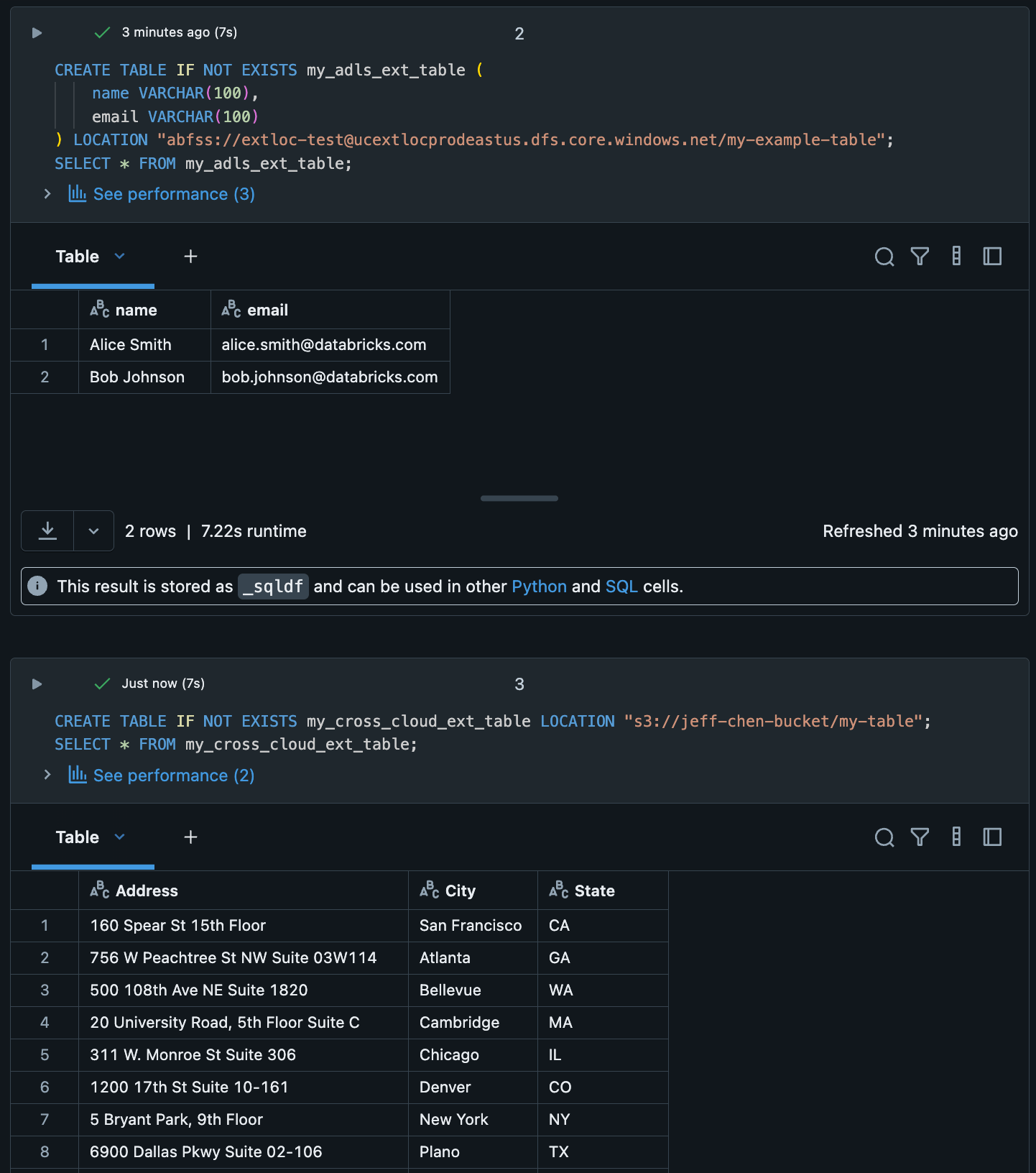

With this GA release, you can now set up an external cross-cloud S3 location directly from Unity Catalog on Azure Databricks. This lets you read and govern your S3 data in place, without migration or duplication.

You can configure access to your AWS S3 bucket in a few steps:

1. Set up your storage credential and create an external location. Once your AWS IAM and S3 resources are provisioned, you can create your storage credential and external location directly in Catalog Explorer in Azure Databricks.

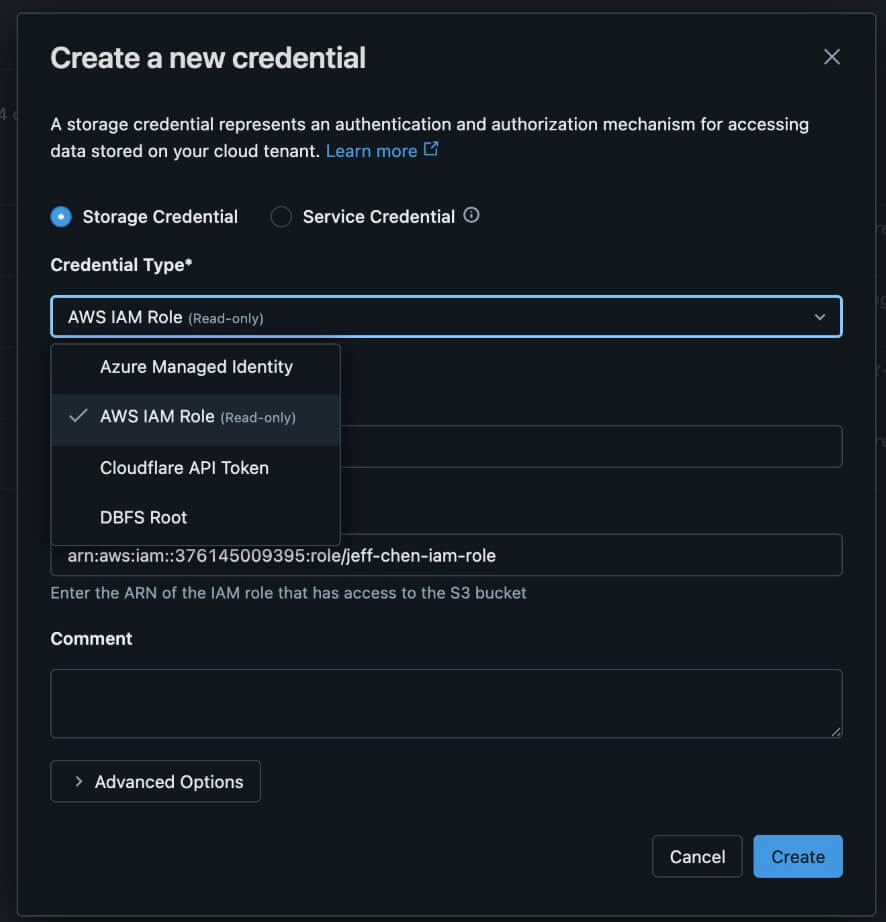

- To create your storage credential, navigate to Credentials in Catalog Explorer. Select AWS IAM Role (Read-only), fill in the required fields, and add the trust policy snippet to your IAM role when prompted.

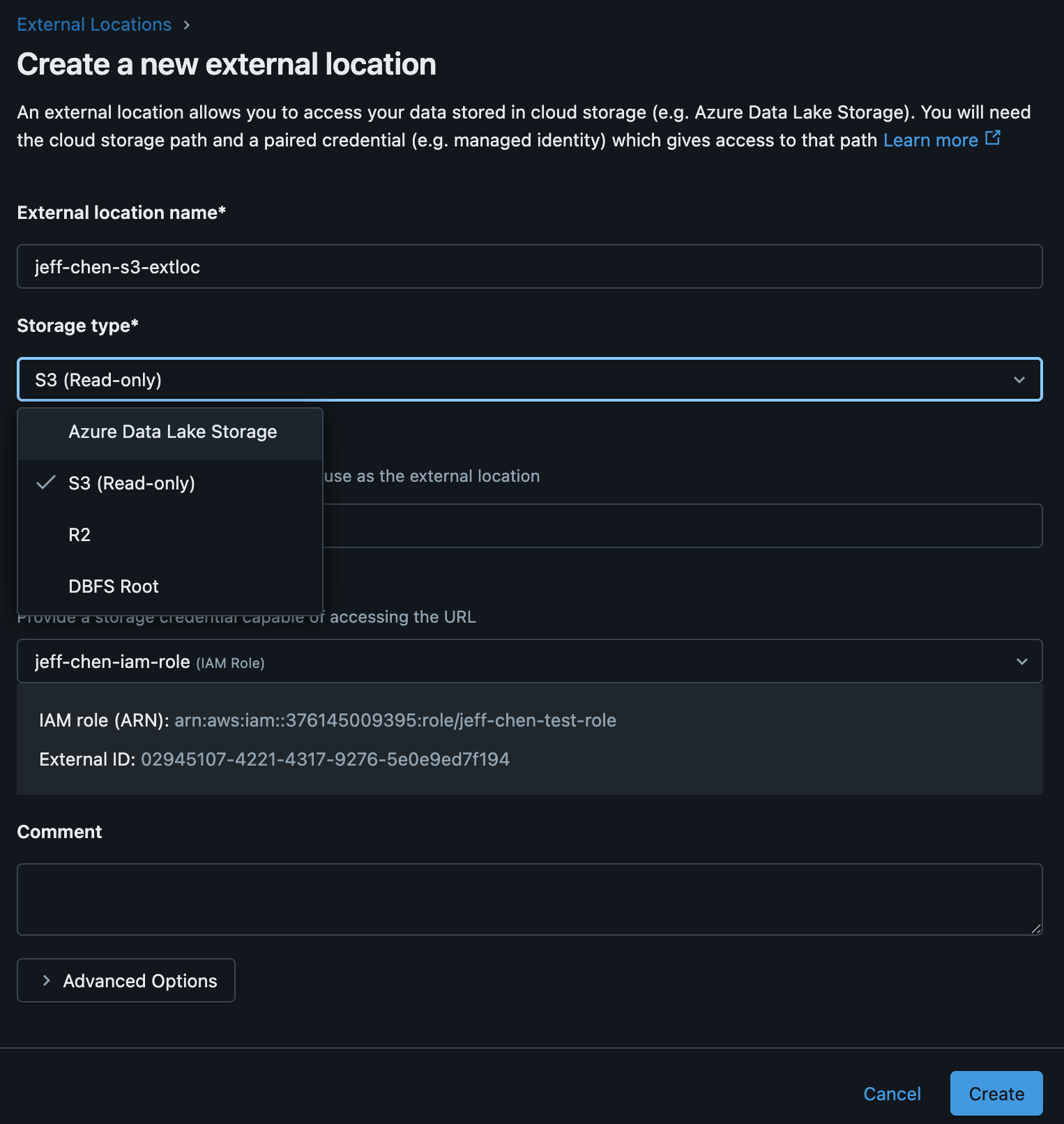
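The trust policy snippet that Catalog Explorer asks for is a standard AWS IAM role trust policy: it lets a named principal call `sts:AssumeRole`, gated by an external ID. The sketch below shows that shape as a Python dict, together with a minimal read-only S3 permissions policy for the role. It assumes the principal ARN and external ID are the values Catalog Explorer displays. The helper names, placeholder ARNs, and the exact set of S3 actions are hypothetical. Copy the real snippet from Catalog Explorer, and use the Databricks documentation for the authoritative list of required actions.

```python
import json


def build_trust_policy(databricks_principal_arn: str, external_id: str) -> dict:
    """Return an IAM trust policy that lets one principal assume the role.

    Both arguments are placeholders: use the principal ARN and external ID
    that Catalog Explorer shows when you create the storage credential.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": [databricks_principal_arn]},
                "Action": "sts:AssumeRole",
                # Only callers presenting this external ID may assume the role.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


def build_read_only_s3_policy(bucket: str) -> dict:
    """Return a hypothetical minimal read-only permissions policy for one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Object-level read access.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
            {   # Bucket-level listing and region lookup.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
        ],
    }


if __name__ == "__main__":
    trust = build_trust_policy(
        "arn:aws:iam::111122223333:role/example-databricks-principal",  # placeholder
        "00000000-0000-0000-0000-000000000000",  # placeholder external ID
    )
    print(json.dumps(trust, indent=2))
    print(json.dumps(build_read_only_s3_policy("example-sales-bucket"), indent=2))
```

Scoping `s3:GetObject` to objects and `s3:ListBucket` to the bucket ARN mirrors how AWS evaluates those actions. It also keeps the role consistent with the read-only credential type.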

- To create an external location, navigate to External locations in Catalog Explorer. Then select the credential you just set up and complete the remaining details.


2. Apply permissions. On the Credentials page in Catalog Explorer, you can now see your ADLS and S3 storage together in one place in Azure Databricks. From there, you can apply consistent permissions across both storage systems.
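If you prefer SQL to Catalog Explorer, the external location and its grants can also be written as Unity Catalog statements. The sketch below only builds the statement strings, which you would run from a notebook or SQL warehouse. It assumes the SQL path accepts `s3://` URLs the same way Catalog Explorer does, and that a storage credential named `s3_readonly_cred` and an ADLS location named `sales_adls` already exist. All location names, URLs, and the `data-analysts` group are hypothetical. Issuing the same grants on the ADLS and S3 locations illustrates the consistent-permissions step.

```python
import re

# Unity Catalog privileges on external locations that suit read-only access.
READ_PRIVILEGES = ("READ FILES", "CREATE EXTERNAL TABLE")

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def _check_ident(name: str) -> str:
    """Reject names that would need quoting, to keep the sketch simple."""
    if not _IDENT.match(name):
        raise ValueError(f"unsupported identifier: {name!r}")
    return name


def create_external_location_sql(name: str, url: str, credential: str) -> str:
    """Build a CREATE EXTERNAL LOCATION statement for an s3:// or abfss:// URL."""
    if not url.startswith(("s3://", "abfss://")) or "'" in url:
        raise ValueError(f"expected a plain s3:// or abfss:// URL, got {url!r}")
    return (
        f"CREATE EXTERNAL LOCATION IF NOT EXISTS {_check_ident(name)} "
        f"URL '{url}' WITH (STORAGE CREDENTIAL {_check_ident(credential)})"
    )


def grant_sql(locations: list[str], principal: str,
              privileges: tuple[str, ...] = READ_PRIVILEGES) -> list[str]:
    """Build identical GRANT statements for every location, ADLS or S3."""
    privs = ", ".join(privileges)
    # Principals are backtick-quoted; a literal backtick is escaped by doubling it.
    quoted = "`" + principal.replace("`", "``") + "`"
    return [
        f"GRANT {privs} ON EXTERNAL LOCATION {_check_ident(loc)} TO {quoted}"
        for loc in locations
    ]


if __name__ == "__main__":
    print(create_external_location_sql(
        "sales_s3", "s3://example-sales-bucket/raw", "s3_readonly_cred"))
    for stmt in grant_sql(["sales_adls", "sales_s3"], "data-analysts"):
        print(stmt)
```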

3. Start querying! You’re ready to query your S3 data directly from your Azure Databricks workspace.
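Once a table or volume is registered over the S3 location, querying it looks the same as querying ADLS-backed data. In a notebook you would typically pass a statement to `spark.sql(...)` or list files with `dbutils.fs.ls(...)`. Because those objects exist only inside Databricks, the sketch below just builds their inputs: a three-level table name for a `SELECT`, and a `/Volumes/...` path. The catalog, schema, table, and volume names are hypothetical.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def three_level_name(catalog: str, schema: str, obj: str) -> str:
    """Join a Unity Catalog catalog.schema.object name after validating each part."""
    for part in (catalog, schema, obj):
        if not _IDENT.match(part):
            raise ValueError(f"unsupported identifier: {part!r}")
    return f"{catalog}.{schema}.{obj}"


def select_sql(catalog: str, schema: str, table: str, limit: int = 10) -> str:
    """Build a simple read query; pass the result to spark.sql() in a notebook."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    return f"SELECT * FROM {three_level_name(catalog, schema, table)} LIMIT {limit}"


def volume_path(catalog: str, schema: str, volume: str, *subpath: str) -> str:
    """Build a /Volumes/... path for a volume, e.g. for dbutils.fs.ls()."""
    three_level_name(catalog, schema, volume)  # validation only
    return "/".join(["", "Volumes", catalog, schema, volume, *subpath])


if __name__ == "__main__":
    print(select_sql("main", "sales", "orders_s3"))
    print(volume_path("main", "sales", "raw_files_s3", "2024", "01"))
    # Inside a Databricks notebook (not runnable here):
    #   spark.sql(select_sql("main", "sales", "orders_s3")).show()
    #   dbutils.fs.ls(volume_path("main", "sales", "raw_files_s3"))
```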

What’s supported in the GA release?

With GA, you can access external tables and volumes in S3 from Azure Databricks. Specifically, the following features are now supported in a read-only capacity:

- AWS IAM position storage credentials

- S3 external locations

- S3 external tables

- S3 external volumes

- S3 access via dbutils.fs

- Delta Sharing of S3 data from Unity Catalog on Azure
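External tables and volumes like those listed above are registered with ordinary Unity Catalog DDL that points at a path inside the S3 external location. The sketch below builds those statements under several assumptions: the S3 path already holds a Delta table written elsewhere, you hold `CREATE EXTERNAL TABLE` or `CREATE EXTERNAL VOLUME` on the location, and every name and URL is hypothetical. Because access is read-only, the sketch only registers data that already exists rather than writing new files.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def _fqn(catalog: str, schema: str, name: str) -> str:
    """Validate and join a three-level Unity Catalog name."""
    for part in (catalog, schema, name):
        if not _IDENT.match(part):
            raise ValueError(f"unsupported identifier: {part!r}")
    return f"{catalog}.{schema}.{name}"


def _s3_url(url: str) -> str:
    """Accept only s3:// URLs without embedded single quotes."""
    if not url.startswith("s3://") or "'" in url:
        raise ValueError(f"expected a plain s3:// URL, got {url!r}")
    return url


def external_table_sql(catalog: str, schema: str, table: str, url: str) -> str:
    """Register an existing Delta table stored at an S3 path as an external table."""
    return (f"CREATE TABLE IF NOT EXISTS {_fqn(catalog, schema, table)} "
            f"USING DELTA LOCATION '{_s3_url(url)}'")


def external_volume_sql(catalog: str, schema: str, volume: str, url: str) -> str:
    """Register an S3 path as an external volume for non-tabular files."""
    return (f"CREATE EXTERNAL VOLUME IF NOT EXISTS {_fqn(catalog, schema, volume)} "
            f"LOCATION '{_s3_url(url)}'")


if __name__ == "__main__":
    print(external_table_sql("main", "sales", "orders_s3",
                             "s3://example-sales-bucket/raw/orders"))
    print(external_volume_sql("main", "sales", "raw_files_s3",
                              "s3://example-sales-bucket/raw/files"))
```

Once the volume exists, its files can be browsed with `dbutils.fs` through the `/Volumes/<catalog>/<schema>/<volume>/` path.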

Getting Began

To try out cross-cloud data governance on Azure Databricks, check out our documentation on how to set up storage credentials for IAM roles for S3 storage on Azure Databricks. Note that your cloud provider may charge fees, such as data egress charges, when data is accessed from outside its cloud services. To get started with Unity Catalog, follow our Unity Catalog guide for Azure.

Join the Unity Catalog product and engineering team at Data + AI Summit, June 9–12 at the Moscone Center in San Francisco! Get a first look at the latest innovations in data and AI governance. Register now to secure your spot!