The intelligence in artificial intelligence is rooted in vast quantities of data upon which machine learning (ML) models are trained, with recent large language models like GPT-4 and Gemini processing trillions of tiny units of data called tokens. This training dataset does not simply consist of raw information scraped from the internet. For the training data to be effective, it also needs to be labeled.

Data labeling is a process in which raw, unrefined information is annotated or tagged to add context and meaning. This improves the accuracy of model training, because you are in effect marking or pointing out what you want your system to recognize. Some data labeling examples include sentiment analysis in text, identifying objects in images, transcribing words in audio, or labeling actions in video sequences.

It’s no surprise that data labeling quality has a huge impact on training. Originally coined by William D. Mellin in 1957, “Garbage in, garbage out” has become somewhat of a mantra in machine learning circles. ML models trained on incorrect or inconsistent labels will have a difficult time adapting to unseen data and may exhibit biases in their predictions, causing inaccuracies in the output. Also, low-quality data can compound, causing issues further downstream.

This comprehensive guide to data labeling systems will help your team boost data quality and gain a competitive edge no matter where you are in the annotation process. First I’ll focus on the platforms and tools that comprise a data labeling architecture, exploring the trade-offs of various technologies, and then I’ll move on to other key considerations including reducing bias, protecting privacy, and maximizing labeling accuracy.

Understanding Data Labeling in the ML Pipeline

The training of machine learning models generally falls into three categories: supervised, unsupervised, and reinforcement learning. Supervised learning relies on labeled training data, which presents input data points associated with correct output labels. The model learns a mapping from input features to output labels, enabling it to make predictions when presented with unseen input data. This is in contrast with unsupervised learning, where unlabeled data is analyzed in search of hidden patterns or data groupings. With reinforcement learning, the training follows a trial-and-error process, with humans involved mainly in the feedback stage.

Most modern machine learning models are trained via supervised learning. Because high-quality training data is so important, it must be considered at each step of the training pipeline, and data labeling plays a vital role in this process.

Before data can be labeled, it must first be collected and preprocessed. Raw data is collected from a wide variety of sources, including sensors, databases, log files, and application programming interfaces (APIs). It often has no standard structure or format and contains inconsistencies such as missing values, outliers, or duplicate records. During preprocessing, the data is cleaned, formatted, and transformed so it is consistent and compatible with the data labeling process. A variety of techniques may be used. For example, rows with missing values can be removed or updated via imputation, a method where values are estimated via statistical analysis, and outliers can be flagged for investigation.
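As a minimal sketch of the imputation and outlier flagging described above, the following uses only the Python standard library. The function names, the choice of median imputation, and the modified z-score cutoff of 3.5 are illustrative assumptions, not a prescribed preprocessing recipe.

```python
from statistics import median


def impute_median(values):
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def flag_outliers(values, threshold=3.5):
    """Return indices whose modified z-score exceeds the threshold.

    The score is based on the median absolute deviation (MAD). Flagged
    rows are meant for investigation, not automatic deletion.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:  # MAD is zero when most values equal the median; skip flagging
        return []
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - med) / mad > threshold]


readings = [10, 12, None, 11, 13, 95]   # hypothetical sensor column
cleaned = impute_median(readings)        # [10, 12, 12, 11, 13, 95]
suspicious = flag_outliers(cleaned)      # [5], the index of the value 95
```

The median is used for both steps because, unlike the mean, it is not pulled toward the very outliers the second step is trying to find.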

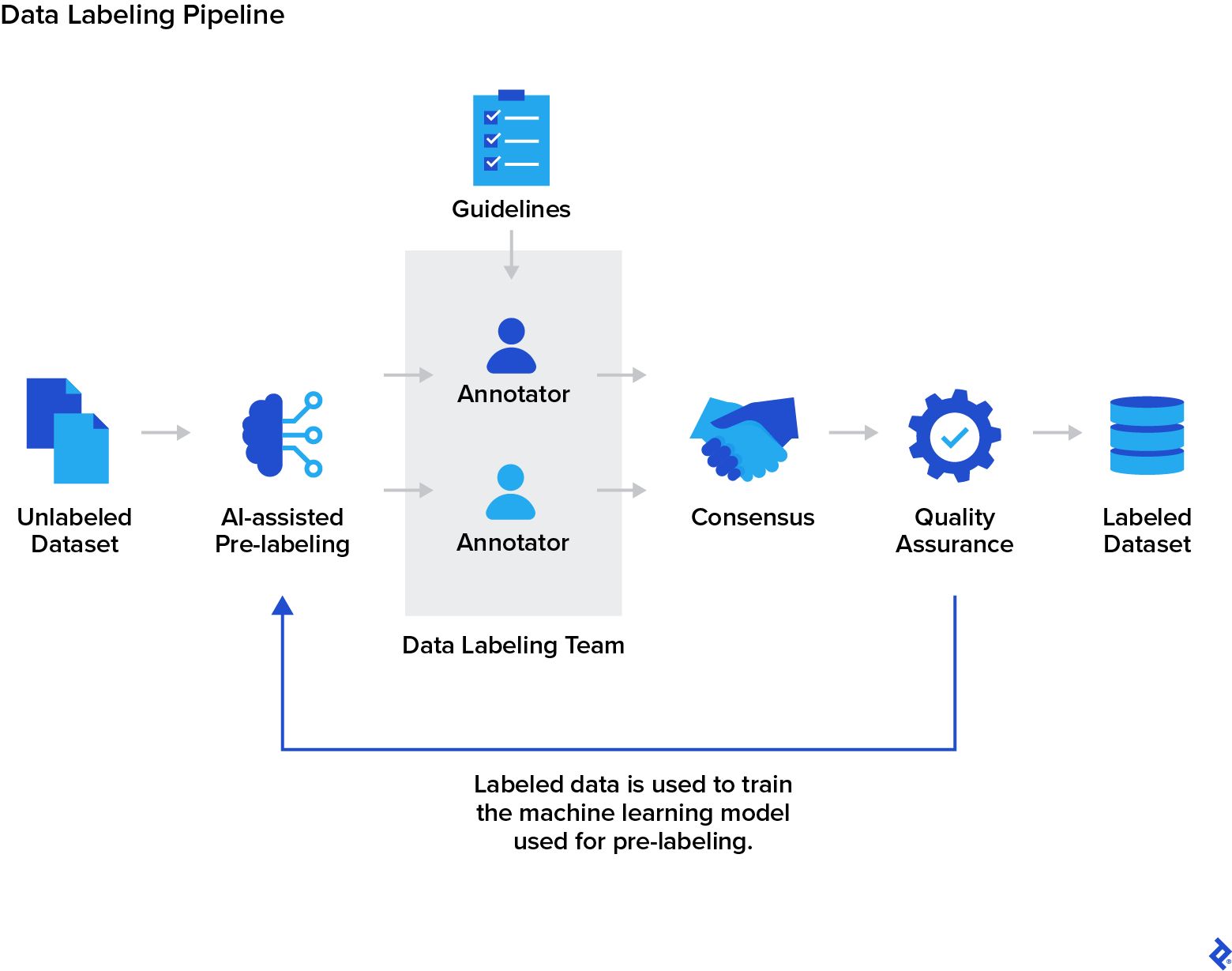

Once the data is preprocessed, it is labeled or annotated in order to provide the ML model with the information it needs to learn. The specific approach depends on the type of data being processed; annotating images requires different techniques than annotating text. While automated labeling tools exist, the process benefits heavily from human intervention, especially when it comes to accuracy and avoiding any biases introduced by AI. After the data is labeled, the quality assurance (QA) stage ensures the accuracy, consistency, and completeness of the labels. QA teams often employ double-labeling, where multiple labelers annotate a subset of the data independently and compare their results, reviewing and resolving any differences.

Next, the model undergoes training, using the labeled data to learn the patterns and relationships between the inputs and the labels. The model’s parameters are adjusted in an iterative process to make its predictions more accurate with respect to the labels. To evaluate the effectiveness of the model, it is then tested with labeled data it has not seen before. Its predictions are quantified with metrics such as accuracy, precision, and recall. If a model is performing poorly, adjustments can be made before retraining, one of which is improving the training data to address noise, biases, or data labeling issues. Finally, the model can be deployed into production, where it can interact with real-world data. It is important to monitor the performance of the model in order to identify any issues that might require updates or retraining.
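To make the evaluation metrics concrete, here is a small sketch that computes accuracy, precision, and recall for a binary classifier from held-out labels. The function name and the toy label lists are assumptions for illustration; in practice a library routine would normally be used.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Precision: of everything predicted positive, how much was right?
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Recall: of everything actually positive, how much was found?
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical ground-truth labels and model predictions on unseen data.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
# 3 true positives, 1 false positive, 1 false negative, 3 true negatives,
# so accuracy, precision, and recall are all 0.75 here.
```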

Identifying Data Labeling Types and Methods

Before designing and building a data labeling architecture, all of the data types that will be labeled must be identified. Data can come in many different forms, including text, images, video, and audio. Each data type comes with its own unique challenges, requiring a distinct approach for accurate and consistent labeling. Additionally, some data labeling software includes annotation tools geared toward specific data types. Many annotators and annotation teams also specialize in labeling certain data types. The choice of software and team will depend on the project.

For example, the data labeling process for computer vision might include categorizing digital images and videos, and creating bounding boxes to annotate the objects within them. Waymo’s Open Dataset is a publicly available example of a labeled computer vision dataset for autonomous driving; it was labeled by a combination of private and crowdsourced data labelers. Other applications for computer vision include medical imaging, surveillance and security, and augmented reality.

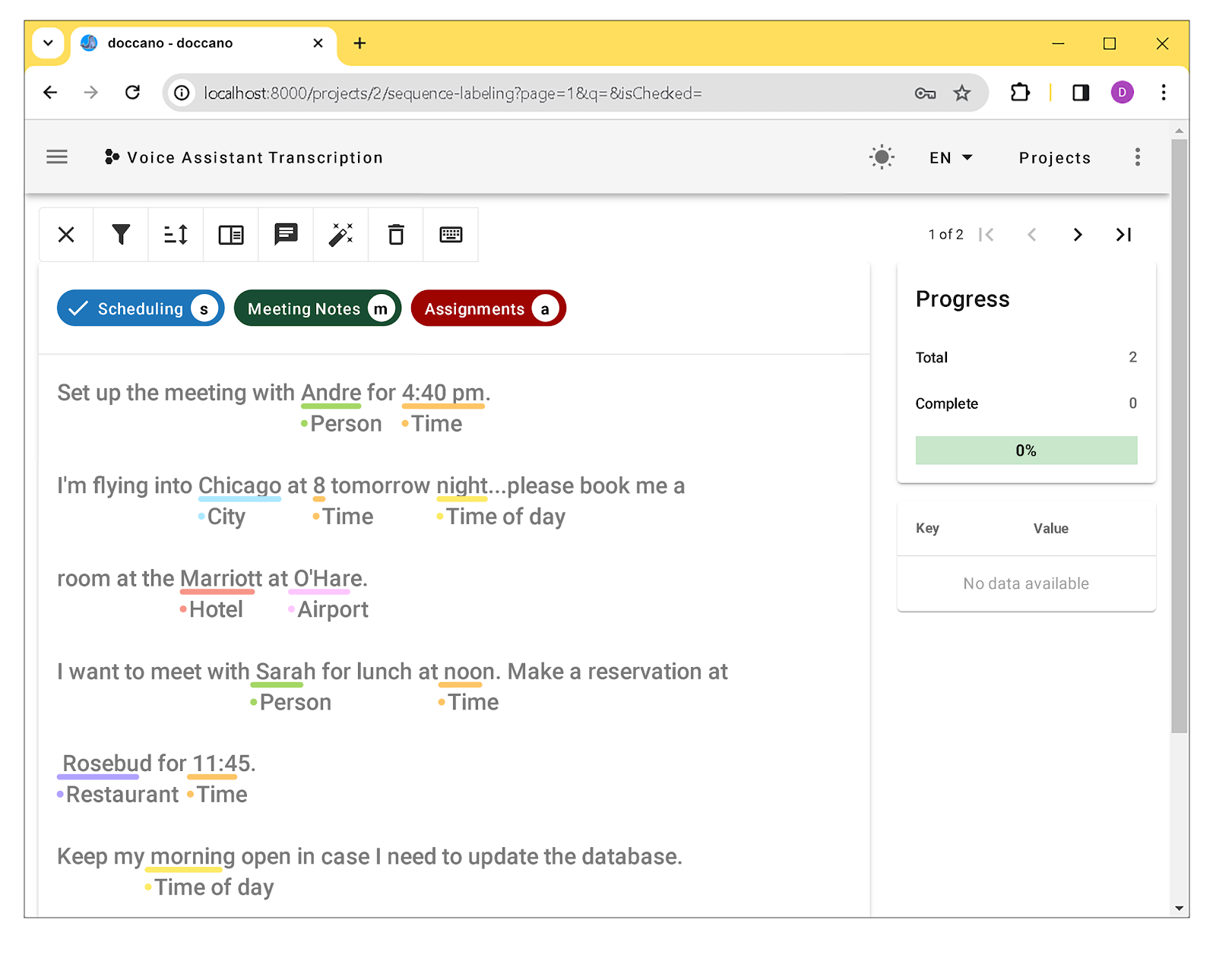

The text analyzed and processed by natural language processing (NLP) algorithms can be labeled in a variety of different ways, including sentiment analysis (identifying positive or negative emotions), keyword extraction (finding relevant phrases), and named entity recognition (pointing out specific people or places). Text blurbs can also be classified; examples include determining whether or not an email is spam or identifying the language of the text. NLP models can be used in applications such as chatbots, coding assistants, translators, and search engines.

Audio data is used in a variety of applications, including sound classification, voice recognition, speech recognition, and acoustic analysis. Audio files might be annotated to identify specific words or phrases (like “Hey Siri”), classify different types of sounds, or transcribe spoken words into written text.

Many ML models are multimodal; in other words, they are able to interpret information from multiple sources simultaneously. A self-driving car might combine visual information, like traffic signs and pedestrians, with audio data, such as a honking horn. With multimodal data labeling, human annotators combine and label different types of data, capturing the relationships and interactions between them.

Another important consideration before building your system is the appropriate data labeling method for your use case. Data labeling has traditionally been performed by human annotators; however, advancements in ML are increasing the potential for automation, making the process more efficient and affordable. Although the accuracy of automated labeling tools is improving, they still cannot match the accuracy and reliability that human labelers provide.

Hybrid or human-in-the-loop (HTL) data labeling combines the strengths of human annotators and software. With HTL data labeling, AI is used to automate the initial creation of the labels, after which the results are validated and corrected by human annotators. The corrected annotations are added to the training dataset and used to improve the performance of the software. The HTL approach offers efficiency and scalability while maintaining accuracy and consistency, and is currently the most popular method of data labeling.
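One round of this loop can be sketched as follows. Everything here is a stand-in: `keyword_prelabel` plays the role of the automated pre-labeler, `human_review` simulates an annotator with a lookup table, and `run_htl_round` is a hypothetical name for the orchestration step. A real system would call an ML model and an annotation UI instead.

```python
def run_htl_round(items, prelabel, review):
    """Pre-label each item automatically, then let a human confirm or correct.

    Returns the validated (item, label) rows destined for the training set
    and the number of suggestions the reviewer had to correct.
    """
    training_rows = []
    corrections = 0
    for item in items:
        suggested = prelabel(item)        # automated first pass
        final = review(item, suggested)   # human validation step
        if final != suggested:
            corrections += 1
        training_rows.append((item, final))
    return training_rows, corrections


def keyword_prelabel(text):
    """Toy pre-labeler (assumption): 'great' anywhere means positive."""
    return "positive" if "great" in text else "negative"


# Simulated annotator decisions, keyed by item (illustrative data only).
HUMAN_LABELS = {
    "great phone": "positive",
    "not great at all": "negative",
    "terrible battery": "negative",
}


def human_review(text, suggested):
    """Return the annotator's label, falling back to the suggestion."""
    return HUMAN_LABELS.get(text, suggested)


rows, corrected = run_htl_round(list(HUMAN_LABELS), keyword_prelabel, human_review)
# The pre-labeler gets "not great at all" wrong, so one correction is recorded.
```

Tracking the correction count per round gives a rough signal of how much the pre-labeler is improving as corrected rows are fed back into its training data.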

Choosing the Components of a Data Labeling System

When designing a data labeling architecture, the right tools are key to making sure that the annotation workflow is efficient and reliable. There are a variety of tools and platforms designed to optimize the data labeling process, but based on your project’s requirements, you may find that building a data labeling pipeline with in-house tools is the most appropriate for your needs.

Core Steps in a Data Labeling Workflow

The labeling pipeline begins with data collection and storage. Information can be gathered manually through techniques such as interviews, surveys, or questionnaires, or collected in an automated manner via web scraping. If you don’t have the resources to collect data at scale, open-source datasets from platforms such as Kaggle, UCI Machine Learning Repository, Google Dataset Search, and GitHub are a good alternative. Additionally, data sources can be artificially generated using mathematical models to augment real-world data. To store data, cloud platforms such as Amazon S3, Google Cloud Storage, or Microsoft Azure Blob Storage scale with your needs, providing virtually unlimited storage capacity, and offer built-in security features. However, if you are working with highly sensitive data with regulatory compliance requirements, on-premise storage is typically required.

Once the data is collected, the labeling process can begin. The annotation workflow can vary depending on data types, but in general, each significant data point is identified and classified using an HTL approach. There are a variety of platforms available that streamline this complex process, including both open-source (Doccano, Label Studio, CVAT) and commercial (Scale Data Engine, Labelbox, Supervisely, Amazon SageMaker Ground Truth) annotation tools.

After the labels are created, they are reviewed by a QA team to ensure accuracy. Any inconsistencies are typically resolved at this stage through manual approaches, such as majority decision, benchmarking, and consultation with subject matter experts. Inconsistencies can also be mitigated with automated methods, for example, using a statistical algorithm like the Dawid-Skene model to aggregate labels from multiple annotators into a single, more reliable label. Once the correct labels are agreed upon by the key stakeholders, they are referred to as the “ground truth,” and can be used to train ML models. Many free and open-source tools have basic QA workflow and data validation functionality, while commercial tools provide more advanced features, such as machine validation, approval workflow management, and quality metrics tracking.
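The simplest of these aggregation strategies, a majority decision, can be sketched in a few lines. Note that this is plain majority voting, not the Dawid-Skene model, which additionally estimates each annotator's reliability. The function name and the tie-handling policy (return `None` so the item is escalated to an expert) are assumptions.

```python
from collections import Counter


def majority_label(votes):
    """Aggregate one item's labels from several annotators.

    Returns (label, agreement), where agreement is the share of annotators
    who chose the winning label. If the top two labels tie, the label is
    None so the item can be escalated to a subject matter expert.
    """
    counts = Counter(votes).most_common(2)
    top_label, top_votes = counts[0]
    agreement = top_votes / len(votes)
    if len(counts) > 1 and counts[1][1] == top_votes:
        return None, agreement
    return top_label, agreement


label, agreement = majority_label(["cat", "cat", "dog"])  # "cat", two of three votes
tied, _ = majority_label(["cat", "dog"])                   # None: needs expert review
```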

Data Labeling Tool Comparison

Open-source tools are a good starting point for data labeling. While their functionality may be limited compared to commercial tools, the absence of licensing fees is a significant advantage for smaller projects. While commercial tools often feature AI-assisted pre-labeling, many open-source tools also support pre-labeling when connected to an external ML model.

| Name | Supported data types | Workflow management | QA | Support for cloud storage | Additional notes |
|---|---|---|---|---|---|
| Label Studio Community Edition | | Yes | No | | |
| CVAT | | Yes | Yes | | |
| Doccano | | Yes | No | | |
| VIA (VGG Image Annotator) | | No | No | No | |
| | | No | No | No | |

While open-source platforms provide much of the functionality needed for a data labeling project, complex machine learning projects requiring advanced annotation features, automation, and scalability will benefit from the use of a commercial platform. With added security features, technical support, comprehensive pre-labeling functionality (assisted by included ML models), and dashboards for visualizing analytics, a commercial data labeling platform is often well worth the additional cost.

| Name | Supported data types | Workflow management | QA | Support for cloud storage | Additional notes |
|---|---|---|---|---|---|
| Labelbox | | Yes | Yes | | |
| Supervisely | | Yes | Yes | | |
| Amazon SageMaker Ground Truth | | Yes | Yes | | |
| Scale AI Data Engine | | Yes | Yes | | |
| | | Yes | Yes | | |

If you require features that are not available with existing tools, you may opt to build an in-house data labeling platform, enabling you to customize support for specific data formats and annotation tasks, as well as design custom pre-labeling, review, and QA workflows. However, building and maintaining a platform that is on par with the functionalities of a commercial platform is cost prohibitive for most companies.

Ultimately, the choice depends on various factors. If third-party platforms do not have the features that the project requires or if the project involves highly sensitive data, a custom-built platform might be the best solution. Some projects may benefit from a hybrid approach, where core labeling tasks are handled by a commercial platform, but custom functionality is developed in-house.

Ensuring Quality and Security in Data Labeling Systems

The data labeling pipeline is a complex system that involves massive amounts of data, multiple levels of infrastructure, a team of labelers, and an elaborate, multilayered workflow. Bringing these components together into a smoothly running system is not a trivial task. There are challenges that can affect labeling quality, reliability, and efficiency, as well as the ever-present issues of privacy and security.

Improving Accuracy in Labeling

Automation can speed up the labeling process, but overdependence on automated labeling tools can reduce the accuracy of labels. Data labeling tasks typically require contextual awareness, domain expertise, or subjective judgment, none of which a software algorithm can yet provide. Providing clear human annotation guidelines and detecting labeling errors are two effective methods for ensuring data labeling quality.

Inaccuracies in the annotation process can be minimized by creating a comprehensive set of guidelines. All potential label classifications should be defined, and the formats of labels specified. The annotation guidelines should include step-by-step instructions, with guidance for ambiguity and edge cases. There should also be a variety of example annotations for labelers to follow, covering straightforward data points as well as ambiguous ones.

Having more than one independent annotator label the same data point and comparing their results will yield a higher degree of accuracy. Inter-annotator agreement (IAA) is a key metric used to measure labeling consistency between annotators. For data points with low IAA scores, a review process should be established in order to reach consensus on a label. Setting a minimum consensus threshold for IAA scores ensures that the ML model only learns from data with a high degree of agreement between labelers.
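One common way to quantify IAA between two annotators is Cohen's kappa, which corrects the raw agreement rate for the agreement expected by chance. The text does not say which IAA statistic to use, so treat the choice of kappa, the function name, and the sample labels below as assumptions; kappa is also computed over a batch of items rather than per data point.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement
    rate and p_e is the agreement expected if both annotators labeled at
    random according to their own label frequencies.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:  # both annotators used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = [1, 1, 0, 0, 1, 0, 1, 0]
annotator_2 = [1, 0, 0, 0, 1, 0, 1, 1]
kappa = cohens_kappa(annotator_1, annotator_2)
# Observed agreement is 6/8 = 0.75 and chance agreement is 0.5, so kappa = 0.5.
```

A batch whose kappa falls below the team's chosen threshold can then be routed to the review process rather than straight into the training set.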

In addition, rigorous error detection and tracking go a long way in improving annotation accuracy. Error detection can be automated using software tools like Cleanlab. With such tools, labeled data can be compared against predefined rules to detect inconsistencies or outliers. For images, the software might flag overlapping bounding boxes. With text, missing annotations or incorrect label formats can be automatically detected. All errors are highlighted for review by the QA team. Also, many commercial annotation platforms offer AI-assisted error detection, where potential errors are flagged by an ML model pretrained on annotated data. Flagged and reviewed data points are then added to the model’s training data, improving its accuracy via active learning.
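As an illustration of a rule-based check, the sketch below flags pairs of bounding boxes in one image whose overlap exceeds a threshold, measured by intersection over union (IoU). It is a hand-rolled example and does not use Cleanlab's API; the box format `(x1, y1, x2, y2)`, the function names, and the 0.5 threshold are assumptions.

```python
from itertools import combinations


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def flag_overlaps(boxes, threshold=0.5):
    """Return index pairs of boxes that overlap more than the threshold."""
    return [(i, j)
            for (i, a), (j, b) in combinations(enumerate(boxes), 2)
            if iou(a, b) > threshold]


# Hypothetical annotations for one image: the first two boxes nearly coincide,
# which often indicates the same object was labeled twice.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
flagged = flag_overlaps(boxes)   # [(0, 1)]
```

Flagged pairs would be queued for QA review rather than deleted, since heavily overlapping boxes are sometimes legitimate (for example, one object partly hidden behind another).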

Error tracking provides the valuable feedback necessary to improve the labeling process via continuous learning. Key metrics, such as label accuracy and consistency between labelers, are tracked. If there are tasks where labelers frequently make errors, the underlying causes need to be determined. Many commercial data labeling platforms provide built-in dashboards that enable labeling history and error distribution to be visualized. Methods of improving performance can include adjusting data labeling standards and guidelines to clarify ambiguous instructions, retraining labelers, or refining the rules for error detection algorithms.

Addressing Bias and Fairness

Data labeling relies heavily on personal judgment and interpretation, making it a challenge for human annotators to create fair and unbiased labels. Data can be ambiguous. When classifying text data, sentiments such as sarcasm or humor can easily be misinterpreted. A facial expression in an image might be considered “sad” to some labelers and “bored” to others. This subjectivity can open the door to bias.

The dataset itself can also be biased. Depending on the source, specific demographics and viewpoints can be over- or underrepresented. Training a model on biased data can cause inaccurate predictions, for example, incorrect diagnoses due to bias in medical datasets.

To reduce bias in the annotation process, the members of the labeling and QA teams should have diverse backgrounds and perspectives. Double- and multilabeling can also minimize the impact of individual biases. The training data should reflect real-world data, with a balanced representation of factors such as demographics and geographic location. Data can be collected from a wider range of sources, and if necessary, data can be added to specifically address potential sources of bias. In addition, data augmentation techniques, such as image flipping or text paraphrasing, can minimize inherent biases by artificially increasing the diversity of the dataset. These methods present variations on the original data point. Flipping an image enables the model to learn to recognize an object regardless of the way it is facing, reducing bias toward specific orientations. Paraphrasing text exposes the model to additional ways of expressing the information in the data point, reducing potential biases caused by specific words or phrasing.
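For labeled images, flipping has one practical wrinkle: the annotations must be flipped along with the pixels. The sketch below mirrors a tiny image, represented as a list of pixel rows, together with its bounding boxes. The representation, the `(x1, y1, x2, y2)` box format with exclusive right edges, and the function name are assumptions for illustration.

```python
def flip_horizontal(image, boxes):
    """Mirror an image left to right and move its bounding boxes to match.

    image: list of rows, each a list of pixel values.
    boxes: list of (x1, y1, x2, y2) with x2 and y2 exclusive.
    """
    width = len(image[0])
    flipped_image = [row[::-1] for row in image]
    # A box spanning columns [x1, x2) lands on [width - x2, width - x1).
    flipped_boxes = [(width - x2, y1, width - x1, y2)
                     for (x1, y1, x2, y2) in boxes]
    return flipped_image, flipped_boxes


image = [[1, 2, 3],
         [4, 5, 6]]
boxes = [(0, 0, 1, 2)]           # the left-hand column (pixels 1 and 4)
new_image, new_boxes = flip_horizontal(image, boxes)
# new_image is [[3, 2, 1], [6, 5, 4]] and the box now covers the right-hand
# column: [(2, 0, 3, 2)].
```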

Incorporating an external oversight process can also help to reduce bias in the data labeling process. An external team (consisting of domain experts, data scientists, ML experts, and diversity and inclusion specialists) can be brought in to review labeling guidelines, evaluate workflow, and audit the labeled data, providing feedback on how to improve the process so that it is fair and unbiased.

Data Privacy and Security

Data labeling projects often involve potentially sensitive information. All platforms should integrate security features such as encryption and multifactor authentication for user access control. To protect privacy, data with personally identifiable information should be removed or anonymized. Additionally, every member of the labeling team should be trained on data security best practices, such as having strong passwords and avoiding accidental data sharing.
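A first pass at removing personally identifiable information from text can be as simple as pattern-based redaction before the data reaches annotators. The sketch below masks email addresses and one US-style phone format; both patterns and the placeholder tokens are illustrative assumptions and are far from complete. Names, addresses, and other identifiers need dedicated tooling and human review.

```python
import re

# Deliberately simple patterns (assumptions): real PII detection needs
# broader coverage than these two regular expressions.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text):
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


sample = "Contact jane.doe@example.com or 555-123-4567"
redacted = redact(sample)   # "Contact [EMAIL] or [PHONE]"
```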

Data labeling platforms should also comply with relevant data privacy regulations, including the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), as well as the Health Insurance Portability and Accountability Act (HIPAA). Many commercial data platforms are SOC 2 Type 2 certified, meaning they have been audited by an external party and found to comply with the five trust principles: security, availability, processing integrity, confidentiality, and privacy.

Future-proofing Your Data Labeling System

Data labeling is an invisible but massive undertaking that plays a pivotal role in the development of ML models and AI systems, and labeling architecture must be able to scale as requirements change.

Commercial and open-source platforms are regularly updated to support emerging data labeling needs. Likewise, in-house data labeling solutions should be developed with easy updating in mind. Modular design enables components to be swapped out without affecting the rest of the system, for example. And integrating open-source libraries or frameworks adds adaptability, because they are constantly being updated as the industry evolves.
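One way to get that kind of swappability in an in-house system is to have the pipeline depend on a small interface rather than on a concrete component. The sketch below uses a `typing.Protocol` for a pre-labeling component; the interface and both implementations are hypothetical names invented for this example.

```python
from typing import Protocol


class PreLabeler(Protocol):
    """Anything with a suggest() method can serve as the pre-labeling step."""

    def suggest(self, text: str) -> str: ...


class KeywordPreLabeler:
    """Toy rule-based component (assumption): flags one known spam phrase."""

    def suggest(self, text: str) -> str:
        return "spam" if "free money" in text.lower() else "ham"


class AlwaysHamPreLabeler:
    """Trivial baseline that could later be replaced by an ML model."""

    def suggest(self, text: str) -> str:
        return "ham"


def prelabel_batch(texts: list[str], labeler: PreLabeler) -> list[str]:
    """The pipeline only knows the interface, so components are swappable."""
    return [labeler.suggest(t) for t in texts]


emails = ["Free money now", "Lunch at noon?"]
rule_based = prelabel_batch(emails, KeywordPreLabeler())    # ["spam", "ham"]
baseline = prelabel_batch(emails, AlwaysHamPreLabeler())    # ["ham", "ham"]
```

Replacing the rule-based component with a model-backed one then requires no change to `prelabel_batch` or anything downstream of it.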

In particular, cloud-based solutions offer significant advantages for large-scale data labeling projects over self-managed systems. Cloud platforms can dynamically scale their storage and processing power as needed, eliminating the need for expensive infrastructure upgrades.

The annotating workforce must also be able to scale as datasets grow. New annotators need to be trained quickly on how to label data accurately and efficiently. Filling the gaps with managed data labeling services or on-demand annotators allows for flexible scaling based on project needs. That said, the training and onboarding process must also be scalable with respect to location, language, and availability.

The key to ML model accuracy is the quality of the labeled data that the models are trained on, and effective, hybrid data labeling systems offer AI the potential to improve the way we do things and make virtually every business more efficient.