An Introduction to Time Series Forecasting with Generative AI

Time series forecasting has been a cornerstone of enterprise resource planning for decades. Predictions about future demand guide critical decisions such as the number of units to stock, the labor to hire, capital investments in production and fulfillment infrastructure, and the pricing of goods and services. Accurate demand forecasts are essential for these and many other business decisions.

However, forecasts are rarely if ever perfect. In the mid-2010s, many organizations dealing with computational limitations and limited access to advanced forecasting capabilities reported forecast accuracies of only 50-60%. But with the wider adoption of the cloud, the introduction of far more accessible technologies, and the improved availability of external data sources such as weather and event data, organizations are beginning to see improvements.

As we enter the era of generative AI, a new class of models called time series transformers appears capable of helping organizations deliver even more improvement. Similar to large language models (like ChatGPT) that excel at predicting the next word in a sentence, time series transformers predict the next value in a numerical sequence. With exposure to large volumes of time series data, these models become experts at picking up on subtle patterns of relationships between the values in these series, with demonstrated success across a variety of domains.
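To make the next-word analogy concrete, here is a minimal sketch of autoregressive forecasting: a one-step-ahead predictor is applied repeatedly, and each prediction is appended to the context before the next step. The `predict_next` argument stands in for a trained transformer; the seasonal-naive rule used here is a hypothetical placeholder, not how any of the models discussed below work internally, and some real models emit several values per step rather than one.

```python
from typing import Callable, List, Sequence


def autoregressive_forecast(
    history: Sequence[float],
    predict_next: Callable[[Sequence[float]], float],
    horizon: int,
) -> List[float]:
    """Roll a one-step-ahead predictor forward `horizon` steps.

    Each prediction is appended to the context so the next step can
    condition on it, the same way a language model appends each
    generated word before predicting the following one.
    """
    context = list(history)
    forecasts: List[float] = []
    for _ in range(horizon):
        next_value = predict_next(context)
        forecasts.append(next_value)
        context.append(next_value)  # feed the prediction back in
    return forecasts


def seasonal_naive(context: Sequence[float], season: int = 7) -> float:
    """Hypothetical stand-in for a transformer: repeat the value from one season ago."""
    return context[-season]


# Two weeks of daily demand with a weekly pattern.
history = [10, 12, 13, 12, 15, 20, 18, 11, 12, 14, 12, 16, 21, 19]
print(autoregressive_forecast(history, seasonal_naive, horizon=3))  # [11, 12, 14]
```

Swapping `seasonal_naive` for a call into a pre-trained model leaves the loop unchanged, which is the point of the analogy: the model only ever answers "what comes next?"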

In this blog, we'll provide a high-level introduction to this class of forecasting models, intended to help managers, analysts and data scientists develop a basic understanding of how they work. We'll then provide access to a series of notebooks, built around publicly available datasets, demonstrating how organizations housing their data in Databricks can easily tap into several of the most popular of these models for their forecasting needs. We hope this helps organizations tap into the potential of generative AI for driving better forecast accuracy.

Understanding Time Series Transformers

Generative AI models are a form of deep neural network, a complex machine learning model within which numerous inputs are combined in a variety of ways to arrive at a predicted value. The mechanics of how the model learns to combine inputs to arrive at an accurate prediction are referred to as the model's architecture.

The breakthrough in deep neural networks that has given rise to generative AI has been the design of a specialized model architecture called a transformer. While the exact details of how transformers differ from other deep neural network architectures are quite complex, the simple matter is that the transformer is very good at picking up on the complex relationships between values in long sequences.

To train a time series transformer, an appropriately architected deep neural network is exposed to a large volume of time series data. After it has had the opportunity to train on millions if not billions of time series values, it learns the complex patterns of relationships found in these datasets. When it is then exposed to a previously unseen time series, it can use this foundational knowledge to identify where similar patterns of relationships exist within that series and predict new values in the sequence.

This process of learning relationships from large volumes of data is referred to as pre-training. Because the knowledge gained by the model during pre-training is highly generalizable, pre-trained models, referred to as foundation models, can be employed against previously unseen time series without additional training. That said, additional training on an organization's proprietary data, a process referred to as fine-tuning, may in some instances help the organization achieve even better forecast accuracy. Either way, once the model is deemed to be in a satisfactory state, the organization simply needs to present it with a time series and ask, what comes next?

Addressing Common Time Series Challenges

While this high-level understanding of a time series transformer may make sense, most forecast practitioners will likely have three immediate questions. First, while two time series may follow a similar pattern, they may operate at completely different scales; how does a transformer overcome that problem? Second, most time series contain daily, weekly and annual patterns of seasonality that need to be considered; how do models know to look for these patterns? Third, many time series are influenced by external factors; how can this information be incorporated into the forecast generation process?

The first of these challenges is addressed by mathematically standardizing all time series data using a set of techniques referred to as scaling. The mechanics of this are internal to each model's architecture, but essentially incoming time series values are converted to a standard scale that allows the model to recognize patterns in the data based on its foundational knowledge. Predictions are made on that standard scale and are then converted back to the scale of the original data.
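As a rough illustration of the scale-forecast-unscale round trip, the sketch below standardizes each series using its own mean and standard deviation. This z-score scheme is one common choice and an assumption here; each model uses its own internal scaling method, and this is not taken from any particular one. Two series with the same shape but very different magnitudes become identical after scaling, which is what lets a single model recognize the shared pattern.

```python
import numpy as np


def standard_scale(context: np.ndarray):
    """Standardize a series using statistics of its own context window."""
    loc = context.mean()
    scale = context.std()
    if scale == 0:
        scale = 1.0  # avoid division by zero on a flat series
    return (context - loc) / scale, loc, scale


def inverse_scale(values: np.ndarray, loc: float, scale: float) -> np.ndarray:
    """Map model outputs on the standard scale back to the original units."""
    return values * scale + loc


# Two series with the same shape but very different magnitudes.
small = np.array([1.0, 2.0, 3.0, 2.0, 1.0])
large = small * 1000 + 50_000

scaled_small, _, _ = standard_scale(small)
scaled_large, loc, scale = standard_scale(large)
print(np.allclose(scaled_small, scaled_large))  # True: same pattern after scaling

# Pretend a model predicted these values on the standardized scale.
scaled_forecast = scaled_large[:2]
print(inverse_scale(scaled_forecast, loc, scale))  # back in the units of `large`
```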

When it comes to seasonal patterns, at the heart of the transformer architecture is a process referred to as self-attention. While this process is quite complex, fundamentally this mechanism allows the model to learn the degree to which specific prior values influence a given future value.
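For readers who want to see the mechanism, below is a minimal single-head scaled dot-product self-attention in NumPy, with a causal mask so each position can only look at itself and earlier positions (as in decoder-style models). The projection matrices here are random rather than learned, so the resulting weights are meaningless; the sketch only shows the structure. In a trained model, a large `weights[i, j]` would indicate that the token at position `j` strongly influences the prediction at position `i`, which is how, for example, a value from exactly one week earlier can come to matter.

```python
import numpy as np


def self_attention(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, w_v: np.ndarray):
    """Single-head scaled dot-product self-attention with a causal mask.

    x has shape (seq_len, d_model); each row is one embedded token.
    Returns the attended outputs and the (seq_len, seq_len) weight matrix,
    where weights[i, j] is how much position i draws on position j.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Causal mask: a position may only attend to itself and earlier positions.
    seq_len = x.shape[0]
    mask = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)
    # Row-wise softmax (subtract the row max for numerical stability).
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model = 6, 4
x = rng.normal(size=(seq_len, d_model))  # stand-in for embedded tokens
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print(weights.round(2))  # each row sums to 1; entries above the diagonal are 0
```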

While that sounds like the solution for seasonality, it's important to understand that models differ in their ability to pick up on low-level patterns of seasonality based on how they divide time series inputs. Through a process referred to as tokenization, the values in a time series are divided into units called tokens. A token may be a single time series value or a short sequence of values (often referred to as a patch).

The size of the token determines the lowest level of granularity at which seasonal patterns can be detected. (Tokenization also defines the logic for dealing with missing values.) When exploring a particular model, it's important to read the often technical documentation around tokenization to understand whether the model is appropriate for your data.
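The sketch below shows patch-style tokenization in its simplest form: a series is cut into non-overlapping patches of `patch_len` values, and missing values are zero-filled and flagged in a mask. The left-padding and zero-fill-with-mask choices are assumptions for illustration; each model documents its own rules. With `patch_len=4` on hourly data, each token covers four hours of observations.

```python
import numpy as np


def patchify(series: np.ndarray, patch_len: int):
    """Split a 1-D series into non-overlapping patches (tokens).

    The front is left-padded with NaN so the length divides evenly,
    keeping the most recent values aligned to the final patch.
    Missing values (NaN) are replaced with 0 and flagged in a mask
    so that a model could learn to ignore them.
    """
    pad = (-len(series)) % patch_len
    padded = np.concatenate([np.full(pad, np.nan), series])
    patches = padded.reshape(-1, patch_len)
    observed = ~np.isnan(patches)  # True where a real value exists
    patches = np.where(observed, patches, 0.0)
    return patches, observed


hourly = np.arange(10, dtype=float)
hourly[4] = np.nan  # one missing observation
patches, observed = patchify(hourly, patch_len=4)
print(patches.shape)  # (3, 4): 10 values + 2 padding -> 3 tokens of 4 values
print(observed)
```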

Finally, regarding external variables, time series transformers employ a variety of approaches. In some, models are trained on both time series data and related external variables. In others, models are architected to understand that a single time series may be composed of multiple, parallel, related sequences. Regardless of the precise technique employed, some limited support for external variables can be found with these models.
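One hypothetical way to present a model with multiple parallel, related sequences is to flatten them into a single token stream and tag every value with which sequence it came from and which time step it belongs to. The sketch below does exactly that for a target series and one external variable; it loosely mirrors the idea described above and is not the input format of any specific model.

```python
import numpy as np


def flatten_with_ids(target: np.ndarray, covariates: np.ndarray):
    """Flatten a target series and aligned covariates into one token stream.

    target: shape (T,); covariates: shape (n_covariates, T).
    Returns the values plus variate ids (0 = target) and time ids so a
    model can tell which sequence and which time step each value belongs to.
    """
    stacked = np.vstack([target, covariates])  # (n_vars, T)
    n_vars, n_steps = stacked.shape
    variate_id = np.repeat(np.arange(n_vars), n_steps)  # which sequence
    time_id = np.tile(np.arange(n_steps), n_vars)  # which time step
    return stacked.ravel(), variate_id, time_id


sales = np.array([100.0, 120.0, 90.0])
temperature = np.array([[21.0, 25.0, 18.0]])  # hypothetical external variable
values, variate_id, time_id = flatten_with_ids(sales, temperature)
print(values)
print(variate_id)
print(time_id)
```

Because values that share a `time_id` are aligned, attention can relate a day's sales to that day's temperature even though they sit at different positions in the stream.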

A Brief Look at Four Popular Time Series Transformers

With a high-level understanding of time series transformers under our belt, let's take a moment to look at four popular foundation time series transformer models:

Chronos

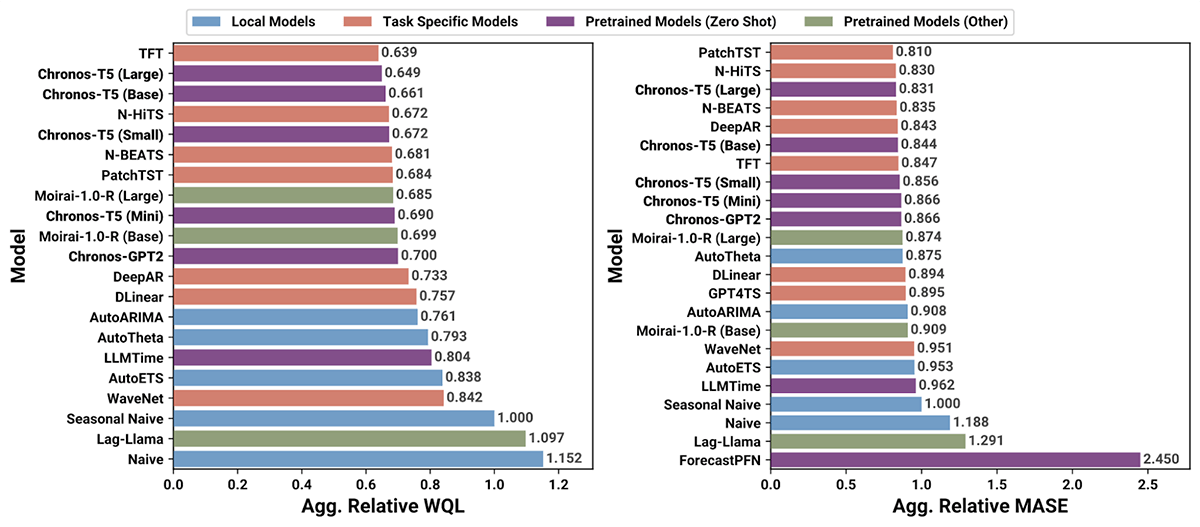

Chronos is a family of open-source, pre-trained time series forecasting models from Amazon. These models take a relatively naive approach to forecasting by interpreting a time series as just a specialized language with its own patterns of relationships between tokens. Despite this relatively simplistic approach, which includes support for missing values but not external variables, the Chronos family of models has demonstrated some impressive results as a general-purpose forecasting solution (Figure 1).
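To show what "treating a time series as a language" can mean, the sketch below turns real values into integer token ids by first mean-scaling the series (dividing by its mean absolute value) and then assigning each scaled value to one of a fixed set of uniform bins, and maps ids back to values via bin centers. It is loosely modeled on the scaling-and-binning idea behind Chronos; the vocabulary size (100 bins) and range ([-5, 5]) are illustrative assumptions, not the model's actual settings.

```python
import numpy as np


def to_tokens(context: np.ndarray, n_bins: int = 100, low: float = -5.0, high: float = 5.0):
    """Turn real values into integer token ids, language-model style.

    1. Mean-scale: divide by the mean absolute value of the context.
    2. Quantize: map each scaled value to one of `n_bins` uniform bins.
    """
    scale = np.abs(context).mean()
    scale = scale if scale > 0 else 1.0
    scaled = context / scale
    edges = np.linspace(low, high, n_bins + 1)
    # Bin index for each value; values outside [low, high] go to the end bins.
    ids = np.clip(np.digitize(scaled, edges) - 1, 0, n_bins - 1)
    return ids, scale, edges


def from_tokens(ids: np.ndarray, scale: float, edges: np.ndarray) -> np.ndarray:
    """Map token ids back to values using bin centers, then undo the scaling."""
    centers = (edges[:-1] + edges[1:]) / 2
    return centers[ids] * scale


context = np.array([12.0, 18.0, 25.0, 31.0, 40.0])
ids, scale, edges = to_tokens(context)
reconstructed = from_tokens(ids, scale, edges)
print(ids)  # a "sentence" of token ids a language model could consume
print(reconstructed.round(1))  # close to the original, within half a bin
```

The quantization step is lossy by design: finer bins reduce reconstruction error at the cost of a larger vocabulary.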

Figure 1. Evaluation metrics for Chronos and various other forecasting models applied to 27 benchmark datasets (from https://github.com/amazon-science/chronos-forecasting)

TimesFM

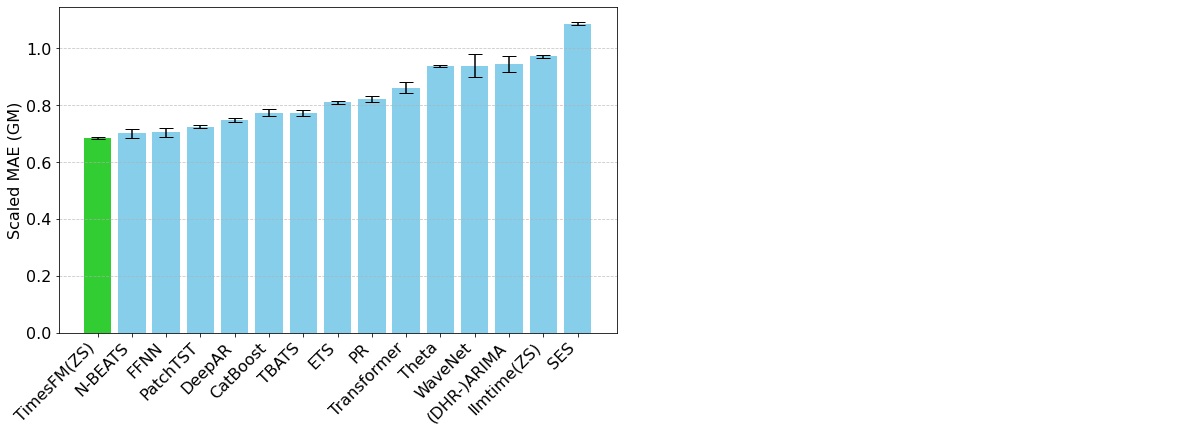

TimesFM is an open-source foundation model developed by Google Research, pre-trained on over 100 billion real-world time series points. Unlike Chronos, TimesFM includes some time series-specific mechanisms in its architecture that enable the user to exert fine-grained control over how inputs and outputs are organized. This affects not only how seasonal patterns are detected but also the computation time associated with the model. TimesFM has proven itself to be a very powerful and flexible time series forecasting tool (Figure 2).
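A back-of-the-envelope calculation shows why the organization of inputs and outputs affects computation time. In a simplified model of a patch-based decoder, the context is split into input patches, and each forward pass emits one output patch, so a longer output patch means fewer passes for the same horizon. The patch lengths below are illustrative assumptions; consult the model's own documentation for its actual settings.

```python
import math


def decoding_cost(context_len: int, horizon: int, input_patch: int, output_patch: int):
    """Count input tokens and autoregressive decoding steps for a patch-based decoder.

    Simplified: each decoding step is one forward pass that emits
    `output_patch` future values.
    """
    input_tokens = math.ceil(context_len / input_patch)
    decode_steps = math.ceil(horizon / output_patch)
    return input_tokens, decode_steps


# Illustrative settings only.
print(decoding_cost(context_len=512, horizon=256, input_patch=32, output_patch=128))  # (16, 2)
print(decoding_cost(context_len=512, horizon=256, input_patch=32, output_patch=32))  # (16, 8)
```

Quadrupling the output patch length cuts the number of forward passes from 8 to 2 here, while the input patch length governs how many tokens the attention layers must process.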

Figure 2. Evaluation metrics for TimesFM and various other models against the Monash Forecasting Archive dataset (from https://research.google/blog/a-decoder-only-foundation-model-for-time-series-forecasting/)

Moirai

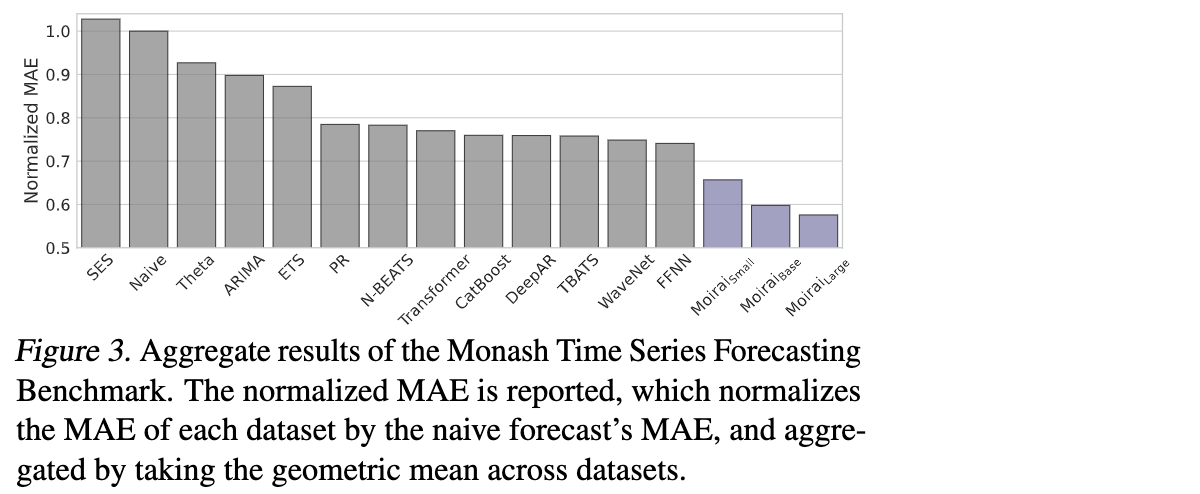

Moirai, developed by Salesforce AI Research, is another open-source foundation model for time series forecasting. Trained on “27 billion observations spanning 9 distinct domains”, Moirai is presented as a universal forecaster capable of supporting both missing values and external variables. Variable patch sizes allow organizations to tune the model to the seasonal patterns of their datasets and, when applied properly, have been shown to perform quite well against other models (Figure 3).

Figure 3. Evaluation metrics for Moirai and various other models against the Monash Time Series Forecasting Benchmark (from https://blog.salesforceairesearch.com/moirai/)

TimeGPT

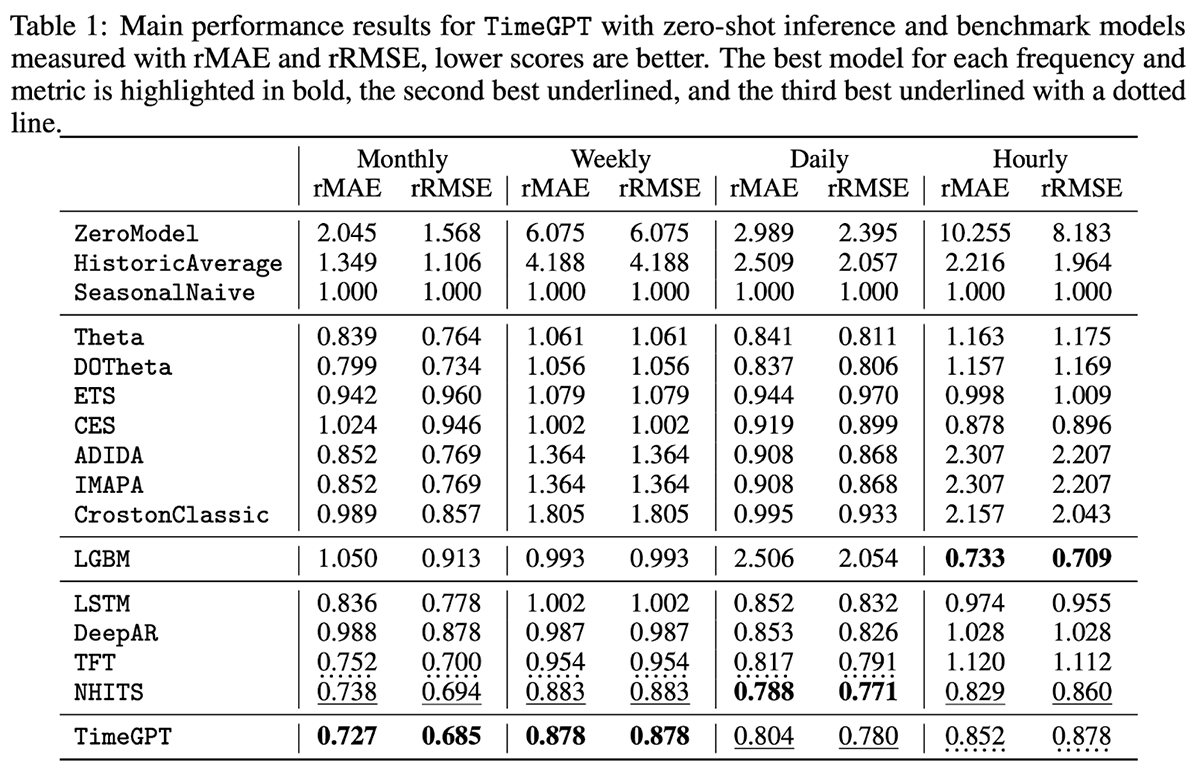

TimeGPT is a proprietary model with support for external (exogenous) variables but not missing values. Focused on ease of use, TimeGPT is hosted through a public API that allows organizations to generate forecasts with as little as a single line of code. In benchmarks against 300,000 unique series at different levels of temporal granularity, the model was shown to produce some impressive results with very little forecasting latency (Figure 4).

Figure 4. Evaluation metrics for TimeGPT and various other models against 300,000 unique series (from https://arxiv.org/pdf/2310.03589)

Getting Started with Transformer Forecasting on Databricks

With so many model options, and more still on the way, the key question for most organizations is how to get started evaluating these models using their own proprietary data. As with any other forecasting approach, organizations using time series forecasting models must present their historical data to the model to create predictions, and those predictions must be carefully evaluated and eventually deployed to downstream systems to make them actionable.
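A common first step in that evaluation is a holdout test: withhold the most recent observations, forecast them from the earlier history, and score the forecast against what actually happened. The sketch below computes a few standard point-forecast metrics. Reporting "forecast accuracy" as 1 minus WAPE (weighted absolute percentage error) is one widely used convention but an assumption here, since organizations define accuracy in different ways. The forecast values are hypothetical placeholders for a model's output.

```python
import numpy as np


def evaluate(actuals: np.ndarray, forecasts: np.ndarray) -> dict:
    """Common point-forecast error metrics on a held-out period."""
    errors = forecasts - actuals
    mae = np.mean(np.abs(errors))
    rmse = np.sqrt(np.mean(errors ** 2))
    wape = np.sum(np.abs(errors)) / np.sum(np.abs(actuals))
    return {"mae": mae, "rmse": rmse, "wape": wape, "accuracy": 1 - wape}


# Hold out the last 4 observations; `context` is what the model would see.
history = np.array([20, 22, 21, 25, 24, 23, 26, 27, 25, 28], dtype=float)
context, holdout = history[:-4], history[-4:]
forecast = np.array([25.0, 26.0, 26.0, 27.0])  # hypothetical model output
print(evaluate(holdout, forecast))
```

Running the same holdout across several candidate models, and across many series, gives a like-for-like comparison before anything is deployed.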

Because of Databricks' scalability and efficient use of cloud resources, many organizations have long used it as the basis for their forecasting work, producing tens of millions of forecasts daily or even more frequently to run their business operations. The introduction of a new class of forecasting models doesn't change the nature of this work; it simply gives these organizations more options for doing it within this environment.

That is not to say these models don't come with some new wrinkles. Built on a deep neural network architecture, many of these models perform best when run on a GPU, and in the case of TimeGPT, they may require API calls to external infrastructure as part of the forecast generation process. But fundamentally, the pattern of housing an organization's historical time series data, presenting that data to a model, and capturing the output to a queryable table remains unchanged.
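That house-present-capture pattern can be sketched in plain Python: group a long-format history table (one row per series and date) by series, hand each series to a model, and emit forecast rows in the same long format so they can be written to a table. The model here is a hypothetical last-value placeholder standing in for a call to one of the foundation models, and daily frequency is assumed. On Databricks, the same per-series function would typically be distributed across a cluster, for example with PySpark's `groupBy(...).applyInPandas(...)`; this sketch stays single-machine for clarity.

```python
from collections import defaultdict
from datetime import date, timedelta
from typing import Callable, Dict, List, Sequence, Tuple

Row = Tuple[str, date, float]  # (series_id, ds, y) in long format


def forecast_table(
    rows: Sequence[Row],
    model: Callable[[List[float], int], List[float]],
    horizon: int,
) -> List[Row]:
    """Group a long-format history table by series, forecast each series,
    and return forecast rows ready to be written to a queryable table.
    Assumes daily data, so forecast dates advance one day per step."""
    by_series: Dict[str, List[Tuple[date, float]]] = defaultdict(list)
    for series_id, ds, y in rows:
        by_series[series_id].append((ds, y))

    output: List[Row] = []
    for series_id, points in by_series.items():
        points.sort()  # order each series by date
        last_date = points[-1][0]
        values = [y for _, y in points]
        for step, yhat in enumerate(model(values, horizon), start=1):
            output.append((series_id, last_date + timedelta(days=step), yhat))
    return output


def last_value_model(values: List[float], horizon: int) -> List[float]:
    """Hypothetical placeholder for a foundation model call: repeat the last value."""
    return [values[-1]] * horizon


history = [
    ("store_1", date(2024, 1, 1), 10.0),
    ("store_1", date(2024, 1, 2), 12.0),
    ("store_2", date(2024, 1, 1), 200.0),
    ("store_2", date(2024, 1, 2), 180.0),
]
for row in forecast_table(history, last_value_model, horizon=2):
    print(row)
```

Because the model is just a function argument, swapping in a different foundation model changes one line while the surrounding data handling stays the same.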

To help organizations understand how they might use these models within a Databricks environment, we've assembled a series of notebooks demonstrating how forecasts can be generated with each of the four models described above. Practitioners may freely download these notebooks and use them within their Databricks environment to gain familiarity with these models. The code provided can then be adapted to other, similar models, giving organizations that use Databricks as the basis for their forecasting efforts more options for using generative AI in their resource planning processes.

Get started with Databricks for forecast modeling today with this series of notebooks.