A hot potato: The global AI industry is quietly crossing an energy threshold that could reshape power grids and climate commitments. New findings reveal that the electricity required to run advanced AI systems could surpass Bitcoin mining's notorious energy appetite by late 2025, with implications that stretch far beyond tech boardrooms.

The rapid expansion of generative AI has triggered a boom in data center construction and hardware manufacturing. As AI applications become more complex and more widely adopted, the specialized hardware that powers them (accelerators from the likes of Nvidia and AMD) has proliferated at an unprecedented rate. This surge has driven a dramatic escalation in energy consumption, with AI expected to account for nearly half of all data center electricity usage by next year, up from about 20 percent today.


This transformation has been meticulously analyzed by Alex de Vries-Gao, a PhD candidate at Vrije Universiteit Amsterdam's Institute for Environmental Studies. His research, published in the journal Joule, draws on public device specifications, analyst forecasts, and corporate disclosures to estimate the production volume and energy consumption of AI hardware.

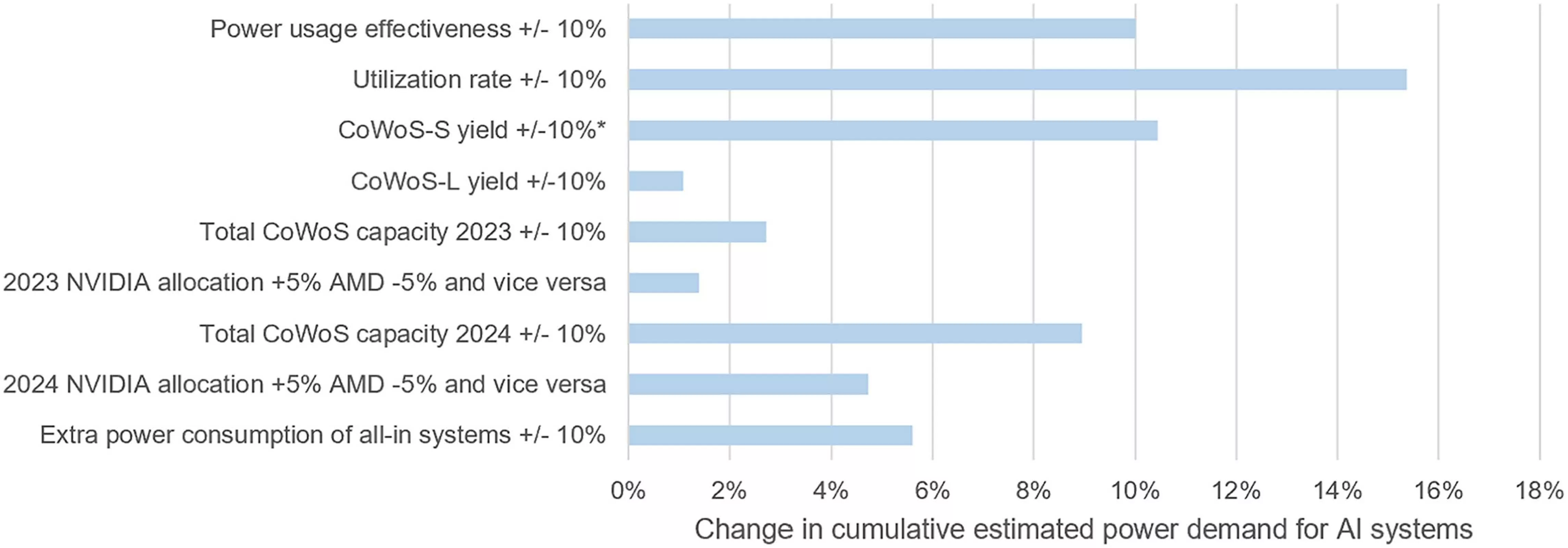

Because major tech companies rarely disclose the electricity consumption of their AI operations, de Vries-Gao used a triangulation method, analyzing the supply chain for advanced chips and the manufacturing capacity of key players such as TSMC.

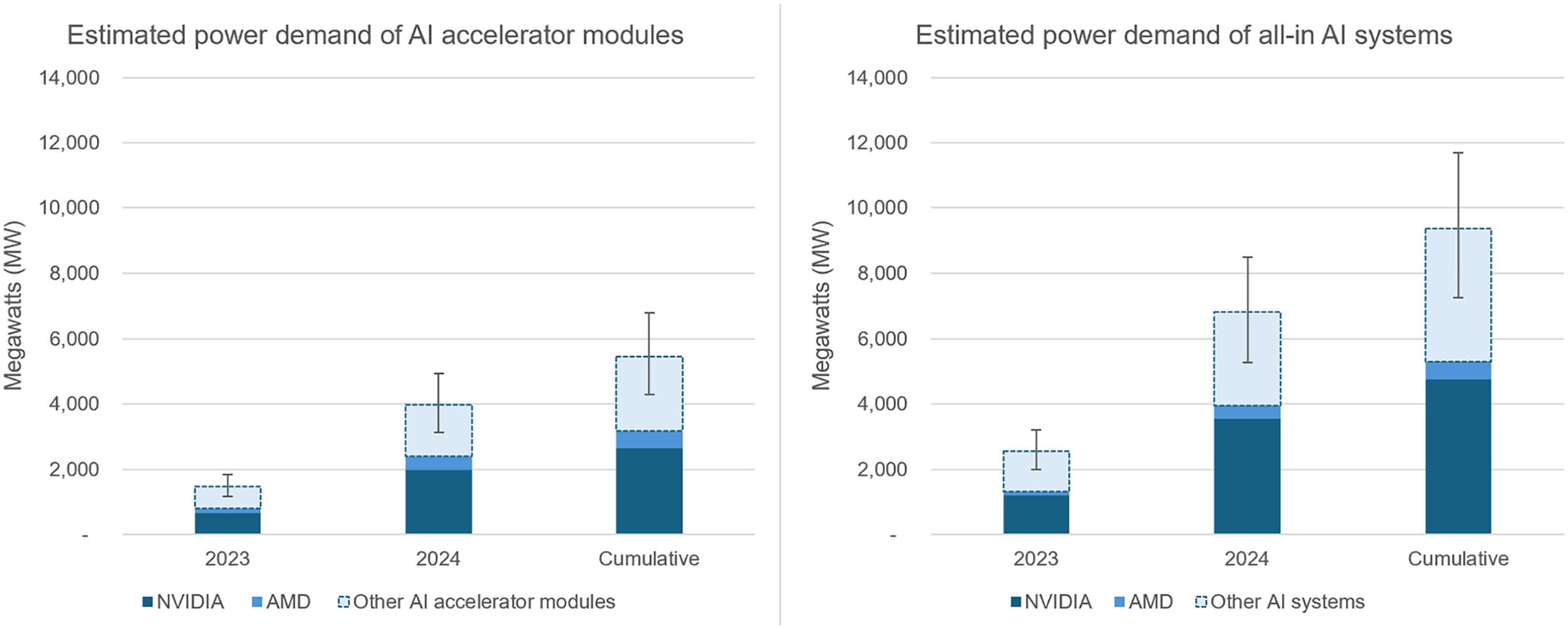

The numbers tell a stark story. Each Nvidia H100 AI accelerator, a staple in modern data centers, consumes 700 watts continuously when running complex models. Multiply that by millions of units, and the cumulative power draw becomes staggering.
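To make the "multiply by millions" step concrete, here is a minimal back-of-the-envelope sketch. Only the 700 W per-accelerator figure comes from the article. The fleet sizes and the `fleet_power_gw` helper are hypothetical, and the calculation counts only the accelerators themselves. It leaves out host servers, networking, and cooling, all of which push real facility totals higher.

```python
# Back-of-the-envelope sketch: combined draw of a fleet of accelerators.
# ASSUMPTIONS: 700 W per unit (figure cited in the article); fleet sizes are
# hypothetical round numbers; servers, networking, and cooling are ignored.

H100_WATTS = 700  # per-accelerator draw cited in the article


def fleet_power_gw(units: int, watts_per_unit: float = H100_WATTS) -> float:
    """Return the combined draw of `units` accelerators in gigawatts."""
    return units * watts_per_unit / 1e9  # watts -> gigawatts


# Hypothetical fleet sizes, chosen only to show the scaling.
for units in (1_000_000, 5_000_000, 10_000_000):
    print(f"{units:>12,} units -> {fleet_power_gw(units):.1f} GW")
```

Each million such chips running flat out adds roughly 0.7 GW of load before any facility overhead is counted.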

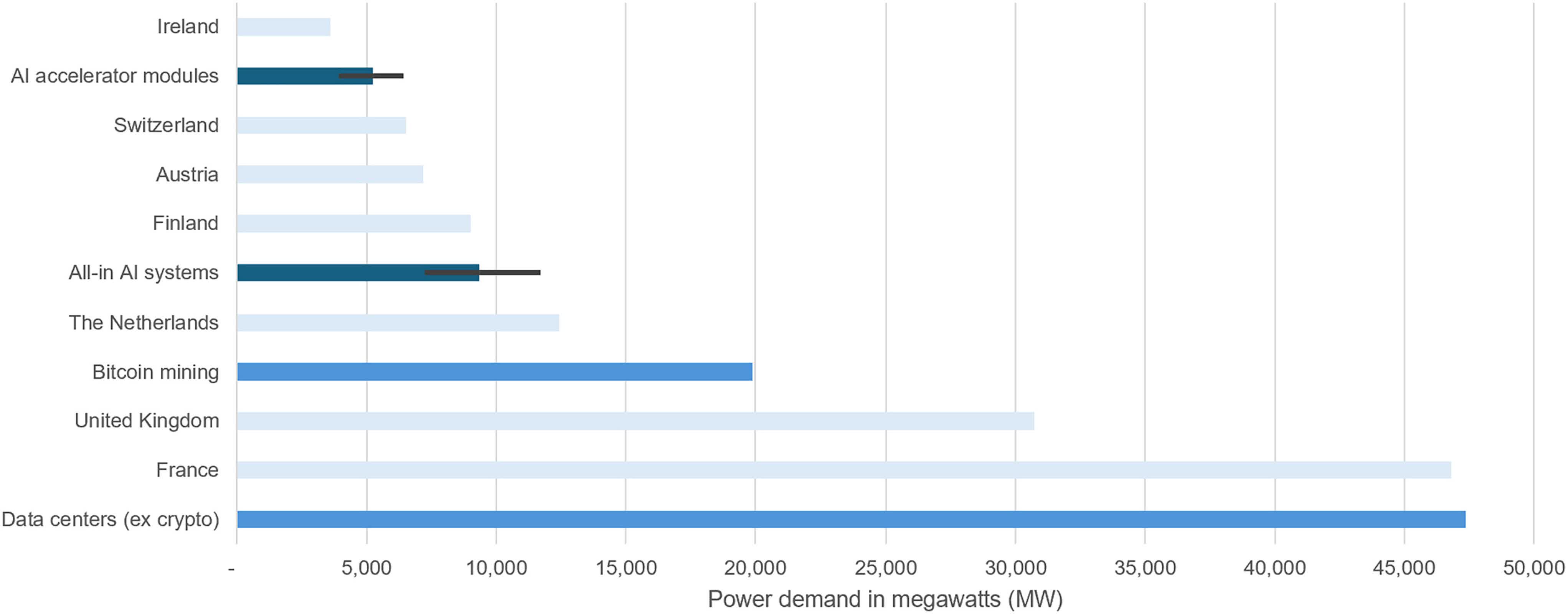

De Vries-Gao estimates that hardware produced in 2023 and 2024 alone could ultimately demand between 5.3 and 9.4 gigawatts, enough to eclipse Ireland's total national electricity consumption.
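Gigawatts measure continuous power, but national electricity consumption is usually quoted as annual energy in terawatt-hours. The sketch below converts between the two. Its assumptions: a 365-day year (8,760 hours), and a hypothetical `utilization` knob that defaults to continuous operation. It converts units only and makes no claim about any country's actual consumption.

```python
# Sketch: convert a continuous power draw (GW) into annual energy (TWh).
# ASSUMPTIONS: 365-day year; `utilization` is a hypothetical fraction of the
# year the hardware runs at that draw (1.0 = continuous, as in the estimate).

HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored


def annual_energy_twh(gigawatts: float, utilization: float = 1.0) -> float:
    """Energy in TWh if `gigawatts` is drawn for `utilization` of a year."""
    # GW x hours = GWh; divide by 1,000 to get TWh.
    return gigawatts * HOURS_PER_YEAR * utilization / 1_000


# The low and high ends of the 2023-2024 hardware estimate.
for gw in (5.3, 9.4):
    print(f"{gw:>4.1f} GW -> {annual_energy_twh(gw):5.1f} TWh/year")
```

Running continuously, the 5.3 to 9.4 GW range works out to roughly 46 to 82 TWh per year.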

But the real surge lies ahead. TSMC's CoWoS packaging technology allows powerful processors and high-speed memory to be integrated into single units, the core of modern AI systems. De Vries-Gao found that TSMC more than doubled its CoWoS production capacity between 2023 and 2024, yet demand from AI chipmakers like Nvidia and AMD still outstripped supply.

TSMC plans to double CoWoS capacity again in 2025. If current trends continue, de Vries-Gao projects that total AI system power needs could reach 23 gigawatts by the end of the year, roughly equivalent to the UK's average national power consumption.

This would give AI a larger energy footprint than global Bitcoin mining. The International Energy Agency warns that this growth could single-handedly double the electricity consumption of data centers within two years.

While improvements in energy efficiency and increased reliance on renewable power have helped somewhat, these gains are being rapidly outpaced by the scale of new hardware and data center deployment. The industry's "bigger is better" mindset, in which ever-larger models are pursued to boost performance, has created a feedback loop of escalating resource use. Even as individual data centers become more efficient, overall energy use continues to rise.

Behind the scenes, a manufacturing arms race complicates any efficiency gains. Each new generation of AI chips requires increasingly sophisticated packaging. TSMC's latest CoWoS-L technology, while essential for next-gen processors, struggles with low production yields.

Meanwhile, companies like Google report "power capacity crises" as they scramble to build data centers fast enough. Some projects are now repurposing fossil fuel infrastructure, with one securing 4.5 gigawatts of natural gas capacity specifically for AI workloads.

The environmental impact of AI depends heavily on where these power-hungry systems operate. In regions where electricity is primarily generated from fossil fuels, the associated carbon emissions can be significantly higher than in regions powered by renewables. A server farm in coal-reliant West Virginia, for example, generates nearly twice the carbon emissions of one in renewable-rich California.
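The location effect follows from a simple relationship: emissions equal energy consumed multiplied by the carbon intensity of the local grid. The sketch below applies that formula to two hypothetical grids. Both intensity values are placeholders chosen only to show the arithmetic. They are not measured figures for West Virginia, California, or any other real grid.

```python
# Sketch: operational CO2 emissions = energy consumed x grid carbon intensity.
# ASSUMPTIONS: both intensities below are hypothetical placeholders, not
# measured values for any real region; the energy figure is also illustrative.


def emissions_tonnes(energy_mwh: float, intensity_kg_per_mwh: float) -> float:
    """CO2 in tonnes for `energy_mwh` at `intensity_kg_per_mwh` (kg CO2/MWh)."""
    return energy_mwh * intensity_kg_per_mwh / 1_000  # kg -> tonnes


COAL_HEAVY_GRID = 800.0  # kg CO2 per MWh (hypothetical placeholder)
CLEANER_GRID = 400.0     # kg CO2 per MWh (hypothetical placeholder)

energy = 10_000.0  # MWh used by the same hypothetical server farm
a = emissions_tonnes(energy, COAL_HEAVY_GRID)
b = emissions_tonnes(energy, CLEANER_GRID)
print(f"{a:,.0f} t vs {b:,.0f} t (ratio {a / b:.1f}x)")
```

Because the workload is identical in both cases, the emissions ratio depends only on the ratio of the two grids' intensities. That is why an identical server farm can have a very different footprint depending on where it is built.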

Yet tech giants rarely disclose where or how their AI operates, a transparency gap that threatens to undermine climate goals. This opacity makes it difficult for policymakers, researchers, and the public to fully assess the environmental implications of the AI boom.