Overview:

Data exfiltration is one of the most serious security risks organizations face today. It can expose sensitive customer or business information, leading to reputational damage and regulatory penalties under laws like GDPR. Exfiltration can happen in many ways (through external attackers, insider mistakes, or malicious insiders), and it is often hard to detect until the damage is done.

Security and cloud teams must protect against these risks while enabling employees to use SaaS tools and cloud services to do their work. With hundreds of services in play, analyzing every possible exfiltration path can feel overwhelming.

In this blog, we introduce a unified approach to protecting against data exfiltration on Databricks across AWS, Azure, and GCP. We start with three core security requirements that form a framework for assessing risk. We then map these requirements to nineteen practical controls, organized by priority, that you can apply whether you are building your first Databricks security strategy or strengthening an existing one.

A Framework for Categorizing Data Exfiltration Protection Controls:

We’ll start by defining the three core business requirements that form a comprehensive framework for mapping relevant data exfiltration protection controls:

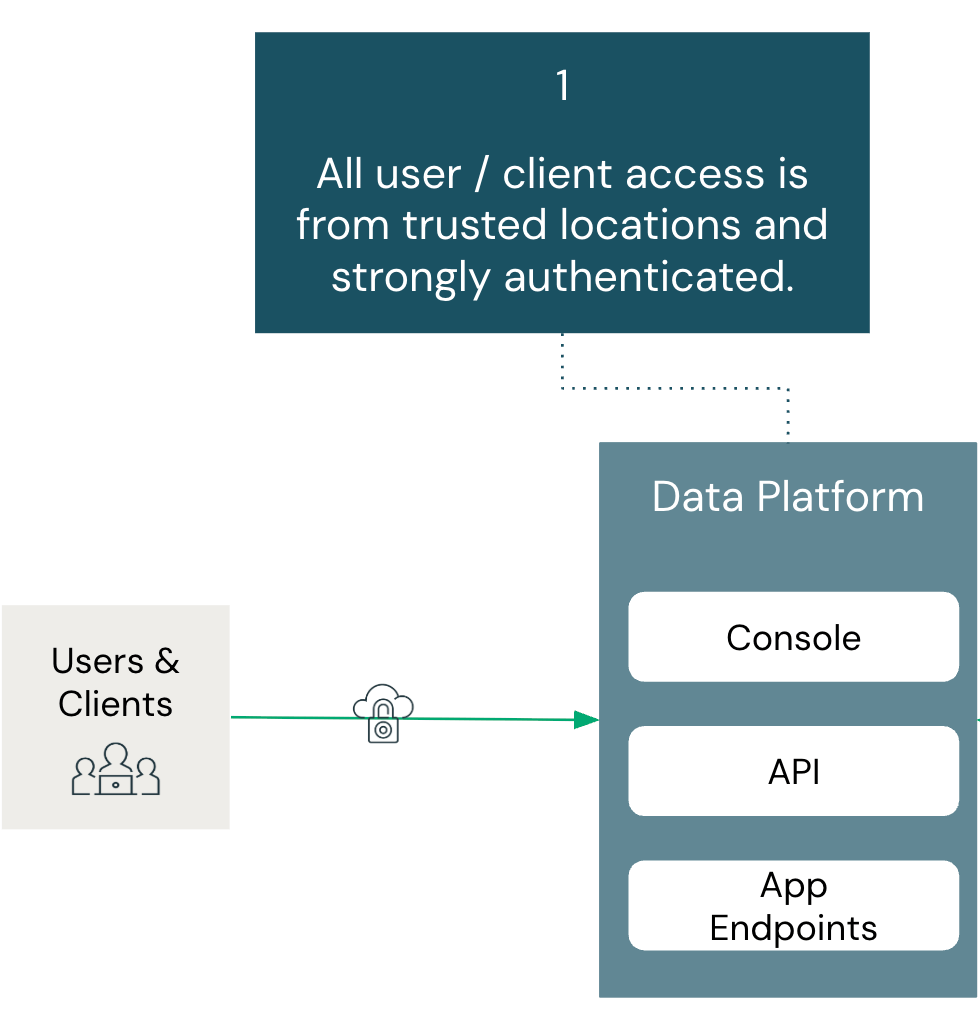

- All user/client access is from trusted locations and strongly authenticated:

- All access must be authenticated and originate from trusted locations, ensuring users and clients can only reach systems from approved networks through verified identity controls.

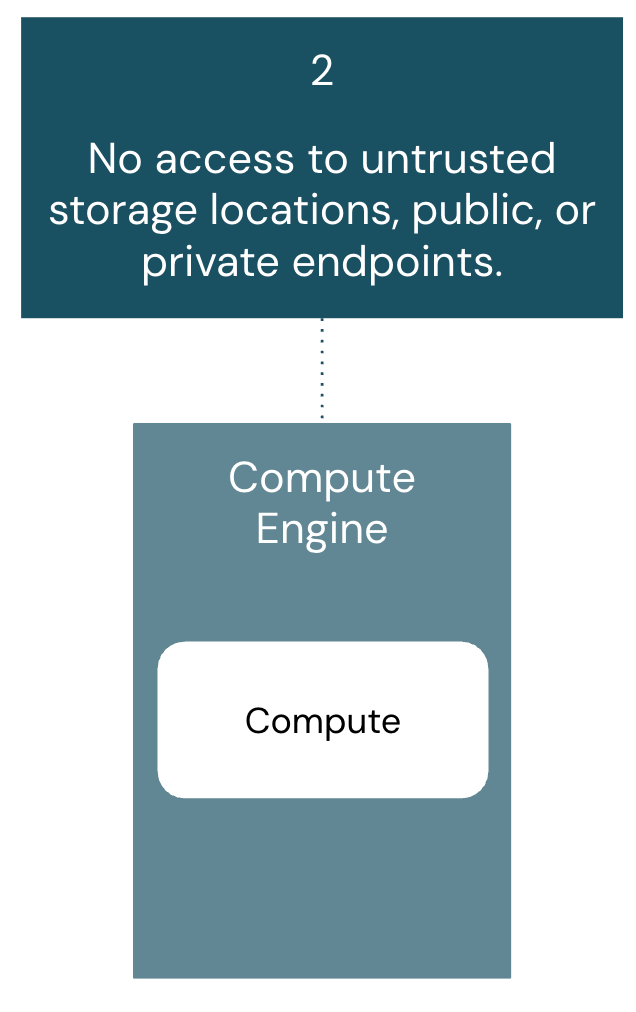

- No access to untrusted storage locations, public, or private endpoints:

- Compute engines must only access administrator-approved storage and endpoints, preventing data exfiltration to unauthorized destinations while protecting against malicious services.

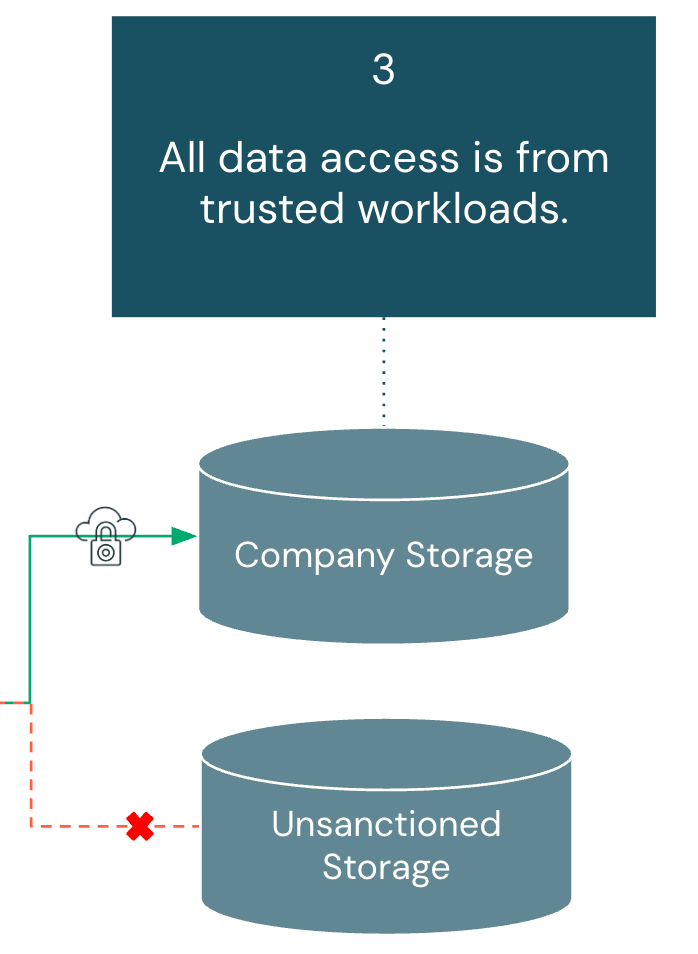

- All data access is from trusted workloads:

- Storage systems must only accept access from approved compute resources, creating a final verification layer even when credentials are compromised on untrusted systems.

Overall, these three requirements work together to address user behaviors that could facilitate unauthorized data movement outside the organization’s security perimeter. However, it’s important to evaluate the three requirements as a whole. A gap in the controls for any one requirement weakens the security posture of the entire architecture.

In the following sections, we’ll examine specific controls mapped to each individual requirement.

Data Exfiltration Protection Strategies for Databricks:

For readability and simplicity, each control under the relevant requirement is organized by: architecture component, risk scenario, corresponding mitigation, implementation priority, and cloud-specific documentation.

The legend for implementation priority is as follows:

- HIGH – Implement immediately. These controls are essential for all Databricks deployments regardless of environment or use case.

- MEDIUM – Assess based on your organization’s risk tolerance and specific Databricks usage patterns.

- LOW – Evaluate based on workspace environment (development, QA, production) and organizational security requirements.

NOTE: Before implementing controls, ensure you’re on the correct platform tier for that feature. Required tiers are noted in the relevant documentation links.

All User and Client Access is From Trusted Locations and Strongly Authenticated:

Summary:

Users must authenticate through approved methods and access Databricks only from authorized networks. This establishes the foundation for mitigating unauthorized access.

Architecture components covered in this section include: Identity Provider, Account Console, and Workspace.

Why Is This Requirement Important?

Ensuring that all users and clients connect from trusted locations and are strongly authenticated is the first line of defense for mitigating data exfiltration. If a data platform cannot confirm that access requests originate from approved networks or that users are validated through multiple layers of authentication (such as MFA), then every subsequent control is weakened, leaving the environment vulnerable.

| Architecture Component | Risk | Control | Priority to Implement | Documentation |
|---|---|---|---|---|
| Identity Provider and Account Console | Users may attempt to bypass corporate identity controls by using personal accounts or non-single-sign-on (SSO) login methods to access Databricks workspaces. | Implement Unified Login to apply single sign-on (SSO) protection across all, or selected, workspaces in the Databricks account. NOTE: We recommend enabling multi-factor authentication (MFA) within your Identity Provider. If you cannot use SSO, you may configure MFA directly in Databricks. | HIGH | AWS, Azure, GCP |
| Identity Provider | Former users may attempt to log in to the workspace after leaving the company. | Implement SCIM or Automatic Identity Management to handle the automatic de-provisioning of users. | HIGH | AWS, Azure, GCP |
| Account Console | Users may attempt to access the account console from unauthorized networks. | Implement account console IP access control lists (ACLs). | HIGH | AWS, Azure, GCP |
| Workspace | Users may attempt to access the workspace from unauthorized networks. | Implement network access controls using one of the following approaches: Private Connectivity or IP ACLs. | HIGH | Private Connectivity: AWS, Azure, GCP |
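
To make the IP ACL controls in the table above concrete, here is a minimal sketch of the allow-list check an IP access list performs conceptually. It is not the Databricks API. The CIDR ranges and the `is_trusted_source` helper are hypothetical placeholders, used only to illustrate how a source address is matched against approved networks.

```python
import ipaddress

# Hypothetical allow list (documentation-reserved ranges); substitute your
# organization's approved corporate egress CIDRs.
TRUSTED_CIDRS = ["203.0.113.0/24", "198.51.100.10/32"]


def is_trusted_source(ip, cidrs=TRUSTED_CIDRS):
    """Return True if the source IP falls inside any approved CIDR range."""
    addr = ipaddress.ip_address(ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in cidrs)


if __name__ == "__main__":
    for candidate in ["203.0.113.42", "192.0.2.1"]:
        print(candidate, is_trusted_source(candidate))
```

A check like this can also help when reviewing a proposed allow list before it is applied, for example to confirm that administrator addresses are covered and nobody is locked out.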

No Access to Untrusted Storage Locations, Public, or Private Endpoints:

Summary:

Compute resources must only access pre-approved storage locations and endpoints. This mitigates data exfiltration to unauthorized destinations and protects against malicious external services.

Architecture components covered in this section include: Classic Compute, Serverless Compute, and Unity Catalog.

Why Is This Requirement Important?

The requirement for compute to access only trusted storage locations and endpoints is foundational to preserving an organization’s security perimeter. Traditionally, firewalls served as the primary safeguard against data exfiltration, but as cloud services and SaaS integration points expand, organizations must account for all potential vectors that could be exploited to move data to untrusted destinations.

| Architecture Component | Risk | Control | Priority to Implement | Documentation |
|---|---|---|---|---|
| Classic Compute | Users may execute code that interacts with malicious or unapproved public endpoints. | Implement an egress firewall in your cloud provider network to filter outbound traffic to only approved domains and IP addresses. Otherwise, for certain cloud providers, remove all outbound access to the internet. | HIGH | AWS, Azure, GCP |
| Classic Compute | Users may execute code that exfiltrates data to unmonitored cloud resources by leveraging private network connectivity to access storage accounts or services outside their intended scope. | Implement policy-driven access (e.g., VPC endpoint policies, service endpoint policies, etc.) and network segmentation to restrict cluster access to only pre-approved cloud resources and storage accounts. | HIGH | AWS, Azure, GCP |
| Serverless Compute | Users may execute code that exfiltrates data to unauthorized external services or malicious endpoints over public internet connections. | Implement serverless egress controls to restrict outbound traffic to only pre-approved storage accounts and verified public endpoints. | HIGH | AWS, Azure, GCP |
| Unity Catalog | Users may attempt to access untrusted storage accounts to exfiltrate data outside the organization’s approved data perimeter. | Only allow admins to create storage credentials and external locations. Give users permissions to use approved Unity Catalog securables. Practice the principle of least privilege for cloud access policies (e.g., IAM) for storage credentials. | HIGH | AWS, Azure, GCP |
| Unity Catalog | Users may attempt to access untrusted databases to read and write unauthorized data. | Only allow admins to create database connections using Lakehouse Federation. Give users permissions to use approved connections. | MEDIUM | AWS, Azure, GCP |
| Unity Catalog | Users may attempt to access untrusted non-storage cloud resources (e.g., managed streaming services) using unauthorized credentials. | Only allow admins to create service credentials for external cloud services. Give users permissions to use approved service credentials. Practice the principle of least privilege for cloud access policies (e.g., IAM) for service credentials. | MEDIUM | AWS, Azure, GCP |
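
The egress filtering controls above come down to matching each outbound destination against an approved list. The sketch below illustrates that matching logic in plain Python. It is not a firewall configuration or a Databricks API. The domain names and the `is_egress_allowed` helper are hypothetical, and we assume a simple rule format in which `*.` at the start of an entry matches any subdomain.

```python
# Hypothetical allow list; real deployments would express this in the cloud
# provider's firewall or in a serverless egress policy.
ALLOWED_DOMAINS = ["pypi.org", "*.internal.example.com"]


def is_egress_allowed(host, allowed=ALLOWED_DOMAINS):
    """Return True if host matches an exact entry or a '*.' wildcard entry."""
    host = host.lower().rstrip(".")
    for pattern in allowed:
        pattern = pattern.lower()
        if pattern.startswith("*."):
            # "*.example.com" matches "a.example.com" but not "example.com".
            if host.endswith(pattern[1:]):
                return True
        elif host == pattern:
            return True
    return False


if __name__ == "__main__":
    for host in ["pypi.org", "files.internal.example.com", "pypi.org.evil.test"]:
        print(host, is_egress_allowed(host))
```

Matching on the full host name or on a suffix that starts with a dot avoids a common mistake, where a plain suffix match for `pypi.org` would also accept a look-alike host such as `evilpypi.org`.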

All Data Access is From Trusted Workloads:

Summary:

Data storage must only accept access from approved Databricks workloads and trusted compute sources. This mitigates unauthorized access to both customer data and workspace artifacts like notebooks and query results.

Architecture components covered in this section include: Storage Account, Serverless Compute, Unity Catalog, and Workspace Settings.

Why Is This Requirement Important?

As organizations adopt more SaaS tools, data requests increasingly originate outside traditional cloud networks. These requests may involve cloud object stores, databases, or streaming platforms, each creating potential avenues for exfiltration. To reduce this risk, access must be consistently enforced through approved governance layers and restricted to sanctioned data tooling, ensuring data is used within controlled environments.

| Architecture Component | Risk | Control | Priority to Implement | Documentation |
|---|---|---|---|---|
| Storage Account | Users may attempt to access cloud provider storage accounts through compute that is not governed by Unity Catalog. | Implement firewalls or bucket policies on storage accounts to only accept traffic from approved sources. | HIGH | AWS, Azure, GCP |
| Unity Catalog | Users may attempt to read and write data across different environments (e.g., a development workspace reading production data). | Implement workspace bindings for catalogs. | HIGH | AWS, Azure, GCP |
| Serverless Compute | Users may require access to cloud resources through serverless compute, forcing administrators to expose internal services to broader network access than intended. | Implement private endpoint rules in the Network Connectivity Configuration object. | MEDIUM | AWS, Azure, GCP (not currently available) |
| Workspace Settings | Users may attempt to download notebook results to their local machine. | Disable Notebook results download in the Workspace admin security setting. | LOW | AWS, Azure, GCP |
| Workspace Settings | Users may attempt to download volume files to their local machine. | Disable Volume Files Download in the Workspace admin security setting. | LOW | Documentation not available. The toggle to disable is found within workspace admin security settings under egress and ingress. |
| Workspace Settings | Users may attempt to export notebooks or files from the workspace to their local machine. | Disable Notebook and File exporting in the Workspace admin security setting. | LOW | AWS, Azure, GCP |
| Workspace Settings | Users may attempt to download SQL results to their local machine. | Disable SQL results download in the Workspace admin security setting. | LOW | AWS, Azure, GCP |
| Workspace Settings | Users may attempt to download MLflow run artifacts to their local machine. | Disable MLflow run artifact download in the Workspace admin security setting. | LOW | Documentation not available. The toggle to disable is found within workspace admin security settings under egress and ingress. |
| Workspace Settings | Users may attempt to copy tabular data to their clipboard through the UI. | Disable the Results table clipboard feature in the Workspace admin security setting. | LOW | AWS, Azure, GCP |
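
As one illustration of the storage account control above, the sketch below builds an AWS S3 bucket policy that denies requests that do not arrive through an approved VPC endpoint, using the `aws:SourceVpce` condition key. It is AWS-only and deliberately simplified. The bucket name, the endpoint ID, and the `build_vpce_only_bucket_policy` helper are placeholders. A real policy would likely need further exceptions (for example, for administrative access or other approved compute paths), so treat it as a starting point and not as a recommended policy.

```python
import json


def build_vpce_only_bucket_policy(bucket, approved_vpce_ids):
    """Build a bucket policy that denies S3 access from outside approved VPC endpoints."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAccessOutsideApprovedVpce",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # Deny any request whose source VPC endpoint is not in the list.
                "Condition": {
                    "StringNotEquals": {"aws:SourceVpce": list(approved_vpce_ids)}
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bucket name and endpoint ID, for illustration only.
    policy = build_vpce_only_bucket_policy(
        "example-data-bucket", ["vpce-0123456789abcdef0"]
    )
    print(json.dumps(policy, indent=2))
```

Azure and GCP have analogous mechanisms (storage firewalls and perimeter controls), but their configuration formats differ and are not shown here.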

Proactive Data Exfiltration Monitoring:

While the three core business requirements let us establish the preventive controls needed to secure your Databricks Data Intelligence Platform, monitoring provides the detection capabilities needed to validate that these controls are functioning as intended. Even with strong authentication, restricted compute access, and secured storage, you’ll need visibility into user behaviors that could indicate attempts to circumvent your established controls.

Databricks offers comprehensive system tables for access control monitoring [AWS, Azure, GCP]. Using these system tables, customers can set up alerts based on potentially suspicious activities to augment existing controls on the workspace.

For out-of-the-box queries that can drive actionable insights, visit this blog post: Improve Lakehouse Security Monitoring using System Tables in Databricks Unity Catalog. Cloud-specific logs [AWS, Azure, GCP] can be ingested and analyzed to augment the data from Databricks system tables.
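
To show the kind of alerting logic this monitoring enables, here is a minimal sketch that flags users with an unusually high number of download events. It runs on plain Python dictionaries and does not query the system tables directly. The field names (`user_email`, `action_name`), the action names in `DOWNLOAD_ACTIONS`, the threshold, and the `flag_heavy_downloaders` helper are all assumptions for illustration, so check them against the audit log schema in your own environment.

```python
from collections import Counter

# Assumed action names for download-style events; verify against your audit logs.
DOWNLOAD_ACTIONS = {
    "downloadPreviewResults",
    "downloadLargeResults",
    "downloadQueryResult",
}


def flag_heavy_downloaders(events, threshold=3):
    """Return a sorted list of users with at least `threshold` download events."""
    counts = Counter(
        event["user_email"]
        for event in events
        if event.get("action_name") in DOWNLOAD_ACTIONS
    )
    return sorted(user for user, count in counts.items() if count >= threshold)


if __name__ == "__main__":
    sample = (
        [{"user_email": "a@example.com", "action_name": "downloadQueryResult"}] * 3
        + [{"user_email": "b@example.com", "action_name": "downloadLargeResults"}]
        + [{"user_email": "b@example.com", "action_name": "login"}] * 5
    )
    print(flag_heavy_downloaders(sample))
```

In practice, the same grouping and threshold would typically be expressed as a scheduled query with an alert, with the threshold tuned to normal usage in each workspace.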

Conclusion:

Now that we’ve covered the risks and controls associated with each security requirement in this framework, we have a unified approach to mitigating data exfiltration in your Databricks deployment.

While preventing the unauthorized movement of data is an everyday job, this approach gives your users a foundation to develop and innovate while protecting one of your company’s most important assets: your data.

To continue the journey of securing your Data Intelligence Platform, we highly recommend visiting the Security and Trust Center for a holistic view of Security Best Practices on Databricks.

- The Best Practice guides provide a detailed overview of the main security controls we recommend for typical and highly secure environments.

- The Security Reference Architecture – Terraform Templates make it easy to automatically create Databricks environments that follow the best practices outlined in this blog.

- The Security Analysis Tool continuously monitors the security posture of your Databricks Data Intelligence Platform in accordance with best practices.