This post was also authored by Vedha Avali, Genavieve Chick, and Kevin Kurian.

Day after day, new examples of deepfakes are surfacing. Some are meant to be entertaining or humorous, but many are meant to deceive. Early deepfake attacks targeted public figures. However, businesses, government organizations, and healthcare entities have also become prime targets. A recent analysis found that slightly more than half of businesses in the United States and the United Kingdom have been targets of financial scams powered by deepfake technology, with 43 percent falling victim to such attacks. On the national security front, deepfakes can be weaponized, enabling the dissemination of misinformation, disinformation, and malinformation (MDM).

It’s difficult, but not impossible, to detect deepfakes with the help of machine intelligence. However, detection methods must continue to evolve as generation techniques become increasingly sophisticated. To counter the threat posed by deepfakes, our team of researchers in the SEI’s CERT Division has developed a software framework for forgery detection. In this blog post we detail the evolving deepfake landscape, along with the framework we developed to combat this threat.

The Evolution of Deepfakes

We define deepfakes as follows:

Deepfakes use deep neural networks to create realistic images or videos of people saying or doing things they never said or did in real life. The process involves training a model on a large dataset of images or videos of a target person and then using the model to generate new content that convincingly imitates the person’s voice or facial expressions.

Deepfakes are part of a growing body of generative AI capabilities that can be manipulated for deceit in information operations. As these AI capabilities improve, the methods of manipulating information become ever harder to detect. They include the following:

- Audio manipulation digitally alters aspects of an audio recording to change its meaning. This can involve changing the pitch, duration, volume, or other properties of the audio signal. In recent years, deep neural networks have been used to create highly realistic audio samples of people saying things they never actually said.

- Image manipulation is the process of digitally altering aspects of an image to change its appearance and meaning. This can involve changing the appearance of objects or people in an image. In recent years, deep neural networks have been used to generate entirely new images that are not based on real-world objects or scenes.

- Text generation involves the use of deep neural networks, such as recurrent neural networks and transformer-based models, to produce authentic-looking text that appears to have been written by a human. These methods can replicate the writing and speaking style of individuals, making the generated text more believable.
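To make the audio case concrete, the following minimal sketch shows two classical, non-neural edits of the kind described above: changing volume and changing duration. It assumes audio is a plain list of floating-point samples in [-1, 1]. The helper names (`make_tone`, `change_volume`, `resample`) are hypothetical and the code is illustrative only. Naive resampling changes duration and pitch together, which is one reason such crude edits are easier to spot than neural voice synthesis.

```python
import math


def make_tone(freq_hz: float, seconds: float, rate: int = 8000) -> list[float]:
    """Generate a pure sine tone as a list of samples in [-1, 1]."""
    n = int(seconds * rate)
    return [math.sin(2 * math.pi * freq_hz * i / rate) for i in range(n)]


def change_volume(samples: list[float], gain: float) -> list[float]:
    """Scale the amplitude by `gain`, clipping to [-1, 1] to avoid overflow."""
    return [max(-1.0, min(1.0, s * gain)) for s in samples]


def resample(samples: list[float], speed: float) -> list[float]:
    """Naive linear-interpolation resampling (hypothetical helper).

    speed > 1 shortens the clip and, when played back at the original
    sample rate, raises the pitch; speed < 1 lengthens it and lowers the pitch.
    """
    n_out = int(len(samples) / speed)
    out = []
    for i in range(n_out):
        pos = i * speed
        j = int(pos)
        frac = pos - j
        # Hold the last sample when interpolating past the end of the input.
        nxt = samples[j + 1] if j + 1 < len(samples) else samples[j]
        out.append(samples[j] * (1 - frac) + nxt * frac)
    return out


tone = make_tone(440.0, 1.0)        # one second of A4 at 8 kHz
faster = resample(tone, 2.0)        # half as long, roughly an octave higher
louder = change_volume(tone, 1.5)   # amplified and clipped
```

Production tools use techniques such as phase vocoders to change pitch and duration independently. The sketch only illustrates which signal properties are being altered.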

A Rising Downside

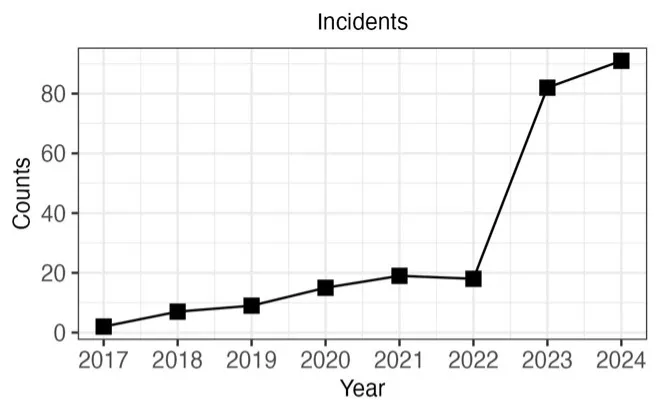

Figure 1 shows the annual number of reported or identified deepfake incidents based on data from the AIAAIC (AI, Algorithmic, and Automation Incidents and Controversies) and the AI Incident Database. From 2017, when deepfakes first emerged, to 2022, there was a gradual increase in incidents. However, from 2022 to 2023, there was a nearly five-fold increase. The projected number of incidents for 2024 exceeds that of 2023, suggesting that the heightened level of attacks seen in 2023 is likely to become the new norm rather than an exception.

Most incidents involved public misinformation (60 percent), followed by defamation (15 percent), fraud (10 percent), exploitation (8 percent), and identity theft (7 percent). Political figures and organizations were the most frequently targeted (54 percent), with additional attacks occurring in the media sector (28 percent), industry (9 percent), and the private sector (8 percent).

An Evolving Threat

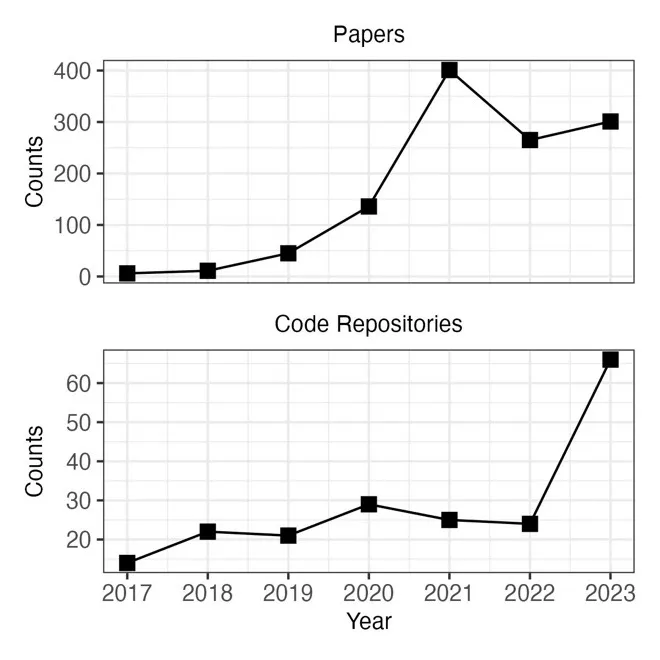

Figure 2 shows the cumulative number of academic publications on deepfake generation from the Web of Science. From 2017 to 2019, there was a steady increase in publications on deepfake generation. The publication rate surged during 2019 and has remained at that elevated level ever since. The figure also shows the cumulative number of open-source code repositories for deepfake generation on GitHub. The number of repositories for creating deepfakes has grown along with the number of publications. Thus, deepfake generation methods are more capable and more accessible than ever before.

Over the course of this research, four foundational architectures for deepfake generation have emerged:

- Variational autoencoders (VAEs). A VAE consists of an encoder and a decoder. The encoder learns to map inputs from the original space (e.g., an image) to a lower-dimensional latent representation, while the decoder learns to reconstruct an approximation of the original input from this latent space. In deepfake generation, an input from the attacker is processed by the encoder, and the decoder, trained with pictures of the victim, reconstructs the source signal to match the victim’s appearance and characteristics. Unlike its precursor, the autoencoder (AE), which maps each input to a fixed point in the latent space, the VAE maps inputs to a probability distribution. This allows the VAE to generate smoother, more natural outputs with fewer discontinuities and artifacts.

- Generative adversarial networks (GANs). GANs consist of two neural networks, a generator and a discriminator, competing in a zero-sum game. The generator creates fake data, such as images of faces, while the discriminator tries to distinguish real data from the data created by the generator. Both networks improve over time, leading to highly realistic generated content. After training, the generator is used to produce artificial faces.

- Diffusion models (DMs). Diffusion refers to a technique in which data, such as images, are progressively corrupted by adding noise. A model is trained to denoise these noisy images step by step. Once the denoising model has been trained, it can be used for generation by starting from an image composed entirely of noise and gradually refining it through the learned denoising process. DMs can produce highly detailed and photorealistic images. The denoising process can also be conditioned on text inputs, allowing DMs to produce outputs based on specific descriptions of objects or scenes.

- Transformers. The transformer architecture uses a self-attention mechanism to resolve the meaning of tokens based on their context, for example, the meaning of words in a sentence. Transformers are effective for natural language processing (NLP) because of the sequential dependencies present in language. Transformers are also used in text-to-speech (TTS) systems to capture sequential dependencies present in audio signals, allowing for the creation of realistic audio deepfakes. Additionally, transformers underlie multimodal systems like DALL-E, which can generate images from text descriptions.
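The four architectures above each rest on a small core computation. The toy, pure-Python sketch below illustrates those cores. It is not a deepfake generator and not the SEI team’s code. It assumes the standard textbook formulations: the VAE reparameterization trick and its KL term, the binary cross-entropy GAN objective (with the common non-saturating generator loss), the closed-form forward noising step of a denoising diffusion probabilistic model (DDPM) with a linear noise schedule, and scaled dot-product self-attention. All function names are hypothetical. Real systems implement these operations with tensor libraries and trained networks.

```python
import math
import random

# VAE: the encoder outputs a distribution (mean, log-variance) rather than a point.
def vae_sample(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def vae_kl(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

# GAN: the zero-sum game expressed as two binary cross-entropy losses.
def gan_losses(d_real, d_fake):
    """d_real, d_fake: discriminator's probability that a real / generated input is real."""
    eps = 1e-12  # guards against log(0)
    d_loss = -(math.log(d_real + eps) + math.log(1 - d_fake + eps))
    g_loss = -math.log(d_fake + eps)  # generator wants d_fake close to 1
    return d_loss, g_loss

# Diffusion: forward process that progressively corrupts data with noise.
def alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule."""
    out, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        out.append(prod)
    return out

def noise_sample(x0, t, abar, rng):
    """Closed form: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    a = abar[t]
    return [math.sqrt(a) * x + math.sqrt(1 - a) * rng.gauss(0.0, 1.0) for x in x0]

# Transformer: scaled dot-product self-attention.
def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(q, k, v):
    """q, k, v: lists of token vectors. Returns one context vector per query."""
    d = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out

rng = random.Random(0)
z = vae_sample([0.0, 1.0], [0.0, -2.0], rng)         # one latent draw
d_loss, g_loss = gan_losses(0.9, 0.1)                # discriminator is winning
abar = alpha_bars(1000)
x_noisy = noise_sample([0.5, -0.5], 999, abar, rng)  # almost pure noise
ctx = self_attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 2.0], [3.0, 4.0]])
```

Note how the VAE returns a sample from a distribution rather than a single point, and how late diffusion steps leave almost none of the original signal. The trained denoiser must learn to reverse that corruption.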

These architectures have distinct strengths and limitations, which have implications for their use. VAEs and GANs remain the most widely used methods, but DMs are growing in popularity. DMs can generate photorealistic images and videos, and their ability to incorporate information from text descriptions into the generation process gives users exceptional control over the outputs. Additionally, DMs can create realistic faces, bodies, and even entire scenes. The quality and creative control afforded by DMs enable more tailored and sophisticated deepfake attacks than were previously possible.

Legislating Deepfakes

To counter the threat posed by deepfakes and, more fundamentally, to define the boundaries for their legal use, federal and state governments have pursued legislation to regulate deepfakes. Since 2019, 27 deepfake-related pieces of federal legislation have been introduced. About half of these concern how deepfakes may be used, focusing on the areas of adult content, politics, intellectual property, and consumer protection. The remaining bills call for reports and task forces to study the research, development, and use of deepfakes. Unfortunately, attempts at federal legislation are not keeping pace with advances in deepfake generation methods and the growth of deepfake attacks. Of the 27 bills that have been introduced, only five have been enacted into law.

At the state level, 286 bills were introduced during the 2024 legislative session. These bills predominantly focused on regulating deepfakes in the areas of adult content, politics, and fraud, and they sought to strengthen deepfake research and public literacy.

These legislative actions represent progress in establishing boundaries for the appropriate use of deepfake technologies and penalties for their misuse. However, for these laws to be effective, authorities must be capable of detecting deepfake content, and this capability will depend on access to effective tools.

A New Framework for Detecting Deepfakes

The national security risks associated with the rise in deepfake generation techniques and their use have been recognized by both the federal government and the Department of Defense. Attackers can use these techniques to spread MDM with the intent of influencing U.S. political processes or undermining U.S. interests. To address this issue, the U.S. government has implemented legislation to raise awareness and understanding of these threats. Our team of researchers in the SEI’s CERT Division has developed a tool for establishing the authenticity of multimedia assets, including images, video, and audio. Our tool is built on three guiding principles:

- Automation to enable deployment at scale for tens of thousands of videos

- Mixed initiative to harness human and machine intelligence

- Ensemble methods to allow for a multi-tiered detection strategy

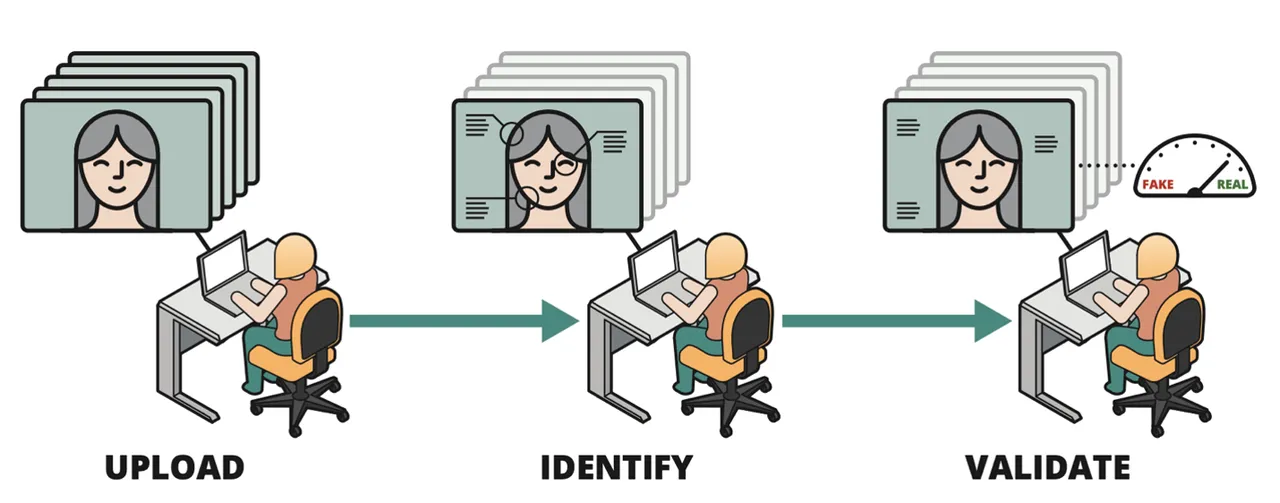

The figure above illustrates how these principles are integrated into a human-centered workflow for digital media authentication. The analyst can upload one or more videos featuring an individual. Our tool compares the person in each video against a database of known individuals. If a match is found, the tool annotates the individual’s identity. The analyst can then choose from multiple deepfake detectors, which are trained to identify spatial, temporal, multimodal, and physiological abnormalities. If any detector finds abnormalities, the tool flags the content for further review.
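The workflow above can be sketched in a few lines, under assumptions that the post does not spell out. This is not the SEI tool’s implementation. The sketch assumes that each video has already been reduced to a face embedding, that identity matching uses cosine similarity against reference embeddings with an arbitrary 0.8 cutoff, and that each detector is a callable returning an anomaly score in [0, 1]. The names `review_video`, `match_identity`, `face_embedding`, and the 0.5 threshold are all hypothetical. The final flag uses an "any detector fires" rule, mirroring the description above.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical detector interface: takes a video record, returns an anomaly score in [0, 1].
Detector = Callable[[dict], float]


@dataclass
class Review:
    identity: Optional[str]   # matched known individual, if any
    scores: dict              # detector name -> anomaly score
    flagged: bool             # True if any detector exceeded the threshold


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def match_identity(embedding, known, min_similarity=0.8):
    """Return the best-matching known individual, or None below the (assumed) cutoff."""
    best_name, best_sim = None, min_similarity
    for name, ref in known.items():
        sim = cosine(embedding, ref)
        if sim >= best_sim:
            best_name, best_sim = name, sim
    return best_name


def review_video(video: dict, known: dict, detectors: dict, threshold: float = 0.5) -> Review:
    identity = match_identity(video["face_embedding"], known)
    scores = {name: detect(video) for name, detect in detectors.items()}
    # Ensemble rule (assumed): flag for analyst review if ANY detector fires.
    flagged = any(s >= threshold for s in scores.values())
    return Review(identity, scores, flagged)


known = {"Person A": [1.0, 0.0], "Person B": [0.0, 1.0]}
detectors = {"spatial": lambda v: 0.2, "temporal": lambda v: 0.7}
result = review_video({"face_embedding": [0.9, 0.1]}, known, detectors)
```

An "any" rule favors recall: a single suspicious signal is enough to send the video to a human. This fits a mixed-initiative design in which analysts make the final call.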

The tool enables rapid triage of image and video data. Given the vast amount of footage uploaded to multimedia sites and social media platforms every day, this is a critical capability. By using the tool, organizations can make the best use of their human capital by directing analyst attention to the most critical multimedia assets.
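One simple way to direct analyst attention is to rank assets by their detector scores and hand reviewers only the top few. The sketch below assumes a hypothetical policy in which each asset’s priority is its highest score from any detector. The function name `triage` and the input layout are illustrative, not the tool’s interface. `heapq.nlargest` selects the top k without sorting the whole collection, which matters when the queue holds tens of thousands of assets.

```python
import heapq


def triage(scores_by_asset: dict[str, dict[str, float]], k: int) -> list[tuple[str, float]]:
    """Return the k assets with the highest worst-case (max) detector score.

    Priority policy (assumed): an asset is only as trustworthy as its most
    suspicious detector result; assets with no scores get priority 0.0.
    """
    ranked = (
        (asset, max(scores.values(), default=0.0))
        for asset, scores in scores_by_asset.items()
    )
    return heapq.nlargest(k, ranked, key=lambda item: item[1])


queue = {
    "clip-001": {"spatial": 0.1, "temporal": 0.3},
    "clip-002": {"spatial": 0.9},
    "clip-003": {"spatial": 0.5, "physiological": 0.6},
    "clip-004": {},
}
top = triage(queue, 2)  # the two most suspicious clips go to analysts first
```

Other aggregation policies, such as a weighted average across detectors, would plug into the same ranking step.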

Work with Us to Mitigate Your Organization’s Deepfake Threat

Over the past decade, there have been remarkable advances in generative AI, including the ability to create and manipulate images and videos of human faces. While there are legitimate applications for these deepfake technologies, they can also be weaponized to deceive individuals, companies, and the public.

Technical solutions like deepfake detectors are needed to protect individuals and organizations against the deepfake threat. But technical solutions are not enough. It is also crucial to increase people’s awareness of the deepfake threat by providing education for industry, consumers, law enforcement, and the public.

As you develop a strategy to protect your organization and people from deepfakes, we are ready to share our tools, experiences, and lessons learned.