Everybody talks about LLMs—however as we speak’s AI ecosystem is much greater than simply language fashions. Behind the scenes, a complete household of specialised architectures is quietly remodeling how machines see, plan, act, section, symbolize ideas, and even run effectively on small units. Every of those fashions solves a distinct a part of the intelligence puzzle, and collectively they’re shaping the following technology of AI methods.

On this article, we’ll discover the 5 main gamers: Massive Language Fashions (LLMs), Imaginative and prescient-Language Fashions (VLMs), Combination of Consultants (MoE), Massive Motion Fashions (LAMs) & Small Language Fashions (SLMs).

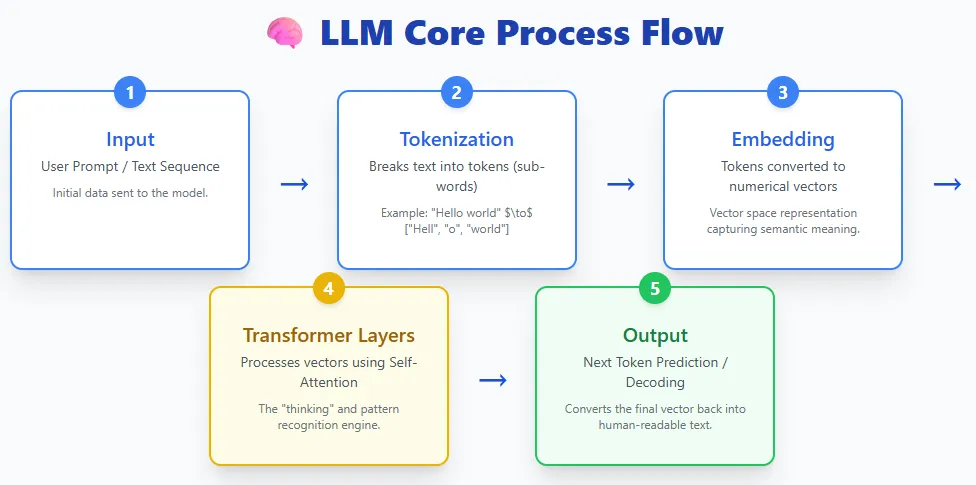

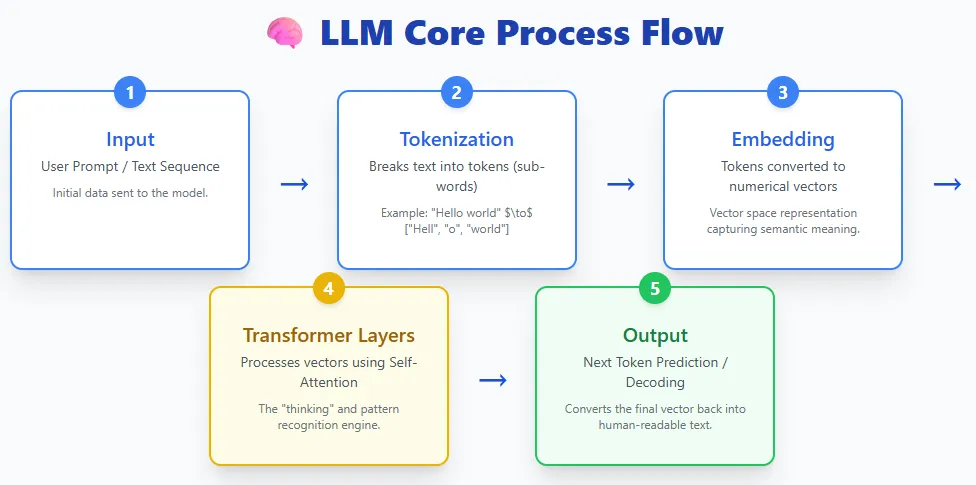

LLMs soak up textual content, break it into tokens, flip these tokens into embeddings, move them by means of layers of transformers, and generate textual content again out. Fashions like ChatGPT, Claude, Gemini, Llama, and others all observe this primary course of.

At their core, LLMs are deep studying fashions educated on huge quantities of textual content information. This coaching permits them to know language, generate responses, summarize info, write code, reply questions, and carry out a variety of duties. They use the transformer structure, which is extraordinarily good at dealing with lengthy sequences and capturing advanced patterns in language.

At this time, LLMs are broadly accessible by means of shopper instruments and assistants—from OpenAI’s ChatGPT and Anthropic’s Claude to Meta’s Llama fashions, Microsoft Copilot, and Google’s Gemini and BERT/PaLM household. They’ve turn into the inspiration of recent AI purposes due to their versatility and ease of use.

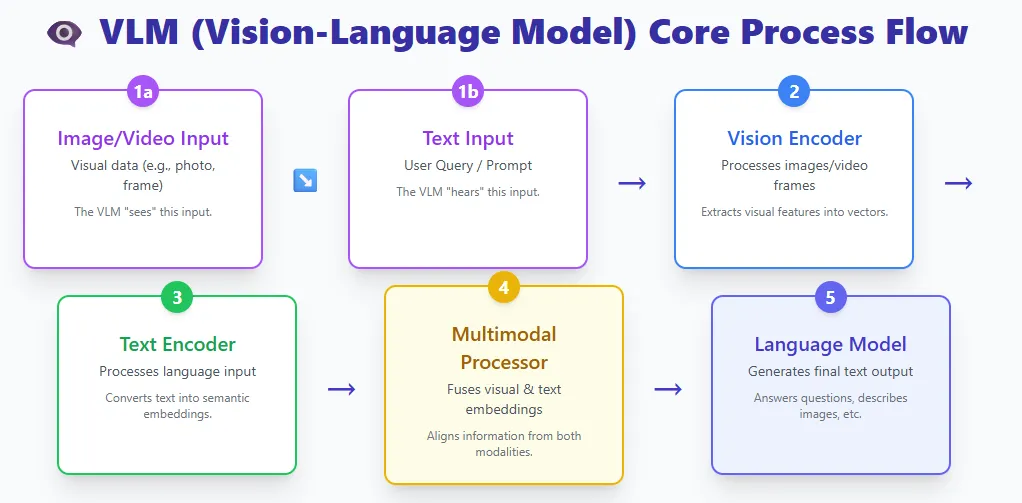

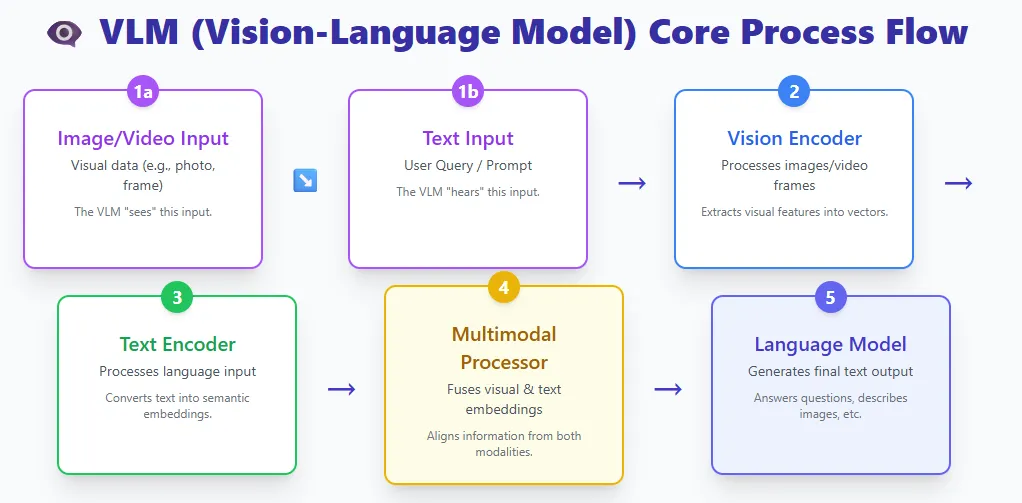

VLMs mix two worlds:

- A imaginative and prescient encoder that processes pictures or video

- A textual content encoder that processes language

Each streams meet in a multimodal processor, and a language mannequin generates the ultimate output.

Examples embrace GPT-4V, Gemini Professional Imaginative and prescient, and LLaVA.

A VLM is actually a big language mannequin that has been given the power to see. By fusing visible and textual content representations, these fashions can perceive pictures, interpret paperwork, reply questions on photos, describe movies, and extra.

Conventional pc imaginative and prescient fashions are educated for one slender process—like classifying cats vs. canine or extracting textual content from a picture—and so they can’t generalize past their coaching lessons. In case you want a brand new class or process, it’s essential to retrain them from scratch.

VLMs take away this limitation. Educated on large datasets of pictures, movies, and textual content, they will carry out many imaginative and prescient duties zero-shot, just by following pure language directions. They’ll do all the pieces from picture captioning and OCR to visible reasoning and multi-step doc understanding—all with out task-specific retraining.

This flexibility makes VLMs some of the highly effective advances in trendy AI.

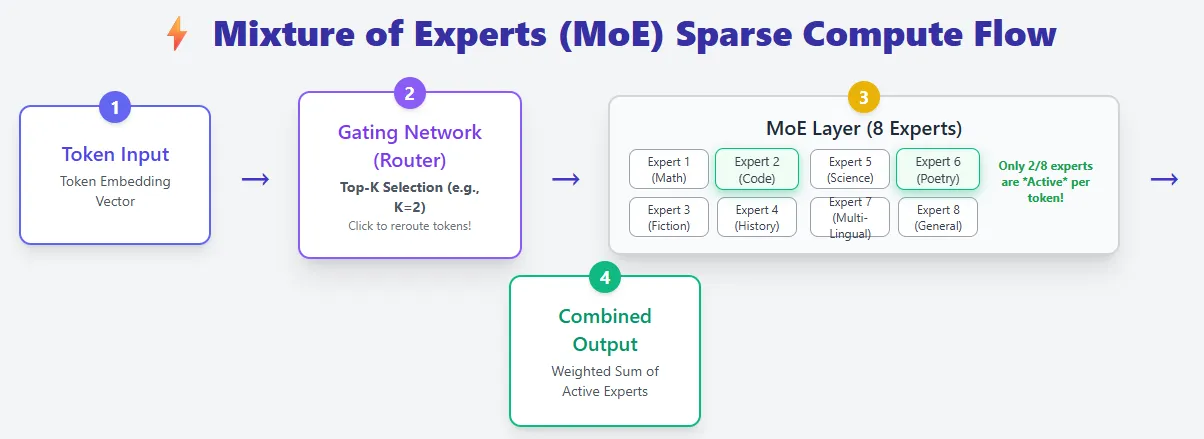

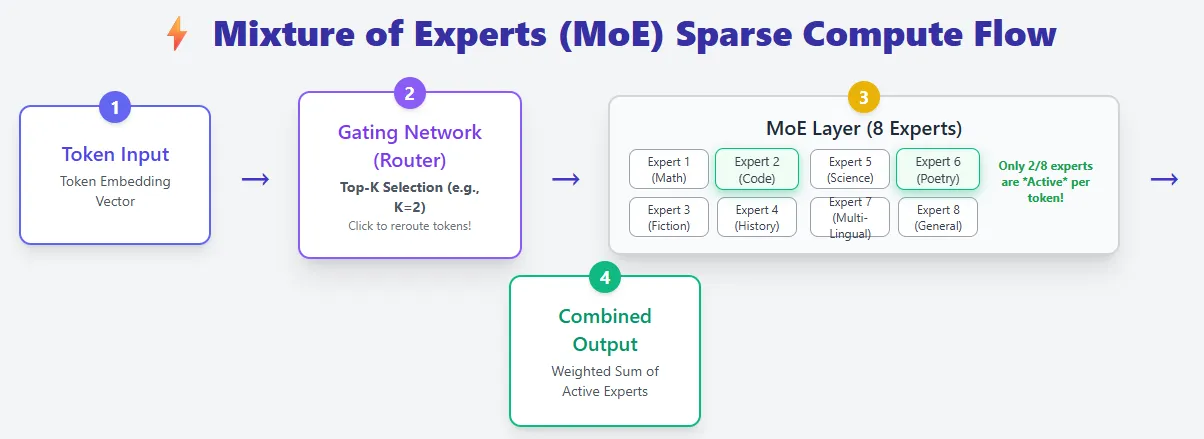

Combination of Consultants fashions construct on the usual transformer structure however introduce a key improve: as a substitute of 1 feed-forward community per layer, they use many smaller knowledgeable networks and activate just a few for every token. This makes MoE fashions extraordinarily environment friendly whereas providing huge capability.

In an everyday transformer, each token flows by means of the identical feed-forward community, which means all parameters are used for each token. MoE layers change this with a pool of specialists, and a router decides which specialists ought to course of every token (Prime-Okay choice). In consequence, MoE fashions could have way more whole parameters, however they solely compute with a small fraction of them at a time—giving sparse compute.

For instance, Mixtral 8×7B has 46B+ parameters, but every token makes use of solely about 13B.

This design drastically reduces inference price. As a substitute of scaling by making the mannequin deeper or wider (which will increase FLOPs), MoE fashions scale by including extra specialists, boosting capability with out elevating per-token compute. Because of this MoEs are sometimes described as having “greater brains at decrease runtime price.”

Massive Motion Fashions go a step past producing textual content—they flip intent into motion. As a substitute of simply answering questions, a LAM can perceive what a consumer needs, break the duty into steps, plan the required actions, after which execute them in the true world or on a pc.

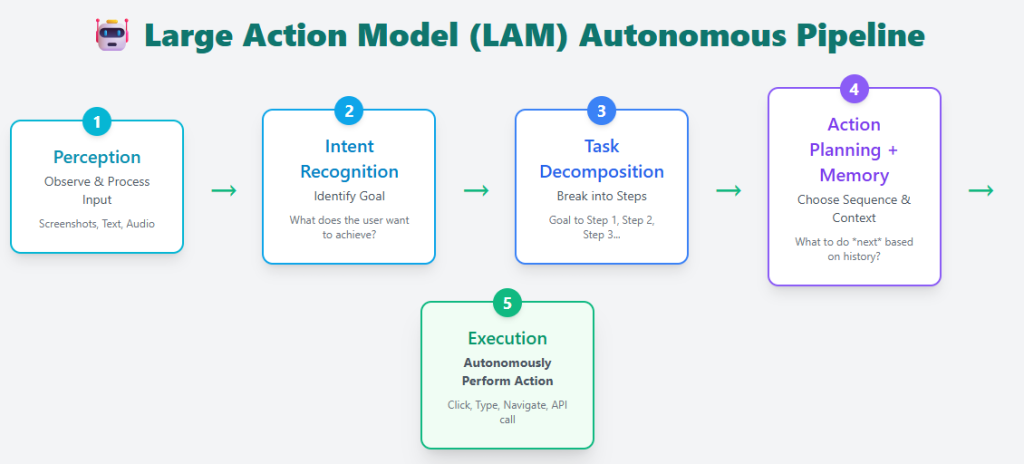

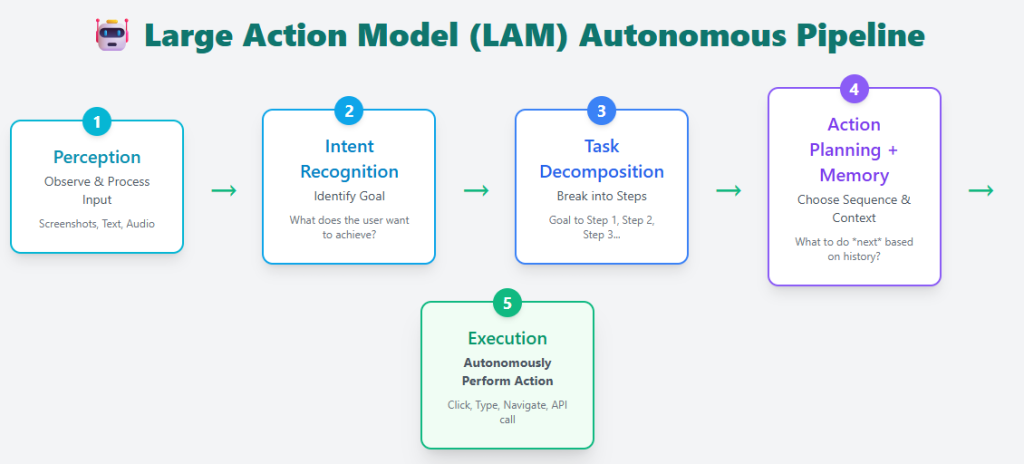

A typical LAM pipeline contains:

- Notion – Understanding the consumer’s enter

- Intent recognition – Figuring out what the consumer is attempting to attain

- Process decomposition – Breaking the aim into actionable steps

- Motion planning + reminiscence – Selecting the best sequence of actions utilizing previous and current context

- Execution – Finishing up duties autonomously

Examples embrace Rabbit R1, Microsoft’s UFO framework, and Claude Pc Use, all of which might function apps, navigate interfaces, or full duties on behalf of a consumer.

LAMs are educated on huge datasets of actual consumer actions, giving them the power to not simply reply, however act—reserving rooms, filling types, organizing recordsdata, or performing multi-step workflows. This shifts AI from a passive assistant into an energetic agent able to advanced, real-time decision-making.

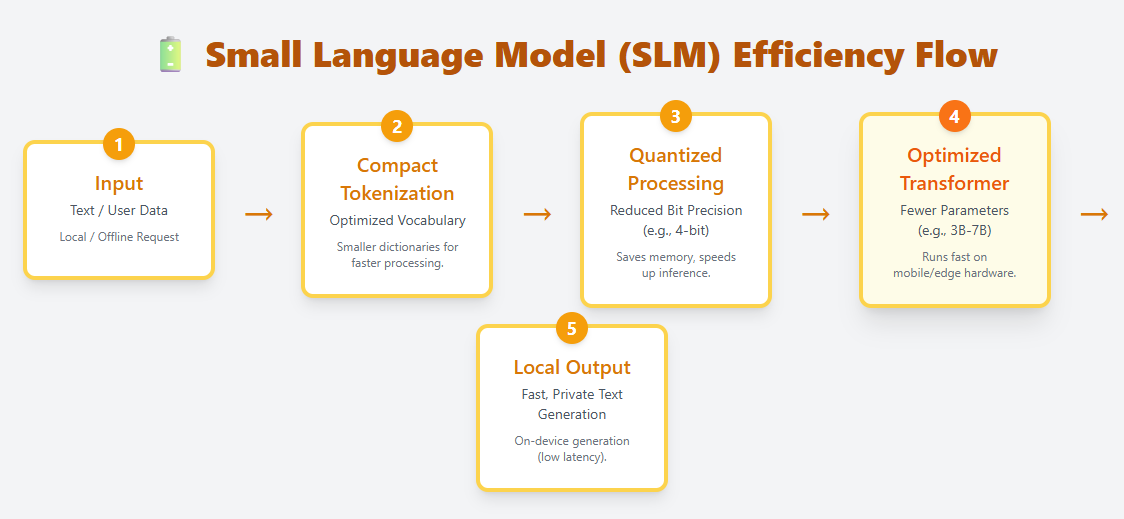

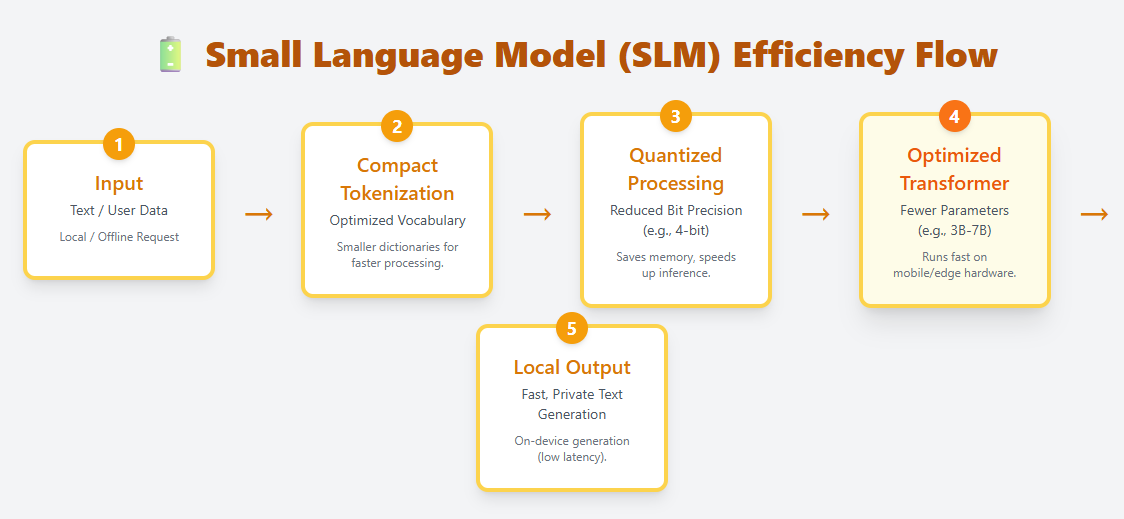

SLMs are light-weight language fashions designed to run effectively on edge units, cellular {hardware}, and different resource-constrained environments. They use compact tokenization, optimized transformer layers, and aggressive quantization to make native, on-device deployment attainable. Examples embrace Phi-3, Gemma, Mistral 7B, and Llama 3.2 1B.

In contrast to LLMs, which can have a whole lot of billions of parameters, SLMs sometimes vary from a couple of million to a couple billion. Regardless of their smaller dimension, they will nonetheless perceive and generate pure language, making them helpful for chat, summarization, translation, and process automation—with no need cloud computation.

As a result of they require far much less reminiscence and compute, SLMs are perfect for:

- Cell apps

- IoT and edge units

- Offline or privacy-sensitive eventualities

- Low-latency purposes the place cloud calls are too gradual

SLMs symbolize a rising shift towards quick, non-public, and cost-efficient AI, bringing language intelligence immediately onto private units.