Question:

MoE models contain far more parameters than Transformers, but they can run faster at inference. How is that possible?

Difference between Transformers & Mixture of Experts (MoE)

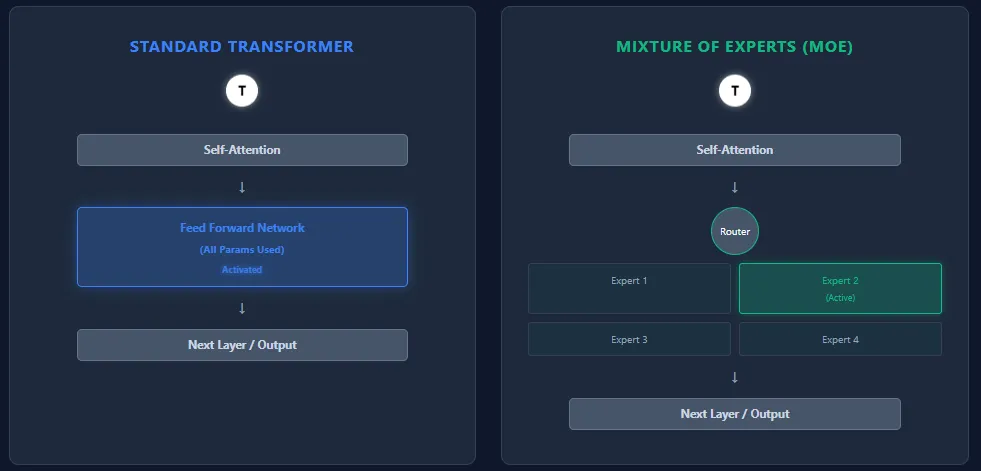

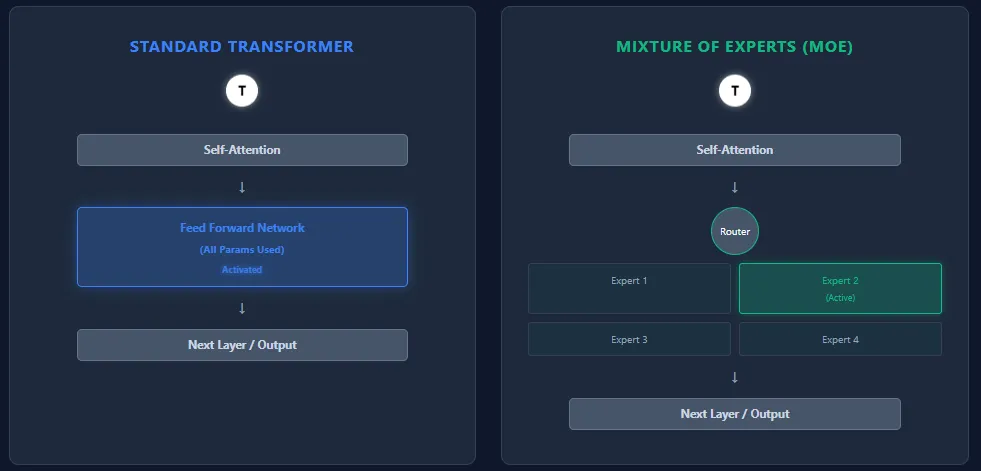

Transformers and Mixture of Experts (MoE) models share the same backbone architecture (self-attention layers followed by feed-forward layers), but they differ fundamentally in how they use parameters and compute.

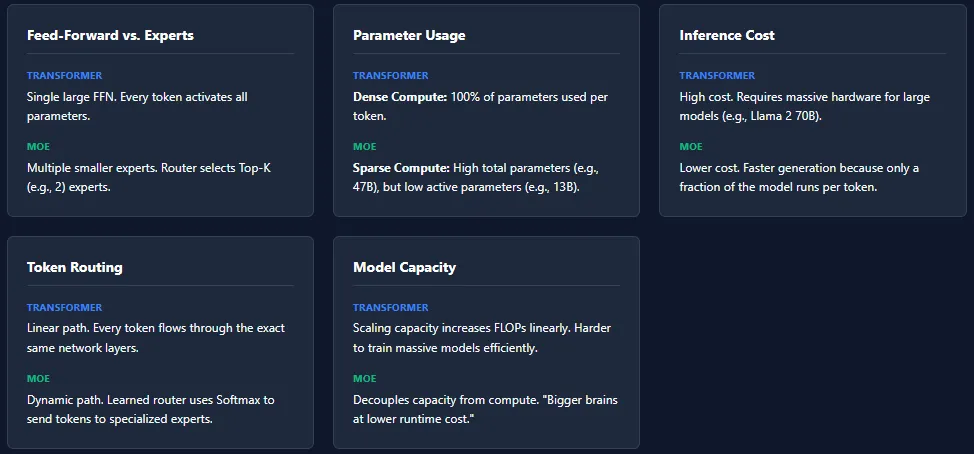

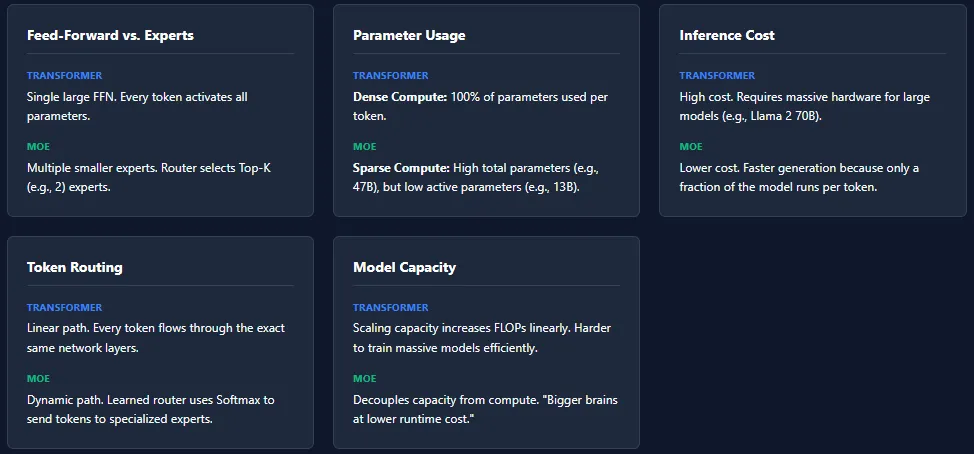

Feed-Forward Network vs Experts

- Transformer: Each block contains a single large feed-forward network (FFN). Every token passes through this FFN, activating all parameters during inference.

- MoE: Replaces the FFN with multiple smaller feed-forward networks, called experts. A routing network selects only a few experts (Top-K) per token, so only a small fraction of total parameters is active.
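The contrast between one dense FFN and a routed set of experts can be sketched in a few lines of NumPy. This is a minimal illustration, not any particular model's implementation: the names `moe_forward`, `ffn`, and `router_w`, the ReLU experts, the toy sizes, and the choice to renormalize the softmax scores over the selected experts are all assumptions made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def ffn(x, w_in, w_out):
    """Two-layer feed-forward network with ReLU (biases omitted)."""
    return np.maximum(x @ w_in, 0.0) @ w_out

def moe_forward(x, router_w, experts, top_k):
    """x: (tokens, d_model); router_w: (d_model, num_experts);
    experts: list of (w_in, w_out) pairs. Returns the output and chosen expert ids."""
    scores = softmax(x @ router_w)                       # (tokens, num_experts)
    chosen = np.argsort(-scores, axis=-1)[:, :top_k]     # Top-K expert ids per token
    gates = np.take_along_axis(scores, chosen, axis=-1)
    gates = gates / gates.sum(axis=-1, keepdims=True)    # assumed: renormalize over chosen experts
    out = np.zeros_like(x)
    for e, (w_in, w_out) in enumerate(experts):
        token_idx, slot = np.nonzero(chosen == e)        # tokens routed to expert e
        if token_idx.size == 0:
            continue                                     # this expert does no work at all
        out[token_idx] += gates[token_idx, slot][:, None] * ffn(x[token_idx], w_in, w_out)
    return out, chosen

# Toy sizes, chosen only for illustration.
rng = np.random.default_rng(0)
d_model, d_hidden, num_experts, top_k, tokens = 16, 32, 4, 2, 5
x = rng.normal(size=(tokens, d_model))
router_w = rng.normal(size=(d_model, num_experts))
experts = [(rng.normal(size=(d_model, d_hidden)) * 0.1,
            rng.normal(size=(d_hidden, d_model)) * 0.1) for _ in range(num_experts)]

y, chosen = moe_forward(x, router_w, experts, top_k)
print(y.shape)   # same shape as the input
print(chosen)    # the two expert ids picked for each token
```

Each expert only multiplies the tokens routed to it, which is where the compute saving comes from. Setting `top_k` equal to `num_experts` turns the layer back into a dense, score-weighted sum of all experts.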

Parameter Usage

- Transformer: All parameters across all layers are used for every token → dense compute.

- MoE: Has more total parameters, but activates only a small portion per token → sparse compute. Example: Mixtral 8×7B has 46.7B total parameters, but uses only ~13B per token.
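The Mixtral figures can be roughly reproduced with back-of-the-envelope arithmetic. The configuration values below (32 layers, model width 4096, expert hidden size 14336, 8 experts with 2 active, a 32,000-token vocabulary, grouped-query attention with a 1024-wide key/value projection) are not given in this article; they are assumptions taken to match the publicly released model, and small terms such as normalization weights are ignored.

```python
# Assumed Mixtral 8x7B configuration (not stated in the article).
layers, d_model, d_ffn = 32, 4096, 14336
num_experts, top_k = 8, 2
vocab, kv_dim = 32000, 1024   # kv_dim: 8 key/value heads x 128 dims each

expert = 3 * d_model * d_ffn                                # gated FFN: three weight matrices
attention = 2 * d_model * d_model + 2 * d_model * kv_dim    # Q and O, plus K and V projections
router = d_model * num_experts                              # one linear layer per block
embeddings = 2 * vocab * d_model                            # input embedding + output head

# Attention, router, and embeddings are used by every token.
shared = layers * (attention + router) + embeddings
total = shared + layers * num_experts * expert              # every expert is stored
active = shared + layers * top_k * expert                   # only top_k experts run per token

print(f"total:  {total / 1e9:.1f}B")   # total:  46.7B
print(f"active: {active / 1e9:.1f}B")  # active: 12.9B
```

Almost all of the gap between the two numbers comes from the expert weights: attention and embeddings are shared, so the active count is well above one eighth of the total.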

Inference Cost

- Transformer: High inference cost due to full parameter activation. Scaling to models like GPT-4 or Llama 2 70B requires powerful hardware.

- MoE: Lower inference cost because only K experts per layer are active. This makes MoE models faster and cheaper to run, especially at large scales. The saving is in per-token compute: all experts must still be held in memory.

Token Routing

- Transformer: No routing. Every token follows the exact same path through all layers.

- MoE: A learned router assigns tokens to experts based on softmax scores. Different tokens select different experts, and different layers may activate different experts, which increases specialization and model capacity.

Model Capacity

- Transformer: To scale capacity, the only option is adding more layers or widening the FFN, both of which increase FLOPs heavily.

- MoE: Can scale total parameters massively without increasing per-token compute. This enables “bigger brains at lower runtime cost.”
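A small calculation makes the capacity argument concrete. The sketch below counts only the feed-forward weights of a single layer and uses the common rule of thumb of about two FLOPs (one multiply, one add) per active weight per token. The helper names, the layer sizes, and the decision to ignore the router's small cost are assumptions for illustration.

```python
def ffn_params(d_model, d_hidden):
    # Up- and down-projection matrices; biases ignored.
    return 2 * d_model * d_hidden

def moe_ffn_stats(d_model, d_hidden, num_experts, top_k):
    per_expert = ffn_params(d_model, d_hidden)
    total = num_experts * per_expert     # weights that must be stored
    active = top_k * per_expert          # weights actually used for one token
    flops_per_token = 2 * active         # rule of thumb: multiply + add per weight
    return total, active, flops_per_token

# Grow the number of experts while keeping top_k fixed at 2.
for n in (2, 8, 64):
    total, active, flops = moe_ffn_stats(4096, 14336, n, top_k=2)
    print(f"experts={n:3d}  total={total / 1e9:6.2f}B  "
          f"active={active / 1e9:.2f}B  flops/token={flops / 1e9:.2f}G")
```

Total parameters grow linearly with the number of experts, while the active weights and the per-token FLOP estimate stay the same on every row. A dense layer would have to pay for every added weight on every token.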

While MoE architectures offer massive capacity with lower inference cost, they introduce several training challenges. The most common issue is expert collapse, where the router repeatedly selects the same experts, leaving others under-trained.

Load imbalance is another challenge: some experts may receive far more tokens than others, leading to uneven learning. To address this, MoE models rely on techniques like noise injection in routing, Top-K masking, and expert capacity limits.

These mechanisms ensure all experts stay active and balanced, but they also make MoE systems more complex to train compared to standard Transformers.
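The three balancing techniques named above can be combined in one small routing function. This is a hedged sketch rather than a production recipe: `route_with_capacity`, the Gaussian noise, the first-come-first-served overflow rule, and the 1.25 capacity factor are all assumptions chosen for the example, and real systems differ in how they handle tokens that overflow.

```python
import numpy as np

def route_with_capacity(logits, top_k, capacity, noise_std=0.0, rng=None):
    """logits: (tokens, num_experts) router outputs.
    Returns a boolean (tokens, num_experts) mask of accepted assignments."""
    if noise_std > 0.0:
        if rng is None:
            rng = np.random.default_rng()
        # Noise injection: perturb the logits so near-ties do not always resolve the same way.
        logits = logits + rng.normal(scale=noise_std, size=logits.shape)
    tokens, num_experts = logits.shape
    chosen = np.argsort(-logits, axis=-1)[:, :top_k]   # Top-K masking: keep the k best experts
    assign = np.zeros((tokens, num_experts), dtype=bool)
    load = np.zeros(num_experts, dtype=int)
    for t in range(tokens):                            # first come, first served (assumed policy)
        for e in chosen[t]:
            if load[e] < capacity:                     # capacity limit: expert still has room
                assign[t, e] = True
                load[e] += 1
    return assign

rng = np.random.default_rng(0)
tokens, num_experts, top_k = 64, 4, 1
logits = rng.normal(size=(tokens, num_experts))
logits[:, 0] += 3.0                                    # simulate a router that favors expert 0
capacity = int(1.25 * tokens * top_k / num_experts)    # assumed capacity factor of 1.25

unlimited = route_with_capacity(logits, top_k, capacity=tokens)
limited = route_with_capacity(logits, top_k, capacity, noise_std=1.0, rng=rng)
print("load without limits:       ", unlimited.sum(axis=0))
print("load with noise + capacity:", limited.sum(axis=0))
```

Without limits, the biased router sends most tokens to expert 0. With the cap in place no expert accepts more than `capacity` tokens, and any token whose chosen expert is full is simply left unassigned in this sketch.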