Massive language fashions at the moment are restricted much less by coaching and extra by how briskly and cheaply we will serve tokens underneath actual visitors. That comes down to a few implementation particulars: how the runtime batches requests, the way it overlaps prefill and decode, and the way it shops and reuses the KV cache. Completely different engines make totally different tradeoffs on these axes, which present up straight as variations in tokens per second, P50/P99 latency, and GPU reminiscence utilization.

This text compares six runtimes that present up repeatedly in manufacturing stacks:

- vLLM

- TensorRT LLM

- Hugging Face Textual content Technology Inference (TGI v3)

- LMDeploy

- SGLang

- DeepSpeed Inference / ZeRO Inference

1. vLLM

Design

vLLM is constructed round PagedAttention. As an alternative of storing every sequence’s KV cache in a big contiguous buffer, it partitions KV into mounted dimension blocks and makes use of an indirection layer so every sequence factors to a listing of blocks.

This provides:

- Very low KV fragmentation (reported <4% waste vs 60–80% in naïve allocators)

- Excessive GPU utilization with steady batching

- Native assist for prefix sharing and KV reuse at block degree

Current variations add KV quantization (FP8) and combine FlashAttention type kernels.

Efficiency

vLLM analysis:

- vLLM achieves 14–24× greater throughput than Hugging Face Transformers and 2.2–3.5× greater than early TGI for LLaMA fashions on NVIDIA GPUs.

KV and reminiscence habits

- PagedAttention gives a KV structure that’s each GPU pleasant and fragmentation resistant.

- FP8 KV quantization reduces KV dimension and improves decode throughput when compute isn’t the bottleneck.

The place it suits

- Default excessive efficiency engine while you want a normal LLM serving backend with good throughput, good TTFT, and {hardware} flexibility.

2. TensorRT LLM

Design

TensorRT LLM is a compilation primarily based engine on prime of NVIDIA TensorRT. It generates fused kernels per mannequin and form, and exposes an executor API utilized by frameworks equivalent to Triton.

Its KV subsystem is express and have wealthy:

- Paged KV cache

- Quantized KV cache (INT8, FP8, with some combos nonetheless evolving)

- Round buffer KV cache

- KV cache reuse, together with offloading KV to CPU and reusing it throughout prompts to scale back TTFT

NVIDIA experiences that CPU primarily based KV reuse can cut back time to first token by as much as 14× on H100 and much more on GH200 in particular eventualities.

Efficiency

TensorRT LLM is extremely tunable, so outcomes range. Frequent patterns from public comparisons and vendor benchmarks:

- Very low single request latency on NVIDIA GPUs when engines are compiled for the precise mannequin and configuration.

- At reasonable concurrency, it may be tuned both for low TTFT or for top throughput; at very excessive concurrency, throughput optimized profiles push P99 up resulting from aggressive batching.

KV and reminiscence habits

- Paged KV plus quantized KV provides sturdy management over reminiscence use and bandwidth.

- Executor and reminiscence APIs allow you to design cache conscious routing insurance policies on the utility layer.

The place it suits

- Latency crucial workloads and NVIDIA solely environments, the place groups can put money into engine builds and per mannequin tuning.

3. Hugging Face TGI v3

Design

Textual content Technology Inference (TGI) is a server centered stack with:

- Rust primarily based HTTP and gRPC server

- Steady batching, streaming, security hooks

- Backends for PyTorch and TensorRT and tight Hugging Face Hub integration

TGI v3 provides a brand new lengthy context pipeline:

- Chunked prefill for lengthy inputs

- Prefix KV caching so lengthy dialog histories will not be recomputed on every request

Efficiency

For typical prompts, current third occasion work reveals:

- vLLM usually edges out TGI on uncooked tokens per second at excessive concurrency resulting from PagedAttention, however the distinction isn’t large on many setups.

- TGI v3 processes round 3× extra tokens and is as much as 13× quicker than vLLM on lengthy prompts, underneath a setup with very lengthy histories and prefix caching enabled.

Latency profile:

- P50 for brief and mid size prompts is just like vLLM when each are tuned with steady batching.

- For lengthy chat histories, prefill dominates in naive pipelines; TGI v3’s reuse of earlier tokens provides a big win in TTFT and P50.

KV and reminiscence habits

- TGI makes use of KV caching with paged consideration type kernels and reduces reminiscence footprint by means of chunking of prefill and different runtime adjustments.

- It integrates quantization by means of bits and bytes and GPTQ and runs throughout a number of {hardware} backends.

The place it suits

- Manufacturing stacks already on Hugging Face, particularly for chat type workloads with lengthy histories the place prefix caching provides massive actual world positive factors.

4. LMDeploy

Design

LMDeploy is a toolkit for compression and deployment from the InternLM ecosystem. It exposes two engines:

- TurboMind: excessive efficiency CUDA kernels for NVIDIA GPUs

- PyTorch engine: versatile fallback

Key runtime options:

- Persistent, steady batching

- Blocked KV cache with a supervisor for allocation and reuse

- Dynamic break up and fuse for consideration blocks

- Tensor parallelism

- Weight solely and KV quantization (together with AWQ and on-line INT8 / INT4 KV quant)

LMDeploy delivers as much as 1.8× greater request throughput than vLLM, attributing this to persistent batching, blocked KV and optimized kernels.

Efficiency

Evaluations present:

- For 4 bit Llama type fashions on A100, LMDeploy can attain greater tokens per second than vLLM underneath comparable latency constraints, particularly at excessive concurrency.

- It additionally experiences that 4 bit inference is about 2.4× quicker than FP16 for supported fashions.

Latency:

- Single request TTFT is in the identical ballpark as different optimized GPU engines when configured with out excessive batch limits.

- Beneath heavy concurrency, persistent batching plus blocked KV let LMDeploy maintain excessive throughput with out TTFT collapse.

KV and reminiscence habits

- Blocked KV cache trades contiguous per sequence buffers for a grid of KV chunks managed by the runtime, comparable in spirit to vLLM’s PagedAttention however with a distinct inner structure.

- Help for weight and KV quantization targets massive fashions on constrained GPUs.

The place it suits

- NVIDIA centric deployments that need most throughput and are comfy utilizing TurboMind and LMDeploy particular tooling.

5. SGLang

Design

SGLang is each:

- A DSL for constructing structured LLM applications equivalent to brokers, RAG workflows and power pipelines

- A runtime that implements RadixAttention, a KV reuse mechanism that shares prefixes utilizing a radix tree construction slightly than easy block hashes.

RadixAttention:

- Shops KV for a lot of requests in a prefix tree keyed by tokens

- Allows excessive KV hit charges when many calls share prefixes, equivalent to few shot prompts, multi flip chat, or software chains

Efficiency

Key Insights:

- SGLang achieves as much as 6.4× greater throughput and as much as 3.7× decrease latency than baseline methods equivalent to vLLM, LMQL and others on structured workloads.

- Enhancements are largest when there’s heavy prefix reuse, for instance multi flip chat or analysis workloads with repeated context.

Reported KV cache hit charges vary from roughly 50% to 99%, and cache conscious schedulers get near the optimum hit fee on the measured benchmarks.

KV and reminiscence habits

- RadixAttention sits on prime of paged consideration type kernels and focuses on reuse slightly than simply allocation.

- SGLang integrates effectively with hierarchical context caching methods that transfer KV between GPU and CPU when sequences are lengthy, though these methods are normally applied as separate initiatives.

The place it suits

- Agentic methods, software pipelines, and heavy RAG purposes the place many calls share massive immediate prefixes and KV reuse issues on the utility degree.

6. DeepSpeed Inference / ZeRO Inference

Design

DeepSpeed gives two items related for inference:

- DeepSpeed Inference: optimized transformer kernels plus tensor and pipeline parallelism

- ZeRO Inference / ZeRO Offload: methods that offload mannequin weights, and in some setups KV cache, to CPU or NVMe to run very massive fashions on restricted GPU reminiscence

ZeRO Inference focuses on:

- Preserving little or no mannequin weights resident in GPU

- Streaming tensors from CPU or NVMe as wanted

- Concentrating on throughput and mannequin dimension slightly than low latency

Efficiency

Within the ZeRO Inference OPT 30B instance on a single V100 32GB:

- Full CPU offload reaches about 43 tokens per second

- Full NVMe offload reaches about 30 tokens per second

- Each are 1.3–2.4× quicker than partial offload configurations, as a result of full offload permits bigger batch sizes

These numbers are small in comparison with GPU resident LLM runtimes on A100 or H100, however they apply to a mannequin that doesn’t match natively in 32GB.

A current I/O characterization of DeepSpeed and FlexGen confirms that offload primarily based methods are dominated by small 128 KiB reads and that I/O habits turns into the principle bottleneck.

KV and reminiscence habits

- Mannequin weights and typically KV blocks are offloaded to CPU or SSD to suit fashions past GPU capability.

- TTFT and P99 are excessive in comparison with pure GPU engines, however the tradeoff is the power to run very massive fashions that in any other case wouldn’t match.

The place it suits

- Offline or batch inference, or low QPS companies the place mannequin dimension issues greater than latency and GPU depend.

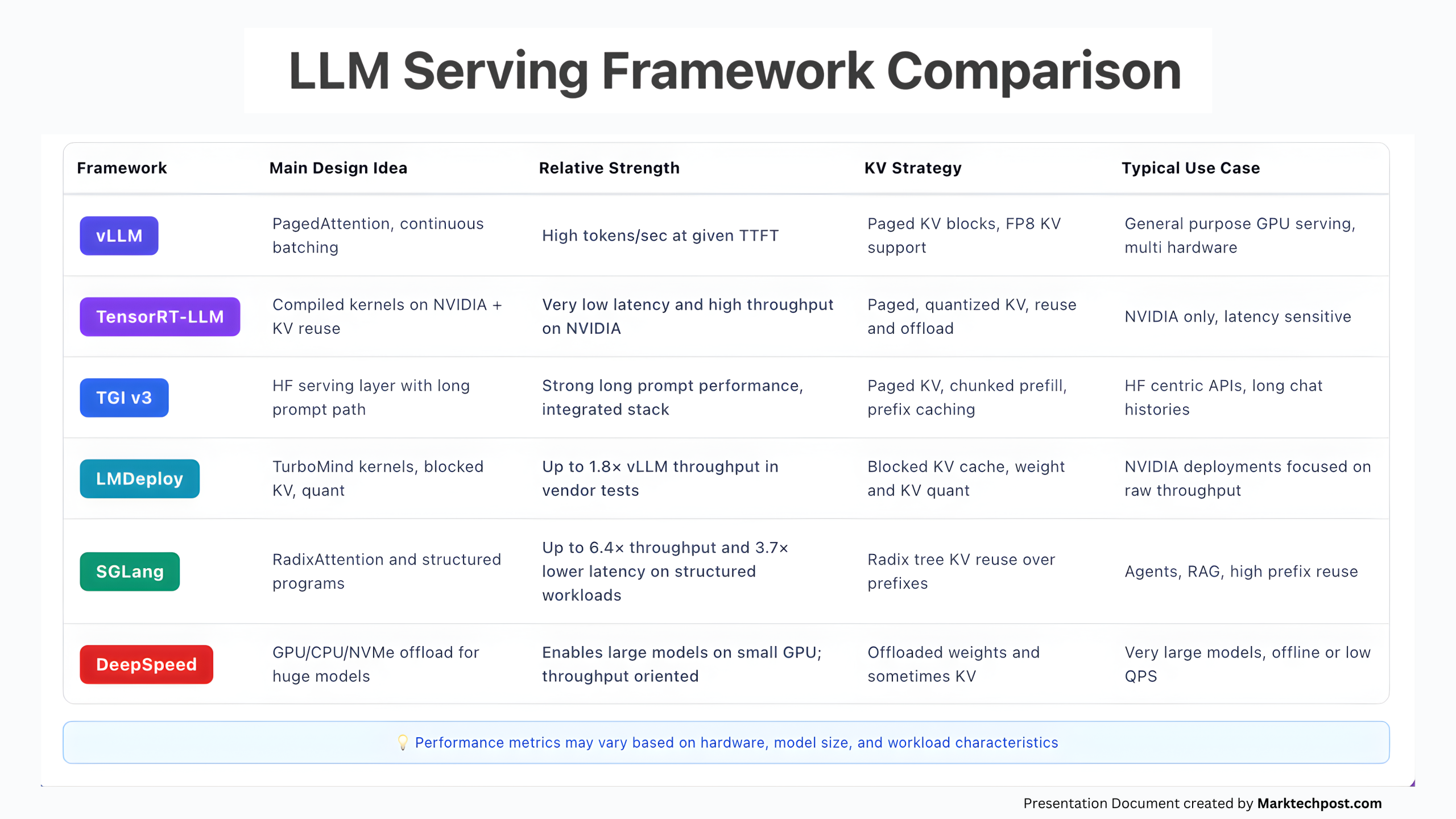

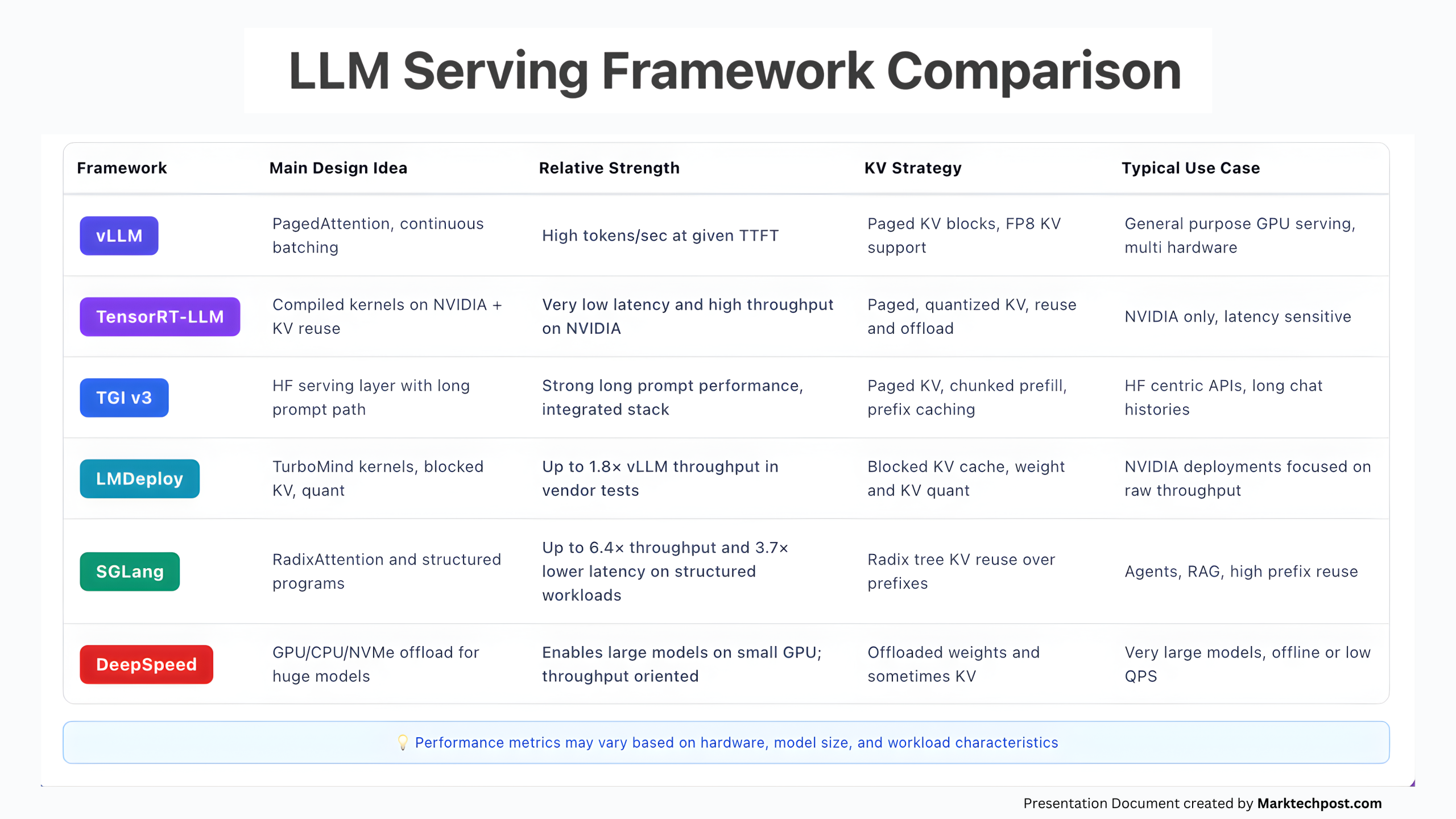

Comparability Tables

This desk summarizes the principle tradeoffs qualitatively:

| Runtime | Primary design thought | Relative power | KV technique | Typical use case |

|---|---|---|---|---|

| vLLM | PagedAttention, steady batching | Excessive tokens per second at given TTFT | Paged KV blocks, FP8 KV assist | Normal goal GPU serving, multi {hardware} |

| TensorRT LLM | Compiled kernels on NVIDIA + KV reuse | Very low latency and excessive throughput on NVIDIA | Paged, quantized KV, reuse and offload | NVIDIA solely, latency delicate |

| TGI v3 | HF serving layer with lengthy immediate path | Robust lengthy immediate efficiency, built-in stack | Paged KV, chunked prefill, prefix caching | HF centric APIs, lengthy chat histories |

| LMDeploy | TurboMind kernels, blocked KV, quant | As much as 1.8× vLLM throughput in vendor checks | Blocked KV cache, weight and KV quant | NVIDIA deployments centered on uncooked throughput |

| SGLang | RadixAttention and structured applications | As much as 6.4× throughput and three.7× decrease latency on structured workloads | Radix tree KV reuse over prefixes | Brokers, RAG, excessive prefix reuse |

| DeepSpeed | GPU CPU NVMe offload for large fashions | Allows massive fashions on small GPU; throughput oriented | Offloaded weights and typically KV | Very massive fashions, offline or low QPS |

Selecting a runtime in apply

For a manufacturing system, the selection tends to break down to a couple easy patterns:

- You desire a sturdy default engine with minimal customized work: You can begin with vLLM. It provides you good throughput, cheap TTFT, and stable KV dealing with on frequent {hardware}.

- You’re dedicated to NVIDIA and wish superb grained management over latency and KV: You should use TensorRT LLM, probably behind Triton or TGI. Plan for mannequin particular engine builds and tuning.

- Your stack is already on Hugging Face and also you care about lengthy chats: You should use TGI v3. Its lengthy immediate pipeline and prefix caching are very efficient for dialog type visitors.

- You need most throughput per GPU with quantized fashions: You should use LMDeploy with TurboMind and blocked KV, particularly for 4 bit Llama household fashions.

- You’re constructing brokers, software chains or heavy RAG methods: You should use SGLang and design prompts in order that KV reuse by way of RadixAttention is excessive.

- You should run very massive fashions on restricted GPUs: You should use DeepSpeed Inference / ZeRO Inference, settle for greater latency, and deal with the GPU as a throughput engine with SSD within the loop.

Total, all these engines are converging on the identical thought: KV cache is the actual bottleneck useful resource. The winners are the runtimes that deal with KV as a firstclass information construction to be paged, quantized, reused and offloaded, not only a massive tensor slapped into GPU reminiscence.