Today’s advertising demands more than just eye-catching images. It needs creative that actually fits the tastes, segments, and expectations of a target audience. This is the natural next step after understanding your audience: using what you know about a segment’s preferences to create imagery that truly resonates because it is customized to that audience.

Multimodal Retrieval-Augmented Generation (RAG) offers a practical way to do this at scale. It works by combining a text-based understanding of a target segment (e.g., “outdoor-loving dog owner”) with fast search and retrieval of semantically relevant real images. These retrieved images then serve as context for generating a new creative. This bridges the gap between customer data and high-quality content, making sure the output connects with the target audience.

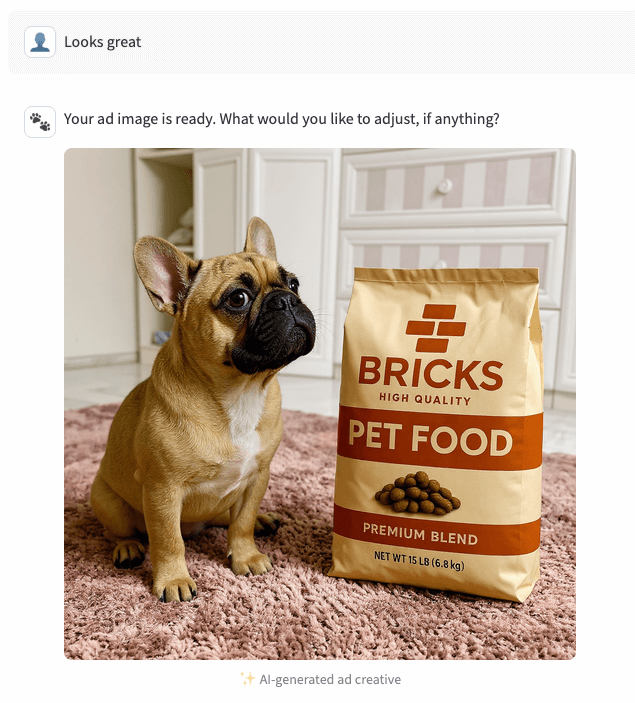

This blog post demonstrates how modern AI, through image retrieval and multimodal RAG, can make ad creative more grounded and relevant, all powered end-to-end with Databricks. We’ll show how this works in practice for a hypothetical pet food brand called “Bricks” running pet-themed campaigns, but the same technique can be applied to any industry where personalization and visual quality matter.

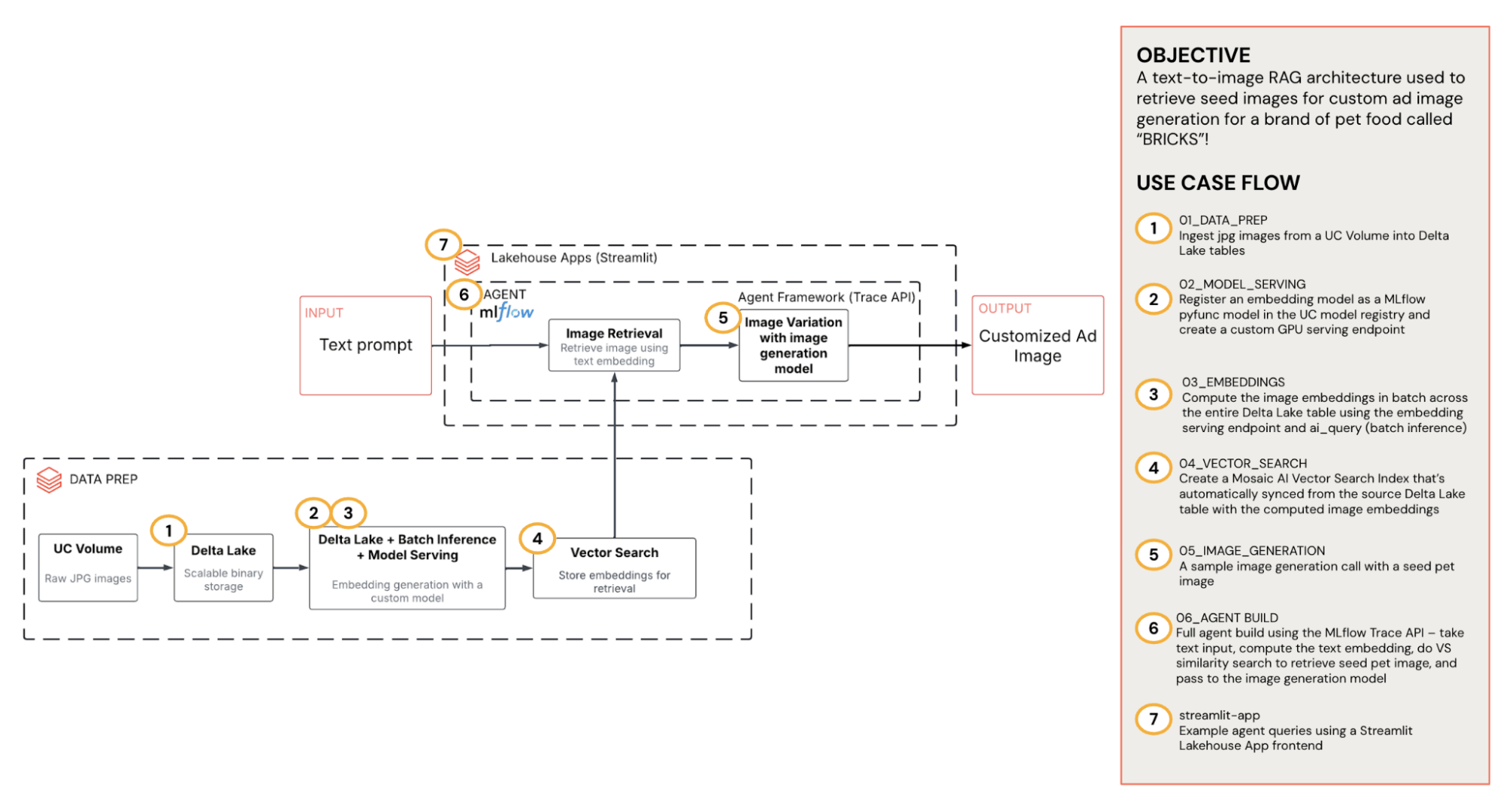

Solution Overview

This solution turns audience understanding into relevant, on-brand visuals by leveraging Unity Catalog, Agent Framework, Model Serving, Batch Inference, Vector Search, and Apps. The diagram below provides a high-level overview of the architecture.

- Storage & Governance (Unity Catalog): All source images and generated ads live in a Unity Catalog (UC) Volume. We also ingest the source images into a Delta table for batch processing.

- Bring-your-own embeddings: A lightweight CLIP image encoder serving endpoint converts images to vectors. We run batch inference over the Delta table to produce embeddings at scale and then store them back alongside the UC Volume paths.

- Vector Search retrieval: The embeddings are indexed in a Databricks Vector Search index that is automatically synced from the source Delta table for low-latency semantic lookup. Given a short text prompt, the index returns the top-K UC paths.

- RAG agent: A tool-using chat agent coordinates the workflow: take text input → propose a pet description → compute the text embedding → call Vector Search to retrieve the seed image → pass the seed to the image generation model.

- Databricks app: A user-friendly app exposes the agent to users and guides them in generating a personalized ad image for their segment.

Because everything flows through UC, the system is secure by default, observable (MLflow tracing on retrieval and generation), and easy to evolve (swap models, tune prompts). Let’s dive deeper.

Storage & Governance (Unity Catalog)

All the pet image assets (seed pet images, brand image, and final ads) live in a UC Volume.
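As an illustration of the layout, Volume paths follow the /Volumes/<catalog>/<schema>/<volume>/... convention. The sketch below builds such paths; the catalog, schema, volume, folder, and file names are hypothetical placeholders, not the ones used in this project.

```python
from pathlib import PurePosixPath

# Hypothetical names, for illustration only.
CATALOG, SCHEMA, VOLUME = "main", "pet_ads", "images"


def volume_path(*parts: str) -> str:
    """Build a /Volumes/<catalog>/<schema>/<volume>/... path."""
    return str(PurePosixPath("/Volumes", CATALOG, SCHEMA, VOLUME, *parts))


seed_path = volume_path("seeds", "french_bulldog_001.jpg")
brand_path = volume_path("brand", "bricks_bag.png")
final_path = volume_path("ads", "ad_0001.png")
```

Keeping seeds, the brand image, and final ads under one Volume means a single set of grants covers every asset in the flow.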

UC Volumes provide an efficient storage solution for our image data with a FUSE mount point, and they are useful because they allow for a single governance plane. The same ACLs apply whether you access the files from notebooks, serving endpoints, or the Databricks app. It also means there are no blob keys in our code. We pass paths (not bytes) across services.

For fast indexing and governance, we then mirror the Volume into a Delta table that loads the raw image bytes (as a BINARY column) alongside metadata. We then convert the bytes into a base64-encoded string (required by the model serving endpoint). This makes downstream batch jobs (like embedding) simple and efficient.
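The bytes-to-base64 conversion can be sketched with the standard library alone. This is a minimal illustration rather than the pipeline's actual code; the helper names are made up for the example, and in a Spark job the same transformation would typically run as a column expression.

```python
import base64


def to_base64_str(image_bytes: bytes) -> str:
    """Encode raw image bytes as a UTF-8 base64 string (JSON-safe)."""
    return base64.b64encode(image_bytes).decode("utf-8")


def from_base64_str(encoded: str) -> bytes:
    """Invert to_base64_str, recovering the original bytes."""
    return base64.b64decode(encoded)


# The 8-byte PNG signature stands in for a real image here.
raw = b"\x89PNG\r\n\x1a\n"
encoded = to_base64_str(raw)
```

Base64 matters because serving endpoints exchange JSON, which cannot carry raw binary.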

Now there’s one queryable table, and we’ve also preserved the original Volume paths for human readability and app use.

Bring-your-own Embeddings

CLIP is a lightweight model that pairs an image encoder with a transformer text encoder, trained via contrastive learning to produce aligned image-text embeddings for zero-shot classification and retrieval. To keep retrieval fast and reproducible, we expose the CLIP encoders behind a Databricks Model Serving endpoint that we can use for both online lookups and offline batch inference. We simply package the encoder as an MLflow pyfunc model, log and register it as a UC model, serve it with custom model serving, and then call it from SQL with ai_query to fill the embeddings column in the Delta table.

Step 1: Package CLIP as an MLflow pyfunc

We wrap CLIP ViT-L/14 as a small pyfunc that accepts a base64 image string and returns a normalized vector. After logging, we register the model for serving.
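The normalization step is the part of the wrapper that is easy to show in isolation. The sketch below assumes "normalized" means L2 (unit-length) normalization, which is the usual choice for CLIP retrieval; the function name is hypothetical and the CLIP forward pass itself is omitted.

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length; an all-zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        return [0.0 for _ in vec]
    return [x / norm for x in vec]


# Toy 2-d vector; real CLIP ViT-L/14 embeddings are much wider.
unit = l2_normalize([3.0, 4.0])
```

With unit-length vectors, a plain dot product equals cosine similarity, so image and text embeddings can be compared directly.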

Step 2: Log the model and register it in UC

We log the model using MLflow and register it in the Unity Catalog model registry.

Step 3: Serve the encoder

We create a GPU serving endpoint for the registered model. This gives us a low-latency, versioned API we can call.

Step 4: Batch inference with ai_query

With images landed in a Delta table, we compute embeddings in place using SQL. The result is a new table with an image_embeddings column, ready for Vector Search.
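A hedged sketch of what that SQL could look like, built as a string in Python. The table names, endpoint name, and the image_base64 column are assumptions made for illustration; only ai_query and the image_embeddings column come from the text above, and the exact ai_query arguments should be checked against the Databricks SQL reference for your runtime.

```python
def build_embedding_sql(
    source_table: str,
    target_table: str,
    endpoint: str,
    image_col: str = "image_base64",  # hypothetical column name
) -> str:
    """Return a CTAS statement that adds an image_embeddings column via ai_query."""
    return (
        f"CREATE OR REPLACE TABLE {target_table} AS\n"
        f"SELECT\n"
        f"  *,\n"
        f"  ai_query('{endpoint}', {image_col}, returnType => 'ARRAY<FLOAT>')"
        f" AS image_embeddings\n"
        f"FROM {source_table}"
    )


# Hypothetical table and endpoint names.
sql = build_embedding_sql(
    "main.pet_ads.pet_images",
    "main.pet_ads.pet_image_embeddings",
    "clip-encoder",
)
```

In a notebook, the resulting string would be handed to spark.sql(sql).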

Vector Search Retrieval

After we’ve materialized the image embeddings in Delta, we make them searchable with Databricks Vector Search. The pattern is to create a Delta Sync index with self-managed embeddings, then at runtime embed the text prompt using CLIP and run a top-K similarity search. The service returns small, structured results (paths + optional metadata), and we pass UC Volume paths forward (not raw bytes) so the rest of the pipeline stays light and governed.

Step 1: Indexing the embeddings table

Create the vector index once. It continuously syncs from our embeddings table and serves low-latency queries.

Because the index is Delta synced, any new or updated rows of embeddings will automatically be indexed. Access to both the table and the index inherits Unity Catalog ACLs, so you don’t need separate permissions.
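The index definition can be sketched as a set of keyword arguments for create_delta_sync_index from the databricks-vectorsearch client. The endpoint, table, index, and primary-key names are hypothetical; the 768 dimension is the embedding width of CLIP ViT-L/14, and CONTINUOUS is assumed from the "continuously syncs" description.

```python
from typing import Any, Dict

CLIP_VIT_L14_DIM = 768  # embedding width of CLIP ViT-L/14


def index_spec(endpoint_name: str, source_table: str, index_name: str) -> Dict[str, Any]:
    """Keyword arguments for a Delta Sync index over self-managed embeddings."""
    return {
        "endpoint_name": endpoint_name,
        "source_table_name": source_table,
        "index_name": index_name,
        "pipeline_type": "CONTINUOUS",  # assumed; TRIGGERED is the alternative
        "primary_key": "path",  # hypothetical key column holding the UC path
        "embedding_dimension": CLIP_VIT_L14_DIM,
        "embedding_vector_column": "image_embeddings",
    }


def create_index(client: Any, spec: Dict[str, Any]) -> Any:
    """Create the index; `client` is expected to be a VectorSearchClient."""
    return client.create_delta_sync_index(**spec)


# Hypothetical endpoint, table, and index names.
spec = index_spec(
    "pet-ads-vs-endpoint",
    "main.pet_ads.pet_image_embeddings",
    "main.pet_ads.pet_image_index",
)
```

Passing the client in as an argument keeps the spec testable without a workspace connection.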

Step 2: Querying top-K candidates at runtime

At inference time, we embed the text prompt and query the top three results with a similarity search.
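To make the ranking concrete, here is an in-memory stand-in for what the index does. It is not the Vector Search API: it scores a query against a toy dictionary of unit-length vectors by dot product and keeps the best three. All paths and vectors are invented for the example.

```python
from typing import Dict, List, Sequence, Tuple


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product; equals cosine similarity when both vectors are unit length."""
    return sum(x * y for x, y in zip(a, b))


def top_k(
    query: Sequence[float], index: Dict[str, Sequence[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Return the k (path, score) pairs with the highest similarity, best first."""
    scored = [(path, dot(query, vec)) for path, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy 2-d "embeddings" keyed by hypothetical Volume paths.
toy_index = {
    "/Volumes/demo/pets/seeds/a.jpg": [1.0, 0.0],
    "/Volumes/demo/pets/seeds/b.jpg": [0.6, 0.8],
    "/Volumes/demo/pets/seeds/c.jpg": [0.0, 1.0],
    "/Volumes/demo/pets/seeds/d.jpg": [-1.0, 0.0],
}
results = top_k([1.0, 0.0], toy_index, k=3)
```

Against the real index, the equivalent call would go through the index object's similarity_search method with the query vector and a result count of three.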

When we pass the results to the agent later, we’ll keep the response minimal and actionable. We return just the ranked UC paths, which the agent can cycle through (if a user rejects the initial seed image) without re-querying the index.

RAG Agent

Step 1: Image generation serving endpoint (retrieval + generation)

Here we create an endpoint that accepts a short text prompt and orchestrates two steps:

- Retrieval: embeds the text, queries the Vector Search index, and returns the top-3 UC Volume paths to the seed images (rank-ordered)

- Generation: loads the chosen seed, calls the image generation API, uploads the final image to the UC Volume, and returns its path

The generation step is optional and controlled by a toggle, depending on whether we want to run image generation or just retrieval.
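The two-step flow with its toggle can be sketched as a plain function. The retrieve and generate callables stand in for the Vector Search lookup and the image generation call; the function name, the response keys, and the optional seed_paths argument (one possible way to reuse earlier candidates) are assumptions for this example.

```python
from typing import Callable, Dict, List, Optional


def handle_request(
    prompt: str,
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str], str],
    replicate_toggle: bool = False,
    seed_index: int = 0,
    seed_paths: Optional[List[str]] = None,
) -> Dict[str, object]:
    """Retrieve up to three seed paths, then optionally generate from the chosen one."""
    if seed_paths is None:
        # Retrieval: rank-ordered UC Volume paths for the prompt.
        seed_paths = retrieve(prompt)[:3]
    if not 0 <= seed_index < len(seed_paths):
        raise IndexError("seed_index is outside the retrieved candidates")
    # Generation runs only when the toggle is on.
    final_path = generate(seed_paths[seed_index]) if replicate_toggle else None
    return {"seed_paths": seed_paths, "seed_index": seed_index, "final_path": final_path}
```

With the toggle off the function is pure retrieval, which is what lets the agent show candidates before paying for generation.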

For the generation step, we call out to Replicate using the Kontext multi-image max model. This choice is pragmatic:

- Multi-image conditioning: Kontext can take a seed pet photo and a brand image and compose a realistic ad

- Photorealism + background retention: Kontext tends to keep the pet’s original pose/setting while placing the product naturally

- Quality vs. latency: Kontext offers a good balance between quality and latency. It produces a relatively high-quality image in about 7-10 seconds. Other high-quality image generation models that we tested, such as gpt-4o via the gpt-image-1 API, generate higher-quality images but take about 50 seconds to 1 minute.

The generation call sends the seed pet image and the brand image to Kontext on Replicate.

For the write-back to the UC Volume, we decode the base64 output from the generator and write it via the Files API.
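A minimal sketch of the write-back step, with the upload injected as a callable so the logic can be exercised without a workspace. The function name and the destination path are hypothetical; with the Databricks SDK, the upload callable could wrap the Files API upload method on a WorkspaceClient, which is an assumption about wiring rather than the code used here.

```python
import base64
import io
from typing import BinaryIO, Callable


def write_back(
    image_b64: str,
    dest_path: str,
    upload: Callable[[str, BinaryIO], None],
) -> str:
    """Decode the generator's base64 output and upload it; return the UC path."""
    png_bytes = base64.b64decode(image_b64)
    upload(dest_path, io.BytesIO(png_bytes))
    # Downstream services receive only the path, never the bytes.
    return dest_path
```

Returning the path rather than the image keeps the endpoint response small and leaves access control to Unity Catalog.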

If desired, it’s easy to replace Kontext/Replicate with any external image API (e.g., OpenAI) or an internal model served on Databricks without changing the rest of the pipeline. Simply replace the internals of the _replicate_image_generation method and keep the input contract (pet seed bytes + brand bytes) and output (PNG bytes → UC upload) identical. The chat agent, retrieval, and app stay the same because they operate on UC paths, not image payloads.
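One way to picture that contract is as a callable type: two byte strings in, PNG bytes out. The sketch below is illustrative only; ImageGenerator, make_ad, and fake_generator are names invented here, and the PNG signature check is an added safeguard rather than something the original method is known to do.

```python
from typing import Callable

# Contract: (seed image bytes, brand image bytes) -> PNG bytes.
ImageGenerator = Callable[[bytes, bytes], bytes]

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_ad(seed_bytes: bytes, brand_bytes: bytes, generator: ImageGenerator) -> bytes:
    """Run any backend that honours the contract and verify it returned a PNG."""
    png = generator(seed_bytes, brand_bytes)
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("generator did not return PNG bytes")
    return png


def fake_generator(seed_bytes: bytes, brand_bytes: bytes) -> bytes:
    """Stand-in backend for tests: PNG signature followed by both inputs."""
    return PNG_SIGNATURE + seed_bytes + brand_bytes


ad = make_ad(b"seed", b"brand", fake_generator)
```

Swapping providers then means supplying a different callable, with no change to the caller.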

Step 2: Chat agent

The chat agent is the final serving endpoint. It holds the conversation policy and calls the image-gen endpoint as a tool. It proposes a pet type, retrieves the seed image candidates, and only generates the final ad image on the user’s confirmation.

The tool schema is kept minimal.
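The actual schema is not reproduced here. A minimal sketch in the common function-calling format might look like the following; the tool name, descriptions, and the prompt field are assumptions, while seed_index and replicate_toggle are the parameters named elsewhere in this post.

```python
IMAGE_TOOL = {
    "type": "function",
    "function": {
        "name": "generate_pet_ad",  # hypothetical tool name
        "description": "Retrieve seed pet images and optionally generate the final ad.",
        "parameters": {
            "type": "object",
            "properties": {
                "prompt": {
                    "type": "string",
                    "description": "Short pet description, e.g. 'French Bulldog'.",
                },
                "seed_index": {
                    "type": "integer",
                    "minimum": 0,
                    "maximum": 2,
                    "description": "Which of the top-3 seeds to use.",
                },
                "replicate_toggle": {
                    "type": "boolean",
                    "description": "True to run generation, false for retrieval only.",
                },
            },
            "required": ["prompt"],
        },
    },
}
```

Three parameters are enough to cover the propose, retrieve, and generate turns of the conversation.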

We then create the tool execution function through which the agent calls the image-gen endpoint, which returns a structured JSON response.

The replicate_toggle parameter controls whether image generation runs.
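A sketch of such a tool execution function, with the endpoint call injected so it runs without a serving endpoint. The function name, the prompt field, and the default values are assumptions; seed_index and replicate_toggle follow the parameter names used in this post.

```python
import json
from typing import Any, Callable, Dict


def execute_tool(
    arguments_json: str,
    call_endpoint: Callable[[Dict[str, Any]], Any],
) -> Dict[str, Any]:
    """Parse the model's tool arguments, call the image-gen endpoint, return JSON."""
    args = json.loads(arguments_json)
    payload = {
        "prompt": args["prompt"],
        "seed_index": int(args.get("seed_index", 0)),
        # Generation stays off unless the agent explicitly asks for it.
        "replicate_toggle": bool(args.get("replicate_toggle", False)),
    }
    raw = call_endpoint(payload)
    # Accept either a JSON string or an already-parsed dict from the endpoint.
    return json.loads(raw) if isinstance(raw, str) else raw
```

Defaulting the toggle to false means a malformed or partial tool call can never trigger a paid generation by accident.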

Why split endpoints?

We separate the image-gen and chat agent endpoints for a couple of reasons:

- Separation of concerns: The image-gen endpoint is a deterministic service (retrieve top-K → optionally generate one → return UC paths). The chat agent endpoint owns policy and user experience. Both stay small, testable, and replaceable

- Modular extensibility: The agent can grow by adding tools later behind the same interface (e.g., copy or CTA suggestions, structured data retrieval) without changing the image-gen service

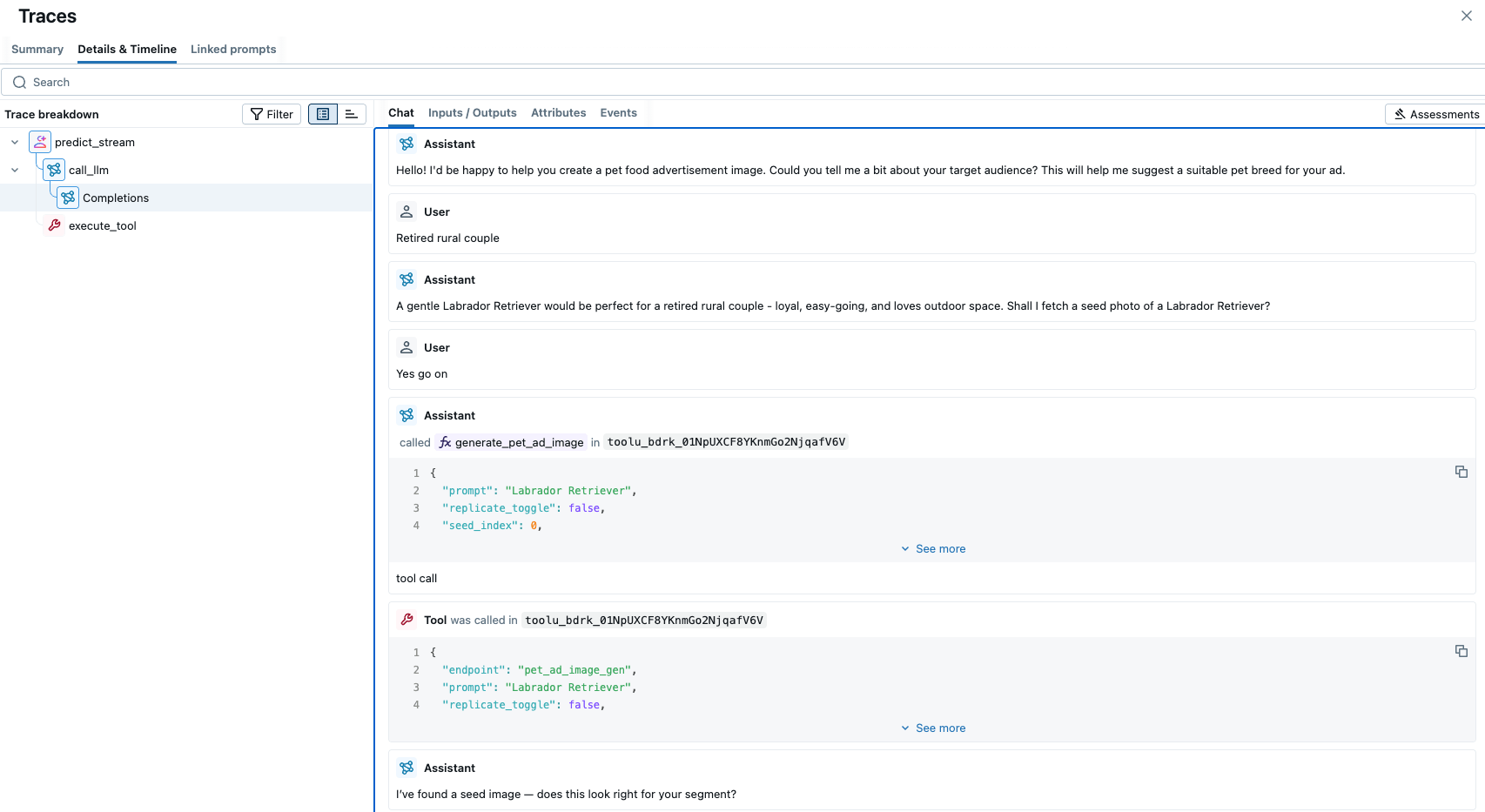

The above is all built on the Databricks Agent Framework, which gives us the tools to turn retrieval + generation into a reliable, governed agent. First, the framework lets us register the image-gen service (including Vector Search) as tools and invoke them deterministically from the policy/prompt. It also provides us with production scaffolding out of the box. We use the ResponsesAgent interface for the chat loop, with MLflow tracing on every step. This gives full observability for debugging and operating a multi-step workflow in production. The MLflow tracing UI lets us explore this visually.

Databricks App

The Streamlit app presents a single conversational experience that lets the user talk to the chat agent and renders the images. It combines all the pieces we’ve built into a single, governed product experience.

How it comes together:

- Governance and permissions: The app runs in the Databricks workspace and talks directly to our chat agent endpoint. With on-behalf-of credentials, the app can download seed/final images by UC path via the Files API under the viewer’s permissions. That gives per-user access control and a clear audit trail

- Config as code: App settings (e.g., AGENT_ENDPOINT) are passed via environment variables/secrets

- Clean interfaces: The chat surface sends a compact Responses payload to the agent and receives a tiny JSON pointer back with a UC Volume path. The UI then downloads the image and renders it to the user

There are two key touchpoints in the app code: calling the agent endpoint, and rendering the image by UC Volume path.
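The two touchpoints can be sketched without Streamlit or network calls: one helper builds the Responses-style payload from the chat history, and another extracts the UC path from the agent's JSON pointer. The image_path key, the default endpoint name, and both helper names are assumptions; AGENT_ENDPOINT is the setting named above.

```python
import json
import os
from typing import Dict, List, Optional

# Endpoint name comes from the environment; the default is a hypothetical placeholder.
AGENT_ENDPOINT = os.environ.get("AGENT_ENDPOINT", "pet-ad-agent")


def build_payload(history: List[Dict[str, str]]) -> Dict[str, object]:
    """Build a compact Responses-style request from the chat history."""
    return {"input": [{"role": m["role"], "content": m["content"]} for m in history]}


def extract_image_path(agent_text: str) -> Optional[str]:
    """Return the UC Volume path from the agent's JSON pointer, if there is one."""
    try:
        data = json.loads(agent_text)
    except json.JSONDecodeError:
        return None  # plain conversational text, nothing to render
    path = data.get("image_path") if isinstance(data, dict) else None
    if isinstance(path, str) and path.startswith("/Volumes/"):
        return path
    return None


payload = build_payload([{"role": "user", "content": "young urban professionals"}])
pointer = extract_image_path('{"image_path": "/Volumes/demo/pets/ads/ad_1.png"}')
```

In the app, the returned path would then be downloaded through the Files API and handed to Streamlit's image widget.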

End-to-end Example

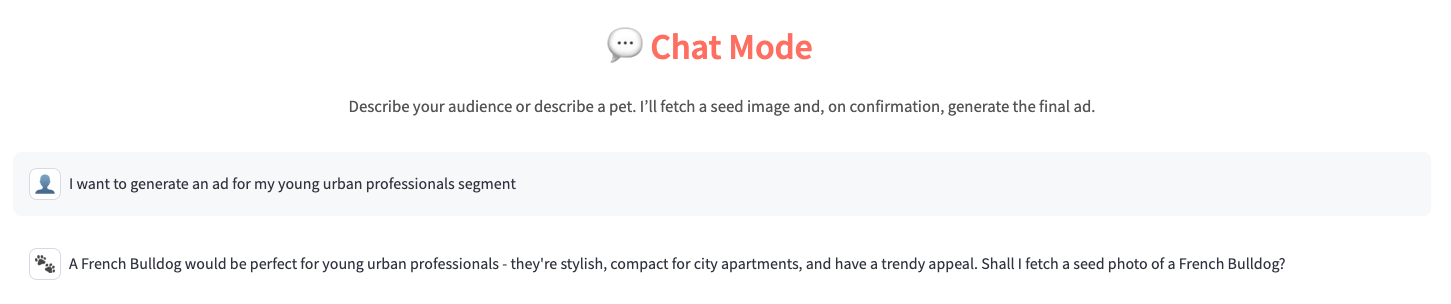

Let’s stroll by way of a single circulation that ties the entire system collectively. Think about a marketer desires to generate a custom-made pet advert picture for “younger city professionals”.

1. Suggest

The chat agent interprets the section and proposes a kind of pet e.g., French Bulldog.

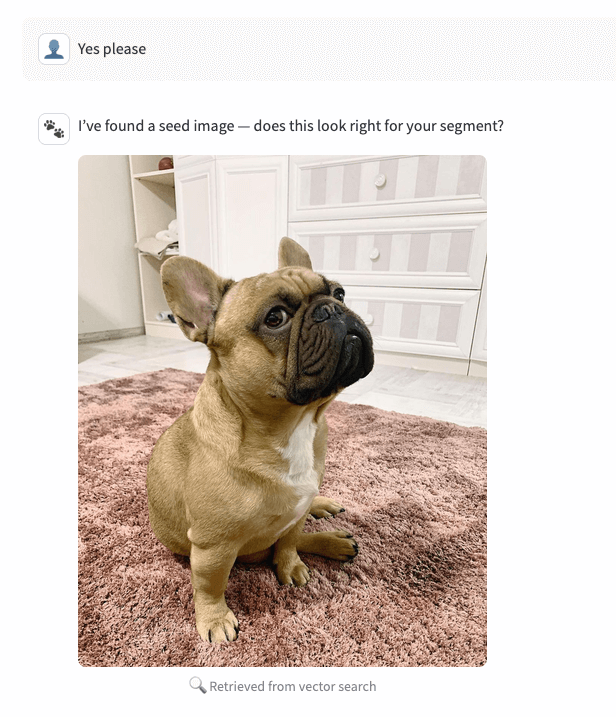

2. Retrieve (instrument name, no era)

On “sure”, the agent calls the image-gen endpoint in retrieval mode and returns the highest three pictures (Quantity paths) from Vector Search ranked by similarity. The app shows candidate #0 because the seed picture.

3. Verify (or iterate)

If the consumer says one thing to the impact of “seems proper”, we proceed. If they are saying “not fairly”, the agent increments seed_index to 1 (then 2) and re-uses the identical top-3 set (no further vector queries) to point out the subsequent possibility. If after three pictures, the consumer continues to be not comfortable, the agent will counsel a brand new pet description and set off one other Vector Search name. This retains the UX snappy and deterministic.
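The confirm-or-iterate policy amounts to a small state machine. The sketch below is one way to express it; the SeedState class, the on_feedback function, and the action strings are hypothetical, while seed_index and the three-candidate limit come from the description above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SeedState:
    """Ranked candidate paths plus the index of the one currently shown."""
    paths: List[str]
    seed_index: int = 0


def on_feedback(state: SeedState, accepted: bool) -> str:
    """Return the next action: 'generate', 'show_next', or 'propose_new'."""
    if accepted:
        return "generate"
    if state.seed_index + 1 < len(state.paths):
        # Reuse the cached top-3; no new Vector Search query.
        state.seed_index += 1
        return "show_next"
    # All candidates rejected: propose a new description and retrieve again.
    return "propose_new"
```

Because the candidates are cached in the state, rejecting a seed costs no extra retrieval until all three have been shown.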

4. Generate and render

On confirmation, the agent calls the endpoint again with replicate_toggle=true and the same seed_index. The endpoint reads the chosen seed image from UC, combines it with the brand image, runs the Kontext multi-image-max generator on Replicate, and then uploads the final PNG back to a UC Volume. Only the UC path is returned. The app then downloads the image and renders it back to the user.

Conclusion

In this blog we’ve demonstrated how multimodal RAG unlocks advanced ad personalization. Grounding generation with real image retrieval is the difference between generic visuals and creative that resonates with a specific audience. This unlocks:

- Higher visual quality: Photographic seeds preserve finer details that text-only prompts struggle to reproduce.

- Contextual alignment: Retrieval anchors scenes and pets to the audience context, improving message-image coherence.

- More predictable generation: Multi-image conditioning (seed + brand image) constrains the model, lowering hallucinations and improving brand safety.

- Fast iteration: Returning the top-3 seeds lets the agent cycle through without re-querying. The marketer stays in the loop and steers the final ad image.

Databricks ensures scalable, governed, high-performance applications. This architecture stays production ready with every step running end-to-end inside the platform:

- Unity Catalog governs everything: source and generated images, tables, and the agent. Access, audit, and lineage are consistent.

- Delta tables + Vector Search keep embeddings transactional and queries low-latency. You can re-embed, re-index, or filter by metadata without changing anything downstream.

- Model Serving + Mosaic AI Agent Framework separate concerns: the image-gen endpoint is a deterministic service, and the chat agent orchestrates policy and tools. Each scales, versions, and rolls back independently.

- Databricks Apps puts the UI next to data and services, and MLflow tracing provides turn-by-turn observability.

The result is a modular, governed RAG agent that turns audience insights into high-quality, on-brand creative at scale.