Large Language Model application outputs can be unpredictable and hard to evaluate. As a LangChain developer, you may already be building sophisticated chains and agents, but to make them run reliably, you need good evaluation and debugging tools. LangSmith is a product created by the LangChain team to address this need. In this tutorial-style guide, we'll explore how LangSmith integrates with LangChain to trace and evaluate LLM applications, using practical examples from the official LangSmith Cookbook. We'll cover how to enable tracing, create evaluation datasets, log feedback, run automated evaluations, and interpret the results. Along the way, we'll compare LangSmith's approach to traditional evaluation methods and share best practices for integrating it into your workflow.

What’s LangSmith?

LangSmith is an end-to-end set of tools for building, debugging, and deploying LLM-powered applications. It brings many capabilities together in one platform: you can monitor every step of your LLM app, test output quality, run prompt tests, and manage deployments within a single environment. LangSmith integrates well with LangChain's open-source libraries, but it is not limited to the LangChain framework. You can also use it in proprietary LLM pipelines and with other frameworks. By instrumenting your app with LangSmith, you gain visibility into its behavior and an automated way of quantifying its performance.

Unlike conventional software, LLM outputs may vary for the same input because the models are probabilistic. This non-determinism makes observability and rigorous testing essential for keeping quality under control. Traditional testing tools fall short here because they were not designed to evaluate language outputs. LangSmith addresses this by offering LLM-based evaluation and observability workflows. In short, LangSmith protects you from unexpected and unintended failures: it lets you measure your LLM application's performance quantitatively and resolve issues before they cause problems.

Tracing and Debugging LLM Applications

One of LangSmith's key features is tracing, which records every step and LLM call in your application for debugging. When using LangChain, turning on LangSmith tracing is simple: you can set environment variables (LANGCHAIN_API_KEY and LANGCHAIN_TRACING_V2=true) to automatically forward LangChain runs to LangSmith. Alternatively, you can instrument your code directly using the LangSmith SDK.

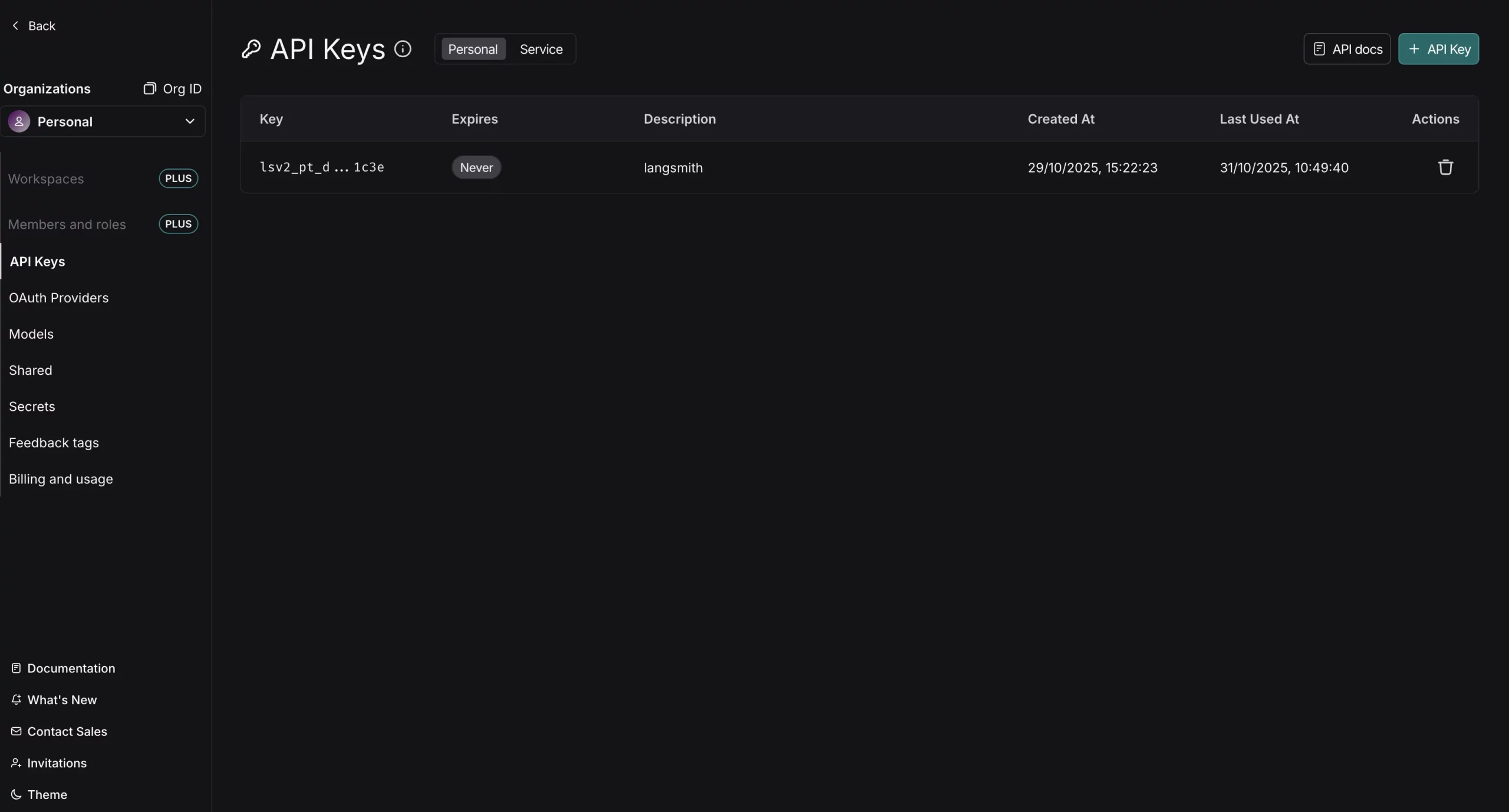

Head over to https://smith.langchain.com and create a new account or log in. Then open Settings, where you will find the API Keys section. Create a new API key and store it somewhere safe.

```python
import os

os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_API_KEY"] = "YOUR_LANGSMITH_API_KEY"
os.environ["LANGCHAIN_PROJECT"] = "demo-langsmith"
```

We have now configured the API keys and are ready to trace our LLM calls. Let's test a simple example: wrapping your LLM client and functions ensures that every request and response is traced:

import openai

from langsmith.wrappers import wrap_openai

from langsmith import traceable

shopper = wrap_openai(openai.OpenAI())

@traceable

def example_pipeline(user_input: str) -> str:

response = shopper.chat.completions.create(

mannequin="gpt-4o-mini",

messages=[{"role": "user", "content": user_input}]

)

return response.selections[0].message.content material

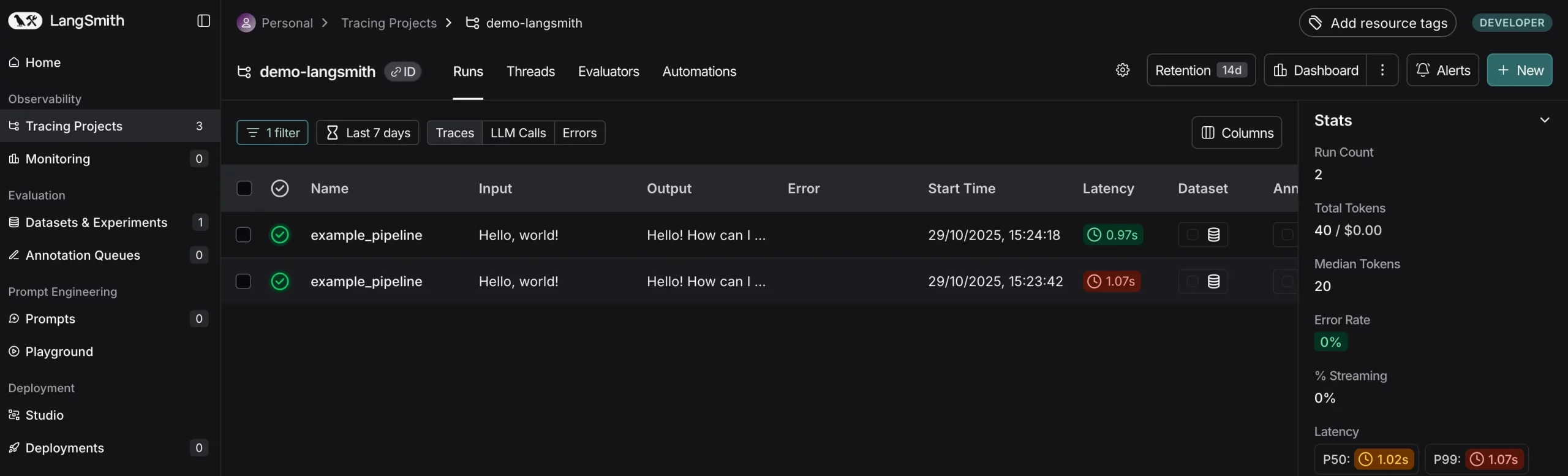

reply = example_pipeline("Whats up, world!")We wrapped the OpenAI shopper utilizing wrap_openai, and the @traceable decorator to hint our pipeline perform. This may log a hint to LangSmith each time example_pipeline is invoked (and each inner LLM API name). Traces will let you overview the chain of prompts, mannequin outputs, software calls, and so on., which is price its weight in gold when debugging difficult chains.

LangSmith's design supports tracing from the very start of development: wrap your LLM functions and calls early so you can see what your system is doing. If you're building a LangChain app at the prototype stage, you can enable tracing with minimal effort and start catching problems well before you reach production.

Each trace is structured as a tree of runs (a root run for the top-level call and child runs for each inner call). In LangSmith's UI, you can step through these traces, inspect inputs and outputs at each step, and even add notes or feedback. This tight integration of tracing and debugging means that when an evaluation (which we'll cover next) flags a bad output, you can jump straight into the corresponding trace to diagnose the problem.

Creating Evaluation Datasets

To test your LLM app systematically, you need a dataset of test examples. In LangSmith, a Dataset is simply a collection of examples, each containing an input (the parameters you pass to your application) and an expected or reference output. Test datasets can be small and hand-selected or large and extracted from real usage. In practice, most teams start with a few dozen important test cases that cover common and edge cases; even 10-20 well-chosen examples can be very useful for regression testing.

LangSmith simplifies dataset creation. You can add examples directly through code or the UI, and you can even save real traces from debugging or production into a dataset for future testing. For example, if you notice a flaw in your agent's answer to a user query, you can record that trace as a new test case (with the correct expected answer) so the error gets fixed and does not recur. Datasets are essentially the ground truth against which your LLM app will be judged going forward.

Let’s go over writing a easy dataset in code. With the LangSmith Python SDK, we first arrange a shopper after which outline a dataset with some examples:

!pip set up -q langsmith

from langsmith import Consumer

shopper = Consumer()

# Create a brand new analysis dataset

dataset = shopper.create_dataset("Pattern Dataset", description="A pattern analysis dataset.")

# Insert examples into the dataset: every instance has 'inputs' and 'outputs'

shopper.create_examples(

dataset_id=dataset.id,

examples=[

{

"inputs": {"postfix": "to LangSmith"},

"outputs": {"output": "Welcome to LangSmith"}

},

{

"inputs": {"postfix": "to Evaluations in LangSmith"},

"outputs": {"output": "Welcome to Evaluations in LangSmith"}

}

]

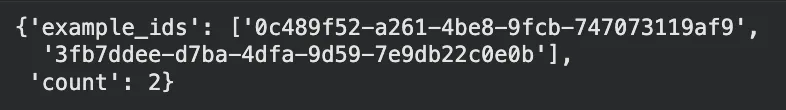

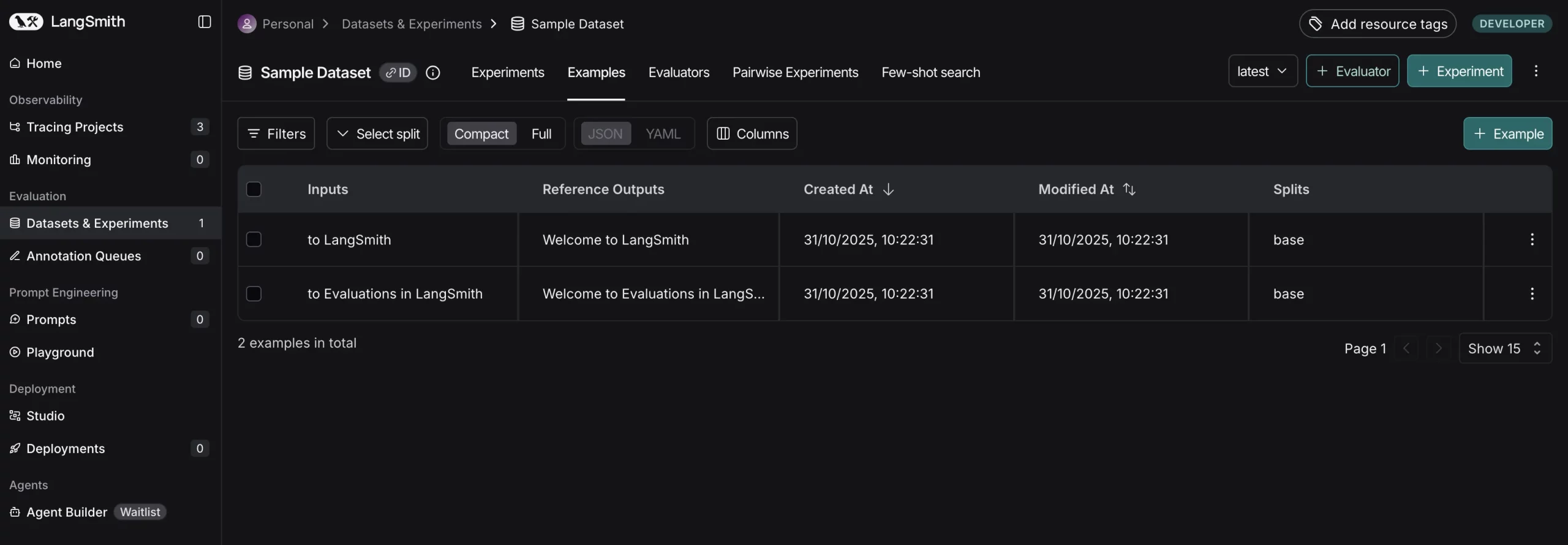

)On this toy knowledge set, the enter offers a postfix string, and the output needs to be a greeting message starting with “Welcome “. Now we have two examples, however it’s possible you’ll embrace as many as you want. Internally, LangSmith caches every instance along with the enter JSON and output JSON. The keys of the enter dict must match the parameters your software accepts. Keys within the output dict are the reference output (perfect or appropriate reply for the given enter). In our case, we wish the app to only add “Welcome ” to the start of the postfix.

Output:

This knowledge set is now saved in your LangSmith workspace (with the label “Pattern Dataset”). You’ll be able to see it within the LangSmith UI.

Now inside this dataset we are able to see all examples together with their inputs/anticipated outputs.
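Because the input keys have to line up with what your application reads, it can help to sanity-check examples locally before uploading them. The helper below is hypothetical (not part of the LangSmith SDK) and assumes examples use the same inputs/outputs dict layout passed to create_examples above.

```python
def check_examples(examples, required_inputs, required_outputs=("output",)) -> list:
    # Collect problems instead of raising, so every issue is reported at once
    problems = []
    for i, example in enumerate(examples):
        missing_in = set(required_inputs) - set(example.get("inputs", {}))
        missing_out = set(required_outputs) - set(example.get("outputs", {}))
        if missing_in:
            problems.append(f"example {i}: missing input keys {sorted(missing_in)}")
        if missing_out:
            problems.append(f"example {i}: missing output keys {sorted(missing_out)}")
    return problems


examples = [
    {"inputs": {"postfix": "to LangSmith"}, "outputs": {"output": "Welcome to LangSmith"}},
    # Typo in the input key: the target reads inputs["postfix"], so this would fail
    {"inputs": {"suffix": "to Evaluations"}, "outputs": {"output": "Welcome to Evaluations"}},
]
issues = check_examples(examples, required_inputs={"postfix"})
```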

Example: we can also create an evaluation dataset from production traces. To build datasets from real traces (e.g., failed user interactions), use the SDK to read a run and create examples from it. Assume you have a trace's run ID from the UI:

```python
from langsmith import Client

client = Client()

# Fetch a run by ID (replace with your trace's run ID)
run = client.read_run("your-trace-run-id")

# Create a dataset from the trace (add the expected output manually)
dataset = client.create_dataset("Trace-Based Dataset", description="From production traces.")

client.create_examples(
    dataset_id=dataset.id,
    examples=[
        {
            "inputs": run.inputs,  # the traced inputs, e.g., the user query
            "outputs": {"output": "Expected corrected response"},  # manually added ground truth
        }
    ],
)
```

We are now ready to specify how we measure the model's performance on such examples.

Writing Evaluation Metrics with Evaluators

LangSmith evaluators are small functions (or programs) that grade your app's output for a specific example. An evaluator can be as simple as checking whether the output matches the expected text, or as advanced as using a different LLM to judge the output's quality. LangSmith supports both custom evaluators and built-in ones. You can write your own Python/TypeScript function to implement any evaluation logic and run it through the SDK, or use LangSmith's built-in evaluators in the UI for common metrics. For instance, LangSmith offers out-of-the-box evaluators for similarity comparison, factuality checking, and so on, but here we will write a custom one for the sake of example.

Given our simple sample task (the output must exactly match "Welcome X" for input X), we can write an exact-match evaluator. This evaluator compares the model output against the reference output and returns a pass/fail score:

```python
from langsmith.schemas import Example, Run


# Evaluator function for exact string match
def exact_match(run: Run, example: Example) -> dict:
    # 'run.outputs' holds the actual output from the model run
    # 'example.outputs' holds the expected output from the dataset
    return {"key": "exact_match", "score": run.outputs["output"] == example.outputs["output"]}
```

In this Python evaluator, we receive a run (which holds the model's outputs for a specific example) and the example (which holds the expected outputs). We compare the two strings and return a dict with a "score" key, while "key" names the metric. LangSmith expects evaluators to return a dict of results; we use a boolean here, but you could return a numeric score or even multiple metrics if appropriate. We can interpret {"score": True} as a pass and False as a fail for this test.

Note: For a more sophisticated assessment, we could compute a percentage match or a rating. LangSmith leaves it up to you to define what the score represents. For example, you could return {"key": "accuracy", "score": 0.8}, or {"key": "BLEU", "score": 0.67} for translation work, or even attach textual feedback. For simplicity, our exact-match evaluator returns a boolean score.
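As a sketch of a graded (non-boolean) score, the evaluator below gives partial credit based on word overlap with the reference. The metric name token_overlap and the word-splitting rule are our own choices, not a built-in LangSmith evaluator; SimpleNamespace objects stand in for Run and Example, since the function only reads their outputs attribute.

```python
import re
from types import SimpleNamespace


def token_overlap(run, example) -> dict:
    # Hypothetical partial-credit metric: fraction of reference words present in the output
    predicted = set(re.findall(r"\w+", run.outputs["output"].lower()))
    reference = re.findall(r"\w+", example.outputs["output"].lower())
    if not reference:
        return {"key": "token_overlap", "score": 0.0}
    hits = sum(1 for token in reference if token in predicted)
    return {"key": "token_overlap", "score": hits / len(reference)}


# Stand-ins for LangSmith's Run and Example objects (only .outputs is used)
example = SimpleNamespace(outputs={"output": "Welcome to LangSmith"})
full = token_overlap(SimpleNamespace(outputs={"output": "Welcome to LangSmith!"}), example)
partial = token_overlap(SimpleNamespace(outputs={"output": "Hello LangSmith"}), example)
```

Here full scores 1.0 (punctuation is ignored), while partial gets one of three reference words.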

The strength of LangSmith's evaluation framework is that you can plug in any logic here. Some popular approaches are:

- Gold-standard comparison: If your dataset contains a reference solution (as ours does), you can compare the model output directly with the reference (via exact match or similarity metrics). This is what we did above.

- LLM-as-judge: You can use a second LLM to grade the response against a prompt or rubric. For instance, for open-ended questions, you can give an evaluator model the user query and the model's response and ask for a score or verdict.

- Functional checks: Evaluators can check format or structural correctness. For example, if the task requires a JSON response, an evaluator can try to parse the JSON and return {"score": True} only if parsing succeeds (ensuring the model didn't produce a malformed format).

- Human-in-the-loop feedback: While not strictly a code evaluator in the classical sense, LangSmith also supports human evaluation through an annotation interface, where human reviewers can score outputs (these scores are stored as feedback in the same system). This is useful for subtle criteria such as "helpfulness" or "style" that are difficult to encode in code.
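The functional-check idea can be sketched in a few lines. This is a hypothetical evaluator named valid_json, written in the same (run, example) style as exact_match; it assumes the model's reply is stored under the "output" key.

```python
import json
from types import SimpleNamespace


def valid_json(run, example) -> dict:
    # Functional check: pass only if the model output parses as JSON.
    # 'example' is unused but kept so the signature matches the (run, example) convention.
    try:
        json.loads(run.outputs["output"])
        passed = True
    except (json.JSONDecodeError, TypeError):
        passed = False
    return {"key": "valid_json", "score": passed}


good = valid_json(SimpleNamespace(outputs={"output": '{"answer": 42}'}), None)
bad = valid_json(SimpleNamespace(outputs={"output": "answer: 42"}), None)
```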

For now, our exact_match function is sufficient for testing the sample dataset. We could also define summary evaluators, which compute a global metric over the entire dataset (such as overall precision or accuracy). In LangSmith, a summary evaluator receives the list of all runs and all examples and returns a dataset-level metric (e.g., total accuracy = correct predictions / total). We won't do that here, but keep in mind that LangSmith supports it through the summary_evaluators parameter of evaluate. Often an automatic aggregation such as the mean score is computed, but you can customize this further if necessary.
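A summary evaluator can be sketched as a function over the full lists of runs and examples. This is a minimal sketch, assuming runs and examples arrive in matching order and that outputs use the "output" key; you would pass it to evaluate via summary_evaluators=[overall_accuracy]. The metric name is our own, and SimpleNamespace objects stand in for real runs and examples.

```python
from types import SimpleNamespace


def overall_accuracy(runs, examples) -> dict:
    # Summary evaluator: sees every run and example of the experiment at once
    if not runs:
        return {"key": "overall_accuracy", "score": 0.0}
    correct = sum(
        1
        for run, example in zip(runs, examples)
        if run.outputs["output"] == example.outputs["output"]
    )
    return {"key": "overall_accuracy", "score": correct / len(runs)}


runs = [SimpleNamespace(outputs={"output": o}) for o in ["Welcome to LangSmith", "Welcome!"]]
examples = [
    SimpleNamespace(outputs={"output": o})
    for o in ["Welcome to LangSmith", "Welcome to Evaluations in LangSmith"]
]
summary = overall_accuracy(runs, examples)
```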

For subjective tasks, we can use an LLM to score outputs. Install the integration with pip install langchain-openai, then define a judge:

```python
from langchain_openai import ChatOpenAI
from langsmith.schemas import Example, Run

llm = ChatOpenAI(model="gpt-4o-mini")


def llm_judge(run: Run, example: Example) -> dict:
    prompt = f"""
Rate the quality of this response on a scale of 1-10 for relevance to the input.
Input: {example.inputs}
Reference Output: {example.outputs['output']}
Generated Output: {run.outputs['output']}
Respond with just a number.
"""
    score = int(llm.invoke(prompt).content.strip())
    return {"key": "relevance_score", "score": score / 10.0}  # Normalize to 0-1
```

This uses gpt-4o-mini to assess relevance and returns a float score. Add it to your evaluations for tasks where an exact match is not a meaningful criterion.
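One fragility in llm_judge is that int(...) raises an error if the judge replies with something like "Score: 8/10". A small, hypothetical helper such as parse_judge_score (not part of LangSmith or LangChain) can extract the first integer and return None when no usable number is found, so the evaluator could record a missing score instead of crashing.

```python
import re
from typing import Optional


def parse_judge_score(reply: str, low: int = 1, high: int = 10) -> Optional[float]:
    # Take the first integer in the judge's reply, e.g. "8" or "Score: 8/10"
    match = re.search(r"\d+", reply)
    if match is None:
        return None  # the judge did not return a number
    value = int(match.group())
    if not low <= value <= high:
        return None  # out-of-range answers are treated as unusable
    return value / high  # normalize to 0-1, as llm_judge does
```

Inside llm_judge, you could call parse_judge_score(llm.invoke(prompt).content) in place of the int(...) line.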

Running Evaluations with LangSmith

With a dataset prepared and evaluators in place, we can now run the evaluation. LangSmith offers an evaluate function (and an equivalent Client.evaluate method) for this. When you invoke evaluate, LangSmith goes through every example in the dataset, runs your application on the example's input, retrieves the output, and then invokes each evaluator on that output to produce scores. All these runs and scores are logged in LangSmith for you to examine.

Let's run an evaluation for our sample application. Suppose our application is just the function lambda inputs: "Welcome " + inputs["postfix"] (which is exactly what we want it to do). We'll pass that as the target to evaluate, along with our dataset name and the list of evaluators:

from langsmith.analysis import consider

# Outline the goal perform to guage (our "software underneath check")

def generate_welcome(inputs: dict) -> dict:

# The perform returns a dict of outputs much like an precise chain would

return {"output": "Welcome " + inputs["postfix"]}

# Use the analysis on the dataset with our evaluator

outcomes = consider(

generate_welcome,

knowledge="Pattern Dataset", # we are able to entry the dataset by identify

evaluators=[exact_match], # use our exact_match evaluator

experiment_prefix="sample-eval", # label for this analysis run

metadata={"model": "1.0.0"} # elective metadata tags

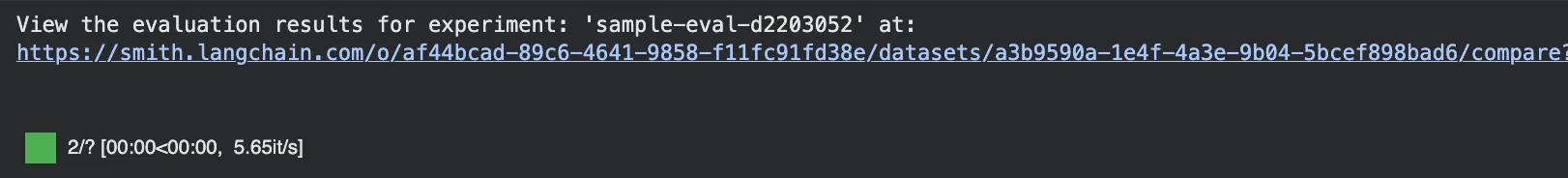

)Output:

After efficiently operating the experiment you’ll get a hyperlink to the detailed breakdown of the experiment like this:

Several things happen when we execute this code:

- Calling the target: For every example in "Sample Dataset", the generate_welcome function is called with the example's inputs. The function returns an output dict (in this example simply {"output": "Welcome ..."}). In a real application, this might be a call to an LLM or a LangChain chain; for instance, you may pass an agent or chain object as the target rather than a plain function. LangSmith is flexible: the target can be a function, a LangChain Runnable, or even an entire previously executed chain run (for comparison tests).

- Scoring with evaluators: For each run, LangSmith calls the exact_match evaluator, passing it the run and the example. Our evaluator returns a score (True/False). If we had more than one evaluator, each would provide its own score or feedback for a given run.

- Recording results: LangSmith records all this data as an Experiment. An Experiment is essentially a set of runs (one per example in the dataset) plus all the feedback scores from the evaluators. In our code, we supplied an experiment_prefix of "sample-eval", so LangSmith creates an experiment with a unique name such as sample-eval-<unique-suffix> (or adds to an existing experiment if you prefer). We also attached the metadata tag version: 1.0.0, which helps you keep track of which version of the application or prompt was tested.

- Evaluation at scale: The example above is tiny, but LangSmith is designed for larger evaluations. If you have hundreds or thousands of test cases, you may want to run evaluations asynchronously. The LangSmith SDK provides an aevaluate function for Python, which behaves like evaluate but runs the jobs concurrently.

You can also use the max_concurrency parameter to control parallelism. This helps speed up evaluation runs, especially when each test calls a live model (which is slow). Just be mindful of rate limits and costs when sending many model calls concurrently.
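To illustrate what a concurrency cap does, here is a generic asyncio sketch, not LangSmith's internal implementation: it runs an async target over many examples while a semaphore keeps at most max_concurrency calls in flight. The names slow_target and run_bounded are hypothetical; with LangSmith itself you would simply pass max_concurrency to evaluate or aevaluate.

```python
import asyncio


async def slow_target(inputs: dict) -> dict:
    # Hypothetical stand-in for a slow model call
    await asyncio.sleep(0.01)
    return {"output": "Welcome " + inputs["postfix"]}


async def run_bounded(target, examples, max_concurrency: int = 4):
    # Allow at most max_concurrency target calls to run at the same time
    semaphore = asyncio.Semaphore(max_concurrency)

    async def run_one(inputs):
        async with semaphore:
            return await target(inputs)

    # gather returns results in the same order as the examples
    return await asyncio.gather(*(run_one(inputs) for inputs in examples))


examples = [{"postfix": f"user {i}"} for i in range(10)]
outputs = asyncio.run(run_bounded(slow_target, examples, max_concurrency=3))
```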

Let's take one more example: evaluating a LangChain chain. Replace the simple function with a LangChain chain (install: pip install langchain-openai). Define a chain that might fail on edge cases:

```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI
from langsmith.evaluation import evaluate

llm = ChatOpenAI(model="gpt-4o-mini")
prompt = PromptTemplate.from_template("Say: Welcome {postfix}")

# StrOutputParser turns the chat message into a plain string,
# so run.outputs["output"] can be compared with the reference text
chain = prompt | llm | StrOutputParser()

# Evaluate the chain directly (exact_match is the evaluator defined earlier)
results = evaluate(
    chain,
    data="Sample Dataset",
    evaluators=[exact_match],
    experiment_prefix="langchain-eval",
    metadata={"chain_type": "prompt-llm"},
)
```

This traces the chain's execution for each example and scores the outputs. Check the UI for mismatches (e.g., extra phrasing added by the LLM).
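Because an LLM may add punctuation, quotes, or extra spacing, exact_match can flag answers that are essentially correct. Below is a minimal sketch of a more tolerant, hypothetical normalized_match evaluator; what counts as "equivalent" here (case, surrounding quotes, trailing ., ! or ?, repeated whitespace) is our assumption and should be tuned to your task. It still fails genuinely extra phrasing such as a "Sure!" prefix.

```python
import re
from types import SimpleNamespace


def normalize(text: str) -> str:
    # Lowercase, drop surrounding quotes and trailing punctuation, collapse whitespace
    text = text.strip().strip("\"'").rstrip(".!?").lower()
    return re.sub(r"\s+", " ", text)


def normalized_match(run, example) -> dict:
    predicted = normalize(run.outputs["output"])
    expected = normalize(example.outputs["output"])
    return {"key": "normalized_match", "score": predicted == expected}


example = SimpleNamespace(outputs={"output": "Welcome to LangSmith"})
loose = normalized_match(SimpleNamespace(outputs={"output": '"Welcome to  LangSmith!"'}), example)
chatty = normalized_match(SimpleNamespace(outputs={"output": "Sure! Welcome to LangSmith"}), example)
```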

Interpreting Evaluation Results

Once an evaluation has run, LangSmith provides tools to analyze the results. Every experiment is accessible in the LangSmith UI, where you'll see a table of all examples with their inputs, outputs, and feedback scores for each evaluator.

Here are some ways to use the results:

- Find failures: Look at which tests scored poorly or failed. LangSmith highlights these, and you can filter or sort by score. If any test failed, you have immediate evidence of an issue to fix. Because LangSmith records feedback on every trace, you can click a failed run and inspect its trace for clues.

- View metrics: If you use summary evaluators (or just want an aggregate), LangSmith can display an overall metric for the experiment. For example, if you returned {"score": True/False} for each run, it can show an overall accuracy (the percentage of True) for the experiment. In more sophisticated evaluations, you may have several metrics; all of them can be viewed per example and in aggregate.

- Exporting data: LangSmith lets you query or export evaluation results through the SDK or API. You could, for example, retrieve all feedback scores for a particular experiment and use them in a custom report or pipeline.

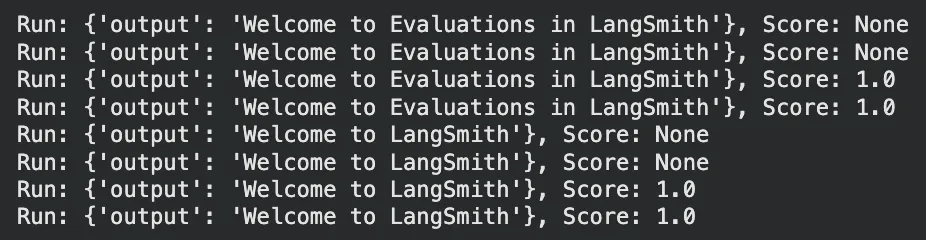

Fetching Experiment Results Programmatically

To access results outside the UI, list experiments and fetch their feedback. Replace the experiment name below with the one shown in your UI.

```python
from langsmith import Client

client = Client()
project_name = "sample-eval-d2203052"  # full experiment name (prefix plus unique suffix)

# Find the target project (experiment) by name
target_project = None
for project in client.list_projects():
    if project.name == project_name:
        target_project = project
        break

if target_project:
    # Fetch feedback for the root runs in the experiment
    for run in client.list_runs(project_name=project_name, is_root=True):
        # Access outputs safely, handling potential None values
        run_outputs = run.outputs if run.outputs is not None else "No outputs"
        for fb in client.list_feedback(run_ids=[run.id]):
            print(f"Run: {run_outputs}, {fb.key}: {fb.score}")  # e.g., exact_match: True
else:
    print(f"Project '{project_name}' not found.")
```

This prints the scores for each run, which is useful for custom reporting or CI integration.
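For CI integration, the printed scores usually need to become a pass/fail decision. A minimal sketch, assuming you have collected (feedback key, score) pairs from the loop above: average each metric and compare it with a minimum you choose. The helper names and thresholds are hypothetical.

```python
from collections import defaultdict


def summarize_feedback(pairs) -> dict:
    # pairs: (key, score) tuples, e.g. built from (fb.key, fb.score) in the loop above
    totals, counts = defaultdict(float), defaultdict(int)
    for key, score in pairs:
        if score is None:
            continue  # skip feedback without a numeric/boolean score
        totals[key] += float(score)  # True -> 1.0, False -> 0.0
        counts[key] += 1
    return {key: totals[key] / counts[key] for key in totals}


def failing_metrics(summary: dict, thresholds: dict) -> list:
    # Metrics whose average falls below the required minimum (missing metrics fail too)
    return [key for key, minimum in thresholds.items() if summary.get(key, 0.0) < minimum]


summary = summarize_feedback(
    [("exact_match", True), ("exact_match", False), ("relevance_score", 0.9)]
)
failures = failing_metrics(summary, {"exact_match": 0.9, "relevance_score": 0.7})
```

In a CI job, a non-empty failures list could be turned into a non-zero exit code (for example with sys.exit(1)) to block a release.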

Interpreting results is not just about pass/fail; it's about understanding why the model fails on particular inputs. Because LangSmith links evaluations to rich trace data and feedback, it closes the loop so you can debug intelligently. If, for example, several failures occur on a particular question type, that may point to a gap in the prompt or knowledge base, which is something you can fix. If an overall metric falls below your acceptance threshold, you can drill down into specific cases to investigate.
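To spot a weak question type, one option is to group pass/fail results by a category you attach yourself, for instance a field stored in each example's metadata. The sketch below is hypothetical: the question_type field and the record layout are assumptions, and in practice the records would come from exported results rather than being typed in by hand.

```python
from collections import defaultdict


def failure_rate_by(records, field: str) -> dict:
    # records: dicts holding a category under `field` and a boolean 'passed'
    totals, failures = defaultdict(int), defaultdict(int)
    for record in records:
        category = record.get(field, "unknown")
        totals[category] += 1
        if not record["passed"]:
            failures[category] += 1
    return {category: failures[category] / totals[category] for category in totals}


records = [
    {"question_type": "greeting", "passed": True},
    {"question_type": "greeting", "passed": True},
    {"question_type": "edge_case", "passed": False},
    {"question_type": "edge_case", "passed": True},
]
rates = failure_rate_by(records, "question_type")
```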

LangSmith vs. Traditional LLM Evaluation Practices

How does LangSmith differ from the "traditional" methods developers use to test LLM applications? Here is a comparison of the two:

| Aspect | Traditional Methods | LangSmith Approach |
|---|---|---|
| Prompt Testing | Manual trial-and-error iteration. | Systematic, scalable prompt testing with metrics. |
| Evaluation Style | One-off accuracy checks or visual inspections. | Continuous evaluation in dev, CI, and production. |
| Metrics | Limited to basics like accuracy or ROUGE. | Custom criteria, including multi-dimensional scores. |
| Debugging | No context; manual review of failures. | Full traceability, with traces linked to failures. |
| Tool Integration | Scattered tools and custom code. | Unified platform for tracing, testing, and monitoring. |
| Operational Factors | Ignores latency/cost or handles them separately. | Tracks quality alongside latency and cost. |

Overall, LangSmith brings discipline and organization to LLM testing. It's like moving from manual checks to automated test suites in conventional programming. As a result, you can be more confident that you're not flying blind with your LLM app: you have evidence to support changes and confidence in your model's behavior across different situations.

Best Practices for Incorporating LangSmith into Your Workflow

To get the most from LangSmith, keep the following best practices in mind, particularly if you're integrating it into an existing LangChain project:

- Turn on tracing early: Set up LangSmith tracing from day one so you can see how prompts and chains behave and debug faster.

- Start with a small, meaningful dataset: Create a handful of examples that cover core tasks and tricky edge cases, then keep adding to it as new issues appear.

- Mix evaluation methods: Use exact-match checks for facts, LLM-based scoring for subjective qualities, and format checks for schema compliance.

- Feed user feedback back into the system: Log and tag bad responses so they become future test cases.

- Automate regression tests: Run LangSmith evaluations in CI/CD to catch prompt or model regressions before they reach production.

- Version and tag everything: Label runs with prompt, model, or chain versions to compare performance over time.

- Monitor in production: Set up online evaluators and alerts to catch real-time quality drops once you go live.

- Balance speed, cost, and accuracy: Review qualitative and quantitative metrics to find the right trade-offs for your use case.

- Keep humans in the loop: Periodically send tricky outputs for human review and feed that data back into your evaluation set.
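To make "feed user feedback back into the system" concrete, here is a hypothetical sketch that turns poorly rated responses into new dataset examples. The record layout (user_score, corrected_output) and the 0.5 cut-off are assumptions; the resulting list has the same shape as the examples passed to client.create_examples earlier in this guide.

```python
def feedback_to_examples(records, min_score: float = 0.5) -> list:
    # records: hypothetical dicts with 'inputs', 'user_score' (0-1) and an optional 'corrected_output'
    examples = []
    for record in records:
        if record["user_score"] >= min_score:
            continue  # only poorly rated responses become regression tests
        corrected = record.get("corrected_output")
        if corrected is None:
            continue  # wait until someone supplies the correct answer
        examples.append({"inputs": record["inputs"], "outputs": {"output": corrected}})
    return examples


records = [
    {"inputs": {"postfix": "to LangSmith"}, "user_score": 1.0},
    {"inputs": {"postfix": "to the docs"}, "user_score": 0.0, "corrected_output": "Welcome to the docs"},
    {"inputs": {"postfix": "home"}, "user_score": 0.2},
]
new_examples = feedback_to_examples(records)
```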

By adopting these practices, you'll build LangSmith in not as a one-time check, but as an ongoing quality guardian throughout your LLM application's lifetime. From prototyping (where you debug and iterate using traces and small tests) to production (where you monitor and collect feedback at scale), LangSmith can be part of every step to help ensure reliability.

Conclusion

Testing and debugging LLM applications is hard, but LangSmith makes it much easier. By combining tracing, structured datasets, automated evaluators, and feedback logging, it offers a complete system for testing AI systems. We saw how LangSmith can be used to trace a LangChain app, build reference datasets, define custom evaluation metrics, and iterate quickly with thoughtful feedback. This approach is an improvement over ad hoc prompt tweaking or standalone accuracy testing. It brings software engineering discipline to the world of LLMs.

Ready to take your LLM app's reliability to the next level? Try LangSmith in your next project. Create some traces, write a few evaluators for your most important outputs, and run an experiment. What you learn will help you iterate with confidence and ship updates backed by data, not vibes. Evaluation made easy!

Frequently Asked Questions

Q. What is LangSmith, and how does it integrate with LangChain?

A. LangSmith is a platform for tracing, evaluating, and debugging LLM applications. It integrates seamlessly with LangChain via environment variables like LANGCHAIN_TRACING_V2 for automatic run logging.

Q. How do I enable tracing in LangSmith?

A. Set environment variables such as LANGCHAIN_API_KEY and LANGCHAIN_TRACING_V2="true", then use decorators like @traceable or wrap clients to log every LLM call and chain step.

Q. What are datasets in LangSmith, and how do I create them?

A. Datasets are collections of input-output examples used to test LLM apps. You create them via the SDK with client.create_dataset and client.create_examples to store reference ground truth.
