This blog post focuses on new features and improvements. For a complete list, including bug fixes, please see the release notes.

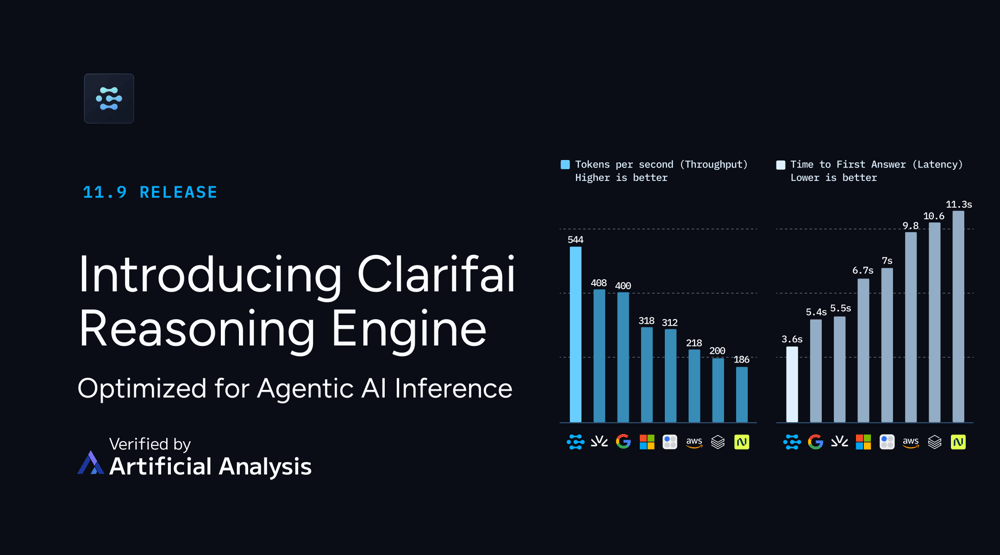

Clarifai Reasoning Engine: Optimized for Agentic AI Inference

We’re introducing the Clarifai Reasoning Engine, a full-stack performance framework built to deliver record-setting inference speed and efficiency for reasoning and agentic AI workloads.

Unlike traditional inference systems that plateau after deployment, the Clarifai Reasoning Engine continuously learns from workload behavior, dynamically optimizing kernels, batching, and memory usage. This adaptive approach means the system gets faster and more efficient over time, especially for repetitive or structured agentic tasks, without any trade-off in accuracy.
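The post doesn’t describe how the engine learns from workload behavior. As a rough mental model only, here is a generic online-autotuning pattern: record the throughput observed for each candidate configuration (here, hypothetical batch sizes), mostly reuse the best one, and periodically re-try the others so a shift in workload can be noticed. The `OnlineTuner` class, its `explore_every` parameter, and the simulated throughput numbers are illustrative assumptions, not Clarifai’s implementation.

```python
from collections import defaultdict
from typing import Dict, Hashable, List


class OnlineTuner:
    """Hypothetical tuner: prefers the config with the best average observed tokens/sec."""

    def __init__(self, candidates: List[Hashable], explore_every: int = 10) -> None:
        if not candidates:
            raise ValueError("need at least one candidate configuration")
        self.candidates = list(candidates)
        self.explore_every = explore_every  # every Nth call re-tries a config round-robin
        self.totals: Dict[Hashable, float] = defaultdict(float)
        self.counts: Dict[Hashable, int] = defaultdict(int)
        self.calls = 0

    def choose(self) -> Hashable:
        """Pick the configuration to use for the next request."""
        self.calls += 1
        # Measure every candidate at least once before trusting any average.
        for config in self.candidates:
            if self.counts[config] == 0:
                return config
        # Periodic exploration so the tuner can react if the workload changes.
        if self.calls % self.explore_every == 0:
            index = (self.calls // self.explore_every) % len(self.candidates)
            return self.candidates[index]
        return self.best()

    def record(self, config: Hashable, tokens_per_s: float) -> None:
        """Feed back the throughput actually observed with `config`."""
        self.totals[config] += tokens_per_s
        self.counts[config] += 1

    def best(self) -> Hashable:
        """Return the measured configuration with the highest average throughput."""
        measured = [c for c in self.candidates if self.counts[c] > 0]
        if not measured:
            raise ValueError("no measurements recorded yet")
        return max(measured, key=lambda c: self.totals[c] / self.counts[c])


if __name__ == "__main__":
    # Made-up throughput for three hypothetical batch sizes.
    simulated = {8: 300.0, 16: 450.0, 32: 400.0}
    tuner = OnlineTuner(candidates=[8, 16, 32])
    for _ in range(50):
        batch_size = tuner.choose()
        tuner.record(batch_size, simulated[batch_size])
    print("preferred batch size:", tuner.best())
```

A production system would tune many more knobs (kernels, memory layout) and use noisy real measurements; the point here is only the measure-and-prefer feedback loop.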

In recent benchmarks by Artificial Analysis on GPT-OSS-120B, the Clarifai Reasoning Engine set new industry records for GPU inference performance:

- 544 tokens/sec throughput: the fastest GPU-based inference measured

- 0.36 s time to first token: near-instant responsiveness

- $0.16 per million tokens: the lowest blended cost
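To put these three figures in context, here is a back-of-the-envelope calculation. It assumes a deliberately simple model, end-to-end time ≈ time to first token + output tokens / throughput, and applies the blended price as one flat per-token rate. Real latency also depends on prompt length, load, and network overhead, so treat the results as rough estimates. The function names are illustrative.

```python
# Figures quoted above for GPT-OSS-120B on the Clarifai Reasoning Engine.
TTFT_S = 0.36            # time to first token, in seconds
THROUGHPUT_TPS = 544.0   # output tokens per second
USD_PER_MILLION = 0.16   # blended price per million tokens


def estimated_response_time_s(output_tokens: int,
                              ttft_s: float = TTFT_S,
                              tokens_per_s: float = THROUGHPUT_TPS) -> float:
    """Rough end-to-end time: wait for the first token, then stream at a steady rate."""
    return ttft_s + output_tokens / tokens_per_s


def estimated_cost_usd(total_tokens: int, usd_per_million: float = USD_PER_MILLION) -> float:
    """Cost at a single blended rate (simplification: no separate input/output pricing)."""
    return total_tokens / 1_000_000 * usd_per_million


if __name__ == "__main__":
    print(f"1,000-token answer: ~{estimated_response_time_s(1_000):.2f} s")
    print(f"50M tokens: ~${estimated_cost_usd(50_000_000):.2f}")
```

Under these assumptions, a 1,000-token answer takes roughly 2.2 seconds, and 50 million tokens cost about $8.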

These results put the Clarifai Reasoning Engine ahead of every other GPU-based inference provider and on par with specialized ASIC accelerators, showing that modern GPUs paired with optimized kernels can achieve comparable or even superior reasoning performance.

The Reasoning Engine’s design is model-agnostic. While GPT-OSS-120B served as the benchmark reference, the same optimizations have been extended to other large reasoning models such as Qwen3-30B-A3B-Thinking-2507, where we observed a 60% improvement in throughput compared with the base implementation. Developers can also bring their own reasoning models and see similar performance gains using Clarifai’s compute orchestration and kernel optimization stack.

At its core, the Clarifai Reasoning Engine represents a new standard for running reasoning and agentic AI workloads: faster, cheaper, adaptive, and open to any model.

Try the GPT-OSS-120B model directly on Clarifai and experience the performance of the Clarifai Reasoning Engine. You can also bring your own models or talk to our AI experts to apply these adaptive optimizations and see how they improve throughput and latency in real workloads.

Toolkits

Added support for initializing models with the vLLM, LM Studio, and Hugging Face toolkits for Local Runners.

Hugging Face Toolkit

We’ve added a Hugging Face Toolkit to the Clarifai CLI, making it easy to initialize, customize, and serve Hugging Face models through Local Runners.

You can now download and run supported Hugging Face models directly on your own hardware (laptops, workstations, or edge boxes) while exposing them securely through Clarifai’s public API. Your model runs locally, your data stays private, and the Clarifai platform handles routing, authentication, and governance.

Why use the Hugging Face Toolkit?

- Use local compute: run open-weight models on your own GPUs or CPUs while keeping them accessible through the Clarifai API.

- Preserve privacy: all inference happens on your machine; only metadata flows through Clarifai’s secure control plane.

- Skip manual setup: initialize a model directory with a single CLI command; dependencies and configs are scaffolded automatically.

Step-by-step: Running a Hugging Face model locally

1. Install the Clarifai CLI

Make sure you have Python 3.11+ and the latest Clarifai CLI, which you can install or upgrade with `pip install --upgrade clarifai`.

2. Authenticate with Clarifai

Log in with `clarifai login` to create a configuration context for your Local Runner.

You’ll be prompted for your User ID, App ID, and Personal Access Token (PAT). You can also provide the PAT through the `CLARIFAI_PAT` environment variable.

3. Get your Hugging Face access token

If you’re using models from private repos, create a token at huggingface.co/settings/tokens and export it as the `HF_TOKEN` environment variable.
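Before starting a runner, it can help to confirm your credentials are actually visible to the current session. The helper below is a hypothetical convenience, not part of the Clarifai CLI. It assumes the credentials live in the `CLARIFAI_PAT` and `HF_TOKEN` environment variables and only reports which names are missing, never their values.

```python
import os
from typing import List, Mapping


def missing_credentials(env: Mapping[str, str] = os.environ,
                        needs_private_hf_repo: bool = False) -> List[str]:
    """Return names of required environment variables that are unset or empty."""
    required = ["CLARIFAI_PAT"]
    if needs_private_hf_repo:
        # A Hugging Face token is only needed for private repos.
        required.append("HF_TOKEN")
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    missing = missing_credentials(needs_private_hf_repo=True)
    print("Missing:", ", ".join(missing) if missing else "none")
```

Passing a plain dict as `env` makes the check easy to test without touching your real environment.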

4. Initialize a model with the Hugging Face Toolkit

Use the new `--toolkit huggingface` flag with `clarifai model init` to scaffold a model directory.

This command generates a ready-to-run folder with model.py, config.yaml, and requirements.txt, pre-wired for Local Runners. You can modify model.py to adjust the model’s behavior or switch checkpoints in config.yaml.
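If you want to confirm the scaffold before editing it, a quick check like this works. It assumes only the three file names mentioned above. Because the exact folder layout isn’t spelled out here, it searches the whole tree so that a file placed in a subfolder still counts. The `missing_scaffold_files` name is hypothetical.

```python
from pathlib import Path
from typing import List, Union

# File names the Hugging Face Toolkit scaffold is described as generating.
EXPECTED_FILES = ("model.py", "config.yaml", "requirements.txt")


def missing_scaffold_files(model_dir: Union[str, Path]) -> List[str]:
    """List expected scaffold files that can't be found anywhere under model_dir."""
    root = Path(model_dir)
    if not root.is_dir():
        raise NotADirectoryError(f"{root} is not a directory")
    # rglob also searches subfolders, so a nested model.py is still found.
    return [name for name in EXPECTED_FILES
            if not any(p.is_file() for p in root.rglob(name))]
```

For example, `missing_scaffold_files("my-hf-model")` returns an empty list when all three files are present.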

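5. Install dependencies

From the generated model directory, install the packages listed in requirements.txt, for example with `pip install -r requirements.txt`.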

6. Start your Local Runner

Your runner registers with Clarifai, and the CLI prints a ready-to-use public API endpoint.

7. Test your model

You can call it like any Clarifai-hosted model through the Clarifai SDK.
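Here is a minimal sketch of such a call with the Clarifai Python SDK. It assumes the `clarifai` package is installed, `CLARIFAI_PAT` is set, and the scaffolded model.py exposes a `predict` method that takes a `prompt` string; check the generated file for the actual method names and arguments. The user, app, and model IDs are placeholders.

```python
import os


def model_url(user_id: str, app_id: str, model_id: str) -> str:
    """Build the Clarifai URL that identifies a model, including one served by a Local Runner."""
    return f"https://clarifai.com/{user_id}/{app_id}/models/{model_id}"


def call_local_model(url: str, prompt: str):
    """Send a prompt to the model; Clarifai routes the request to your Local Runner."""
    # Imported here so the rest of this file works without the SDK installed.
    from clarifai.client.model import Model

    model = Model(url=url, pat=os.environ["CLARIFAI_PAT"])
    # Assumption: model.py defines a `predict` method that accepts `prompt`.
    return model.predict(prompt=prompt)
```

For example, `call_local_model(model_url("your-user-id", "your-app-id", "your-model-id"), "What is a Local Runner?")` should return the output generated on your machine.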

Behind the scenes, requests are routed to your local machine, and the model runs entirely on your hardware. See the Hugging Face Toolkit documentation for the full setup guide, configuration options, and troubleshooting tips.

vLLM Toolkit

Run Hugging Face models on the high-performance vLLM inference engine

vLLM is an open-source runtime optimized for serving large language models with exceptional throughput and memory efficiency. Unlike conventional runtimes, vLLM uses continuous batching and advanced GPU scheduling to deliver faster, cheaper inference, which makes it ideal for local deployments and experimentation.
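To see why continuous batching helps, the toy simulation below (not vLLM code) counts decode steps for requests with different output lengths. With static batching, a batch holds its slots until its longest request finishes. With continuous batching, a finished request’s slot is refilled from the queue before the next step. The request lengths and batch size are made-up inputs, and one step stands for one token per active request.

```python
from collections import deque
from typing import List, Sequence


def _validate(lengths: Sequence[int], batch_size: int) -> None:
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    if any(n < 1 for n in lengths):
        raise ValueError("every request needs at least one output token")


def static_batching_steps(lengths: Sequence[int], batch_size: int) -> int:
    """Decode steps when each fixed batch runs until its longest request finishes."""
    _validate(lengths, batch_size)
    steps = 0
    for start in range(0, len(lengths), batch_size):
        steps += max(lengths[start:start + batch_size])
    return steps


def continuous_batching_steps(lengths: Sequence[int], batch_size: int) -> int:
    """Decode steps when freed slots are refilled from the queue before every step."""
    _validate(lengths, batch_size)
    queue = deque(lengths)
    active: List[int] = []  # remaining tokens for each in-flight request
    steps = 0
    while queue or active:
        # Admit waiting requests into any free slots.
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        # One decode step: every in-flight request produces one token.
        active = [remaining - 1 for remaining in active]
        # Finished requests release their slot immediately.
        active = [remaining for remaining in active if remaining > 0]
        steps += 1
    return steps


if __name__ == "__main__":
    lengths = [8, 2, 2, 2, 8, 2, 2, 2]  # output tokens per request (made up)
    print("static:", static_batching_steps(lengths, batch_size=4))
    print("continuous:", continuous_batching_steps(lengths, batch_size=4))
```

For these inputs, static batching takes 16 steps and continuous batching takes 10, because short requests no longer wait behind long ones.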

With Clarifai’s vLLM Toolkit, you can initialize and run Hugging Face models supported by vLLM on your own machine, powered by vLLM’s optimized backend. Your model runs locally but behaves like any hosted Clarifai model through a secure public API endpoint.

Check out the vLLM Toolkit documentation to learn how to initialize and serve vLLM models with Local Runners.

LM Studio Toolkit

Run open-weight models from LM Studio and expose them through Clarifai APIs

LM Studio is a popular desktop application for running and chatting with open-source LLMs locally, with no internet connection required. With Clarifai’s LM Studio Toolkit, you can connect these locally running models to the Clarifai platform, making them callable through a public API while keeping data and execution fully on-device.
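For context on what the toolkit connects to: LM Studio can serve loaded models through a local, OpenAI-compatible HTTP server, by default at http://localhost:1234/v1. The sketch below builds a chat-completion request for that local endpoint using only the standard library. It does not show how Clarifai’s toolkit talks to LM Studio internally, and the model name you pass is a placeholder for one loaded in LM Studio.

```python
import json
import urllib.request

# LM Studio's default local server address; change it if you use another port.
LMSTUDIO_BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, prompt: str,
                       base_url: str = LMSTUDIO_BASE_URL) -> urllib.request.Request:
    """Build (but don't send) an OpenAI-style chat completion request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout_s: float = 60.0) -> str:
    """Send the request to a running LM Studio server and return the reply text."""
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Building and sending are kept separate so the request can be inspected without LM Studio running.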

Developers can use this integration to turn LM Studio models into production-ready APIs with minimal setup.

Read the LM Studio Toolkit guide to see supported setups and learn how to run LM Studio models using Local Runners.

New Models on the Platform

We’ve added several powerful new models optimized for reasoning, long-context tasks, and multimodal capabilities:

- Qwen3-Next-80B-A3B-Thinking: an 80B-parameter, sparsely activated reasoning model that delivers near-flagship performance on complex tasks, with high efficiency in training and in ultra-long-context inference (up to 256K tokens).

- Qwen3-30B-A3B-Instruct-2507: enhanced for comprehension, coding, multilingual knowledge, and user alignment, with 256K-token long-context handling.

- Qwen3-30B-A3B-Thinking-2507: further improved reasoning, general capabilities, alignment, and long-context understanding.
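In the Qwen3 naming scheme, the "A3B" suffix means roughly 3B parameters are activated per token, out of the total shown before it (80B or 30B). The hypothetical helper below parses that convention and computes the active fraction. A small active fraction is what makes these sparse mixture-of-experts models cheaper to run per token than a dense model of the same total size, although all of the weights still have to fit in memory.

```python
import re
from typing import Tuple

# Matches names like "Qwen3-Next-80B-A3B-Thinking": <total>B-A<active>B.
_MOE_PATTERN = re.compile(r"(\d+(?:\.\d+)?)B-A(\d+(?:\.\d+)?)B")


def active_parameter_share(model_name: str) -> Tuple[float, float, float]:
    """Return (total_billions, active_billions, active_fraction) parsed from the name."""
    match = _MOE_PATTERN.search(model_name)
    if match is None:
        raise ValueError(f"no '<total>B-A<active>B' pattern in {model_name!r}")
    total, active = float(match.group(1)), float(match.group(2))
    return total, active, active / total


if __name__ == "__main__":
    for name in ("Qwen3-Next-80B-A3B-Thinking", "Qwen3-30B-A3B-Instruct-2507"):
        total, active, share = active_parameter_share(name)
        print(f"{name}: {active:g}B of {total:g}B active ({share:.2%})")
```

The names encode rounded sizes, so the ratios are approximate: about 3.75% of weights are active per token for the 80B model and about 10% for the 30B models.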

New Cloud Instances: B200s and GH200s

We’ve added new cloud instances to give developers more options for GPU-based workloads:

- B200 instances: competitively priced and hosted in Seattle.

- GH200 instances: powered by Vultr for high-performance workloads.

Learn more about Enterprise-Grade GPU Hosting for AI models and request access, or connect with our AI experts to discuss your workload needs.

Additional Changes

Ready to Start Building?

With the Clarifai Reasoning Engine, you can run reasoning and agentic AI workloads faster, more efficiently, and at lower cost, all while keeping full control over your models. The Reasoning Engine continuously optimizes for throughput and latency, whether you’re using GPT-OSS-120B, Qwen models, or your own custom models.

Bring your own models and see how adaptive optimizations improve performance in real workloads. Talk to our AI experts to learn how the Clarifai Reasoning Engine can optimize the performance of your custom models.