Within the area of multimodal AI, instruction-based picture modifying fashions are reworking how customers work together with visible content material. Simply launched in August 2025 by Alibaba’s Qwen Workforce, Qwen-Picture-Edit builds on the 20B-parameter Qwen-Picture basis to ship superior modifying capabilities. This mannequin excels in semantic modifying (e.g., type switch and novel view synthesis) and look modifying (e.g., exact object modifications), whereas preserving Qwen-Picture’s power in complicated textual content rendering for each English and Chinese language. Built-in with Qwen Chat and accessible through Hugging Face, it lowers obstacles for skilled content material creation, from IP design to error correction in generated paintings.

Structure and Key Improvements

Qwen-Picture-Edit extends the Multimodal Diffusion Transformer (MMDiT) structure of Qwen-Picture, which includes a Qwen2.5-VL multimodal giant language mannequin (MLLM) for textual content conditioning, a Variational AutoEncoder (VAE) for picture tokenization, and the MMDiT spine for joint modeling. For modifying, it introduces twin encoding: the enter picture is processed by Qwen2.5-VL for high-level semantic options and the VAE for low-level reconstructive particulars, concatenated within the MMDiT’s picture stream. This permits balanced semantic coherence (e.g., sustaining object identification throughout pose modifications) and visible constancy (e.g., preserving unmodified areas).

The Multimodal Scalable RoPE (MSRoPE) positional encoding is augmented with a body dimension to distinguish pre- and post-edit pictures, supporting duties like text-image-to-image (TI2I) modifying. The VAE, fine-tuned on text-rich information, achieves superior reconstruction with 33.42 PSNR on basic pictures and 36.63 on text-heavy ones, outperforming FLUX-VAE and SD-3.5-VAE. These enhancements permit Qwen-Picture-Edit to deal with bilingual textual content edits whereas retaining unique font, measurement, and magnificence.

Key Options of Qwen-Picture-Edit

- Semantic and Look Enhancing: Helps low-level visible look modifying (e.g., including, eradicating, or modifying components whereas conserving different areas unchanged) and high-level visible semantic modifying (e.g., IP creation, object rotation, and magnificence switch, permitting pixel modifications with semantic consistency).

- Exact Textual content Enhancing: Permits bilingual (Chinese language and English) textual content modifying, together with direct addition, deletion, and modification of textual content in pictures, whereas preserving the unique font, measurement, and magnificence.

- Sturdy Benchmark Efficiency: Achieves state-of-the-art outcomes on a number of public benchmarks for picture modifying duties, positioning it as a strong basis mannequin for technology and manipulation.

Coaching and Information Pipeline

Leveraging Qwen-Picture’s curated dataset of billions of image-text pairs throughout Nature (55%), Design (27%), Individuals (13%), and Artificial (5%) domains, Qwen-Picture-Edit employs a multi-task coaching paradigm unifying T2I, I2I, and TI2I goals. A seven-stage filtering pipeline refines information for high quality and steadiness, incorporating artificial textual content rendering methods (Pure, Compositional, Advanced) to deal with long-tail points in Chinese language characters.

Coaching makes use of movement matching with a Producer-Client framework for scalability, adopted by supervised fine-tuning and reinforcement studying (DPO and GRPO) for choice alignment. For editing-specific duties, it integrates novel view synthesis and depth estimation, utilizing DepthPro as a instructor mannequin. This ends in strong efficiency, reminiscent of correcting calligraphy errors by means of chained edits.

Superior Enhancing Capabilities

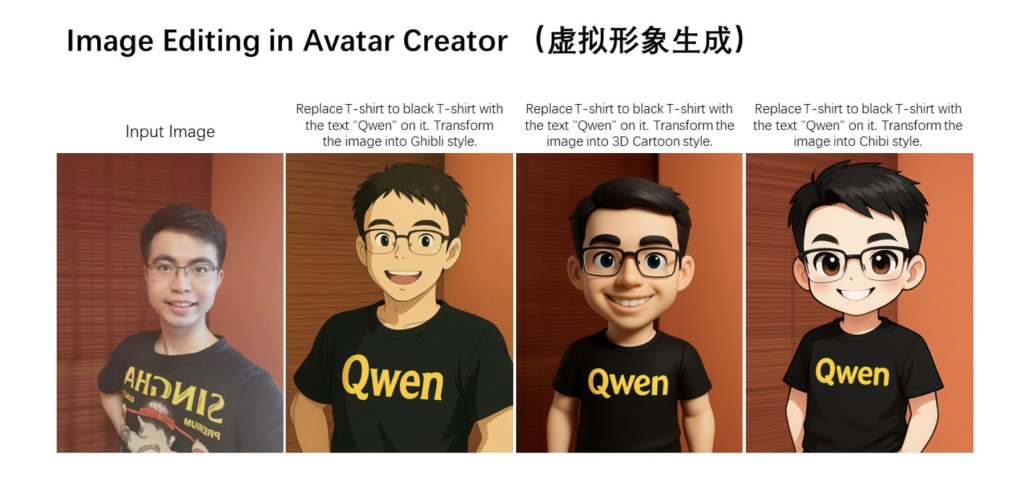

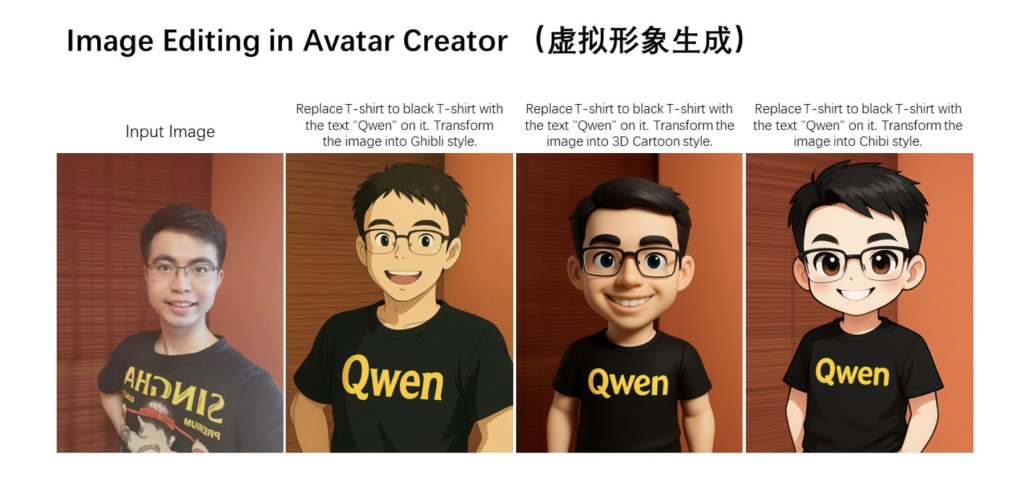

Qwen-Picture-Edit shines in semantic modifying, enabling IP creation like producing MBTI-themed emojis from a mascot (e.g., Capybara) whereas preserving character consistency. It helps 180-degree novel view synthesis, rotating objects or scenes with excessive constancy, reaching 15.11 PSNR on GSO—surpassing specialised fashions like CRM. Type switch transforms portraits into inventive varieties, reminiscent of Studio Ghibli, sustaining semantic integrity.

For look modifying, it provides components like signboards with lifelike reflections or removes effective particulars like hair strands with out altering environment. Bilingual textual content modifying is exact: altering “Hope” to “Qwen” on posters or correcting Chinese language characters in calligraphy through bounding bins. Chained modifying permits iterative corrections, e.g., fixing “稽” step-by-step till correct.

Benchmark Outcomes and Evaluations

Qwen-Picture-Edit leads modifying benchmarks, scoring 7.56 total on GEdit-Bench-EN and seven.52 on CN, outperforming GPT Picture 1 (7.53 EN, 7.30 CN) and FLUX.1 Kontext [Pro] (6.56 EN, 1.23 CN). On ImgEdit, it achieves 4.27 total, excelling in duties like object alternative (4.66) and magnificence modifications (4.81). Depth estimation yields 0.078 AbsRel on KITTI, aggressive with DepthAnything v2.

Human evaluations on AI Area place its base mannequin third amongst APIs, with robust textual content rendering benefits. These metrics spotlight its superiority in instruction-following and multilingual constancy.

Deployment and Sensible Utilization

Qwen-Picture-Edit is deployable through Hugging Face Diffusers:

from diffusers import QwenImageEditPipeline

import torch

from PIL import Picture

pipeline = QwenImageEditPipeline.from_pretrained("Qwen/Qwen-Picture-Edit")

pipeline.to(torch.bfloat16).to("cuda")

picture = Picture.open("enter.png").convert("RGB")

immediate = "Change the rabbit's coloration to purple, with a flash gentle background."

output = pipeline(picture=picture, immediate=immediate, num_inference_steps=50, true_cfg_scale=4.0).pictures

output.save("output.png")

Alibaba Cloud’s Mannequin Studio provides API entry for scalable inference. Licensed beneath Apache 2.0, the GitHub repository supplies coaching code.

Future Implications

Qwen-Picture-Edit advances vision-language interfaces, enabling seamless content material manipulation for creators. Its unified strategy to understanding and technology suggests potential extensions to video and 3D, fostering progressive functions in AI-driven design.

Try the Technical Particulars, Fashions on Hugging Face and Strive the Chat right here. Be at liberty to take a look at our GitHub Web page for Tutorials, Codes and Notebooks. Additionally, be happy to comply with us on Twitter and don’t overlook to hitch our 100k+ ML SubReddit and Subscribe to our E-newsletter.

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is dedicated to harnessing the potential of Synthetic Intelligence for social good. His most up-to-date endeavor is the launch of an Synthetic Intelligence Media Platform, Marktechpost, which stands out for its in-depth protection of machine studying and deep studying information that’s each technically sound and simply comprehensible by a large viewers. The platform boasts of over 2 million month-to-month views, illustrating its reputation amongst audiences.