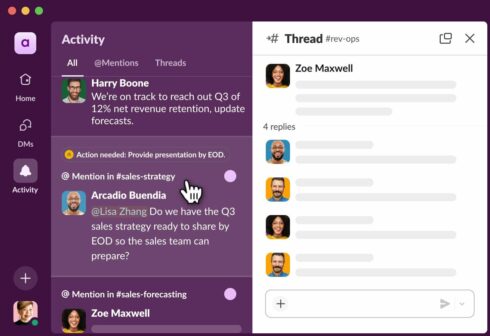

Slack’s AI search now works across an organization’s entire knowledge base

Slack is introducing a number of new AI-powered tools to make team collaboration easier and more intuitive.

“Today, 60% of organizations are using generative AI. But most still fall short of its productivity promise. We’re changing that by putting AI where work already happens, in your messages, your docs, your search, all designed to be intuitive, secure, and built for the way teams actually work,” Slack wrote in a blog post.

The new enterprise search capability will enable users to search not just in Slack, but in any app that is connected to Slack. It can search across systems of record like Salesforce or Confluence, file repositories like Google Drive or OneDrive, developer tools like GitHub or Jira, and project management tools like Asana.

“Enterprise search is about turning fragmented information into actionable insights, helping you make quicker, more informed decisions, without leaving Slack,” the company explained.

The platform is also getting AI-generated channel recaps and thread summaries, helping users catch up on conversations quickly. It is introducing AI-powered translations as well, so users can read and respond in their preferred language.

Anthropic’s Claude Code gets new analytics dashboard to provide insights into how teams are using AI tooling

Anthropic has announced the launch of a new analytics dashboard in Claude Code to give development teams insights into how they are using the tool.

It tracks metrics such as lines of code accepted, suggestion acceptance rate, total user activity over time, total spend over time, average daily spend for each user, and average daily lines of code accepted for each user.

These metrics can help organizations understand developer satisfaction with Claude Code suggestions, track code generation effectiveness, and identify opportunities for process improvements.

Mistral launches first voice model

Voxtral is an open-weight model for speech understanding that Mistral says offers “state-of-the-art accuracy and native semantic understanding in the open, at less than half the price of comparable APIs. This makes high-quality speech intelligence accessible and controllable at scale.”

It comes in two model sizes: a 24B version for production-scale applications and a 3B version for local deployments. Both sizes are available under the Apache 2.0 license and can be accessed via Mistral’s API.

JFrog releases MCP server

The MCP server will allow users to create and view projects and repositories, get detailed vulnerability information from JFrog, and review the components in use at an organization.

“The JFrog Platform delivers DevOps, Security, MLOps, and IoT services across your software supply chain. Our new MCP Server enhances its accessibility, making it even easier to integrate into your workflows and the daily work of developers,” JFrog wrote in a blog post.

JetBrains announces updates to its coding agent Junie

Junie is now fully integrated into GitHub, enabling asynchronous development with features such as the ability to delegate multiple tasks simultaneously, the ability to make quick fixes without opening the IDE, team collaboration directly in GitHub, and seamless switching between the IDE and GitHub. Junie on GitHub is currently in an early access program and only supports JVM and PHP.

JetBrains also added support for MCP to enable Junie to connect to external sources. Other new features include 30% faster task completion and support for remote development on macOS and Linux.

Gemini API gets first embedding model

These types of models generate embeddings for words, phrases, sentences, and code, to provide context-aware results that are more accurate than keyword-based approaches. “They efficiently retrieve relevant information from knowledge bases, represented by embeddings, which are then passed as additional context in the input prompt to language models, guiding it to generate more informed and accurate responses,” the Gemini docs say.
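To make that retrieval flow concrete, here is a minimal Python sketch of embedding-based lookup followed by prompt assembly. It does not call the Gemini API: the three-dimensional vectors are hand-made stand-ins for real embeddings, and the names `cosine_similarity`, `retrieve`, `build_prompt`, and `KNOWLEDGE_BASE` are hypothetical, chosen only for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query_vec, knowledge_base, top_k=2):
    """Return the texts of the top_k entries most similar to the query vector."""
    ranked = sorted(
        knowledge_base,
        key=lambda item: cosine_similarity(query_vec, item["embedding"]),
        reverse=True,
    )
    return [item["text"] for item in ranked[:top_k]]

def build_prompt(question, passages):
    """Place the retrieved passages ahead of the question as additional context."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {question}"

# Toy knowledge base: in practice each vector would come from an embedding model.
KNOWLEDGE_BASE = [
    {"text": "Refunds are processed within 5 business days.", "embedding": [0.9, 0.1, 0.0]},
    {"text": "The office is closed on public holidays.", "embedding": [0.0, 0.2, 0.9]},
    {"text": "Refund requests need an order number.", "embedding": [0.8, 0.3, 0.1]},
]

# Stand-in for the embedding of the question below (assumed, not computed by a model).
query_vec = [1.0, 0.2, 0.0]
question = "How long do refunds take?"

passages = retrieve(query_vec, KNOWLEDGE_BASE, top_k=2)
prompt = build_prompt(question, passages)
print(prompt)
```

With these toy vectors, the two refund-related passages rank above the unrelated one, so only they are passed to the language model as context.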

The embedding model in the Gemini API supports over 100 languages and an input length of 2,048 tokens. It will be offered via both free and paid tiers, so developers can experiment with it for free and then scale up as needed.