
In the world of monitoring software, how you process telemetry data can significantly affect your ability to derive insights, troubleshoot issues, and manage costs.

There are two main use cases for how telemetry data is leveraged:

- Radar (monitoring of systems) usually falls into the bucket of known knowns and known unknowns. This leads to scenarios where some data is almost ‘pre-determined’ to behave, or be plotted, in a certain way, because we know what we’re looking for.

- Blackbox (debugging, RCA, etc.), on the other hand, has more to do with unknown unknowns: what we don’t know and may need to hunt for to build an understanding of the system.

Understanding Telemetry Data Challenges

Before diving into processing approaches, it’s important to understand the unique challenges of telemetry data:

- Volume: Modern systems generate massive amounts of telemetry data

- Velocity: Data arrives in continuous, high-throughput streams

- Variety: Multiple formats across metrics, logs, traces, profiles, and events

- Time-sensitivity: Value often decreases with age

- Correlation needs: Data from different sources must be linked together

These characteristics create specific considerations when choosing between ETL and ELT approaches.

ETL for Telemetry: Transform-First Architecture

Technical Architecture

In an ETL approach, telemetry data undergoes transformation before reaching its final destination.

A typical implementation stack might include:

- Collection: OpenTelemetry, Prometheus, Fluent Bit

- Transport: Kafka, Kinesis, or in-memory as the buffering layer

- Transformation: Stream processing

- Storage: Time-series databases (Prometheus), specialized indices, or object storage (S3)

Key Technical Components

- Aggregation Strategies

Pre-aggregation significantly reduces data volume and query complexity. A typical pre-aggregation flow looks like this:

This transformation condenses raw data into 5-minute summaries, dramatically reducing storage requirements and improving query performance.

Example: For a gaming application handling millions of requests per day, raw request latency metrics (potentially billions of data points) can be grouped by service and endpoint, then aggregated into 5-minute (or 1-minute) windows. A single API endpoint that generates 100 latency data points per second (8.64 million per day) is reduced to just 288 aggregated entries per day (one per 5-minute window), while still preserving the critical p50/p90/p99 percentiles needed for SLA monitoring.
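The windowing step described above can be sketched in a few lines of Python. This is a minimal illustration, not a production stream processor: the tuple layout `(timestamp, service, endpoint, latency_ms)`, the function names, and the nearest-rank percentile method are all assumptions made for the example.

```python
from collections import defaultdict

WINDOW_SECONDS = 300  # 5-minute windows, as in the example above


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, (pct * len(sorted_values) + 99) // 100)  # integer ceil
    return sorted_values[rank - 1]


def pre_aggregate(points):
    """Collapse raw (timestamp, service, endpoint, latency_ms) points
    into one summary row per (service, endpoint, window)."""
    buckets = defaultdict(list)
    for ts, service, endpoint, latency_ms in points:
        window_start = int(ts) - int(ts) % WINDOW_SECONDS
        buckets[(service, endpoint, window_start)].append(latency_ms)

    summaries = []
    for (service, endpoint, window_start), values in sorted(buckets.items()):
        values.sort()
        summaries.append({
            "service": service,
            "endpoint": endpoint,
            "window_start": window_start,
            "count": len(values),
            "p50": percentile(values, 50),
            "p90": percentile(values, 90),
            "p99": percentile(values, 99),
        })
    return summaries


# Ten minutes of synthetic data: one point per second for one endpoint.
raw = [(t, "game-api", "/match", float(t % 100)) for t in range(600)]
rows = pre_aggregate(raw)
print(len(raw), "raw points ->", len(rows), "summary rows")

# The arithmetic from the example: 100 points/s for a day vs. 5-minute windows.
raw_per_day = 100 * 86_400
rows_per_day = 86_400 // WINDOW_SECONDS
print(raw_per_day, "raw points/day ->", rows_per_day, "rows/day")
```

Percentiles have to be computed inside the window from the raw values, which is why they are calculated before the raw points are discarded; averaging per-window percentiles later does not give a correct overall percentile.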

- Cardinality Management

High-cardinality metrics can break time-series databases. The cardinality management process follows this pattern:

Effective strategies include:

- Label filtering and normalization

- Strategic aggregation of specific dimensions

- Hashing techniques for high-cardinality values while preserving query patterns

Example: A microservice monitoring HTTP requests includes user IDs and request paths in its metrics. With 50,000 daily active users and thousands of unique URL paths, this creates millions of unique label combinations. The cardinality management system filters out user IDs entirely (this is configurable; their cardinality is too high), normalizes URL paths by replacing dynamic segments with placeholders (e.g., /customers/123/profile becomes /customers/{id}/profile), and applies consistent categorization to errors. This reduces unique time series from millions to hundreds, allowing the time-series database to perform efficiently.
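A minimal sketch of the three steps in this example (dropping a label, normalizing paths, and bucketing errors) might look like the following. The label names `user_id`, `path`, and `status`, the deny-list, and the rule that numeric or UUID-shaped segments are "dynamic" are assumptions for illustration; a real system would make these configurable.

```python
import re

DROPPED_LABELS = {"user_id"}  # assumed, configurable deny-list
NUMERIC_SEGMENT = re.compile(r"^\d+$")
UUID_SEGMENT = re.compile(
    r"^[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}$"
)


def normalize_path(path):
    """Replace dynamic path segments with an {id} placeholder."""
    segments = []
    for segment in path.split("/"):
        if NUMERIC_SEGMENT.match(segment) or UUID_SEGMENT.match(segment):
            segments.append("{id}")
        else:
            segments.append(segment)
    return "/".join(segments)


def categorize_status(status_code):
    """Bucket HTTP status codes into a small fixed set of classes."""
    return f"{status_code // 100}xx"


def reduce_labels(labels):
    """Return a low-cardinality copy of one metric's label set."""
    reduced = {k: v for k, v in labels.items() if k not in DROPPED_LABELS}
    if "path" in reduced:
        reduced["path"] = normalize_path(reduced["path"])
    if "status" in reduced:
        reduced["status"] = categorize_status(int(reduced["status"]))
    return reduced


# 1,000 distinct users hitting the same logical endpoint.
raw_series = [
    {"user_id": str(uid), "path": f"/customers/{uid}/profile", "status": "200"}
    for uid in range(1000)
]
unique = {tuple(sorted(reduce_labels(s).items())) for s in raw_series}
print(len(raw_series), "raw label sets ->", len(unique), "after reduction")
```

Because the reduction is applied before storage, the dropped dimension cannot be recovered later, which is the trade-off the limitations list for ETL calls out.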

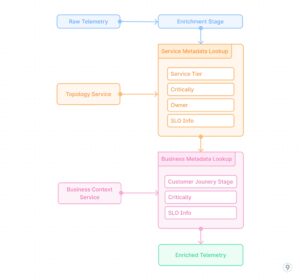

- Real-time Enrichment

Adding context to metrics during the transformation phase involves integrating external data sources:

This process adds critical business and operational context to raw telemetry data, enabling more meaningful analysis and alerting based on service importance, customer impact, and other factors beyond pure technical metrics.

Example: A payment processing service emits basic metrics like request counts, latencies, and error rates. The enrichment pipeline joins this telemetry with service registry data to add metadata about the service tier (critical), SLO targets (99.99% availability), and team ownership (payments-team). It then incorporates business context to tag transactions with their type (subscription renewal, one-time purchase, refund) and estimated revenue impact. When an incident occurs, alerts are automatically prioritized based on business impact rather than just technical severity, and routed to the appropriate team with rich context.
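As a rough sketch of that enrichment join: the registry contents below mirror the values in the example (critical tier, 99.99% SLO, payments-team), but the dictionary layout, the `REVENUE_WEIGHT` table, and the priority formula are hypothetical and exist only to show the shape of the step.

```python
# Hypothetical service registry; tier, SLO, and team mirror the example above.
SERVICE_REGISTRY = {
    "payments": {"tier": "critical", "slo_availability": 99.99, "team": "payments-team"},
}

# Assumed revenue weights per transaction type, for illustration only.
REVENUE_WEIGHT = {"subscription_renewal": 3, "one_time_purchase": 2, "refund": 1}


def enrich(metric):
    """Return a copy of the metric with registry and business context attached."""
    enriched = dict(metric)
    enriched.update(SERVICE_REGISTRY.get(metric["service"], {}))
    enriched["revenue_weight"] = REVENUE_WEIGHT.get(metric.get("transaction_type"), 0)
    return enriched


def alert_priority(enriched):
    """Rank an alert by business impact first, technical severity second."""
    tier_score = 10 if enriched.get("tier") == "critical" else 1
    return tier_score * (1 + enriched["revenue_weight"]) * enriched["error_rate"]


metric = {"service": "payments", "error_rate": 0.05, "transaction_type": "subscription_renewal"}
enriched = enrich(metric)
print(enriched["team"], round(alert_priority(enriched), 2))
```

The `team` field added during enrichment is what lets the alert be routed without a second lookup at incident time.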

Technical Advantages

- Query performance: Pre-calculated aggregates eliminate computation at query time

- Predictable resource usage: Both storage and query compute are controlled

- Schema enforcement: Data conformity is guaranteed before storage

- Optimized storage formats: Data can be stored in formats optimized for specific access patterns

Technical Limitations

- Loss of granularity: Some detail is permanently lost

- Schema rigidity: Adapting to new requirements requires pipeline changes

- Processing overhead: Real-time transformation adds complexity and resource demands

- Transformation-time decisions: Analysis paths must be known in advance

ELT for Telemetry: Raw Storage with Flexible Transformation

Technical Architecture

ELT architecture prioritizes getting raw data into storage, with transformations performed at query time:

A typical implementation might include:

- Collection: OpenTelemetry, Prometheus, Fluent Bit

- Transport: Direct ingestion without complex processing

- Storage: Object storage (S3, GCS) or data lakes in Parquet format

- Transformation: SQL engines (Presto, Athena), Spark jobs, or specialized OLAP systems

Key Technical Components

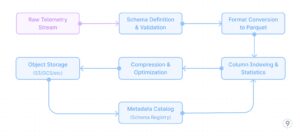

- Efficient Raw Storage

Optimizing for long-term storage of raw telemetry requires careful consideration of file formats and storage organization:

This approach leverages columnar storage formats like Parquet with appropriate compression (ZSTD for traces, Snappy for metrics), dictionary encoding, and optimized column indexing based on common query patterns (trace_id, service, time ranges).

Example: A cloud-native application generates 10TB of trace data daily across its distributed services. Instead of discarding or heavily sampling this data, the complete trace information is captured using OpenTelemetry collectors and converted to Parquet format with ZSTD compression. Key fields like trace_id, service name, and timestamp are indexed for efficient querying. This approach reduces the storage footprint by 85% compared to raw JSON while maintaining query performance. When a critical customer-impacting issue occurred, engineers were able to access complete trace data from 3 months prior, identifying a subtle pattern of intermittent failures that would have been lost with traditional sampling.
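To make the ideas of columnar layout and dictionary encoding concrete, here is a toy sketch using only the Python standard library. It is not Parquet and does not use ZSTD: per-column JSON blobs stand in for column chunks and `zlib` stands in for the compression codec, purely so the example runs without extra dependencies. The span fields and function names are assumptions.

```python
import json
import zlib


def dictionary_encode(values):
    """Map repeated values to small integer codes plus a lookup table."""
    dictionary, codes, index = [], [], {}
    for value in values:
        if value not in index:
            index[value] = len(dictionary)
            dictionary.append(value)
        codes.append(index[value])
    return dictionary, codes


def dictionary_decode(dictionary, codes):
    """Rebuild the original column from its dictionary and codes."""
    return [dictionary[code] for code in codes]


def to_columnar(rows, dict_columns):
    """Pivot row dicts into per-column blobs, dictionary-encoding chosen columns."""
    blobs = {}
    for name in rows[0]:
        column = [row[name] for row in rows]
        if name in dict_columns:
            dictionary, codes = dictionary_encode(column)
            payload = {"dict": dictionary, "codes": codes}
        else:
            payload = {"values": column}
        # zlib is a stand-in here; the article's setup uses ZSTD or Snappy.
        blobs[name] = zlib.compress(json.dumps(payload).encode("utf-8"))
    return blobs


def read_column(blobs, name):
    """Decompress and decode a single column without touching the others."""
    payload = json.loads(zlib.decompress(blobs[name]))
    if "dict" in payload:
        return dictionary_decode(payload["dict"], payload["codes"])
    return payload["values"]


spans = [
    {"trace_id": f"{i:032x}", "service": ["checkout", "cart", "auth"][i % 3],
     "timestamp": 1_700_000_000 + i, "duration_ms": 5 + i % 40}
    for i in range(5000)
]
raw_json_bytes = len("\n".join(json.dumps(s) for s in spans).encode("utf-8"))
blobs = to_columnar(spans, dict_columns={"service"})
columnar_bytes = sum(len(b) for b in blobs.values())
print(f"raw JSON: {raw_json_bytes} bytes, columnar + compressed: {columnar_bytes} bytes")
```

The ratio this prints depends entirely on the synthetic data and says nothing about the 85% figure quoted above; the point is only that a query needing `service` can read one column and skip the rest.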

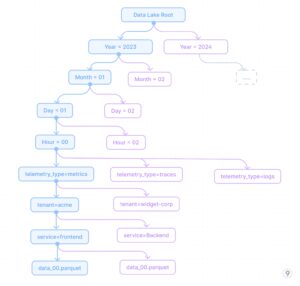

- Partitioning Strategies

Effective partitioning is crucial for query performance against raw telemetry. A well-designed partitioning strategy follows this hierarchy:

This partitioning approach enables efficient time-range queries while also allowing filtering by service and tenant, which are common query dimensions. The partitioning strategy is designed to:

- Optimize for time-based retrieval (the most common query pattern)

- Enable efficient tenant isolation for multi-tenant systems

- Allow service-specific queries without scanning all data

- Separate telemetry types for optimized storage formats per type

Example: A SaaS platform with 200+ enterprise customers uses this partitioning strategy for its observability data lake. When a high-priority customer reports an issue that occurred last Tuesday between 2 and 4 pm, engineers can immediately query just those specific partitions: /yr=2023/month=11/day=07/hour=1[4-5]/tenant=enterprise-x/*. This approach reduces the scan size from potentially petabytes to just a few gigabytes, enabling responses in seconds rather than hours. When comparing current performance against historical baselines, the time-based partitioning enables efficient month-over-month comparisons by scanning only the relevant time partitions.
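A small sketch of how a query planner might turn a time range and tenant into the partition prefixes from this example. The key names (`yr`, `month`, `day`, `hour`, `tenant`) are copied from the path shown above; the function names and the hourly granularity are assumptions.

```python
from datetime import datetime, timedelta


def partition_path(ts, tenant):
    """Build the partition prefix for one hour of one tenant's data."""
    return (
        f"/yr={ts.year}/month={ts.month:02d}/day={ts.day:02d}"
        f"/hour={ts.hour:02d}/tenant={tenant}/"
    )


def partitions_for_range(start, end, tenant):
    """List every hourly partition overlapping the half-open range [start, end)."""
    current = start.replace(minute=0, second=0, microsecond=0)
    paths = []
    while current < end:
        paths.append(partition_path(current, tenant))
        current += timedelta(hours=1)
    return paths


# Tuesday 7 November 2023, 2 pm to 4 pm, for one tenant.
paths = partitions_for_range(
    datetime(2023, 11, 7, 14), datetime(2023, 11, 7, 16), "enterprise-x"
)
for path in paths:
    print(path)
```

Only two prefixes are listed for the two-hour window, so the engine never has to open files belonging to other hours or other tenants.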

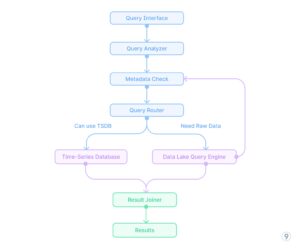

- Query-time Transformations

SQL and analytical engines provide powerful query-time transformations. The query processing flow for on-the-fly analysis looks like this (see Fig. 8).

This query flow demonstrates how complex analysis, such as calculating service latency percentiles, error rates, and usage patterns, can be performed entirely at query time without any pre-computation. The analytical engine applies optimizations like predicate pushdown, parallel execution, and columnar processing to achieve reasonable performance even against large raw datasets.

Example: A DevOps team investigating a performance regression discovered it only affected premium customers using a specific feature. Using query-time transformations against the ELT data lake, they wrote a single query that first filtered to the affected time period, joined customer tier information, extracted relevant attributes about feature usage, calculated percentile response times grouped by customer segment, and identified that premium customers with high transaction volumes were experiencing degraded performance only when a specific optional feature flag was enabled. This analysis would have been impossible with pre-aggregated data, since the customer segment + feature flag dimension hadn’t previously been identified as important for monitoring.
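The shape of such a query (filter by time, join tier data, group by segment and flag, compute a percentile) can be sketched with an in-memory SQLite database standing in for the data lake engine. The table and column names and the synthetic data are assumptions; SQLite has no built-in percentile function, so that last step is done in Python here, whereas engines like Presto provide approximate percentile functions natively.

```python
import sqlite3
from collections import defaultdict

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE requests (ts INTEGER, customer_id TEXT, feature_flag INTEGER, latency_ms REAL);
    CREATE TABLE customers (customer_id TEXT PRIMARY KEY, tier TEXT);
""")
conn.executemany("INSERT INTO customers VALUES (?, ?)",
                 [("c1", "premium"), ("c2", "standard")])

# Synthetic raw events: premium traffic with the flag enabled is made slow.
events = []
for i in range(400):
    customer = "c1" if i % 2 == 0 else "c2"
    flag = (i // 2) % 2
    latency = 900.0 if (customer == "c1" and flag) else 100.0 + i % 10
    events.append((1000 + i, customer, flag, latency))
conn.executemany("INSERT INTO requests VALUES (?, ?, ?, ?)", events)

# Filter to the incident window and join tier information at query time.
rows = conn.execute("""
    SELECT c.tier, r.feature_flag, r.latency_ms
    FROM requests AS r JOIN customers AS c USING (customer_id)
    WHERE r.ts BETWEEN ? AND ?
    ORDER BY r.latency_ms
""", (1000, 1399)).fetchall()

groups = defaultdict(list)
for tier, flag, latency in rows:
    groups[(tier, flag)].append(latency)  # rows arrive sorted by latency


def p95(sorted_values):
    """Nearest-rank 95th percentile of a sorted, non-empty list."""
    rank = max(1, (95 * len(sorted_values) + 99) // 100)
    return sorted_values[rank - 1]


report = {key: p95(values) for key, values in groups.items()}
for (tier, flag), value in sorted(report.items()):
    print(f"tier={tier} flag={flag} p95={value} ms")
```

Nothing about the tier and flag grouping was decided at ingest time; the dimension is introduced in the query, which is exactly what pre-aggregated data cannot support after the fact.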

Technical Advantages

- Schema flexibility: New dimensions can be analyzed without pipeline changes

- Cost-effective storage: Object storage is significantly cheaper than specialized DBs

- Retroactive analysis: Historical data can be examined with new perspectives

Technical Limitations

- Query performance challenges: Interactive analysis may be slow on large datasets

- Resource-intensive analysis: Compute costs can be high for complex queries

- Implementation complexity: Requires more sophisticated query tooling

- Storage overhead: Raw data consumes significantly more space

Technical Implementation: The Hybrid Approach

Core Architecture Components

Implementation Strategy

- Dual-path processing

Example: A global ride-sharing platform implemented a dual-path telemetry system that routes service health metrics and customer experience indicators (ride wait times, ETA accuracy) through the ETL path for real-time dashboards and alerting. Meanwhile, all raw data, including detailed user journeys, driver activities, and application logs, flows through the ELT path to cost-effective storage. When a regional outage occurred, operations teams used the real-time dashboards to quickly identify and mitigate the immediate issue. Later, data scientists used the preserved raw data to perform a comprehensive root cause analysis, correlating multiple factors that wouldn’t have been visible in pre-aggregated data alone.
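A minimal sketch of the dual-path idea: every event is appended to raw storage, and a configured subset is also folded into running aggregates. The class, the metric names in `REALTIME_METRICS`, and the in-memory list standing in for the data lake are all hypothetical.

```python
from collections import defaultdict

# Assumed names for the metrics that feed real-time dashboards.
REALTIME_METRICS = {"service_health", "ride_wait_time", "eta_accuracy"}


class DualPathPipeline:
    """Send every event to raw storage; also aggregate selected metrics."""

    def __init__(self):
        self.raw_store = []  # stand-in for the ELT data lake
        self.aggregates = defaultdict(lambda: {"count": 0, "total": 0.0})

    def ingest(self, event):
        self.raw_store.append(event)  # ELT path: keep everything as-is
        if event["name"] in REALTIME_METRICS:  # ETL path: pre-aggregate
            bucket = self.aggregates[(event["name"], event["region"])]
            bucket["count"] += 1
            bucket["total"] += event["value"]

    def dashboard_value(self, name, region):
        """Mean of a real-time metric, served from the aggregates only."""
        bucket = self.aggregates.get((name, region))
        return bucket["total"] / bucket["count"] if bucket else None


pipeline = DualPathPipeline()
pipeline.ingest({"name": "ride_wait_time", "region": "eu", "value": 4.0})
pipeline.ingest({"name": "ride_wait_time", "region": "eu", "value": 6.0})
pipeline.ingest({"name": "app_log", "region": "eu", "value": 0.0, "msg": "driver accepted"})
print(pipeline.dashboard_value("ride_wait_time", "eu"), len(pipeline.raw_store))
```

The dashboard read never touches `raw_store`, while the log line that the ETL path ignores is still available there for later root cause analysis.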

- Smart data routing

Example: A financial services company deployed a smart routing system for its telemetry data. All data is preserved in the data lake, but critical metrics like transaction success rates, fraud detection alerts, and authentication service health metrics are immediately routed to the real-time processing pipeline. Additionally, any security-related events, such as failed login attempts, permission changes, or unusual access patterns, are immediately sent to a dedicated security analysis pipeline. During a recent security incident, this routing enabled the security team to detect and respond to an unusual pattern of authentication attempts within minutes, while the complete context of user journeys and application behavior was preserved in the data lake for subsequent forensic analysis.
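The routing rules in this example reduce to a small decision function. The event names and destination labels below are hypothetical; the only behavior taken from the text is that everything goes to the data lake, critical metrics also go to the real-time pipeline, and security events also go to a dedicated security pipeline.

```python
# Assumed event names; a real deployment would load these from configuration.
CRITICAL_METRICS = {"transaction_success_rate", "fraud_alert", "auth_service_health"}
SECURITY_EVENTS = {"failed_login", "permission_change", "unusual_access"}


def route(event):
    """Return the ordered list of destinations for one telemetry event."""
    destinations = ["data_lake"]  # everything is preserved
    if event.get("name") in CRITICAL_METRICS:
        destinations.append("realtime_pipeline")
    if event.get("name") in SECURITY_EVENTS:
        destinations.append("security_pipeline")
    return destinations


for name in ("page_view", "fraud_alert", "failed_login"):
    print(name, "->", route({"name": name}))
```

Keeping the data lake as an unconditional first destination means a mistake in the rule sets can delay detection but cannot lose data.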

- Unified query interface

Real-world Implementation Example

A specific engineering implementation at last9.io demonstrates how this hybrid approach works in practice:

For a large-scale Kubernetes platform with hundreds of clusters and thousands of services, we implemented a hybrid telemetry pipeline with:

- Critical-path metrics processed through a pipeline that:

  - Performs dimensional reduction (limiting label combinations)

  - Pre-calculates service-level aggregations

  - Computes derived metrics like success rates and latency percentiles

- Raw telemetry stored in a cost-effective data lake:

  - Partitioned by time, data type, and tenant

  - Optimized for typical query patterns

  - Compressed with appropriate formats (Zstd for traces, Snappy for metrics)

- Unified query layer that:

  - Routes dashboard and alerting queries to pre-aggregated storage

  - Redirects exploratory and ad-hoc analysis to the data lake

  - Manages correlation queries across both systems

This approach delivered both the query performance needed for real-time operations and the analytical depth required for complex troubleshooting.
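The routing behavior of the unified query layer listed above could be sketched as a small planner. This is not Last9's implementation: the `Query` type, the purpose strings, the backend labels, and the fallback rule (a dashboard query that needs a dimension missing from the aggregates goes to the data lake) are all assumptions added for illustration.

```python
from dataclasses import dataclass


@dataclass
class Query:
    purpose: str           # "dashboard", "alerting", "exploratory", "ad_hoc", "correlation"
    dimensions: frozenset  # label names the query groups or filters by


# Assumed set of dimensions that survive pre-aggregation.
PRE_AGGREGATED_DIMENSIONS = frozenset({"service", "endpoint", "region"})


def plan(query):
    """Choose which backend(s) should answer a query."""
    if query.purpose == "correlation":
        return ["pre_aggregated", "data_lake"]
    operational = query.purpose in {"dashboard", "alerting"}
    if operational and query.dimensions <= PRE_AGGREGATED_DIMENSIONS:
        return ["pre_aggregated"]
    return ["data_lake"]


print(plan(Query("dashboard", frozenset({"service"}))))
print(plan(Query("exploratory", frozenset({"customer_tier", "feature_flag"}))))
print(plan(Query("correlation", frozenset({"service"}))))
```

Checking the requested dimensions, not just the query's purpose, keeps the planner from sending a query to aggregates that cannot answer it.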

Decision Framework

When architecting telemetry pipelines, these technical considerations should guide your approach:

| Decision Factor | Use ETL | Use ELT |
| --- | --- | --- |
| Query latency requirements | < 1 second | Can wait minutes |
| Data retention needs | Days/Weeks | Months/Years |
| Cardinality | Low/Medium | Very high |
| Analysis patterns | Well-defined | Exploratory |
| Budget priority | Compute | Storage |

Conclusion

The technical realities of telemetry data processing demand thinking beyond simple ETL vs. ELT paradigms. Engineering teams should architect tiered systems that leverage the strengths of both approaches:

- ETL-processed data for operational use cases requiring immediate insights

- ELT-processed data for deeper analysis, troubleshooting, and historical patterns

- Metadata-driven routing to intelligently direct queries to the appropriate tier

This engineering-centric approach balances performance requirements with cost considerations while maintaining the flexibility required in modern observability systems.

About the author: Nishant Modak is the founder and CEO of Last9, a high cardinality observability platform company backed by Sequoia India (now PeakXV). He’s been an entrepreneur and working with large-scale companies for nearly 20 years.

