On Monday, Apple shared its plans to let users opt into on-device Apple Intelligence training using Differential Privacy techniques that are strikingly similar to its failed CSAM detection system.

Differential Privacy is a concept Apple embraced publicly in 2016 with iOS 10. It’s a privacy-preserving method of data collection that adds noise to sample data to prevent the data collectors from figuring out where the data came from.

According to a post on Apple’s Machine Learning Research blog, Apple is working to use Differential Privacy as a way to gather user data to train Apple Intelligence. The data is provided on an opt-in basis, anonymously, and in a way that can’t be traced back to an individual user.

The story was first covered by Bloomberg, which explained Apple’s report on using synthetic data trained on real-world user information. However, it’s not as simple as grabbing user data off an iPhone to analyze in a server farm.

Instead, Apple will use a technique called Differential Privacy, which, if you’ve forgotten, is a system designed to introduce noise into data collection so individual data points can’t be traced back to their source. Apple takes it a step further by leaving user data on the device: it only polls for accuracy and takes the poll results off the user’s device.
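To make “introduce noise” concrete, here is a minimal Python sketch of randomized response, the textbook local differential privacy mechanism for a single yes/no answer. It is an illustration under stated assumptions, not Apple’s implementation: the article doesn’t say which mechanism or privacy parameter (epsilon) Apple uses, and the function names and simulated population are hypothetical.

```python
import math
import random


def randomized_response(truth: bool, epsilon: float, rng: random.Random) -> bool:
    """Device side (hypothetical): report one yes/no answer with epsilon-local DP.

    The true answer is kept with probability e^eps / (e^eps + 1) and flipped
    otherwise, so any single report is plausibly deniable.
    """
    p_keep = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    return truth if rng.random() < p_keep else not truth


def estimate_yes_count(reports: list[bool], epsilon: float) -> float:
    """Server side (hypothetical): unbiased estimate of how many devices truly said yes.

    E[observed yes] = p*k + (1 - p)*(n - k); solving for k gives the formula below.
    """
    n = len(reports)
    p_keep = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    observed_yes = sum(reports)
    return (observed_yes - (1.0 - p_keep) * n) / (2.0 * p_keep - 1.0)


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical population: 3,000 of 10,000 devices would truly answer yes.
    truths = [i < 3000 for i in range(10_000)]
    reports = [randomized_response(t, 1.0, rng) for t in truths]
    print(round(estimate_yes_count(reports, 1.0)))  # near 3000, not exact
```

No single report can be trusted, since any answer might have been flipped, yet across thousands of devices the flips average out and the server can still estimate the overall count.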

These methods ensure that Apple’s principles of privacy and security are preserved. Users who opt into sharing device analytics will participate in this system, but none of their raw data will ever leave their iPhone; only noisy poll results do.

Analyzing data without identifiers

Differential Privacy is a concept that dates back to at least 2006, and one Apple leaned on and developed for years, but the company didn’t make it part of its public identity until 2016. Apple first used it to learn how people used emoji, to find new words for local dictionaries, to power deep links within apps, and to improve lookup hints in Notes.

Apple says that starting with iOS 18.5, Differential Privacy will be used to analyze user data and train specific Apple Intelligence systems, starting with Genmoji. It will be able to identify patterns in the common prompts people use, so Apple can better train the AI and get better results for those prompts.

Basically, Apple supplies artificial prompts it believes are popular, like “dinosaur in a cowboy hat,” and looks for pattern matches in user analytics data. Because of artificially injected noise and a threshold requiring hundreds of fragment matches, there’s no way to surface unique or individually identifying prompts.

Plus, these searches for prompt fragments only produce a positive or negative poll result, so no prompt text is extracted from the analysis. Again, no data can be isolated and traced back to a single person or identifier.
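The polling described above can be sketched as follows, assuming a fixed list of candidate prompt fragments, a one-hot report with every bit randomly flipped on the device, and a server that only surfaces candidates whose debiased count clears a threshold. The candidate strings, the epsilon of 4.0, the threshold of 500, and all function names are hypothetical, and this symmetric unary encoding scheme is a stand-in, not necessarily the mechanism Apple actually uses.

```python
import math
import random


def match_candidate(recent_prompts, candidates):
    """Device side (hypothetical): index of the first candidate fragment found
    in any of the user's recent prompts, or None if nothing matches."""
    for j, fragment in enumerate(candidates):
        if any(fragment in prompt.lower() for prompt in recent_prompts):
            return j
    return None


def encode_report(matched_index, num_candidates, epsilon, rng):
    """Device side: one-hot encode the match (all zeros for no match), then
    flip each bit independently.

    Keeping each bit with p = e^(eps/2) / (e^(eps/2) + 1) bounds the output
    probability ratio for any two inputs by e^eps, because two inputs differ
    in at most two bits.
    """
    p_keep = math.exp(epsilon / 2) / (math.exp(epsilon / 2) + 1)
    report = []
    for j in range(num_candidates):
        bit = 1 if j == matched_index else 0
        if rng.random() >= p_keep:
            bit = 1 - bit
        report.append(bit)
    return report


def popular_candidates(reports, candidates, epsilon, threshold):
    """Server side: debias each candidate's count and keep only those whose
    estimate clears the threshold."""
    n = len(reports)
    p = math.exp(epsilon / 2) / (math.exp(epsilon / 2) + 1)
    q = 1 - p
    popular = {}
    for j, fragment in enumerate(candidates):
        observed = sum(report[j] for report in reports)
        # E[observed] = p*c + q*(n - c), solved for the true count c.
        estimate = (observed - q * n) / (p - q)
        if estimate >= threshold:
            popular[fragment] = estimate
    return popular


if __name__ == "__main__":
    rng = random.Random(7)
    candidates = ["dinosaur in a cowboy hat", "cat wearing sunglasses", "purple giraffe astronaut"]
    # Hypothetical devices, each holding a list of recent prompts.
    devices = ([["A dinosaur in a cowboy hat on a skateboard"]] * 2000
               + [["cat wearing sunglasses"]] * 50
               + [["mountain at sunset"]] * 17_950)
    reports = [encode_report(match_candidate(d, candidates), len(candidates), 4.0, rng)
               for d in devices]
    print(popular_candidates(reports, candidates, 4.0, threshold=500))
```

Because the threshold applies to the estimated total, a prompt used by only a handful of people, like the 50 devices in the simulation, disappears into the noise.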

The same technique will be used to analyze Image Playground, Image Wand, Memories Creation, and Writing Tools. These systems rely on short prompts, so the analysis can be limited to simple prompt pattern matching.

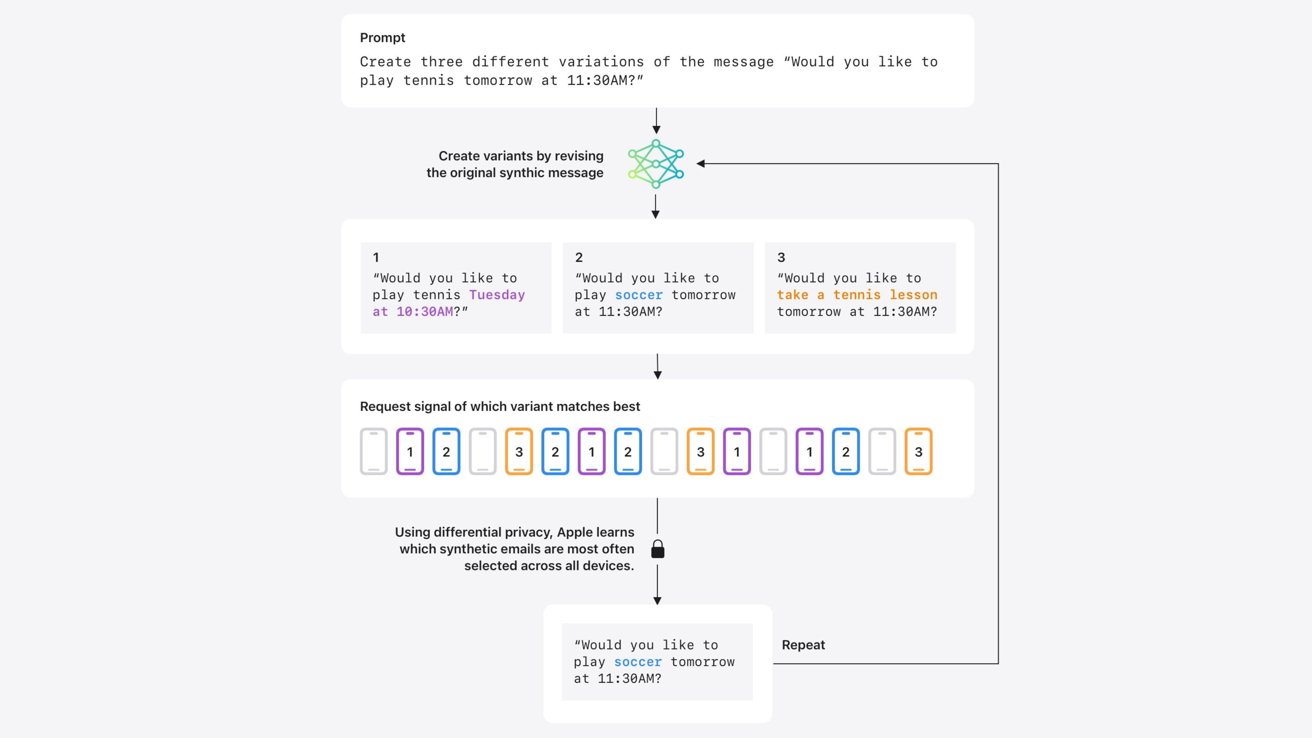

Apple wants to take these methods further by applying them to text generation. Since text generation for email and other systems involves much longer prompts, and likely more private user data, Apple took extra steps.

Apple is using recent research into generating synthetic data that can represent aggregate trends in real user data. Of course, this is done without removing a single bit of text from the user’s device.

Apple generates synthetic emails that could resemble real ones and converts them into embeddings. On the device, these are compared to embeddings computed from a limited sample of the user’s recent emails. The synthetic embeddings chosen as closest to those samples across many devices show which of Apple’s synthetic data is most representative of real human communication.
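The embedding comparison can be sketched in a few lines, assuming each device already holds embeddings for a small sample of its recent emails and for Apple’s synthetic emails. The device picks the closest synthetic embedding by cosine similarity and reports only that index, randomized with k-ary randomized response; the server then estimates how often each synthetic email was chosen. The toy 3-dimensional vectors, the similarity measure, the noise mechanism, and the function names are all assumptions for illustration; real embeddings would come from a language model and have far more dimensions.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def nearest_synthetic(user_embeddings, synthetic_embeddings):
    """Device side (hypothetical): index of the synthetic embedding most similar
    to any of the user's sampled email embeddings. The user embeddings stay here."""
    return max(
        range(len(synthetic_embeddings)),
        key=lambda j: max(cosine(u, synthetic_embeddings[j]) for u in user_embeddings),
    )


def randomize_index(index, k, epsilon, rng):
    """k-ary randomized response: keep the true index with probability
    e^eps / (e^eps + k - 1), otherwise report a uniformly chosen other index."""
    if rng.random() < math.exp(epsilon) / (math.exp(epsilon) + k - 1):
        return index
    other = rng.randrange(k - 1)
    return other if other < index else other + 1


def estimate_votes(reports, k, epsilon):
    """Server side: debiased estimate of how many devices picked each index."""
    n = len(reports)
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    q = 1 / (math.exp(epsilon) + k - 1)
    counts = [0] * k
    for r in reports:
        counts[r] += 1
    return [(c - q * n) / (p - q) for c in counts]


if __name__ == "__main__":
    rng = random.Random(3)
    # Hypothetical embeddings of three synthetic emails.
    synthetic = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    # Hypothetical devices, each with one sampled email embedding.
    devices = [[[0.9, 0.1, 0.0]]] * 6000 + [[[0.1, 0.95, 0.05]]] * 2000
    reports = [randomize_index(nearest_synthetic(d, synthetic), 3, 2.0, rng) for d in devices]
    print([round(v) for v in estimate_votes(reports, 3, 2.0)])
```

Only a single noisy index leaves each device, so the server learns which synthetic emails are popular overall without ever seeing a user’s email or its embedding.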

Once a pattern is found across devices, the synthetic data and the pattern matching can be refined to cover different topics. The process lets Apple train Apple Intelligence to produce better summaries and suggestions.

Again, the Differential Privacy method of Apple Intelligence training is opt-in and takes place on-device. User data never leaves the device, and noise is added to the collected poll results, so even though no user data is included, individual results can’t be tied back to a single identifier.

These Apple Intelligence training methods should sound very familiar

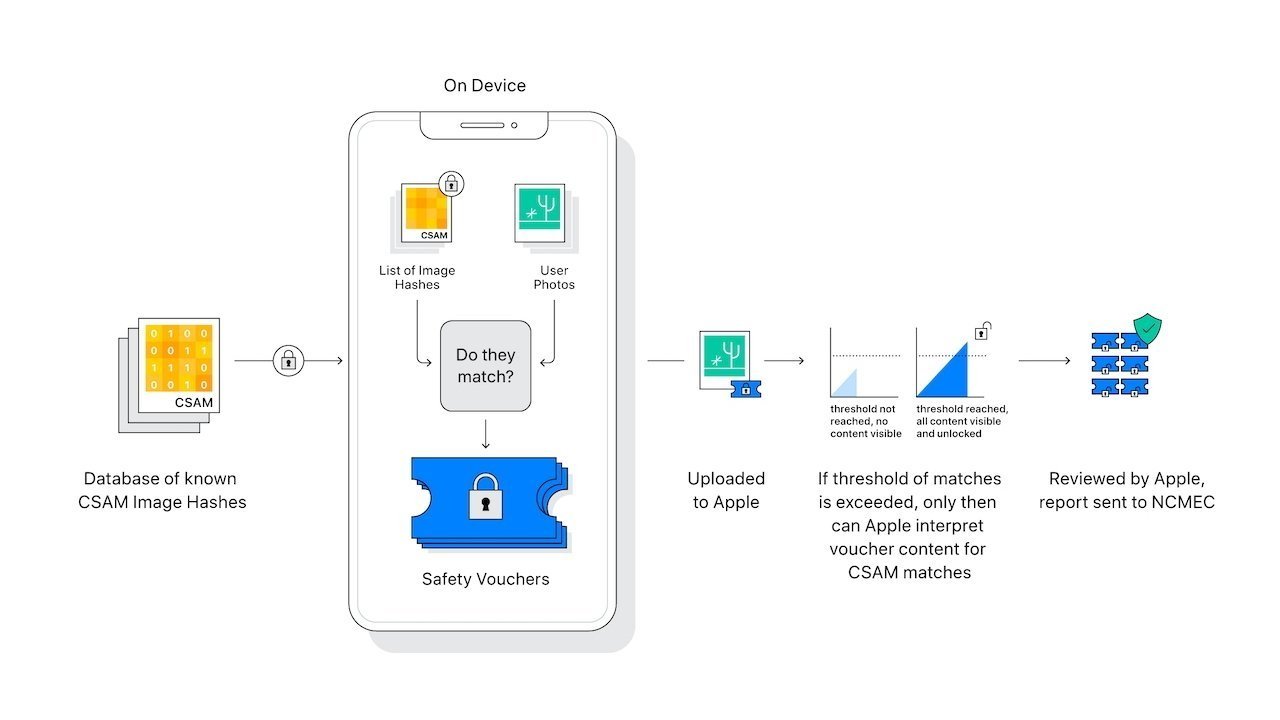

If Apple’s methods here ring any bells, it’s because they’re nearly identical to the methods the company planned to use for CSAM detection. That system would have converted user photos into hashes that were compared against a database of hashes of known CSAM.

Apple’s CSAM detection feature relied on hashing photos without violating privacy or breaking encryption

That analysis would have taken place either on-device for local photos or in iCloud photo storage. In either case, Apple could have performed the photo hash matching without ever looking at a user’s photo or removing a photo from the device or iCloud.

When enough potential CSAM hash matches occurred on a single device, the system would have sent the flagged images to human reviewers. If the reviewed images were confirmed to be CSAM, authorities would have been notified.
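The threshold logic alone can be sketched as below. This is a deliberately simplified, hypothetical illustration: SHA-256 stands in for the perceptual hash (NeuralHash) in Apple’s published design, which also used cryptographic techniques (private set intersection and threshold secret sharing) so that matches below the threshold weren’t revealed to anyone. The function names, sample data, and threshold value are made up.

```python
import hashlib


def image_hash(image_bytes):
    """Stand-in fingerprint (hypothetical). SHA-256 only matches byte-identical
    files; Apple's proposed design used a perceptual hash (NeuralHash) instead."""
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(images, known_hashes):
    """Number of images whose fingerprint appears in the known-hash database."""
    return sum(1 for image in images if image_hash(image) in known_hashes)


def needs_human_review(images, known_hashes, threshold):
    """Escalate only once the number of matches reaches the threshold;
    any count below it triggers nothing."""
    return count_matches(images, known_hashes) >= threshold


if __name__ == "__main__":
    known = {image_hash(b"known-image-a"), image_hash(b"known-image-b")}
    library = [b"known-image-a", b"holiday-photo", b"known-image-b"]
    print(count_matches(library, known), needs_human_review(library, known, threshold=3))
```

An exact cryptographic hash is used here only because it ships with Python; it misses any resized or re-encoded copy, which is why the real proposal relied on a perceptual hash.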

The CSAM detection system would have preserved user privacy, data encryption, and more, but it also introduced many new attack vectors that could be abused by authoritarian governments. For example, if such a system could be used to find CSAM, people worried that governments could compel Apple to use it to find certain kinds of speech or imagery.

Apple ultimately abandoned the CSAM detection system. Advocates have spoken out against Apple’s decision, arguing that the company is doing nothing to prevent the spread of such content.

Opting out of Apple Intelligence training

While the underlying technology is the same, it seems Apple has landed on a much less controversial use. Even so, some people would prefer not to offer their data, privacy-protected or not, to train Apple Intelligence.

Nothing has been implemented yet, so don’t worry: there’s still time to make sure you’re opted out. Apple says it will introduce the feature in iOS 18.5, and testing will begin in a future beta.

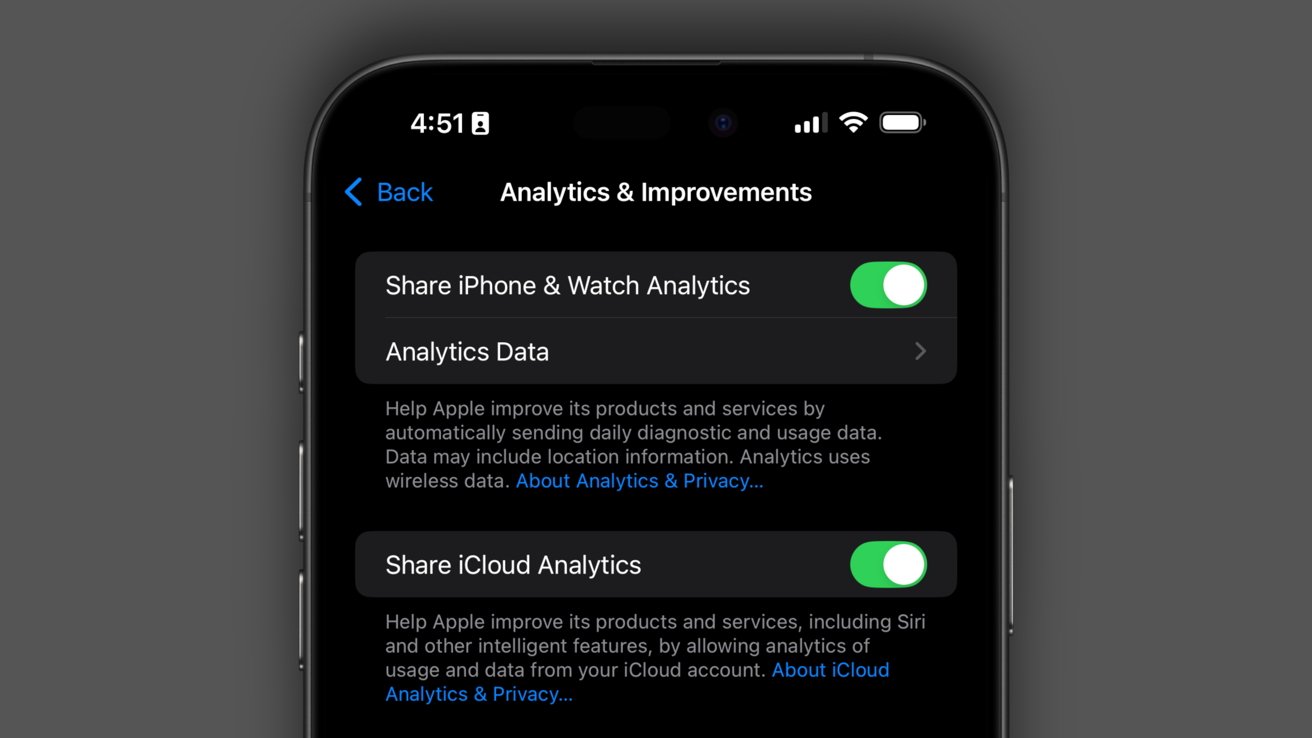

To check whether you’re opted in, open Settings, scroll down and select Privacy & Security, then select Analytics & Improvements. Turn off the “Share iPhone & Watch Analytics” toggle to opt out of AI training if you haven’t already.