Building an end-to-end AI or ML platform often requires multiple technology layers for storage, analytics, business intelligence (BI) tools, and ML models in order to analyze data and share learnings with business functions. The challenge is deploying consistent and effective governance controls across different components owned by different teams.

Unity Catalog is Databricks’ built-in, centralized metadata layer designed to manage data access, security, and lineage. It also serves as the foundation for search and discovery within the platform. Unity Catalog facilitates collaboration among teams by offering robust features like role-based access control (RBAC), audit trails, and data masking, ensuring sensitive information is protected without hindering productivity. It also supports the end-to-end lifecycle of ML models.

This guide provides a comprehensive overview and guidelines on how to use Unity Catalog for machine learning use cases and how to collaborate across teams by sharing compute resources.

This blog post walks you through the steps of the end-to-end machine learning lifecycle using the features of Unity Catalog on Databricks.

The example in this article uses a dataset containing records of the number of COVID-19 cases by date in the US, with additional geographical information. The goal is to forecast how many cases of the virus will occur over the next 7 days in the US.

Key Features for ML on Databricks

Databricks has introduced several features that improve support for ML with Unity Catalog.

Requirements

- The workspace must be enabled for Unity Catalog. Workspace admins can consult the documentation on how to enable workspaces for Unity Catalog.

- You must use Databricks Runtime 15.4 LTS ML or above.

- A workspace admin must enable the Compute: Dedicated group clusters preview using the Previews UI. See Manage Databricks Previews.

- If the workspace has Secure Egress Gateway (SEG) enabled, pypi.org must be added to the Allowed domains list. See Managing network policies for serverless egress control.

Set Up a Group

To enable collaboration, an account admin or a workspace admin needs to set up a group:

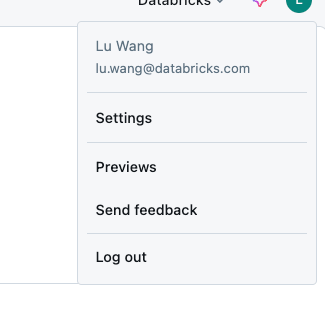

- Click your user icon in the upper right and click Settings.

- In the “Workspace Admin” section, click “Identity and access”, then click “Manage” in the Groups section.

- Click “Add group”.

- Click “Add new”.

- Enter the group name and click Add.

- Search for your newly created group and verify that the Source column says “Account”.

- Click your group’s name in the search results to open the group details.

- Click the “Members” tab and add the desired members to the group.

- Click the “Entitlements” tab and check both the “Workspace access” and “Databricks SQL access” entitlements.

- If you want to be able to manage the group from a non-admin account, you can grant “Group: Manager” access to that account in the “Permissions” tab.

- NOTE: A user account MUST be a member of the group in order to use group clusters; being a group manager is not sufficient.

Enable Dedicated Group Clusters

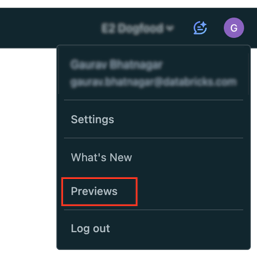

Dedicated group clusters are in Public Preview. To enable the feature, a workspace admin should turn it on using the Previews UI:

- Click your username in the top bar of the Databricks workspace.

- From the menu, select Previews.

- Use the toggle next to Compute: Dedicated group clusters to enable or disable the preview.

Create Group Compute

Dedicated access mode is the latest version of single user access mode. With dedicated access, a compute resource can be assigned to a single user or a group, and only the assigned user(s) can use it.

To create a Databricks Runtime for Machine Learning cluster assigned to the group:

- In your Databricks workspace, go to Compute and click Create compute.

- Check “Machine learning” in the Performance section to choose Databricks Runtime for Machine Learning. Choose “15.4 LTS” in Databricks Runtime. Select the desired instance type and number of workers.

- Expand the Advanced section at the bottom of the page.

- Under Access mode, click Manual and then select Dedicated (formerly: Single-user) from the dropdown menu.

- In the Single user or group field, select the group you want assigned to this resource.

- Configure any other compute settings as needed, then click Create.

After the cluster starts, all users in the group can share the same cluster. For more details, see the best practices for managing group clusters.
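The same compute can also be described as a request body for the Clusters API (`POST /api/2.0/clusters/create`). This is a sketch under assumptions, not a verified recipe: it assumes dedicated (formerly single-user) access is requested with `data_security_mode: "SINGLE_USER"` and that the group name is accepted in `single_user_name`. The runtime key is the assumed form for 15.4 LTS ML (valid keys can be listed with `GET /api/2.0/clusters/spark-versions`), and the cluster name, group name, and `i3.xlarge` node type are hypothetical.

```python
import json


def group_ml_cluster_spec(cluster_name: str, group_name: str,
                          node_type_id: str, num_workers: int) -> dict:
    """Build a Clusters API request body for an ML cluster dedicated to a group.

    Assumptions (verify against the Clusters API reference):
    - dedicated access mode is requested with data_security_mode="SINGLE_USER";
    - the group name is accepted in single_user_name.
    """
    return {
        "cluster_name": cluster_name,
        # Assumed runtime key for Databricks Runtime 15.4 LTS ML (CPU)
        "spark_version": "15.4.x-cpu-ml-scala2.12",
        # Cloud-specific instance type; the value used below is only an example
        "node_type_id": node_type_id,
        "num_workers": num_workers,
        # Dedicated (formerly single-user) access mode
        "data_security_mode": "SINGLE_USER",
        # Assumed: the group whose members share the cluster
        "single_user_name": group_name,
    }


spec = group_ml_cluster_spec("covid-forecasting", "ml-team", "i3.xlarge", 2)
print(json.dumps(spec, indent=2))
```

Keeping the spec in code makes it easy to recreate the same group cluster in another workspace, after checking the field names against the current API reference.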

Data Preprocessing via Delta Live Tables (DLT)

In this section, we will:

- Read the raw data and save it to a volume.

- Read the records from the ingestion table and use Delta Live Tables expectations to create a new table that contains cleansed data.

- Use the cleansed records as input to Delta Live Tables queries that create derived datasets.

To set up a DLT pipeline, you may need the following permissions:

- USE CATALOG and BROWSE on the parent catalog

- ALL PRIVILEGES, or USE SCHEMA, CREATE MATERIALIZED VIEW, and CREATE TABLE on the target schema

- ALL PRIVILEGES, or READ VOLUME and WRITE VOLUME on the target volume
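If an admin prefers SQL over the UI, the privileges above can be granted to the group with Unity Catalog `GRANT` statements. A minimal sketch that only builds the statement strings; the function name and the catalog, schema, volume, and group names are hypothetical, and each statement would be run with `spark.sql(...)` or in a SQL editor by a user allowed to manage those objects.

```python
def dlt_grant_statements(catalog: str, schema: str, volume: str,
                         principal: str) -> list:
    """Return GRANT statements for the DLT privileges listed above."""
    # Backticks quote the principal so names with hyphens or spaces work
    who = f"`{principal}`"
    return [
        f"GRANT USE CATALOG, BROWSE ON CATALOG {catalog} TO {who}",
        f"GRANT USE SCHEMA, CREATE MATERIALIZED VIEW, CREATE TABLE "
        f"ON SCHEMA {catalog}.{schema} TO {who}",
        f"GRANT READ VOLUME, WRITE VOLUME "
        f"ON VOLUME {catalog}.{schema}.{volume} TO {who}",
    ]


# Hypothetical names; substitute your own catalog, schema, volume, and group
for statement in dlt_grant_statements("main", "covid", "raw_files", "ml-team"):
    print(statement)
```

Granting to the group rather than to individual users means new members inherit the privileges as soon as they are added to the group.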

- Download the data to a volume: this example loads data from a Unity Catalog volume.
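A minimal sketch of this setup step, assuming it runs in a notebook on the group cluster: it builds idempotent `CREATE SCHEMA IF NOT EXISTS` and `CREATE VOLUME IF NOT EXISTS` statements and the `/Volumes/<catalog>/<schema>/<volume>/` path where the raw file is stored. The helper name is hypothetical. In the notebook, each statement would be passed to `spark.sql(...)`, and the raw COVID-19 file copied into the returned path, for example with `dbutils.fs.cp(source, volume_path + "covid.csv")`, where `source` and the file name are placeholders.

```python
def volume_setup(catalog: str, schema: str, volume: str):
    """Return idempotent DDL for the schema and volume, plus the volume's path."""
    ddl = [
        f"CREATE SCHEMA IF NOT EXISTS {catalog}.{schema}",
        f"CREATE VOLUME IF NOT EXISTS {catalog}.{schema}.{volume}",
    ]
    # Files in a Unity Catalog volume live under /Volumes/<catalog>/<schema>/<volume>/
    volume_path = f"/Volumes/{catalog}/{schema}/{volume}/"
    return ddl, volume_path


ddl, volume_path = volume_setup("<catalog-name>", "<schema-name>", "<volume-name>")
for statement in ddl:
    print(statement)  # in a notebook: spark.sql(statement)
print(volume_path)
```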

Replace `<catalog-name>`, `<schema-name>`, and `<volume-name>` with the catalog, schema, and volume names for a Unity Catalog volume. The provided code attempts to create the specified schema and volume if these objects don’t exist. You must have the appropriate privileges to create and write to objects in Unity Catalog. See Requirements.

- Create a pipeline. To configure a new pipeline, do the following:

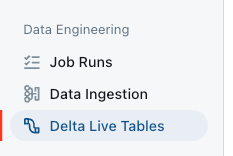

- In the sidebar, click Delta Live Tables in the Data Engineering section.

- Click Create pipeline.

- In Pipeline name, type a unique pipeline name.

- Select the Serverless checkbox.

- In Destination, to configure a Unity Catalog location where tables are published, select a Catalog and a Schema.

- In Advanced, click Add configuration and then define pipeline parameters for the catalog, schema, and volume to which you downloaded the data, using the following parameter names:

  - `my_catalog`

  - `my_schema`

  - `my_volume`

- Click Create.

The pipelines UI appears for the new pipeline. A source code notebook is automatically created and configured for the pipeline.
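The UI choices above correspond to fields in the pipeline’s JSON settings. A sketch of that mapping, assuming the destination schema goes in the `target` field; the pipeline name and notebook path are hypothetical. The keys under `configuration` are the parameters defined in Advanced, which pipeline code can read with `spark.conf.get("my_catalog")` and so on.

```python
import json


def pipeline_settings(name: str, catalog: str, schema: str, volume: str,
                      notebook_path: str) -> dict:
    """Mirror the pipeline UI choices as DLT pipeline settings."""
    return {
        "name": name,
        "serverless": True,      # the Serverless checkbox
        "catalog": catalog,      # Destination: catalog
        "target": schema,        # Destination: schema
        # Advanced > Add configuration; readable in code via spark.conf.get(key)
        "configuration": {
            "my_catalog": catalog,
            "my_schema": schema,
            "my_volume": volume,
        },
        # Hypothetical path of the auto-generated source notebook
        "libraries": [{"notebook": {"path": notebook_path}}],
        "continuous": False,     # triggered (not continuous) updates
    }


settings = pipeline_settings("covid-dlt", "<my_catalog>", "<my_schema>",
                             "<my_volume>", "/Users/someone@example.com/covid-dlt")
print(json.dumps(settings, indent=2))
```

Passing catalog, schema, and volume as configuration rather than hard-coding them lets the same notebook run against development and production locations.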


- Declare materialized views and streaming tables. You can use Databricks notebooks to interactively develop and validate source code for Delta Live Tables pipelines.

- Start a pipeline update by clicking the Start button at the top right of the notebook or in the DLT UI. The DLT tables are created in the catalog and schema configured for the pipeline, `<my_catalog>.<my_schema>`.
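The cleansing and derivation steps can be reasoned about outside a pipeline. The sketch below is a plain-Python stand-in, not DLT code: it mimics the drop-on-violation behavior of `@dlt.expect_or_drop` (rows failing a named constraint are dropped and the failures counted) and then sums `cases` per `date` to produce rows shaped like `covid_case_by_date`. The sample rows, the `state` column, and the two constraint names are made up for illustration. In the pipeline notebook, the same rule would be declared as `@dlt.expect_or_drop("valid_date", "date IS NOT NULL")` on a `@dlt.table` function, with the per-date aggregate declared as a materialized view.

```python
from collections import defaultdict
from datetime import date

# Made-up sample rows; the real pipeline ingests the raw file stored in the volume
raw_rows = [
    {"date": "2020-03-01", "state": "WA", "cases": 10},
    {"date": "2020-03-01", "state": "NY", "cases": 1},
    {"date": None, "state": "CA", "cases": 5},           # fails valid_date
    {"date": "2020-03-02", "state": "WA", "cases": -3},  # fails valid_cases
    {"date": "2020-03-02", "state": "NY", "cases": 4},
]

# Named constraints, analogous to @dlt.expect_or_drop(name, SQL condition)
expectations = {
    "valid_date": lambda row: row["date"] is not None,
    "valid_cases": lambda row: row["cases"] is not None and row["cases"] >= 0,
}


def cleanse(rows, rules):
    """Keep rows passing every rule; count failures per rule."""
    kept = []
    violations = {name: 0 for name in rules}
    for row in rows:
        failed = [name for name, check in rules.items() if not check(row)]
        for name in failed:
            violations[name] += 1
        if not failed:
            kept.append(row)
    return kept, violations


def cases_by_date(rows):
    """Derived dataset: total cases per date, sorted by date."""
    totals = defaultdict(int)
    for row in rows:
        totals[date.fromisoformat(row["date"])] += row["cases"]
    return sorted(totals.items())


cleansed, violations = cleanse(raw_rows, expectations)
covid_case_by_date = cases_by_date(cleansed)
print(violations)  # {'valid_date': 1, 'valid_cases': 1}
print(covid_case_by_date)
```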

Model Training on the DLT Materialized View

We will launch a serverless forecasting experiment on the materialized view generated by the DLT pipeline.

- Click Experiments in the sidebar, under the Machine Learning section.

- In the Forecasting tile, select Start training.

- Fill in the configuration form:

  - Select the materialized view as the Training data: `<my_catalog>.<my_schema>.covid_case_by_date`

  - Select `date` as the Time column.

  - Select Days as the Forecast frequency.

  - Enter 7 as the Horizon.

  - Select `cases` as the Target column in the Prediction section.

  - Select `<my_catalog>.<my_schema>` for Model registration.

- Click Start training to start the forecasting experiment.


After training completes, the prediction results are saved in the specified Delta table and the best model is registered to Unity Catalog.
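With Days as the forecast frequency and 7 as the horizon, the forecast covers the seven consecutive days after the last date in the training data. A small sketch of that arithmetic; the helper name and the sample last date are hypothetical and not taken from the dataset.

```python
from datetime import date, timedelta


def forecast_dates(last_observed: date, horizon: int = 7,
                   step: timedelta = timedelta(days=1)) -> list:
    """Return the dates a forecast covers: `horizon` steps after the last observation."""
    return [last_observed + step * i for i in range(1, horizon + 1)]


# Hypothetical last date in the training data
dates = forecast_dates(date(2023, 3, 23))
print(dates[0], dates[-1])  # 2023-03-24 2023-03-30
```

Changing the frequency changes the step, so a horizon of 7 with a weekly frequency would instead span seven weeks.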

From the experiment page, you can choose from the following next steps:

- Select View predictions to see the forecasting results table.

- Select Batch inference notebook to open an auto-generated notebook for batch inference using the best model.

- Select Create serving endpoint to deploy the best model to a Model Serving endpoint.
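Models registered to Unity Catalog use three-level names (`<catalog>.<schema>.<model>`), and MLflow addresses them with URIs of the form `models:/<catalog>.<schema>.<model>/<version>` or `models:/<catalog>.<schema>.<model>@<alias>`. A minimal sketch that builds such a URI; the helper and the model name `covid_forecast` are hypothetical, so use the name shown for the best model on the experiment page. In a notebook, calling `mlflow.set_registry_uri("databricks-uc")` and then `mlflow.pyfunc.load_model(uri)` would load the model for batch inference.

```python
from typing import Optional


def uc_model_uri(catalog: str, schema: str, model: str,
                 version: Optional[int] = None, alias: Optional[str] = None) -> str:
    """Build an MLflow URI for a model registered in Unity Catalog."""
    if (version is None) == (alias is None):
        raise ValueError("pass exactly one of version or alias")
    full_name = f"{catalog}.{schema}.{model}"  # three-level Unity Catalog name
    if version is not None:
        return f"models:/{full_name}/{version}"
    return f"models:/{full_name}@{alias}"


# "covid_forecast" is a hypothetical model name
uri = uc_model_uri("<my_catalog>", "<my_schema>", "covid_forecast", version=1)
print(uri)  # models:/<my_catalog>.<my_schema>.covid_forecast/1
```

Pinning a version is reproducible; an alias lets the team repoint batch jobs to a newer model without editing code.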

Conclusion

In this blog, we explored the end-to-end process of setting up and training forecasting models on Databricks, from data preprocessing to model training. By leveraging Unity Catalog, group clusters, Delta Live Tables, and AutoML forecasting, we were able to streamline model development and simplify collaboration between teams.