Large language models are improving rapidly; to date, this improvement has largely been measured via academic benchmarks. These benchmarks, such as MMLU and BIG-Bench, have been adopted by researchers in an attempt to compare models across various dimensions of capability related to general intelligence. However, enterprises care about the quality of AI systems in specific domains, which we call domain intelligence. Domain intelligence involves data and tasks that deal with the inner workings of business processes: details, jargon, history, internal practices and workflows, and the like.

Therefore, enterprise practitioners deploying AI in real-world settings need evaluations that directly measure domain intelligence. Without domain-specific evaluations, organizations may overlook models that would excel at their specialized tasks in favor of those that score well on possibly misaligned general benchmarks. We developed the Domain Intelligence Benchmark Suite (DIBS) to help Databricks customers build better AI systems for their specific use cases, and to advance our research on models that can leverage domain intelligence. DIBS measures performance on datasets curated to reflect specialized domain knowledge and common enterprise use cases that traditional academic benchmarks often overlook.

In the remainder of this blog post, we will discuss how current models perform on DIBS in comparison to similar academic benchmarks. Our key takeaways include:

- Models’ rankings across academic benchmarks do not necessarily map to their rankings across industry tasks. We find discrepancies in performance between academic and enterprise rankings, emphasizing the need for domain-specific testing.

- There is room for improvement in core capabilities. Some enterprise needs like structured data extraction show clear paths for improvement, while more complex domain-specific tasks require more sophisticated reasoning capabilities.

- Developers should choose models based on specific needs. There is no single best model or paradigm. From open-source options to retrieval strategies, different solutions excel in different scenarios.

This underscores the need for developers to test models on their actual use cases and avoid limiting themselves to any single model option.
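One way to check the first takeaway on your own results is to compare how models rank on an academic benchmark against how they rank on an enterprise one. The sketch below computes a Spearman rank correlation in plain Python. It is an illustration only, not the analysis behind this post: the function names and the model scores are hypothetical placeholders, and the simple formula used assumes there are no tied scores.

```python
def ranks(scores):
    """Map each model to its rank (1 = best). Ties are broken by name for determinism."""
    ordered = sorted(scores, key=lambda model: (-scores[model], model))
    return {model: position + 1 for position, model in enumerate(ordered)}


def spearman(scores_a, scores_b):
    """Spearman rank correlation between two score tables over the same models.

    Uses the shortcut formula 1 - 6 * sum(d^2) / (n * (n^2 - 1)),
    which is exact only when there are no ties.
    """
    if set(scores_a) != set(scores_b):
        raise ValueError("both tables must cover the same models")
    rank_a, rank_b = ranks(scores_a), ranks(scores_b)
    n = len(rank_a)
    if n < 2:
        raise ValueError("need at least two models")
    squared_diffs = sum((rank_a[m] - rank_b[m]) ** 2 for m in rank_a)
    return 1 - 6 * squared_diffs / (n * (n * n - 1))


# Hypothetical scores, made up for illustration; not taken from DIBS.
academic = {"model_a": 0.90, "model_b": 0.88, "model_c": 0.85, "model_d": 0.60}
enterprise = {"model_a": 0.55, "model_b": 0.62, "model_c": 0.58, "model_d": 0.40}
print(spearman(academic, enterprise))
```

A value near 1 means the two benchmarks order the models the same way; lower values indicate that the academic ranking is a weaker guide to the enterprise ranking.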

Introducing our Domain Intelligence Benchmark Suite (DIBS)

DIBS focuses on three of the most common enterprise use cases surfaced by Databricks customers:

- Data Extraction: Text to JSON
  - Converting unstructured text (like emails, reports, or contracts) into structured JSON formats that can be easily processed downstream.

- Tool Use: Function Calling
  - Enabling LLMs to interact with external tools and APIs by generating properly formatted function calls.

- Agent Workflows: Retrieval Augmented Generation (RAG)
  - Enhancing LLM responses by first retrieving relevant information from a company’s knowledge base or documents.

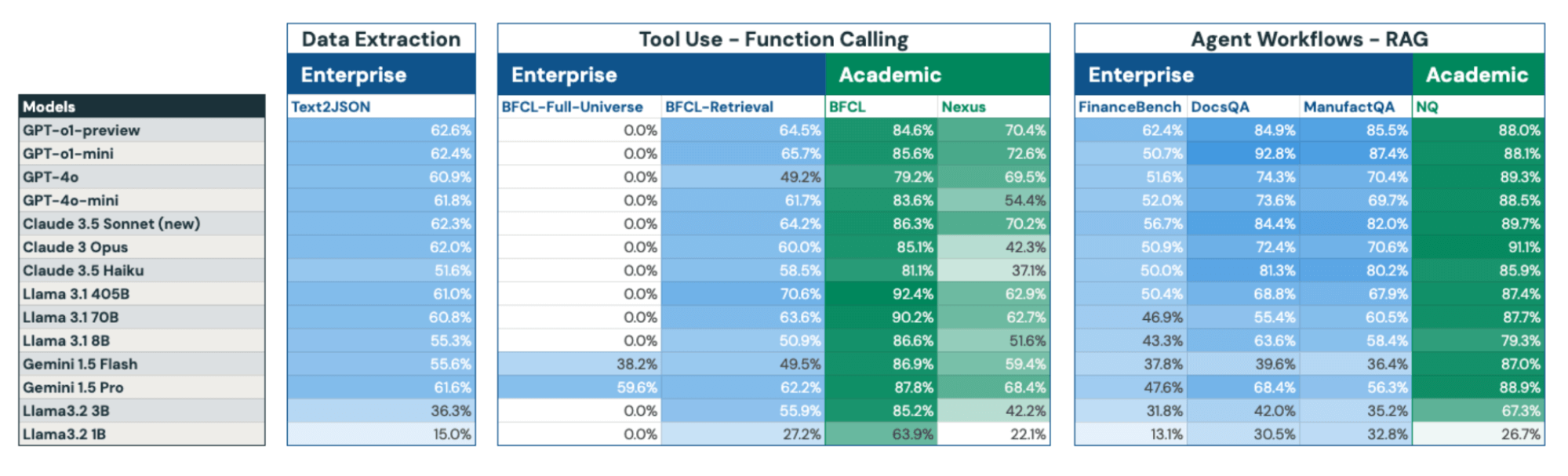

We evaluated fourteen popular models across DIBS and three academic benchmarks, spanning enterprise domains in finance, software, and manufacturing. We are expanding our evaluation scope to include legal, data analysis, and other verticals, and welcome collaboration opportunities to assess additional industry domains and tasks.

In Table 1, we briefly provide an overview of each task, the benchmark we have been using internally, and academic counterparts if available. Later, in Benchmark Overviews, we discuss these in more detail.

| Task Category | Dataset Name | Enterprise or Academic | Domain | Task Description |
|---|---|---|---|---|
| Data Extraction: Text to JSON | Text2JSON | Enterprise | Misc. Knowledge | Given a prompt containing a schema and a few Wikipedia-style paragraphs, extract relevant information into the schema. |
| Tool Use: Function Calling | BFCL-Full Universe | Enterprise | Function calling | Modification of BFCL where, for each query, the model has to select the correct function from the full set of functions present in the BFCL universe. |
| Tool Use: Function Calling | BFCL-Retrieval | Enterprise | Function calling | Modification of BFCL where, for each query, we use text-embedding-3-large to select 10 candidate functions from the full set of functions present in the BFCL universe. The task then becomes to choose the correct function from that set. |
| Tool Use: Function Calling | Nexus | Academic | APIs | Single-turn function calling evaluation across 7 APIs of varying difficulty. |
| Tool Use: Function Calling | Berkeley Function Calling Leaderboard (BFCL) | Academic | Function calling | See original BFCL blog. |
| Agent Workflows: RAG | DocsQA | Enterprise | Software – Databricks Documentation with Code | Answer real user questions based on public Databricks documentation web pages. |
| Agent Workflows: RAG | ManufactQA | Enterprise | Manufacturing – Semiconductors – Customer FAQs | Given a technical customer query about debugging or product issues, retrieve the most relevant page from a corpus of hundreds of product manuals and datasheets, and construct an answer like a customer support agent. |
| Agent Workflows: RAG | FinanceBench | Enterprise | Finance – SEC Filings | Perform financial analysis on SEC filings, from Patronus AI. |
| Agent Workflows: RAG | Natural Questions | Academic | Wikipedia | Extractive QA over Wikipedia articles. |

Table 1. We evaluate the set of models across 9 tasks spanning 3 enterprise task categories: data extraction, tool use, and agent workflows. The three categories we discuss were selected due to their relative frequency in enterprise workloads. Beyond these categories, we are continuing to expand to a broader set of evaluation tasks in collaboration with our customers.

What We Learned Evaluating LLMs on Enterprise Tasks

Academic Benchmarks Obscure Enterprise Performance Gaps

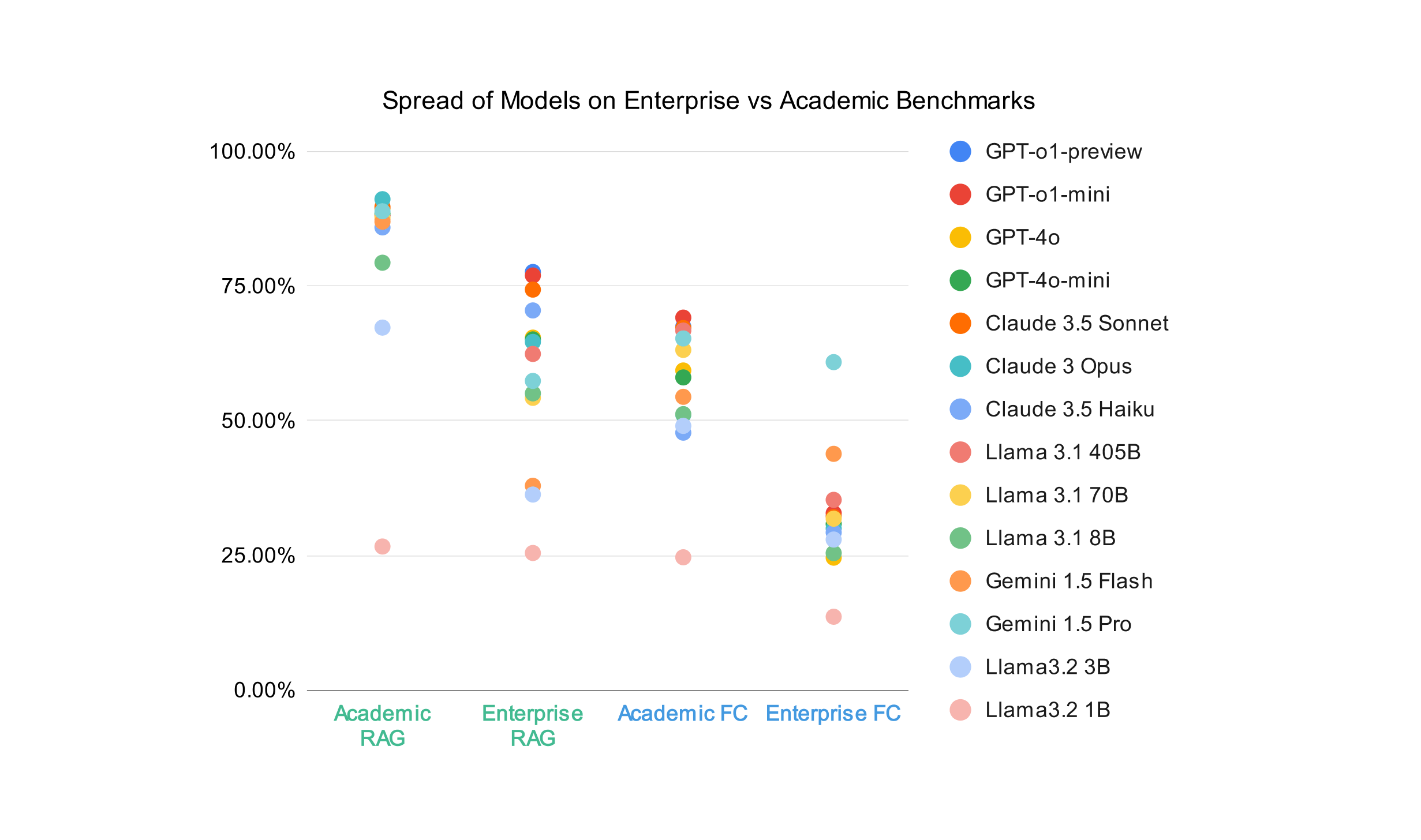

In Figure 1, we show a comparison of RAG and function calling (FC) capabilities between the enterprise and academic benchmarks, with average scores plotted for all fourteen models. While the academic RAG average has a larger range (91.14% at the top, and 26.65% at the bottom), we can see that the overwhelming majority of models score between 85% and 90%. The enterprise RAG set of scores has a narrower range because it has a lower ceiling. This reveals that there is more room to improve in RAG settings than a benchmark like NQ might suggest.

Figure 1 visually reveals wider performance gaps in enterprise RAG scores, shown by the more dispersed distribution of data points, in contrast to the tighter clustering seen in the academic RAG column. This is most likely because academic benchmarks are based on general domains like Wikipedia, are public, and are several years old; there is therefore a high probability that retrieval models and LLM providers have already trained on the data. For a customer with private, domain-specific data, though, the capabilities of the retrieval and LLM models are more accurately measured with a benchmark tailored to their data and use case. A similar effect can be observed, though it is less pronounced, in the function calling setting.

Structured Extraction (Text2JSON) presents an achievable goal

At a high level, we see that most models have significant room for improvement in prompt-based Text2JSON; we did not evaluate model performance when using structured generation.

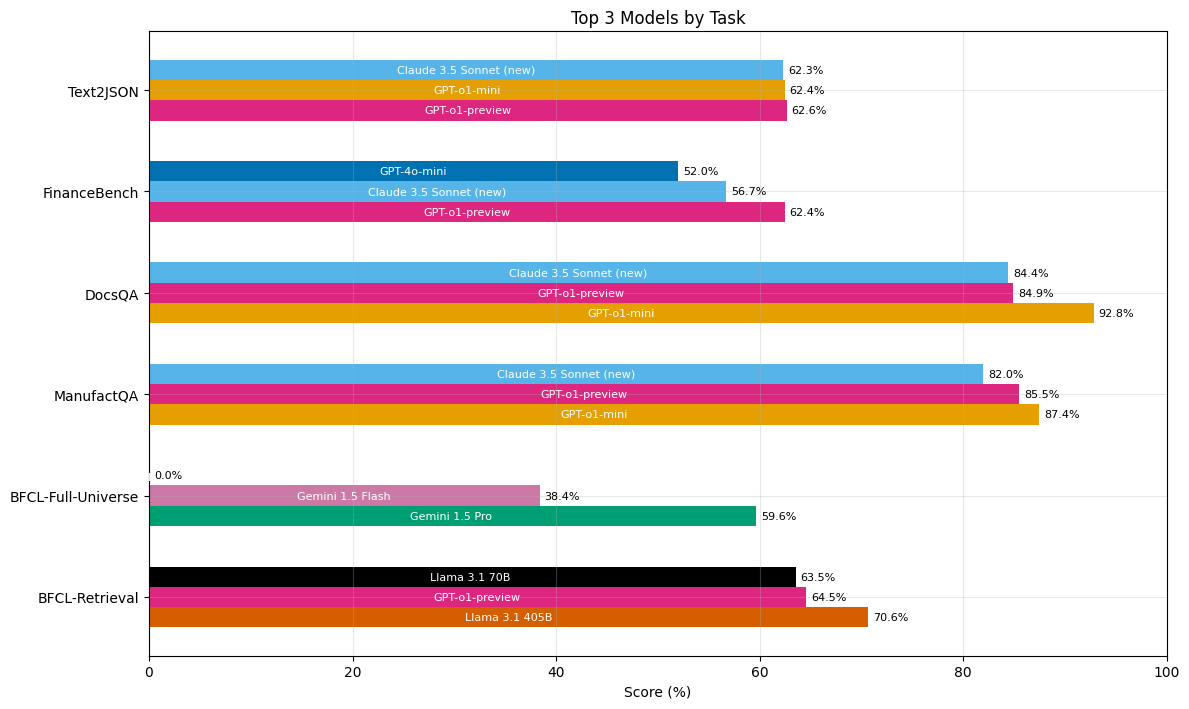

Figure 2 shows that on this task, there are three distinct tiers of model performance:

- Most closed-source models, as well as Llama 3.1 405B and 70B, score around just 60%.

- Claude 3.5 Haiku, Llama 3.1 8B, and Gemini 1.5 Flash bring up the middle of the pack with scores between 50% and 55%.

- The smaller Llama 3.2 models are much worse performers.

Taken together, this suggests that prompt-based Text2JSON may not be sufficient for production use off-the-shelf, even from leading model providers. While structured generation options are available, they may impose restrictions on viable JSON schemas and be subject to different data usage stipulations. Fortunately, we have had success fine-tuning models to improve at this capability.

Other tasks may require more sophisticated capabilities

We also found FinanceBench and Function Calling with Retrieval to be challenging tasks for most models. This is likely because the former requires a model to be proficient with numerical complexity, and the latter requires an ability to ignore distractor information.

No Single Model Dominates all Tasks

Our evaluation results do not support the claim that any one model is strictly superior to the rest. Figure 3 demonstrates that the most consistently high-performing models were o1-preview, Claude Sonnet 3.5 (New), and o1-mini, achieving top scores in 5, 4, and 3 out of the 6 enterprise benchmark tasks respectively. These same three models were overall the best performers for data extraction and RAG tasks. However, only Gemini models currently have the context length necessary to perform the function calling task over all possible functions. Meanwhile, Llama 3.1 405B outperformed all other models on the function calling as retrieval task.

Small models were surprisingly strong performers: they mostly performed similarly to their larger counterparts, and sometimes significantly outperformed them. The only notable degradation was between o1-preview and o1-mini on the FinanceBench task. This is interesting given that, as we can see in Figure 3, o1-mini outperforms o1-preview on the other two enterprise RAG tasks. This underscores the task-dependent nature of model selection.

Open Source vs. Closed Source Models

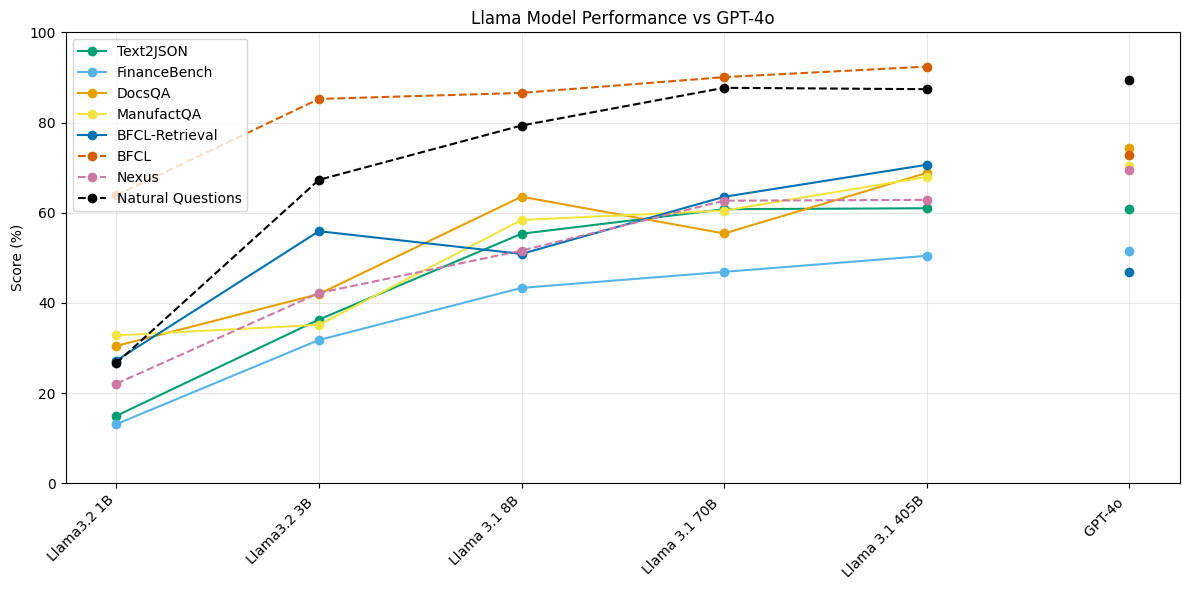

We evaluated five different Llama models, each at a different size. In Figure 4, we plot the scores of each of these models on each of our benchmarks against GPT-4o’s scores for comparison. We find that Llama 3.1 405B and Llama 3.1 70B perform extremely competitively on Text2JSON and Function Calling tasks as compared to closed-source models, surpassing or performing similarly to GPT-4o. However, the gap between these model classes is more pronounced on RAG tasks.

Additionally, we note that the Llama 3.1 and 3.2 series of models show diminishing returns regarding model scale and performance. The performance gap between Llama 3.1 405B and Llama 3.1 70B is negligible on the Text2JSON task, and, on every other task, significantly smaller than the gap to Llama 3.1 8B. However, we observe that Llama 3.2 3B outperforms Llama 3.1 8B on the function calling with retrieval task (BFCL-Retrieval in Figure 4).

This implies two things. First, open-source models are off-the-shelf viable for at least two high-frequency enterprise use cases. Second, there is room to improve these models’ ability to leverage retrieved information.

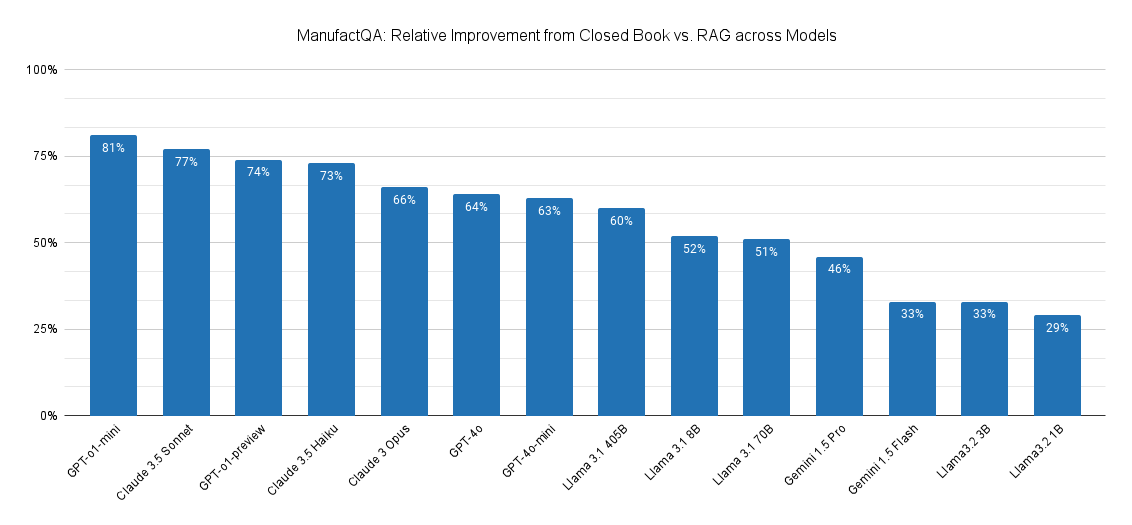

To further investigate this, we compared how much better each model would perform on ManufactQA under a closed book setting vs. a default RAG setting. In a closed book setting, models are asked to answer the queries without any given context, which measures a model’s pretrained knowledge. In the default RAG setting, the LLM is provided with the top 10 documents retrieved by OpenAI’s text-embedding-3-large, which had a recall@10 of 81.97%. This represents the most realistic configuration in a RAG system. We then calculated the relative error reduction between the RAG and closed book settings.
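The two metrics in this comparison can be sketched in a few lines of Python. The post does not spell out the exact formulas, so the code below assumes the common definitions: relative error reduction is the fraction of closed book errors eliminated by adding retrieval, and recall@k is the fraction of queries whose gold document appears among the top k retrieved documents (assuming one gold document per query). The function names and sample values are hypothetical.

```python
def relative_error_reduction(closed_book_accuracy, rag_accuracy):
    """Fraction of closed book errors removed by adding retrieved context.

    Assumed definition: (closed_book_error - rag_error) / closed_book_error,
    with error = 1 - accuracy and accuracies expressed in [0, 1].
    """
    closed_book_error = 1.0 - closed_book_accuracy
    rag_error = 1.0 - rag_accuracy
    if closed_book_error == 0:
        raise ValueError("closed book error is zero; the reduction is undefined")
    return (closed_book_error - rag_error) / closed_book_error


def recall_at_k(retrieved_per_query, gold_per_query, k=10):
    """Share of queries whose gold document id is in the top-k retrieved ids."""
    if not gold_per_query:
        raise ValueError("need at least one query")
    hits = sum(
        1
        for retrieved, gold in zip(retrieved_per_query, gold_per_query)
        if gold in retrieved[:k]
    )
    return hits / len(gold_per_query)


# Hypothetical numbers for illustration only.
print(relative_error_reduction(closed_book_accuracy=0.5, rag_accuracy=0.75))
print(recall_at_k([["doc1", "doc7"], ["doc3", "doc4"]], ["doc7", "doc9"], k=2))
```

Under this definition, a model that moves from 50% to 75% accuracy has removed half of its closed book errors. Note that retrieval recall bounds what RAG can contribute: when the gold document is missing from the context, the model must fall back on pretrained knowledge.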

Based on Figure 5, we observe that GPT-o1-mini (surprisingly!) and Claude-3.5 Sonnet are able to leverage retrieved context the most, followed by GPT-o1-preview and Claude 3.5 Haiku. The open source Llama models and Gemini models all trail behind, suggesting that these models have more room to improve in leveraging domain-specific context for RAG.

For function calling at scale, high quality retrieval may be more valuable than larger context windows.

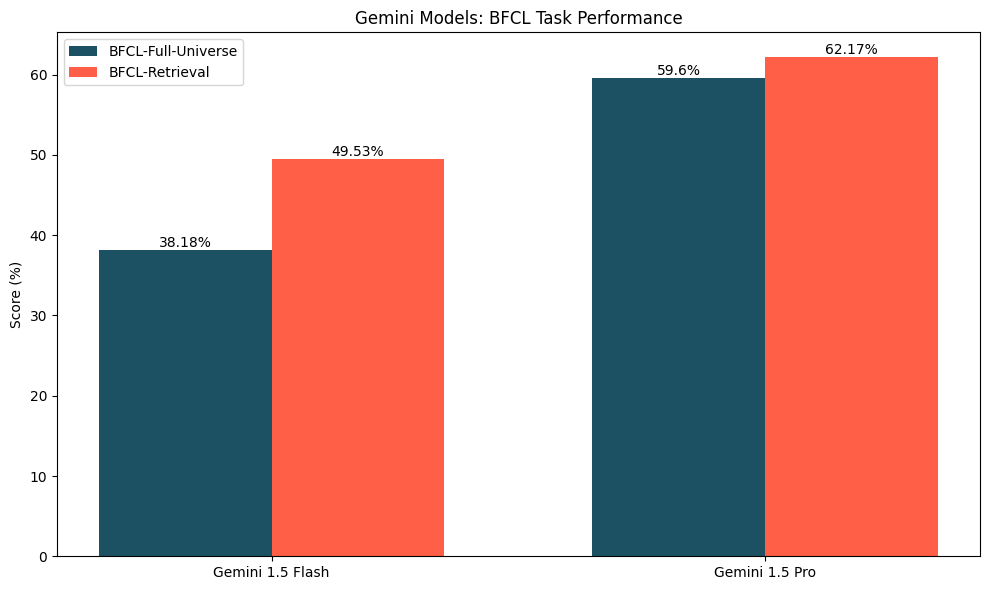

Our function calling evaluations show something interesting: just because a model can fit an entire set of functions into its context window does not mean that it should. The only models capable of doing this currently are Gemini 1.5 Flash and Gemini 1.5 Pro; as Figure 6 displays, these models perform better on the function calling with retrieval variant, where a retriever selects a subset of the full set of functions relevant to the query. The improvement in performance was more remarkable for Gemini 1.5 Flash (~11% improvement) than for Gemini 1.5 Pro (~2.5%). This improvement likely stems from the fact that a well-tuned retriever can increase the likelihood that the correct function is in the context while vastly reducing the number of distractor functions present. Additionally, we have previously seen that models may struggle with long-context tasks for a variety of reasons.

Benchmark Overviews

Having outlined DIBS’s structure and key findings, we present a comprehensive summary of fourteen open and closed-source models’ performance across our enterprise and academic benchmarks in Figure 7. The remainder of this section describes each benchmark in detail.

Data Extraction: Text to JSON

In today’s data-driven landscape, the ability to transform vast amounts of unstructured data into actionable information has become increasingly valuable. A key challenge many enterprises face is building unstructured-data-to-structured-data pipelines, either as standalone pipelines or as part of a larger system.

One common variant we have seen in the field is converting unstructured text, often a large corpus of documents, to JSON. While this task shares similarities with traditional entity extraction and named entity recognition, it goes further, often requiring a sophisticated blend of open-ended extraction, summarization, and synthesis capabilities.

No open-source academic benchmark sufficiently captures this complexity; we therefore procured human-written examples and created a custom Text2JSON benchmark. The examples we procured involve extracting and summarizing information from passages into a specified JSON schema. We also evaluate multi-turn capabilities, e.g. editing existing JSON outputs to incorporate additional fields and information. To ensure our benchmark reflects actual business needs and provides a relevant assessment of extraction capabilities, we used the same evaluation techniques as our customers.
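To make the task concrete, here is a minimal sketch of a structural check on a model’s Text2JSON output: does it parse as JSON, and does it contain exactly the fields the schema asks for, with the expected types? This is not the scoring used in the benchmark, which is not described in detail here. The schema format (field name mapped to a Python type), the function name, and the sample output are all hypothetical.

```python
import json


def check_extraction(raw_output, schema):
    """Return a list of structural problems in a model's JSON output.

    `schema` is a hypothetical, simplified format: {field_name: expected_type}.
    An empty list means the output parsed and matched the schema's shape;
    it says nothing about whether the extracted values are correct.
    """
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError as error:
        return [f"invalid JSON: {error.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not an object"]

    problems = []
    for field, expected_type in schema.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        elif not isinstance(data[field], expected_type):
            problems.append(f"wrong type for field: {field}")
    for field in data:
        if field not in schema:
            problems.append(f"unexpected field: {field}")
    return problems


# Hypothetical schema and model output, for illustration only.
schema = {"name": str, "founded": int}
print(check_extraction('{"name": "Acme", "founded": 1999}', schema))
print(check_extraction('{"name": "Acme"}', schema))
```

A check like this only catches format failures. Judging whether the extracted and summarized values are faithful to the source passage requires a separate correctness evaluation.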

Tool Use: Function Calling

Tool use capabilities enable LLMs to act as part of a larger compound AI system. We have seen sustained enterprise interest in function calling as a tool, and we previously wrote about how to effectively evaluate function calling capabilities.

Recently, organizations have taken to tool calling at a much larger scale. While academic evaluations typically test models with small function sets (often ten or fewer options), real-world applications frequently involve hundreds or thousands of available functions. In practice, this means enterprise function calling is similar to a needle-in-a-haystack test, with many distractor functions present during any given query.

To better mirror these enterprise scenarios, we have adapted the established BFCL academic benchmark to evaluate both function calling capabilities and the role of retrieval at scale. In its original version, the BFCL benchmark requires a model to choose one or fewer functions from a predefined set of four functions. We built on top of our previous modification of the benchmark to create two variants: one that requires the model to pick from the full set of functions that exist in BFCL for each query, and one that leverages a retriever to identify the ten functions that are most likely to be relevant.
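The retrieval variant can be pictured as a top-k nearest-neighbor search over function descriptions. The sketch below ranks candidate functions by cosine similarity to a query embedding and keeps the best k. It is a simplified illustration under stated assumptions: the embeddings are tiny hand-written vectors rather than text-embedding-3-large outputs, and the function names and vectors are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def top_k_functions(query_vector, function_vectors, k=10):
    """Return the names of the k functions most similar to the query, best first."""
    ranked = sorted(
        function_vectors.items(),
        key=lambda item: cosine(query_vector, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


# Hypothetical 2-dimensional embeddings; a real system would embed each
# function's name and description with an embedding model.
function_vectors = {
    "get_weather": [1.0, 0.0],
    "send_email": [0.0, 1.0],
    "get_forecast": [0.9, 0.1],
}
print(top_k_functions([1.0, 0.0], function_vectors, k=2))
```

Only the shortlisted functions are then placed in the model’s prompt, which cuts the number of distractors at the cost of occasionally dropping the correct function when the retriever misses it.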

Agent Workflows: Retrieval-Augmented Generation

RAG makes it possible for LLMs to interact with proprietary documents, augmenting existing LLMs with domain intelligence. In our experience, RAG is one of the most popular ways to customize LLMs in practice. RAG systems are also critical for enterprise agents, because any such agent must learn to operate within the context of the particular organization in which it is being deployed.

While the differences between industry and academic datasets are nuanced, their implications for RAG system design are substantial. Design choices that appear optimal based on academic benchmarks may prove suboptimal when applied to real-world industry data. This means that architects of industrial RAG systems must carefully validate their design choices against their specific use case, rather than relying solely on academic performance metrics.

Natural Questions remains a popular academic benchmark even as others, such as HotpotQA, have fallen out of favor. Both of these datasets deal with Wikipedia-based question answering. In practice, LLMs have indexed much of this information already. For more realistic enterprise settings, we use FinanceBench and DocsQA, as discussed in our earlier explorations on long context RAG, as well as ManufactQA, a synthetic RAG dataset simulating technical customer support interactions with product manuals, designed for manufacturing companies’ use cases.

Conclusion

To determine whether academic benchmarks could sufficiently inform tasks relating to domain intelligence, we evaluated a total of fourteen models across nine tasks. We developed a domain intelligence benchmark suite comprising six enterprise benchmarks that represent data extraction (text to JSON), tool use (function calling), and agentic workflows (RAG). We selected models to evaluate based on customer interest in using them for their AI/ML needs; we additionally evaluated the Llama 3.2 models for more datapoints on the effects of model size.

Our findings show that relying on academic benchmarks to make decisions about enterprise tasks may be insufficient. These benchmarks are overly saturated, which hides true model capabilities, and somewhat misaligned with enterprise needs. Furthermore, the field of models is muddied: there are several models that are generally strong performers, and models that are unexpectedly capable at specific tasks. Finally, academic benchmark performance may lead one to believe that models are sufficiently capable; in reality, there may still be room for improvement towards being production-workload ready.

At Databricks, we are continuing to help our customers by investing resources into more comprehensive enterprise benchmarking systems, and towards developing sophisticated approaches to domain expertise. As part of this, we are actively working with companies to ensure we capture a broad spectrum of enterprise-relevant needs, and welcome collaborations. If you are a company looking to create domain-specific agentic evaluations, please take a look at our Agent Evaluation Framework. If you are a researcher interested in these efforts, consider applying to work with us.